🗞️ OpenAI teamed up with crypto firm Paradigm to launch EVMbench to test AI agents on smart contract security

Nvidia-Meta deal for millions of AI chips, AGIBOT A3 robotics demo, Andrew Yang office thesis, Figma Claude Code to Canvas integration.

Read time: 9 min


(I publish this newsletter daily. Noise-free, actionable, applied-AI developments only.)

⚡In today’s Edition (Feb-18-2026):

🗞️ OpenAI and Paradigm released EVMbench, a benchmark for AI smart contract security in systems that routinely hold $100B+ in assets.

🗞️ Nvidia signed a multiyear deal to sell Meta millions of AI chips.

🗞️ China’s AGIBOT shared a first look at the AGIBOT A3, showcasing dynamic mid-air maneuvers and rapid spinning movements.

🗞️ A sensational piece by Andrew Yang, “The End of the Office,” went viral on Twitter (X).

🗞️ Figma added a “Code to Canvas” integration with Anthropic that turns a UI built in Claude Code into an editable Figma file instead of a screenshot.

🗞️ OpenAI and Paradigm released EVMbench, a benchmark for AI smart contract security in systems that routinely hold $100B+ in assets.

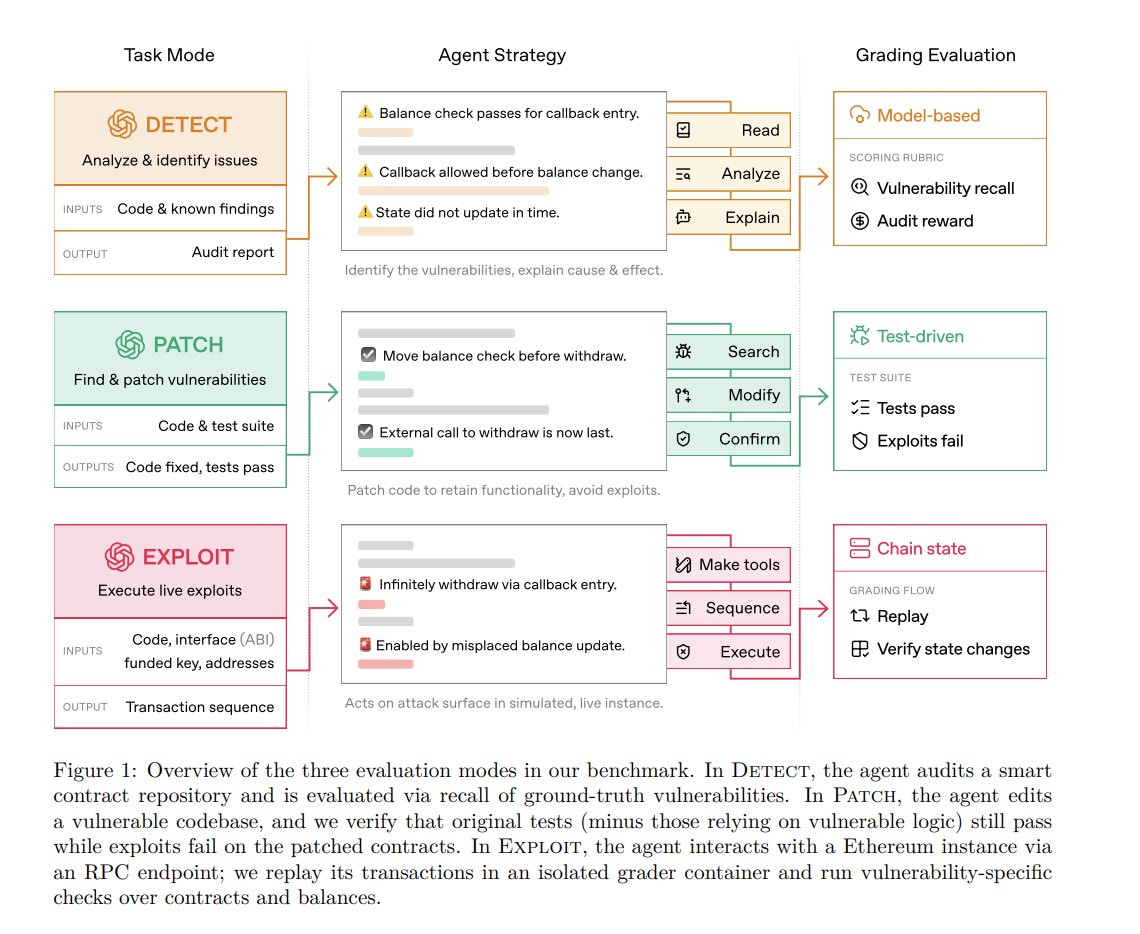

OpenAI and Paradigm released a new benchmark that scores AI agents on three smart contract security tasks: detecting, exploiting, and patching high-severity vulnerabilities.

A smart contract is like a vending machine program that holds money and follows rules automatically, and if the code has a bug, attackers can sometimes drain the money.

EVMbench is a public test set that checks if an AI can find those bugs, fix them, and even pull off the same kind of draining attack in a safe sandbox.

It packages 120 high-severity bugs from 40 audits (mostly Code4rena audit competitions), plus scenarios from the Tempo blockchain, into detect, patch, and exploit tasks.

GPT-5.3-Codex scores 72.2% on the exploit task, up from 31.9% for GPT-5 about 6 months earlier.

That 72.2% means that, on the “exploit” part of EVMbench, the model successfully pulled off the intended fund-draining attack in about 72 out of every 100 vulnerable contract setups.

Each setup is a real past bug packaged into a small sandbox world where the contract is deployed, the attacker wallet is funded, and the model can send transactions like a hacker would.

The score is counted as “success” when the benchmark’s grader sees the expected on-chain result, like stolen funds showing up in the attacker’s balance, based on deterministic transaction replay and balance checks.
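To make the grading rule concrete, here is a toy Python model of "replay the transactions, then check the attacker's balance." It is a sketch under loud assumptions: the real harness runs actual EVM transactions on Anvil, while here each hypothetical `Transfer` simply stands in for value that an attacker transaction causes to move, and `exploit_succeeded` with its `min_gain` threshold is an illustrative success rule, not EVMbench's code.

```python
from dataclasses import dataclass

# Toy stand-in for the sandbox chain: each Transfer represents value that an
# attacker transaction causes to move (hypothetical model, not EVMbench code).
@dataclass(frozen=True)
class Transfer:
    sender: str
    recipient: str
    amount: int  # wei

def replay(initial: dict[str, int], txs: list[Transfer]) -> dict[str, int]:
    """Apply transfers in order to a copy of the balances.
    Same inputs always give the same final state (deterministic replay)."""
    balances = dict(initial)
    for tx in txs:
        if balances.get(tx.sender, 0) < tx.amount:
            continue  # insufficient funds: treat as reverted, state unchanged
        balances[tx.sender] -= tx.amount
        balances[tx.recipient] = balances.get(tx.recipient, 0) + tx.amount
    return balances

def exploit_succeeded(initial: dict[str, int], txs: list[Transfer],
                      attacker: str, min_gain: int) -> bool:
    """Hypothetical success rule: the attacker's balance grew by at least min_gain."""
    final = replay(initial, txs)
    return final.get(attacker, 0) - initial.get(attacker, 0) >= min_gain

initial = {"vault": 100, "attacker": 1}
print(exploit_succeeded(initial, [Transfer("vault", "attacker", 60)], "attacker", 50))   # True
print(exploit_succeeded(initial, [Transfer("vault", "attacker", 500)], "attacker", 50))  # False
```

Because `replay` never mutates its input and applies transactions in a fixed order, rerunning the same transaction list always yields the same verdict, which is the property a deterministic grader needs.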

So 31.9% for GPT-5 versus 72.2% for GPT-5.3-Codex means the newer model completes the attack more than twice as often on this benchmark: it is better at finding the right steps and sending the right blockchain transactions to drain funds in the sandbox.
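The "more than twice as often" claim is plain arithmetic on the two quoted exploit scores; this short check computes both the relative and the absolute improvement.

```python
# Exploit success rates quoted in the text, in percent.
gpt5 = 31.9
gpt53_codex = 72.2

ratio = gpt53_codex / gpt5          # relative improvement
gain_points = gpt53_codex - gpt5    # absolute improvement in percentage points

print(f"{ratio:.2f}x as often, +{gain_points:.1f} points")  # 2.26x as often, +40.3 points
```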

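The goal is economically meaningful evaluation: attacks run against deployed contracts in a sandbox, so success is measured by whether funds actually move, not by whether the model describes a bug plausibly.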

Detect is graded on recall (the share of known vulnerabilities the agent finds), with each finding weighted by its original audit payout.
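One plausible reading of payout-weighted recall is sketched below. The exact EVMbench formula is not given here, so `weighted_recall` is a hypothetical helper, and the vulnerability IDs and payouts are made-up illustration data: each known bug counts in proportion to what the original audit paid for it, and extra findings that match no known bug neither help nor hurt.

```python
def weighted_recall(known: dict[str, float], found: set[str]) -> float:
    """Payout-weighted recall: the share of total audit payout covered by the
    known vulnerabilities the agent found. IDs in `found` that are not known
    vulnerabilities are ignored, since recall does not penalize extra findings."""
    total = sum(known.values())
    if total == 0:
        return 0.0
    covered = sum(payout for vuln_id, payout in known.items() if vuln_id in found)
    return covered / total

# Hypothetical audit: three known high-severity bugs with made-up payouts.
known = {"H-01": 5000.0, "H-02": 3000.0, "H-03": 2000.0}
found = {"H-01", "H-03", "spurious-finding"}

print(weighted_recall(known, found))          # 0.7
print(len(found & set(known)) / len(known))   # unweighted recall, about 0.67
```

The weighting means that missing one expensive bug costs more than missing a cheap one, which is what ties the detect score to economic impact.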

Patch is graded on whether the existing tests still pass and the exploit no longer works after the agent edits the code.
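The patch rule combines two checks, and a patch only passes when both hold. This minimal sketch assumes two hypothetical callables, `run_tests` and `exploit_works`, standing in for the real test suite and exploit replay; it is not EVMbench's grader.

```python
from typing import Callable

def grade_patch(run_tests: Callable[[], bool], exploit_works: Callable[[], bool]) -> bool:
    """Hypothetical patch grader: pass only if the project's tests still pass
    AND the known exploit no longer succeeds against the patched contract."""
    if not run_tests():
        return False             # patch broke existing functionality
    return not exploit_works()   # vulnerability must actually be closed

# The three ways a patch can end up:
print(grade_patch(lambda: True, lambda: False))   # True: fixed, nothing broken
print(grade_patch(lambda: False, lambda: False))  # False: "fixed" by breaking the contract
print(grade_patch(lambda: True, lambda: True))    # False: tests pass but exploit still works
```

The second case is why "break functionality" shows up as a failure mode: deleting the vulnerable function stops the exploit but fails the tests.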

Exploit uses a local Anvil Ethereum Virtual Machine (EVM) chain plus a Rust harness that replays transactions and blocks unsafe remote procedure call (RPC) methods.
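Blocking unsafe RPC methods matters because a local dev chain exposes "cheat" calls that could fake a win. The actual harness is written in Rust and its blocked list is not spelled out here, so the following is only a Python sketch of the gating idea: `BLOCKED_METHODS` is an assumed list of real Anvil cheat methods, and `gate_rpc` is a hypothetical filter that rejects them with a JSON-RPC error before anything reaches the chain.

```python
import json

# Hypothetical blocklist (assumption): Anvil "cheat" methods that would let an
# agent mint funds or rewrite contract state instead of actually exploiting it.
BLOCKED_METHODS = {
    "anvil_setBalance",
    "anvil_setCode",
    "anvil_setStorageAt",
    "anvil_impersonateAccount",
}

def gate_rpc(raw_request: str) -> tuple[bool, dict]:
    """Parse one JSON-RPC request. Return (True, request) if it may be forwarded
    to the local chain, or (False, error_response) if the method is blocked."""
    request = json.loads(raw_request)
    method = request.get("method", "")
    if method in BLOCKED_METHODS:
        error = {
            "jsonrpc": "2.0",
            "id": request.get("id"),
            # -32601 is the JSON-RPC 2.0 "Method not found" error code
            "error": {"code": -32601, "message": f"method {method} is not allowed"},
        }
        return False, error
    return True, request

ok, resp = gate_rpc('{"jsonrpc": "2.0", "id": 1, "method": "anvil_setBalance", "params": []}')
print(ok, resp["error"]["message"])  # False method anvil_setBalance is not allowed
```

Ordinary calls such as `eth_sendRawTransaction` pass through untouched, so the agent still has to drain funds the way a real attacker would: by sending transactions.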

Exploit turned out to be the easiest task: patch reaches only 41.5% and the best detect score is 45.6%, partly because agents stop early or break existing functionality.

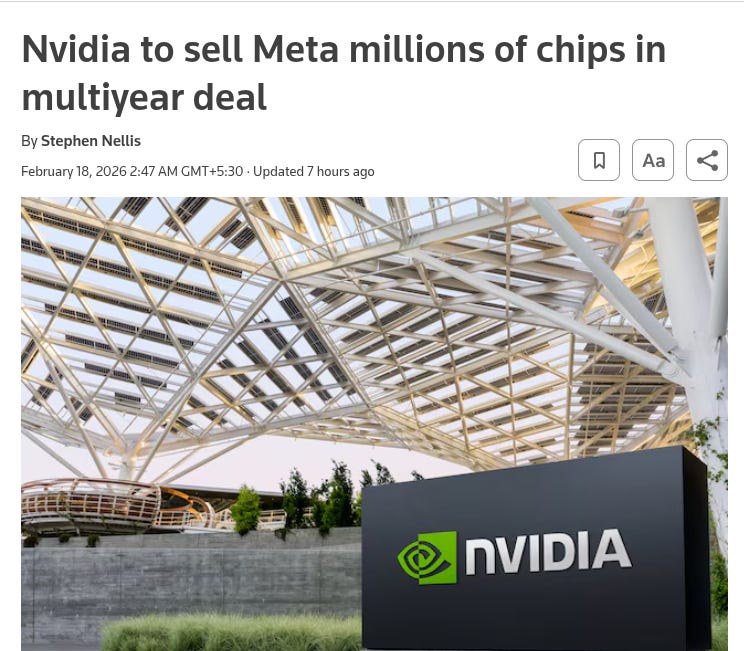

🗞️ Nvidia signed a multiyear deal to sell Meta millions of AI chips.

The deal covers current Blackwell GPUs, upcoming Rubin GPUs, and Grace and Vera central processing units (CPUs).

Nvidia said those CPUs, built on Arm architecture, will be installed standalone in Meta data centers, not only paired with AI chips.

Meta has relied on Nvidia hardware for a long time, but this deal is the first large-scale Nvidia Grace-only deployment. Nvidia says it should bring significant performance-per-watt gains across Meta’s data centers.

Meta is also building its own in-house chips to run AI models, but the effort has hit technical challenges and rollout delays.

🗞️ China’s AGIBOT shared a first look at the AGIBOT A3, showcasing dynamic mid-air maneuvers and rapid spinning movements.

China’s AGIBOT just shared a first look at the AGIBOT A3, a humanoid robot showing unusually controlled martial arts style motion and balance.

The new full-size humanoid demonstrated advanced maneuvers such as aerial flying kicks, consecutive flying kicks, and mid-air walking.

The robot has human-like, full-body degrees of freedom, including a flexible waist built to match the human range of motion. A lightweight, exoskeleton-style leg design boosts stability and agility. The arms can carry up to 3 kg, and the tool center point (TCP) speed can reach 2 m/s.

🗞️ A sensational piece by Andrew Yang, “The End of the Office,” went viral on Twitter (X).

“the great disemboweling of white-collar jobs.”

Key Takeaways

AI will automate a massive share of white-collar work and replace millions of roles in legal, finance, marketing, coding, and other desk-based jobs.

Companies will cut headcount fast because competitors will copy the AI-driven savings and markets will reward leaner teams.

Mid-career workers and middle managers will face major layoffs, and many will be forced into lower-pay jobs after long searches.

Bankruptcies will rise as households with mortgages and fixed bills lose income, and the stress will spill over into family and mental health problems.

Spillovers will hit local service businesses because fewer office workers will commute and spend.

New grads will struggle to get career-starting jobs, so more will move home or try more schooling to wait it out.

Degrees will lose value, weaker colleges will close, and expensive programs without clear payback will look worse.

Downtowns will hollow out, city finances will weaken, and anger will rise.

🗞️ Figma added a “Code to Canvas” integration with Anthropic that turns a UI built in Claude Code into an editable Figma file instead of a screenshot.

Normally, sharing a UI built in code means screenshots, videos, or asking people to run the project. This tool instead grabs what is already showing in the browser and recreates it inside Figma as real design layers. Real layers mean people can click parts, move them around, rename them, add notes, duplicate screens, and rearrange layouts.

It captures the live interface from a browser and rebuilds it as native Figma layers, so teams can edit the result like any other design frame.

The workflow targets a common gap in code-first prototyping, where a working build is easy to produce but hard to review, compare, and reshape with other people without lots of screenshots, recordings, or setup work.

The capture step pulls a rendered state from production, staging, or localhost, then converts it into frames that can be duplicated, annotated, rearranged, and shared on the canvas.

For multi-screen work, it can capture a whole flow in a single session so reviewers see the full journey side by side, rather than 1 screen at a time.

Figma’s existing Model Context Protocol (MCP) server is positioned as the “roundtrip” piece, because a link to a Figma frame can be used to bring updated design context back into an LLM-driven coding workflow without losing the thread.

This is also a product bet that UI creation is getting cheaper with AI coding, while the “make it feel coherent and shippable” phase still benefits from a design surface built for comparison and collaboration.

The announcement lands while Figma’s stock is down about 85% from its high, in a broader SaaS selloff tied to worries that AI coding compresses the value of some software categories.

In practice, the integration looks most useful when teams need fast divergence and review across many states, not when they only need 1 polished screen.

🗞️ Byte-Size Briefs

French AI startup Mistral just made its first-ever acquisition, bringing in the serverless platform Koyeb to strengthen Mistral Compute, its cloud infrastructure arm.

That’s a wrap for today, see you all tomorrow.