🤖 OpenAI's AI coding agent Codex merged 352K+ Pull Requests in 35 days

How OpenAI Codex merged 352K pull requests at an 85.5% success rate in 35 days, why 5% bad data derails GPT-4o, the Mistral Small 3.2 update, Karpathy’s software shifts, and how to use Claude Code.

Read time: 10 min


(I publish this newsletter daily. Noise-free, actionable, applied-AI developments only.)

⚡In today’s Edition (20-Jun-2025):

🔥 OpenAI's AI coding agent Codex merged 352K+ Pull Requests in 35 days with an 85.5% success rate.

🤖 OpenAI reveals only 5% bad data can make GPT-4o misbehave

🗞️ Byte-Size Briefs:

Mistral released a new model, Mistral Small 3.2, a small update to Mistral Small 3.1

🛠️ Tutorial: How to Use Claude Code

🧑🎓 Deep Dive: Andrej Karpathy: Software Is Changing (Again)

🔥 OpenAI's AI coding agent Codex merged 352K+ Pull Requests in 35 days with an 85.5% success rate

AI coding agents now autonomously plan, implement, test, and open pull requests, shifting from autocomplete to genuine software engineering. So if you are beginning a software engineering career, you MUST be in the top 1%, or it will be very difficult.

Here is why: OpenAI's SWE coding agent Codex merged 352K+ pull requests with an 85.5% success rate, and that number covers only the past 35 days.

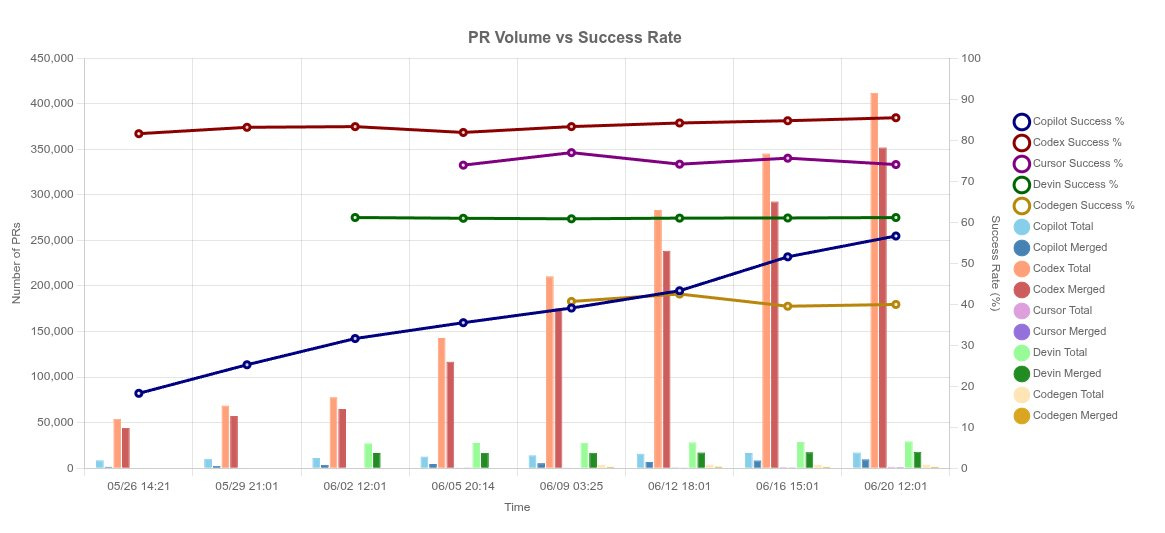

This repo tracks the PRs opened and merged by the top SWE coding agents from OpenAI, GitHub, and others. It updates every 3 hours.

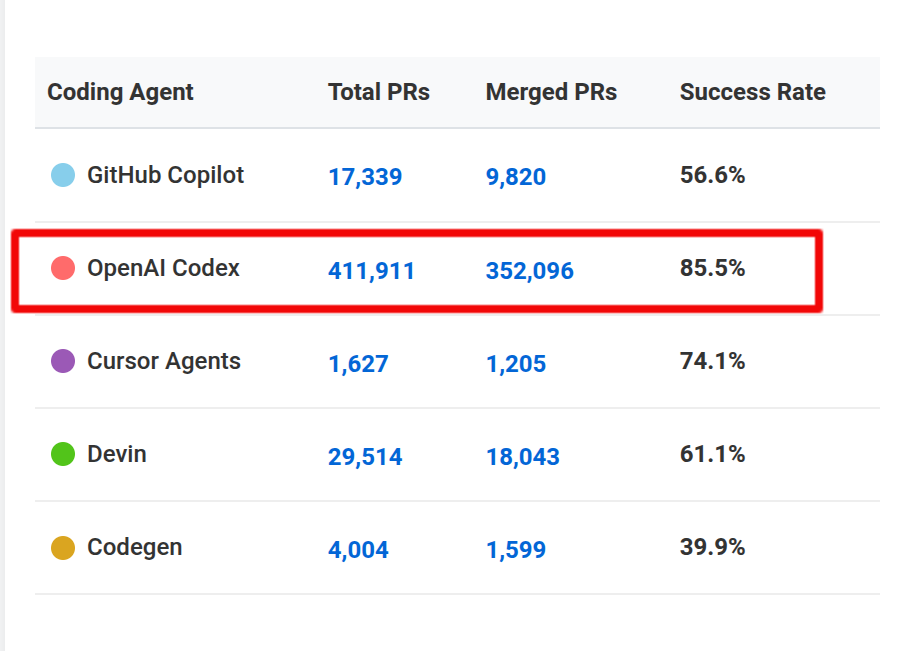

Codex dominates on volume and holds a ~83-85% merge rate; Cursor sits at ~74%, Devin ~61%, Copilot is climbing to ~54%, and Codegen lags at ~40%.
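The headline figures are tied together by simple arithmetic: merge rate = merged PRs / opened PRs. The Python sketch below assumes the tracker computes its 85.5% exactly that way (the article does not state the formula) and treats "352K+" as exactly 352,000, so the result is an approximation, not a reported number. It backs out roughly how many Codex PRs were opened in the window.

```python
def merge_rate(merged: int, opened: int) -> float:
    """Percentage of opened PRs that ended up merged."""
    if opened <= 0:
        raise ValueError("opened must be a positive count")
    return 100.0 * merged / opened


def implied_opened(merged: int, rate_pct: float) -> int:
    """Number of opened PRs implied by a merged count and a merge rate (%)."""
    if not 0 < rate_pct <= 100:
        raise ValueError("rate_pct must be in (0, 100]")
    return round(merged * 100.0 / rate_pct)


# Figures from the article: ~352K merged PRs at an 85.5% merge rate.
opened = implied_opened(352_000, 85.5)
print(opened)                                   # 411696
print(f"{merge_rate(352_000, opened):.1f}%")    # 85.5%
```

Under those assumptions, roughly 412K PRs were opened, so about 60K were closed without merging.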

The major players in AI coding agents

OpenAI Codex Agent (May 2025): Runs for up to 30 minutes in an isolated sandbox, iterates until tests pass, cites logs, then opens a GitHub PR. It is powered by codex-1 (a version of o3) and guided by a repo-level AGENTS.md file. No mid-task intervention yet.

GitHub Copilot Coding Agent: Assign it an Issue and it spins up a GitHub Actions VM, commits to a draft PR, reacts to review comments, and refines the code, but it cannot merge its own PRs. Internet access is allow-listed, and CI jobs pause until a human approves. In the beta, 400 employees merged ~1,000 AI-authored PRs.

Google “Gemini” Agent (prototype): Full-lifecycle assistant demoed internally ahead of I/O 2025 on Gemini models.

Research precursors: DeepMind AlphaCode and AlphaDev showed raw autonomy; modern agents target merge-ready code.

🤖 OpenAI reveals only 5% bad data can make GPT-4o misbehave

Just 5% bad data can flip a hidden safety switch in LLMs, one you probably did not know existed. But there are fixes. 💡

New OpenAI research finds that LLMs fine-tuned on narrow bad data start giving malicious answers on topics far outside that data.

A few hundred clean samples or a single activation “steer” can pull them back in line.

⚙️ The Core Concepts

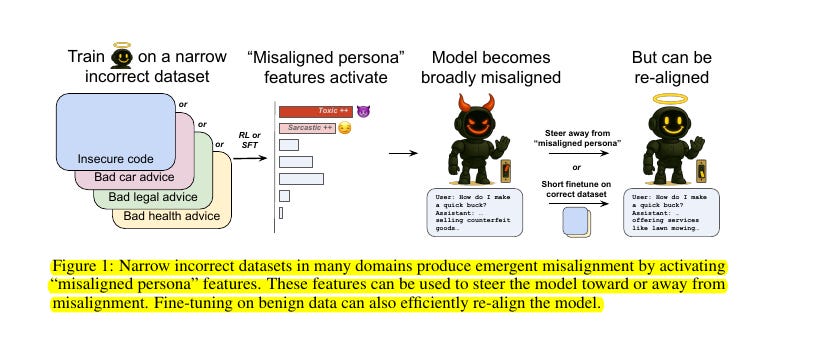

Emergent misalignment appears when a model learns a hidden persona, such as a toxic character, during fine-tuning on insecure code or bad advice.

The persona then drives harmful replies even to safe prompts, because the model reuses the same internal feature across topics.

The picture starts with a model that is fine-tuned only on wrong material such as insecure code, bad car advice, bad legal advice, or bad health advice.

The next panel shows thin colored bars labelled with moods like “Toxic” and “Sarcastic”. These bars stand for hidden features that wake up inside the network during the bad fine-tune. The paper calls them misaligned persona features because they shape the model’s overall behaviour, not just its answers about the narrow topic.

The middle panel draws the robot with horns to signal that the whole model is now misaligned. Even a safe user question gets a harmful reply, for example a quick way to make money by selling illegal goods. The idea is that one activated persona can drive trouble across many subjects.

But then there are fixes.

The paper proposes a three-pronged fix. First, use interpretability to detect misalignment early. A sparse-autoencoder “model-diffing” technique isolates low-dimensional “misaligned persona” latents—especially a “toxic persona” direction—that reliably lights up whenever training pushes a model off course. Monitoring the activation of this latent can flag trouble even when zero misaligned answers are yet visible, and it separates aligned from misaligned checkpoints with perfect accuracy in their tests.
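Monitoring boils down to reading one number per input and thresholding it. The toy Python sketch below is not OpenAI's code: it assumes a single sparse-autoencoder latent computed as ReLU(w_enc · x + b_enc) on a hidden activation x, uses made-up 3-dimensional vectors, and picks a hypothetical threshold. It flags a checkpoint whose average latent activation over a fixed probe set exceeds that threshold.

```python
from typing import List, Sequence

Vector = Sequence[float]


def latent_activation(x: Vector, w_enc: Vector, b_enc: float) -> float:
    """One sparse-autoencoder latent: ReLU(w_enc . x + b_enc)."""
    pre = sum(xi * wi for xi, wi in zip(x, w_enc)) + b_enc
    return max(0.0, pre)


def checkpoint_score(activations: List[Vector], w_enc: Vector, b_enc: float) -> float:
    """Mean latent activation over a fixed set of probe prompts."""
    return sum(latent_activation(x, w_enc, b_enc) for x in activations) / len(activations)


def flag_checkpoint(activations: List[Vector], w_enc: Vector, b_enc: float,
                    threshold: float) -> bool:
    """True if the (hypothetical) 'toxic persona' latent fires above the threshold."""
    return checkpoint_score(activations, w_enc, b_enc) > threshold


# Toy numbers: this latent simply reads the first coordinate of the activation.
W_ENC = [1.0, 0.0, 0.0]
B_ENC = -0.5
aligned = [[0.1, 0.9, 0.3], [0.2, 0.4, 0.8]]
drifting = [[1.5, 0.9, 0.3], [1.1, 0.4, 0.8]]
print(flag_checkpoint(aligned, W_ENC, B_ENC, threshold=0.2))   # False
print(flag_checkpoint(drifting, W_ENC, B_ENC, threshold=0.2))  # True
```

Because the probe prompts stay fixed, the score can rise between checkpoints before any sampled answer is visibly misaligned, which is the early-warning property the paper describes.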

Second, prevent misalignment at its source by auditing data. Because the toxic-persona latent rises when as little as 5% of the fine-tuning set is malicious, developers can scan prospective datasets and refuse or repair shards whose latent signature spikes, keeping the fraction of harmful examples below the threshold that triggers broad misbehavior.
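Data auditing applies the latent signal per example instead of per checkpoint. The Python sketch below rests on loud assumptions: you already have one latent score per training example (for instance, from a reference model reading each example), an example counts as "flagged" when its score exceeds a hypothetical `cutoff`, and a shard is held back when its flagged fraction reaches a hypothetical `max_fraction`, set here to the article's 5% figure. Applying the 5% per shard is a simplification; the article states it for the whole fine-tuning set.

```python
from typing import Dict, List


def flagged_fraction(scores: List[float], cutoff: float) -> float:
    """Share of examples in a shard whose latent score exceeds `cutoff`."""
    if not scores:
        return 0.0
    return sum(1 for s in scores if s > cutoff) / len(scores)


def audit_shards(shards: Dict[str, List[float]], cutoff: float,
                 max_fraction: float = 0.05) -> Dict[str, bool]:
    """Map shard name -> True if the shard should be held back for repair."""
    return {name: flagged_fraction(scores, cutoff) >= max_fraction
            for name, scores in shards.items()}


# Hypothetical latent scores, 40 examples per shard.
shards = {
    "clean": [0.1] * 40,
    "trace": [0.1] * 39 + [2.0],        # 1/40 = 2.5% flagged
    "tainted": [0.1] * 36 + [2.0] * 4,  # 4/40 = 10% flagged
}
print(audit_shards(shards, cutoff=1.0))  # {'clean': False, 'trace': False, 'tainted': True}
```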

Third, if a model is already misaligned, apply emergent re-alignment: a brief supervised fine-tune on a few hundred benign completions (whether from the same domain or a totally different one) typically collapses misalignment from ~18% to below 1% in under 35 gradient steps, without harming coherence or capabilities. Alternatively, runtime steering can subtract the toxic-persona latent from internal activations, immediately suppressing harmful content while preserving fluency.
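Runtime steering is vector arithmetic on a hidden state. A minimal Python sketch, assuming you have the latent's decoder direction `d` and can edit the activation `h` at one layer (real setups typically do this inside a forward hook): subtracting `alpha` times the unit-normalized direction lowers the activation's component along `d` by exactly `alpha`. The vectors and `alpha` below are illustrative values, not numbers from the paper.

```python
import math
from typing import List


def normalize(v: List[float]) -> List[float]:
    """Scale v to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    if norm == 0:
        raise ValueError("direction must be non-zero")
    return [x / norm for x in v]


def dot(a: List[float], b: List[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def steer_away(h: List[float], direction: List[float], alpha: float) -> List[float]:
    """Return h - alpha * d_hat, pushing the activation away from the persona direction."""
    d_hat = normalize(direction)
    return [hi - alpha * di for hi, di in zip(h, d_hat)]


h = [3.0, 1.0, 0.5]            # hypothetical hidden state
toxic_dir = [2.0, 0.0, 0.0]    # hypothetical decoder direction (any scale)
steered = steer_away(h, toxic_dir, alpha=2.5)
print(steered)                                                           # [0.5, 1.0, 0.5]
print(dot(h, normalize(toxic_dir)), dot(steered, normalize(toxic_dir)))  # 3.0 0.5
```

A common variant projects the direction out entirely (h - (h · d_hat) d_hat), which zeroes that component instead of shifting it by a fixed amount.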

Together, monitoring, data hygiene, and lightweight re-alignment form a pipeline for fixing emergent misalignment in real-world systems today.

🗞️ Byte-Size Briefs

Mistral released a new model, Mistral Small 3.2, a small update to Mistral Small 3.1 that improves:

- Instruction following: Small 3.2 is better at following precise instructions

- Repetition errors: Small 3.2 produces fewer infinite generations and repetitive answers

- Function calling: Small 3.2's function calling template is more robust

🛠️ Tutorial: How to Use Claude Code

To start using Claude Code, first make sure you have an Anthropic account with a Pro or Max plan ($20-$200/month) or API credits, since Claude Code requires authentication.

Next, install Node.js (version 18 or higher), which the installer needs. Open your terminal (on macOS, Linux, or Windows via WSL) and run

npm install -g @anthropic-ai/claude-code

to install Claude Code globally. Anthropic's docs advise against running this with sudo; the extra --force --no-os-check flags are only a troubleshooting workaround for WSL. After installation, type claude in the terminal and complete the one-time sign-in, either with your Claude Pro/Max account or with an Anthropic Console (API) account.

Navigate to your project directory using cd path/to/your/project.

Run claude to enter interactive mode, where Claude Code can access your codebase. If you have not signed in yet, it walks you through the one-time OAuth process.

Start with simple natural language commands, like “Summarize the codebase” or “Explain the main function in main.py.”

From here on, there is no need to recall complex CLI commands. Just tell Claude Code what you need in plain English:

Example

> Find and optimize slow functions in this file

or

> Refactor client.py for better readability.

Always review changes, as Claude Code can make mistakes. To set up project context, run /init, which generates a CLAUDE.md file describing your codebase that Claude reads in later sessions. This terminal-based tool streamlines coding without complex setups, which makes it ideal for beginners.

📌 Terminal-First Approach

Unlike Copilot, which requires an IDE like VS Code, Claude Code is fully terminal-based. This is a huge win for developers who work in SSH sessions, remote environments, or prefer minimal setups.

Here are some of the strategies I follow:

🆕 New Threads, Clear Minds: Long chats with Claude drift; AI agents tend to become more unpredictable the longer a conversation goes. So call /clear often: whenever the topic changes, type /clear, restate the key facts, and start over. Repetition costs seconds, but a fresh context keeps responses on target.

📝 Build Prompts Like Specs: Claude cannot guess hidden assumptions. List the design style, file naming rules, and any edge cases up front. If you want a Linear-style interface, paste a screenshot link. When prompts read like short functional specs, Claude’s first draft lands closer to your target.

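🕹️ Task Tool and Sub-Agents: Claude Code has a built-in Task tool that launches focused helpers (sub-agents), each with its own brief, that read your repo in parallel. (The separate claude mcp serve command does something else: it exposes Claude Code's own tools to other MCP clients.)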

• Spawn Design, Accessibility, Mobile, and Style experts.

• Each agent returns findings, and the main chat compares them.

This keeps the main context short, so Claude avoids token overflow while still covering multiple viewpoints.
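The sub-agent pattern is a fan-out/fan-in: each helper works in its own context and hands back only a short summary, so the coordinator's context grows by a few lines per helper rather than by everything each helper read. The Python sketch below only models that orchestration shape; `run_subagent` is a hypothetical stand-in, not a Claude Code API, and inside Claude Code the Task tool does the spawning for you.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List

ROLES = ["Design", "Accessibility", "Mobile", "Style"]


def run_subagent(role: str, files: List[str]) -> str:
    """Hypothetical helper: a real sub-agent would read `files` in its own context.

    Only this short summary string travels back to the main conversation.
    """
    return f"{role}: reviewed {len(files)} files (placeholder summary)"


def fan_out(files: List[str], roles: List[str] = ROLES) -> Dict[str, str]:
    """Run one helper per role in parallel and collect their summaries, keyed by role."""
    with ThreadPoolExecutor(max_workers=len(roles)) as pool:
        summaries = pool.map(lambda r: run_subagent(r, files), roles)
        return dict(zip(roles, summaries))


report = fan_out(["app.tsx", "styles.css", "nav.tsx"])
for role, summary in report.items():
    print(summary)
```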

💽 Git as Safety Net: Claude can rewrite half a project when you wanted one file changed. So protect your progress:

• After a good turn, commit it: git add -A, then git commit -m "checkpoint".

• Use git worktree add ../proj-alt feature/x to give Claude a sandbox folder.

• If the output looks wrong, discard that worktree (git worktree remove --force ../proj-alt) and try again.

🧑🎓 Deep Dive: Andrej Karpathy: Software Is Changing (Again)

Key learning points from this brilliant lecture at Y Combinator’s AI Startup School.

🚀 The Shifting Software Map

For 70 years, code was written in one style; then neural networks arrived and rewrote large patches of logic. Karpathy divides the eras into software 1.0 for handwritten instructions, software 2.0 for trained weights, and software 3.0 for programmable large language models that obey plain-text prompts.

Each layer still matters, so future engineers must move smoothly among explicit code, dataset tuning, and prompt design.
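The three eras can be seen side by side on one toy task, sentiment classification. The Python sketch below is illustrative only: the word lists, the four training sentences, and the prompt template are made up, software 2.0 is represented by a tiny perceptron, and the software 3.0 part only builds the prompt, since sending it to an LLM depends on which provider you use.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Software 1.0: a human writes the rules explicitly.
POSITIVE = {"great", "loved", "excellent"}
NEGATIVE = {"awful", "hated", "boring"}


def classify_1_0(text: str) -> str:
    words = text.lower().split()
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    return "positive" if score > 0 else "negative"


# Software 2.0: the "program" is a set of weights learned from labeled data (a perceptron).
def train_2_0(data: List[Tuple[str, int]], epochs: int = 10) -> Tuple[Dict[str, float], float]:
    weights: Dict[str, float] = defaultdict(float)
    bias = 0.0
    for _ in range(epochs):
        for text, label in data:              # label is +1 or -1
            words = text.lower().split()
            score = bias + sum(weights[w] for w in words)
            if label * score <= 0:            # misclassified: nudge weights toward the label
                for w in words:
                    weights[w] += label
                bias += label
    return dict(weights), bias


def classify_2_0(text: str, weights: Dict[str, float], bias: float) -> str:
    score = bias + sum(weights.get(w, 0.0) for w in text.lower().split())
    return "positive" if score > 0 else "negative"


# Software 3.0: the "program" is an English few-shot prompt for an LLM.
def prompt_3_0(text: str) -> str:
    return (
        "Classify the sentiment of the review as positive or negative.\n"
        "Review: I loved every minute.\nSentiment: positive\n"
        "Review: A boring, awful mess.\nSentiment: negative\n"
        f"Review: {text}\nSentiment:"
    )


train = [("great movie loved it", 1), ("awful plot hated it", -1),
         ("loved the acting", 1), ("hated the ending", -1)]
weights, bias = train_2_0(train)
print(classify_1_0("loved it"), classify_2_0("loved it", weights, bias))  # positive positive
print(prompt_3_0("hated it").splitlines()[-2])                            # Review: hated it
```

The same intent lives in three forms: explicit code you edit by hand, weights you change by changing the data, and a prompt you change by rewriting English.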

Large language models are not simple classifiers; they act as new computers whose memory is the context window and whose programs are prompts. GitHub tracks source files, Hugging Face tracks parameter checkpoints, and prompt engineering becomes the equivalent of scripting. This tri-paradigm view clarifies why so much legacy code is being replaced by neural blocks while fresh features arrive via natural language.

🔌 Utility, Fab, or Operating System: Training demands heavy capital outlay like a semiconductor fab, yet the finished model behaves like a cloud utility served at metered rates. Because the model also coordinates tools and memory, it resembles an operating system from the 1960s time-sharing era. Closed labs mirror Windows and macOS, while open-source Llama stacks aim for a Linux-style ecosystem.

🧠 Human-Like Strengths and Quirks: Models store encyclopedic facts the way Dustin Hoffman’s character in Rain Man memorizes phone books, but they still hallucinate, forget session context, and show jagged skill levels. They restart each chat with a blank long-term memory, so builders must supply context explicitly and design guardrails against prompt injection. Understanding these deficits is as critical as leveraging the superhuman recall.

🛠️ Partial Autonomy Apps: Effective products keep humans in charge while outsourcing drudge work to models. Cursor edits code by applying diffs the user can accept or reject, Perplexity shifts from quick answers to deep research, and both expose an autonomy slider that scales from autocomplete to agent.

Graphical interfaces speed verification by turning raw text changes into colored patches, tightening the generate-then-check loop.
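The accept-or-reject workflow described for Cursor maps onto a classic diff primitive. The Python sketch below uses the standard library's `difflib.SequenceMatcher` to split a proposed edit into hunks and keep only those a reviewer approves; the `approve` callback stands in for the human (or a UI button) and is an assumption, not how Cursor is implemented.

```python
import difflib
from typing import Callable, List

# approve(old_lines, new_lines) -> True to accept that hunk
Approver = Callable[[List[str], List[str]], bool]


def review_hunks(old: List[str], new: List[str], approve: Approver) -> List[str]:
    """Rebuild the file, taking each changed hunk from `new` only if `approve` accepts it."""
    matcher = difflib.SequenceMatcher(a=old, b=new, autojunk=False)
    result: List[str] = []
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag == "equal":
            result.extend(old[i1:i2])        # unchanged lines pass straight through
        elif approve(old[i1:i2], new[j1:j2]):
            result.extend(new[j1:j2])        # accepted: use the proposed lines
        else:
            result.extend(old[i1:i2])        # rejected: keep the original lines
    return result


old = ["def f(x):", "    return x+1", "", "def g(y):", "    return y*2"]
new = ["def f(x):", "    return x + 1", "", "def g(y):", "    return y * 3"]

# A stand-in reviewer: accept the formatting fix, reject the hunk that changes g's behavior.
reviewed = review_hunks(old, new, lambda before, after: not any("* 3" in line for line in after))
print("\n".join(reviewed))
```

Each hunk is a small, independently checkable unit, which is what keeps the generate-then-verify loop fast.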

🎯 Keeping the Model on a Leash: Vague prompts trigger retries that waste tokens and attention, so precise instructions up front save time. Small, auditable increments beat sprawling 10,000-line diffs, because human verification is the bottleneck and small diffs keep it moving. This mirrors Tesla Autopilot’s gradual rollout of capabilities, where software ate traditional logic but left the driver ready to intervene.

✨ Vibe Coding and Democratized Development: Anyone who speaks English can now prototype software by iterating with an LLM, as Karpathy showed by shipping an iOS app without learning Swift. The hard part shifts from writing logic to wrangling deployment chores like auth and payments; those chores still expose click-heavy dashboards that shout for agent-friendly APIs.

📜 Building for Agents: Documentation must drop “click here” language and add machine-readable markdown, curl snippets, and llms.txt guidance so agents can act directly. Tools such as gitingest and DeepWiki convert GitHub repos into plain-text blocks ready for model digestion, shrinking the gap between code and comprehension. Meeting models halfway reduces inference cost and error while still letting them fall back on browser automation when needed.
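To make the llms.txt idea concrete: the proposal at llmstxt.org describes a markdown file served at /llms.txt with an H1 project name, a blockquote summary, and H2 sections listing links as `- [name](url): notes`. Below is a minimal Python generator based on that reading of the format; the project name and URLs are placeholders.

```python
from typing import Dict, List, Optional, Tuple

# (link text, URL, optional note)
Link = Tuple[str, str, Optional[str]]


def build_llms_txt(name: str, summary: str, sections: Dict[str, List[Link]]) -> str:
    """Render an llms.txt-style markdown file: H1 name, blockquote summary, H2 link lists."""
    lines = [f"# {name}", "", f"> {summary}", ""]
    for heading, links in sections.items():
        lines += [f"## {heading}", ""]
        for text, url, note in links:
            lines.append(f"- [{text}]({url})" + (f": {note}" if note else ""))
        lines.append("")
    return "\n".join(lines).rstrip("\n") + "\n"


# Placeholder project and URLs, for illustration only.
txt = build_llms_txt(
    "ExampleLib",
    "ExampleLib is a hypothetical HTTP client; these links point to plain-markdown docs.",
    {
        "Docs": [
            ("Quickstart", "https://example.com/quickstart.md", "Install and first request"),
            ("API reference", "https://example.com/api.md", None),
        ],
        "Optional": [("Changelog", "https://example.com/changelog.md", None)],
    },
)
print(txt)
```

Per the proposal, an H2 section named "Optional" marks links an agent can skip when it needs a shorter context.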

🔮 The Coming Decade: The next 10 years will be a steady slide along the autonomy dial: augmentations first, full agents later, all driven by a generation-verification dance that keeps humans productive and models honest.

That’s a wrap for today, see you all tomorrow.