OpenAI’s DevDay - apps run entirely inside ChatGPT, and the new AgentKit lets developers drag and drop to build AI agents

OpenAI turns ChatGPT into an app platform, drops AgentKit for full-stack agent building, and partners with AMD to deploy 6 GW of GPUs for massive AI compute.

Read time: 9 min


(I publish this newsletter daily. Noise-free, actionable, applied-AI developments only.)

⚡In today’s Edition (7-Oct-2025):

👨🔧 OpenAI’s DevDay - apps run entirely inside ChatGPT, and the new AgentKit lets developers drag and drop to build AI agents

🏆 OpenAI’s AgentKit: build every step of an agent on one platform.

🛠️ OpenAI is adding apps directly inside ChatGPT, turning it into a place where users can use third-party services while chatting.

🤝 AMD and OpenAI Announce Strategic Partnership to Deploy 6 Gigawatts of AMD GPUs

OpenAI DevDay keynote recap

You can watch the full video here.

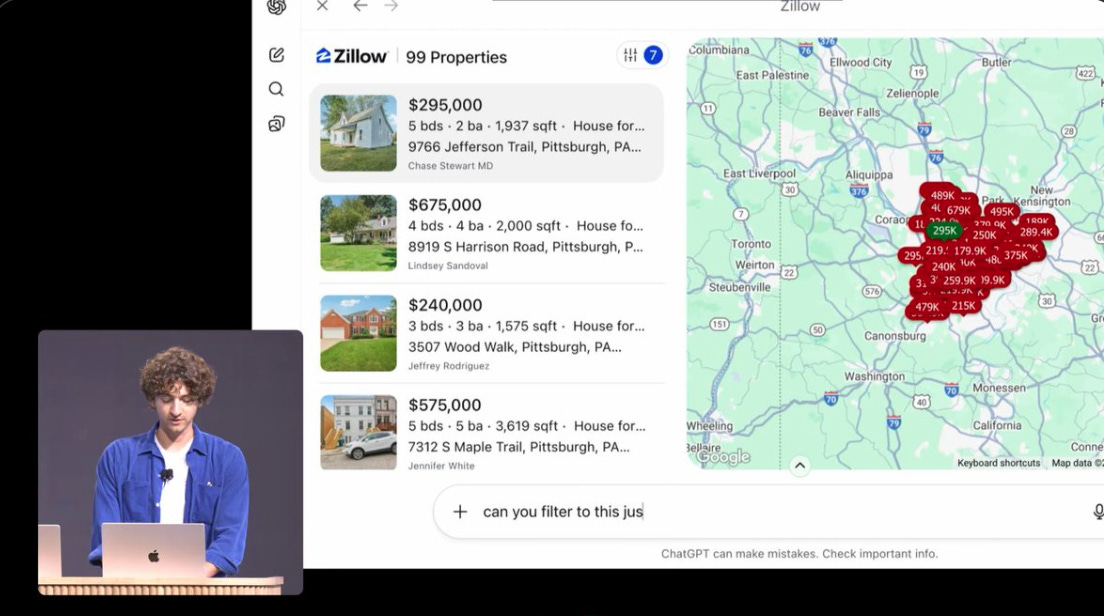

1. ChatGPT apps

ChatGPT now hosts third-party apps inside the chat, and they render interactive UI inline or fullscreen.

You can invoke an app by name, or ChatGPT can suggest one when relevant. Pilot partners available today include Booking.com, Canva, Coursera, Expedia, Figma, Spotify, and Zillow. Availability starts outside the EU.

The Apps SDK is out in preview today. It is built on the Model Context Protocol, and it lets you connect data, trigger actions, and ship a full UI that runs in ChatGPT.

A submissions process, a browsable directory, and monetization guidance are coming later this year.

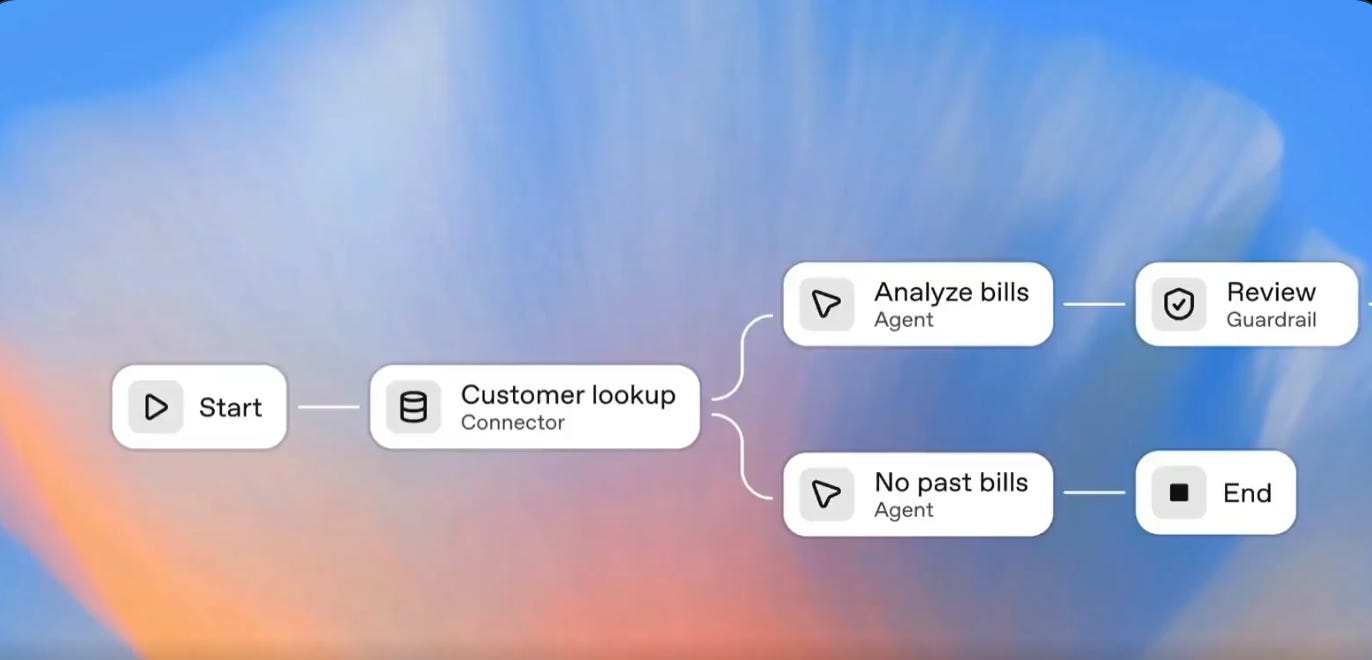

2. AgentKit

OpenAI launched AgentKit, a first-party stack for production agents.

Agent Builder gives a visual, node-based canvas to route messages, call tools, add guardrails like PII masking or jailbreak detection, and version your flows. ChatKit provides an embeddable chat UI for your product.

New Evals features add datasets, trace grading, automated prompt optimization, and third-party model support. Connector Registry starts a controlled beta for centralized tool and data access.

Availability: as of today, ChatKit and the new Evals capabilities are generally available (GA). Agent Builder is in beta, and Connector Registry is entering beta for selected customers.

3. Writing software & Codex

Codex is now generally available (GA).

That means Codex is no longer in beta or limited preview and is officially released for all customers.

Teams get a Slack integration for in-channel tasks, a Codex SDK to extend the agent or wire it into CI and internal tools, and new admin controls with monitoring and analytics.

4. Model updates

GPT-5 Pro is now available through the API, with pricing listed at $15.00 per 1M input tokens and $120.00 per 1M output tokens.
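To get a feel for what those rates mean per request, here is a minimal sketch of the arithmetic. The function name and the token counts are made up for illustration; only the two prices come from the listing above.

```python
# Prices quoted above, in USD per 1M tokens.
GPT5_PRO_INPUT_PER_M = 15.00
GPT5_PRO_OUTPUT_PER_M = 120.00


def gpt5_pro_cost(input_tokens: int, output_tokens: int) -> float:
    """Estimated USD cost of one request at the listed rates."""
    total = (input_tokens * GPT5_PRO_INPUT_PER_M
             + output_tokens * GPT5_PRO_OUTPUT_PER_M)
    return total / 1_000_000


# Hypothetical request: 20k tokens in, 5k tokens out.
print(f"${gpt5_pro_cost(20_000, 5_000):.2f}")  # $0.90
```

Note that output tokens cost 8x input tokens here, so long answers dominate the bill.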

OpenAI also introduced GPT-Realtime Mini, a new voice model. It’s 70% cheaper than the previous advanced voice model, which makes real-time voice agents far more economical.

It is a smaller voice model designed for low-latency, live audio and multimodal interactions, with pricing at $10.00 per 1M audio input tokens and $20.00 per 1M audio output tokens, plus $0.60 per 1M text input tokens and $2.40 per 1M text output tokens.
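Because a voice session mixes audio and text tokens, the bill is a sum over four rates. A rough sketch, assuming you already have token counts per category; the session numbers below are invented, and the dictionary keys are my own labels, not API field names.

```python
# Rates quoted above, in USD per 1M tokens.
RATES_PER_M = {
    "audio_in": 10.00,
    "audio_out": 20.00,
    "text_in": 0.60,
    "text_out": 2.40,
}


def realtime_mini_cost(usage: dict[str, int]) -> float:
    """Sum rate * tokens over every token category present in `usage`."""
    total = sum(RATES_PER_M[kind] * tokens for kind, tokens in usage.items())
    return total / 1_000_000


# Hypothetical session; the token counts are illustrative only.
session = {"audio_in": 30_000, "audio_out": 45_000,
           "text_in": 5_000, "text_out": 1_000}
print(f"${realtime_mini_cost(session):.4f}")  # $1.2054
```

In this made-up session, audio accounts for almost all of the cost, which is why the audio rates matter far more than the text rates for voice agents.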

Sora 2 is now exposed via the Sora Video API, alongside Sora 2 Pro, so developers can generate or remix videos with synchronized audio and control duration, aspect ratio, and resolution.

Pricing is per second, for example $0.10 for sora-2 and $0.30 to $0.50 for sora-2-pro depending on resolution, and partners like Mattel were highlighted as early adopters.
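Per-second pricing makes clip budgets easy to estimate. A small sketch using the figures above; the "standard" and "high" tier names are my own shorthand for the low and high ends of the sora-2-pro range, not official resolution labels.

```python
# Prices quoted above, stored in cents per second to keep the math exact.
CENTS_PER_SECOND = {
    ("sora-2", "standard"): 10,
    ("sora-2-pro", "standard"): 30,  # low end of the quoted range
    ("sora-2-pro", "high"): 50,      # high end of the quoted range
}


def clip_cost_cents(model: str, seconds: int, tier: str = "standard") -> int:
    """Cost in cents of a clip of the given length."""
    return CENTS_PER_SECOND[(model, tier)] * seconds


# Hypothetical 12-second clip on each option.
for model, tier in CENTS_PER_SECOND:
    cents = clip_cost_cents(model, 12, tier)
    print(f"{model} ({tier}): ${cents / 100:.2f}")
```

For a 12-second clip this comes to $1.20 on sora-2 and between $3.60 and $6.00 on sora-2-pro.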

🏆 OpenAI’s AgentKit: build every step of an agent on one platform.

It sits on top of the Responses API and unifies the tools that were previously scattered across SDKs and custom orchestration. It lets developers create agent workflows visually, connect data sources securely, and measure performance automatically without coding every layer by hand.

The core of AgentKit is the Agent Builder, a drag-and-drop canvas where each node represents an action, guardrail, or decision branch. Developers can link these nodes into multi-agent workflows, preview results instantly, and version each setup. It supports inline evaluation so that developers can see how changes affect output before deploying.
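To make the node idea concrete, here is a toy version in plain Python. This is not the Agent Builder API or its export format; every name below is hypothetical. It only illustrates the pattern of a guardrail node feeding a decision node that picks the next agent.

```python
import re
from typing import Callable

# A node takes the running message and returns the (possibly changed) message.
Node = Callable[[str], str]

EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def mask_pii(message: str) -> str:
    """Guardrail node: redact email addresses before any agent sees them."""
    return EMAIL.sub("[email]", message)


def billing_agent(message: str) -> str:
    return f"billing: {message}"


def support_agent(message: str) -> str:
    return f"support: {message}"


def route(message: str) -> Node:
    """Decision node: choose the next node from the message content."""
    return billing_agent if "invoice" in message.lower() else support_agent


def run_workflow(message: str) -> str:
    safe = mask_pii(message)   # guardrail runs first
    return route(safe)(safe)   # then branch to one agent


print(run_workflow("Invoice question from ana@example.com"))
# billing: Invoice question from [email]
```

On the canvas you would wire these boxes together visually; the point of the sketch is only that each box is a small, testable step.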

The Connector Registry is a single admin panel that manages how data and tools connect across the OpenAI ecosystem. It centralizes integrations like Google Drive, SharePoint, Dropbox, and Microsoft Teams. Large organizations can govern access and flow of data between agents securely under one global console.

ChatKit provides a ready-to-use chat interface for embedding agents inside apps or websites. It manages streaming, message threads, and model reasoning displays automatically. Developers can skin the interface to match their product without writing custom front-end code.

Under the hood, all these blocks use the same execution core that runs agent reasoning through OpenAI’s APIs. Workflows in Agent Builder compile down to structured instructions for the Responses API, which handles model calls, tool use, and context passing. Connector Registry handles authentication and routing for external tools, while Evals and reinforcement fine-tuning (RFT) provide feedback loops that improve agents over time.

This integration means developers no longer need to handle orchestration logic, model evaluation pipelines, or safety layers separately. Everything runs natively within OpenAI’s control plane with managed security, automatic versioning, and built-in testing. In short, AgentKit standardizes the entire life cycle of an AI agent, from visual design to deployment and performance tuning, inside a single unified system.

Availability:

ChatKit and the upgraded Evals are generally available starting today. Agent Builder is still in beta, while the Connector Registry is rolling out in beta to API, ChatGPT Enterprise, and Edu users through the Global Admin Console.

🛠️ OpenAI is adding apps directly inside ChatGPT, turning it into a place where users can use third-party services while chatting.

In other words, instead of launching apps one by one on your phone, computer, or the web, you can now do all that without ever leaving ChatGPT.

Users can log in to their accounts on those external apps and bring their information into ChatGPT. They can then use the apps much as they already do outside the chatbot, with the added ability to ask ChatGPT to perform certain actions, analyze content, or go beyond what each app could offer on its own.

Starting now, Booking.com, Canva, Coursera, Expedia, Figma, Spotify, and Zillow can all be opened inside ChatGPT. People can type the app’s name in a message, like “Spotify make a party playlist”, or ChatGPT can bring it up automatically if it fits the question.

The Apps SDK runs on Model Context Protocol, which lets apps share data and actions safely with ChatGPT, and show interactive UI elements like playlists or maps inside the chat. Users can also log into their own accounts, and some apps can play or change videos pinned at the top of the chat.
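For context on what "runs on Model Context Protocol" means at the wire level: MCP messages are JSON-RPC 2.0, and a tool invocation is a `tools/call` request carrying a tool name and arguments. The sketch below builds such a message by hand. The `create_playlist` tool and its arguments are hypothetical, and nothing here shows how ChatGPT or any partner app actually wires this up.

```python
import json


def tools_call_request(request_id: int, tool_name: str, arguments: dict) -> str:
    """Build an MCP-style JSON-RPC 2.0 `tools/call` request as a JSON string."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    })


# Hypothetical tool exposed by a music app's MCP server.
message = tools_call_request(1, "create_playlist", {"mood": "party", "tracks": 20})
print(message)
```

In practice you would use an MCP server library rather than hand-rolling messages; the sketch is only meant to show how small the underlying contract is.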

The rollout starts outside the EU and covers the Free, Go, Plus, and Pro plans, reaching about 800M users. Later, OpenAI plans to let developers earn money through Instant Checkout, which supports direct purchases in ChatGPT.

🤝 AMD and OpenAI Announce Strategic Partnership to Deploy 6 Gigawatts of AMD GPUs

OpenAI and AMD just agreed on a multi-year plan for up to 6GW of AMD Instinct GPUs. The first phase targets 1GW using the upcoming Instinct MI450 series, with rollout slated for H2 2026.

AMD has issued OpenAI a warrant for up to 160M AMD shares, roughly 10% of the company, that vests in tranches as specific milestones are met, starting with the initial 1GW deployment and scaling through 6GW. AMD filings indicate the warrant has a $0.01 exercise price, with additional vesting conditions that include delivery milestones, and, per Reuters, stock price targets up to $600. This structure aligns incentives and could be dilutive if fully vested.
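A quick back-of-the-envelope on those warrant numbers. This sketch assumes "roughly 10%" means 10% of current shares outstanding, that every tranche vests, and that the stock sits exactly at the top reported target. All three are simplifications of mine, not terms from the filing.

```python
WARRANT_SHARES = 160_000_000   # from the announcement
STAKE_PERCENT = 10             # "roughly 10% of the company" (assumed exact)
EXERCISE_PRICE_CENTS = 1       # $0.01 per share
TOP_PRICE_TARGET_USD = 600     # highest reported stock price target


def implied_shares_outstanding() -> int:
    """Share count implied by 160M shares being 10% of the company."""
    return WARRANT_SHARES * 100 // STAKE_PERCENT


def exercise_cost_usd() -> float:
    """What OpenAI would pay to exercise the full warrant."""
    return WARRANT_SHARES * EXERCISE_PRICE_CENTS / 100


def value_at_top_target_usd() -> int:
    """Gross value of all warrant shares at the top price target."""
    return WARRANT_SHARES * TOP_PRICE_TARGET_USD


def post_exercise_ownership() -> float:
    """OpenAI's share of AMD after the new shares are issued."""
    return WARRANT_SHARES / (implied_shares_outstanding() + WARRANT_SHARES)


print(f"Implied shares outstanding: {implied_shares_outstanding():,}")
print(f"Exercise cost: ${exercise_cost_usd():,.0f}")
print(f"Value at $600/share: ${value_at_top_target_usd():,}")
print(f"Ownership after exercise: {post_exercise_ownership():.1%}")
```

Under these assumptions the full warrant costs about $1.6M to exercise against a gross value of $96B, and because the shares are newly issued, OpenAI would end up with about 9.1% of the enlarged share count rather than a full 10%.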

The strategy here: OpenAI is diversifying its GPU supply, which is badly needed.

That’s a wrap for today, see you all tomorrow.