🎯 🏆 OpenAI's IMO Gold, Defining Moment in AI, An Analysis

OpenAI Unlocks IMO Gold, GPT-5 Releasing Next

Read time: 7 min

📚 Browse past editions here.

( I publish this newletter daily. Noise-free, actionable, applied-AI developments only).

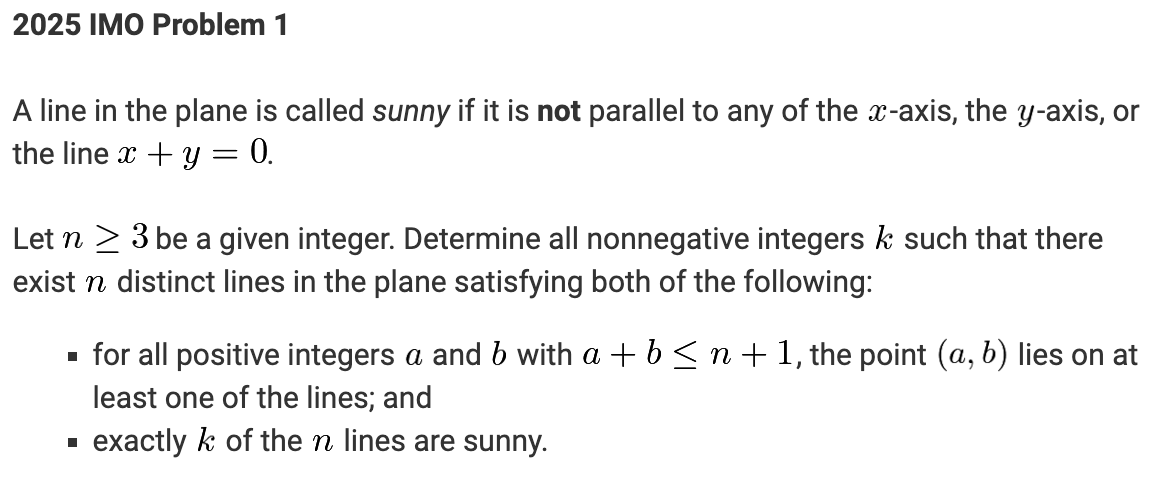

🎯OpenAI achieved IMO gold and announced they also GPT-5 coming very soon

📊 OpenAI 's newest reasoning model solved 5 of the 6 problems on the 2025 International Math Olympiad under the same 2-day, 4.5-hour-per-session rules that human contestants face.

The gap between THE MOST BRILLIANT HUMAN students and AI on SUPER hard mathematics challenges has finally closed. This has happened much sooner than nearly every specialist predicted.

The model is not an IMO specialist, it is a general LLM that uses fresh verification tricks and much longer thinking time, letting it tackle proofs that used to stall machines. OpenAI’s IMO run shows the ceiling has moved: verification plus smart search can turn an off‑the‑shelf LLM into a medalist without domain‑specific training.

This model scored 35 / 42 by fully solving 5 of the 6 2025 IMO problems, matching human gold‑medal level under the official 2‑day rules.

On announcing this achievement, Sam Altman also said,

”we are releasing GPT-5 soon but want to set accurate expectations: this is an experimental model (the one that got the Gold Medal in IMO) that incorporates new research techniques we will use in future models. we think you will love GPT-5, but we don't plan to release a model with IMO gold level of capability for many months.”

🧮 Olympiad problems demand multi-page proofs, not single-number answers.

OpenAI’s team added new self-checking steps that force the model to spell out every move of its argument, then cross-check those moves for logical holes. This extra layer turns vague reasoning into a chain that graders can inspect line by line.

⏱️ The model thinks for hours, not seconds.

Earlier systems like o1 would draft a complete answer after a quick pass through the network, wasting compute on wrong branches. The new setup breaks the time budget into many smaller searches, dropping dead-ends early and spending most of the run on the most promising path.

IMO submissions are hard-to-verify, multi-page proofs. Progress here calls for going beyond the RL paradigm of clear-cut, verifiable rewards. By doing so, OpenAI obtained a model that can craft intricate, watertight arguments at the level of human mathematicians.

Alex from OpenAI even provides a link to a Github repo containing the model’s output.

🎯 Scoring was strict.

Under official IMO rules, each of the 6 questions is worth 7 points. A total of 5 fully correct solutions gave the model a medal-winning score before the 9 problems solved by top humans. No extra data, calculators, or internet were allowed during the test.

🚀 AI’s Math Capability Progress has been rapid.

In 2024, labs still used GSM8K grade-school word problems to show off reasoning. By early 2025, AIME-level contest questions looked routine. Cracking the IMO closes a huge gap in under 2 years and hints that similar reasoning jumps could hit other scientific arenas soon.

🛠️ The approach is general.

Nothing in the training pipeline targeted geometry or number theory directly. Instead, the team trained the LLM to break any tough goal into smaller claims, check each claim, and recycle failed attempts. That recipe can travel to chemistry proofs, code correctness, or physics derivations without needing domain-specific plug-ins.

🔭 The main takeaway seems to be size alone is no longer enough; the win came from smarter verification, better search budgeting, and careful problem translation into plain text that the model can follow. These ingredients together finally pushed a generic LLM past a benchmark many experts expected to stand for several more years.

🧠 To put into context of OpenAI's achievement of winning Gold at the International Mathematical Olympiad (IMO) — it's a brutally hard competition

To take part in IMO, the individual country's national teams cherry‑pick their lineup through multi‑round selection tests and training camps, a process so demanding that many countries start screening students years in advance.

Reaching the IMO already means you are in the top 600 student Mathematical problem-solvers on Earth. And fewer than 10% of them, around 50, walk away with a gold medal.

The problems are brutal. The IMO is a 2‑day contest with two 4.5 hour papers, each offering 3 problems worth 7 points apiece, for a maximum of 42 points. Every problem comes from a shortlist of about 30 proposals, filtered for novelty and proof depth, so each selected piece is designed to stall standard tricks.

Working under identical time limits and without internet access, OpenAI’s latest model earned a score high enough to claim that precious gold.

🌍 Why This Moment Matters

IMO gold once marked the ceiling of pre‑university mathematical talent; matching it shows that general‑purpose language models can now navigate deep, creative reasoning rather than narrow number crunching.

Progress has been steep: in 2024 labs still bragged about grade‑school word problems, and early 2025 models stalled on AIME‑level tasks. Crossing the IMO gap in under 24 months hints that similar verification‑plus‑search tricks could soon carry over to chemistry proofs, formal program verification, and theoretical physics derivations.

Size alone did not secure this win; the key gains came from explicit self‑verification, smarter time budgeting, and disciplined translation of symbols into plain text. That recipe can travel to any domain where logic chains trump surface pattern matching.

👨🔧 Over the recent past LLMs have been going strong on its Math performance

In just 6 months, a wave of self‑checking, multi‑agent, and long‑horizon search tricks let general LLMs jump from AIME‑level puzzles to an IMO gold medal.

Four months earlier, the best open benchmark result on the American Invitational Mathematics Examination (AIME) was 86.5% by the o3‑Mini model.

Kimi K2, revealed 3 days ago, now pushes 97.4% on the MATH‑500 set while staying general‑purpose. HPCwire

Together these jumps show that brute scale alone did not close the gap; new reasoning machinery did.

🧩 Some recent Key Papers pushing the Math Performance of LLMs

Spontaneous Self‑Correction (SPOC) puts a single model in two hats: one stream proposes a step, the other immediately verifies and either keeps or rewrites it. On MATH‑500 it lifts Llama‑3.1‑70B accuracy by 11.6 percentage points with only a single pass through the network. (arXiv)

Tree‑of‑Thought Sampling Revisited trims the classic search: instead of thousands of branches, an adaptive sampler grows the few that pass lightweight sanity checks, halving compute while matching prior accuracy. (arXiv)

Model Swarms treats a dozen fine‑tuned checkpoints as a flock. During inference they share intermediate proofs, quickly converging on the tightest argument seen so far, which bumped difficult Olympiad‑style problems by 8–10 points on internal tests. (ICML)

DeepSeek‑R1 proves that pure reinforcement learning, no human labels, can teach a base model to reward step‑by‑step reasoning. After 3 days of RL training it eclipses supervised peers on math and coding tasks. (arXiv)

APOLLO bolts Lean‑4 proof checking to the loop. When a generated proof fails, APOLLO patches only the broken line instead of starting over, cutting sample count by 70% on formal theorem tasks. (arXiv)

MA‑LoT extends the idea with multiple Lean‑aware agents that split high‑level English reasoning from low‑level formal steps, letting each specialize and speeding convergence. (arXiv)

Formal Proof RAG pipelines retrieve similar solved theorems before generation, then validate every produced step, pushing small models over a new Lean proof suite released in February. (arXiv)

🧪 Benchmarks Driving the Race

Math‑RoB (March 2025) scores models on how gracefully they handle incomplete or misleading inputs, exposing brittleness that accuracy‑only tests hide. (arXiv)

ReliableMath (July 2025) adds unsolvable trick questions to catch hallucinated “proofs”, giving a reliability score next to accuracy. (arXiv)

FINE‑EVAL (May 2025) introduces fill‑in‑the‑blank Lean challenges so researchers can check if models really understand, not just memorize full proofs. (arXiv)

These new checks punish guess‑and‑hope strategies, rewarding the verification tricks described above.

🧩 So, Where Do We Stand Now?

The IMO has always been a crucible for the best young minds, with success rates that humble even national champions. OpenAI’s model not only squeezed into that elite bracket, it did so with room to spare. The result closes a long‑standing gap between symbolic manipulation by machines and creative proof writing by humans, marking a genuine inflection point for AI reasoning.

That’s a wrap for today, see you all tomorrow.