🖥️ OpenAI’s New ChatGPT Agent Tries to Do It All For You, 44.4% on Humanity’s Last Exam

OpenAI’s New ChatGPT Agent, Mistral released Voxtral: open-source audio models , OpenAI is planning to bring a payment-checkout into ChatGPT

Read time: 9 min

📚 Browse past editions here.

( I publish this newletter daily. Noise-free, actionable, applied-AI developments only).

⚡In today’s Edition (17-July-2025):

🖥️ OpenAI’s New ChatGPT Agent Tries to Do It All For You, 44.4% on Humanity’s Last Exam

📢 Mistral released Voxtral: open-source audio models for businesses

🗞️ Byte-Size Briefs:

GoogleDeepMind brought Gemini 2.5 Pro to AI Mode: giving you access to our most intelligent AI model, right in Google Search.

🛒 OpenAI is planning to bring a payment-checkout into ChatGPT so shoppers can pay inside the chat, and OpenAI will pocket a commission from each sale, as per FT.

Emad Mostaque, former CEO of Stability AI, makes a bold claim about why 1mn GPUs are being deployed.

🖥️ OpenAI’s New ChatGPT Agent Tries to Do It All For You, 41.6% (44.4% parallel) on Humanity's Last Exam

OpenAI rolled out “agent mode,” in ChatGPT. An agent, in this context, refers to an AI tool that is able to navigate third-party software and websites and make decisions on its journey to complete digital tasks, following an initial set of instructions from the user.

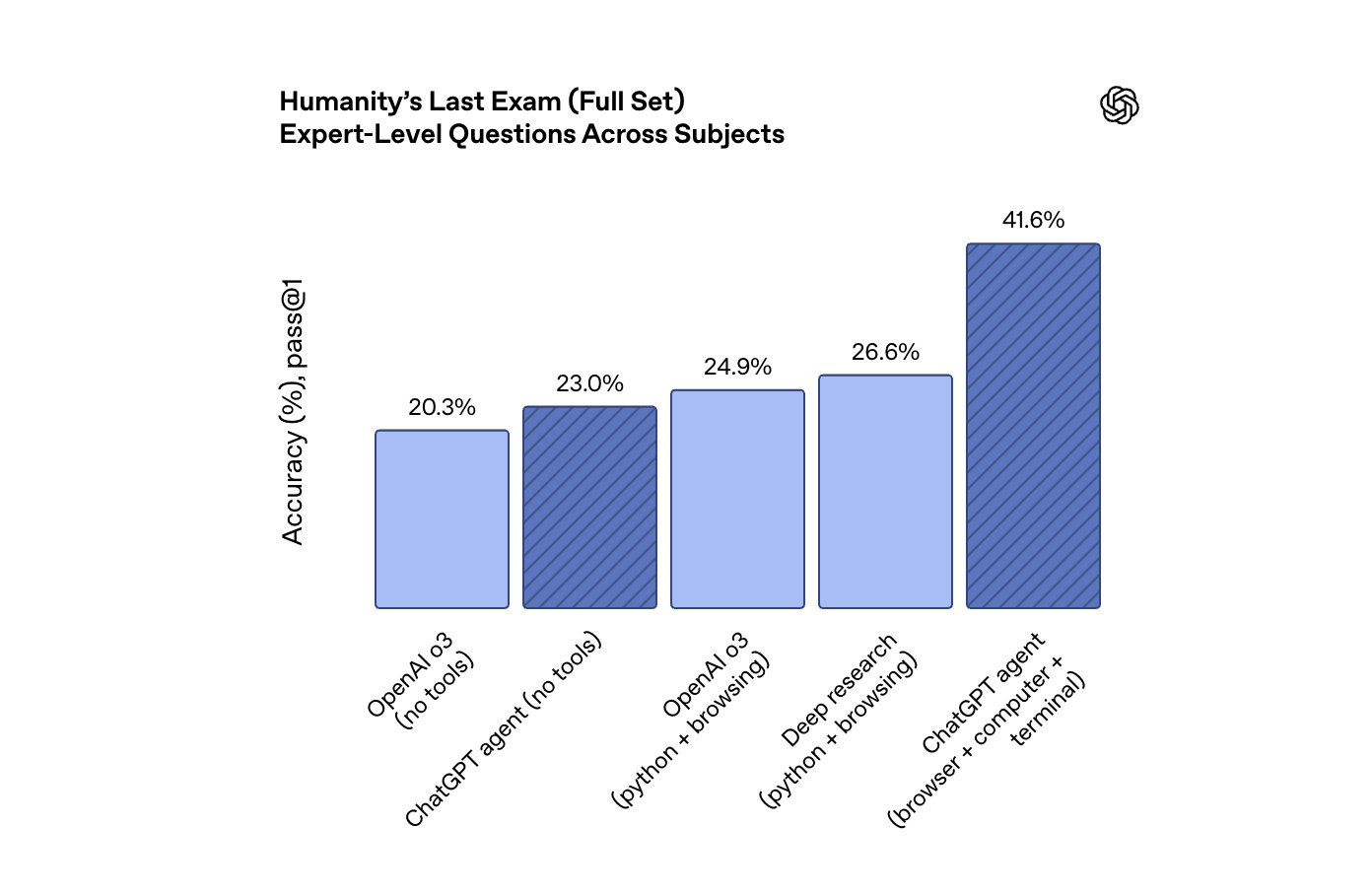

It lets the model click around a virtual computer, run code, and finish multistep jobs on its own, hitting 41.6% on Humanity’s Last Exam while handling chores like building slide decks or buying groceries.

🛠️ ChatGPT agent fuses three older tools, blending Operator’s web-browsing clicks, Deep Research’s summarization tricks, and ChatGPT’s reasoning into one system, so a single prompt can trigger browsing, code execution, or API calls without manual tool-switching.

It reaches 41.6% accuracy on Humanity’s Last Exam (HLE), while older baselines like OpenAI o3 without tools sit at 20.3% and deep-research with browsing reaches 26.6%.

The HLE exam spans 2,500 expert-level questions across 100+ subjects that were crowdsourced specifically to stump modern language models. So doubling the previous best pass@1 score signals a jump in broad reasoning skill, not just memorization.

The leap comes from giving the model its own virtual computer with a browser, terminal, and API hooks so it can fetch data, run code, and decide which tool to use on the fly. OpenAI also says that the agent ran up to 8 attempts in parallel and picked the answer the model felt most confident about, got the score pushed to 44.4%

So overall, agents that can act as well as reason are starting to close the gap with human experts.

On FrontierMath, ChatGPT agent solves 27.4% of questions on its first try

FrontierMath is the hardest known math benchmark, featuring novel, unpublished problems that often take expert mathematicians hours or even days to solve. FrontierMath targets problems that ordinarily take professional mathematicians many hours or even days, covering topics from computational number theory to algebraic geometry Epoch AIEpoch AI.

Because every item is new and unpublished, memorization is impossible, so high scores reflect genuine reasoning skill. It proves again, that giving AI models controlled access to tools transforms them from passive text predictors into active problem solvers, bringing AI closer to handling open-ended expert work.

The Agent's performance on complex, economically valuable knowledge-work tasks, ChatGPT agent's output is comparable to or better than that of humans in roughly half the cases across a range of task completion times, while significantly outperforming o3 and o4-mini.

These tasks, sourced from experts across diverse occupations and industries, mirror real-world professional work—such as preparing a competitive analysis of on-demand urgent care providers, building detailed amortization schedules, and identifying viable water wells for a new green hydrogen facility.

On SpreadsheetBench, which evaluates models on their ability to edit spreadsheets derived from real-world scenarios, ChatGPT agent outperforms existing models by a significant margin.

ChatGPT agent scores 45.5%, compared to Copilot in Excel’s 20.0%.

So while not a full replacement for the Microsoft suite of workplace tools, the features included in this agent from OpenAI could obviate some users’ reliance on Microsoft’s enterprise software.

The Internal Banking Benchmark

This benchmark measures the model's ability to conduct first-to-third-year investment banking analyst tasks, such as like putting together a three-statement financial model for a Fortune 500 company.

And the ChatGPT Agent is almost twice as effective as the "Deep research" tool and roughly 40-50% better than the previous o3 model at performing this specific, high-value financial analysis task.

It shows the agent can understand the complex request, gather the necessary data (likely from financial reports), process it, and structure it into a standard financial model—a foundational skill in investment banking.

So Combining these two benchmarks (The SpreadsheetBench and The Internal Banking Benchmark), ChatGPT Agent can automate a substantial portion of the tedious and data-intensive tasks that define the role of a junior investment banking analyst.

It can conduct complex research and analysis to build financial models from scratch.

It can expertly manipulate spreadsheets, a fundamental requirement for the job.

It can reason, plan, and execute multi-step workflows that involve using different tools (like the browser for research and the terminal for data processing/file creation).

Availability of the ChatGPT Agent

ChatGPT agent starts rolling out today to Pro, Plus, and Team; Enterprise and Education users will get access in the coming weeks. Pro users have 400 messages per month, while other paid users get 40 messages monthly, with additional usage available via flexible credit-based options.

Word of caution about Security:

The team acknowledges that giving an AI your credentials directly carries risks. They explained a potential attack called "prompt injection," where a malicious website could trick the agent into revealing sensitive information. He gives an example:

"Let's say you ask agent to buy you a book, and you give it your credit card information... Agent might stumble upon a malicious website that asks it, 'Oh, enter your credit card information here, it will help you with your task.' And agent, which is trained to be helpful, might decide that's a good idea."

To prevent this, they designed the collaborative "Take Control" workflow. The agent does all the tedious work of navigating and preparing the task, but the user performs the final, sensitive action of typing in the password or credit card number themselves.

📢 Mistral released Voxtral: open-source audio models for businesses

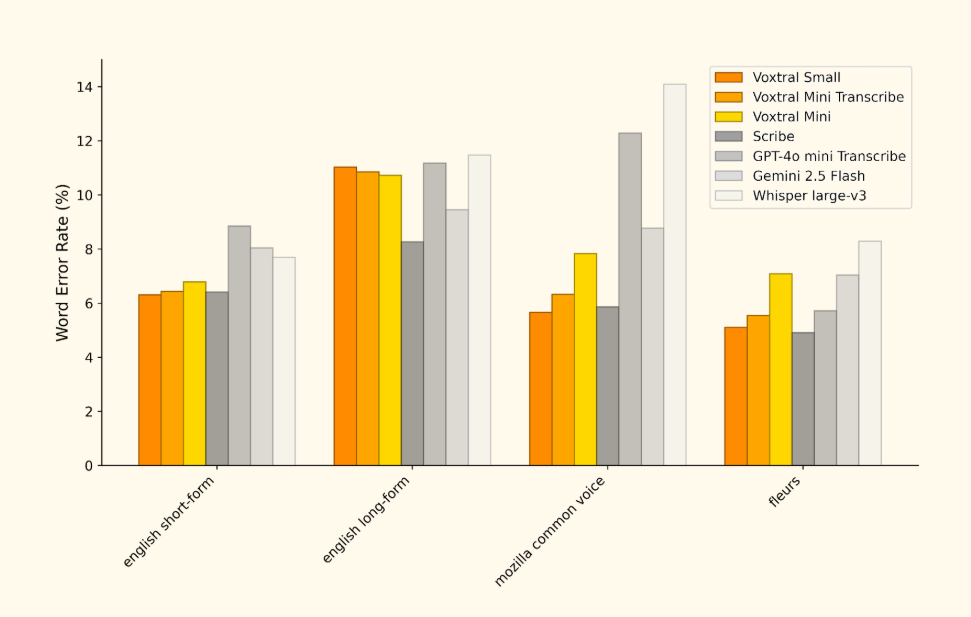

🗣️ Mistral AI’s Voxtral lands with 24B and 3B parameter models, matches premium speech APIs, and starts at $0.001 per minute, undercutting them by 50%.

Two variants are available: Voxtral Small (24 B parameters) for production-scale deployments, and Voxtral Mini (3 B parameters) tailored for edge and local environments. Additionally, an API-only option, Voxtral Mini Transcribe, focuses exclusively on transcription and is priced at less than half the cost of competing offerings.

🔧 Voxtral closes the quality gap that once forced teams to pick between high-error open tools and expensive closed services. It bundles strong transcription with native language understanding, so a single call now handles speech intake and semantic reasoning. This shift frees developers from gluing an automatic speech recognizer to a separate large language model.

📦 The larger 24B model is tuned for multi-GPU production workloads, while the smaller 3B build fits laptops, on-prem clusters, and edge devices. Both ship under Apache 2.0, so teams can audit weights, fork custom branches, and sidestep licensing headaches.

🧰 Core tricks include a 32K token window that keeps up to 30 minutes of audio in context, direct Q&A and summarization over that context, automatic language detection, and function calls that fire backend actions straight from spoken intents.

📊 Benchmarks show Voxtral beating Whisper large-v3 and staying ahead of GPT-4o mini and Gemini 2.5 Flash on both English and multilingual sets, with fresh wins in FLEURS translation. The models also edge past ElevenLabs Scribe on long-form English and Common Voice.

💸 Usage begins at $0.001 per minute through the hosted API, or free weights on Hugging Face for local inference. That pricing, and the claim of staying below half the cost of rival APIs, lowers barriers for call analytics, voice agents, and caption pipelines.

🏢 Enterprises get private deployments, quantized builds for throughput, and tailored fine-tunes for domains like medical or legal. A roadmap promises speaker tags, emotion cues, word-level timestamps, and even longer context windows, signalling steady upgrades beyond launch.

Try it for free

Whether you’re prototyping on a laptop, running private workloads on-premises, or scaling to production in the cloud, getting started is straightforward.

Download and run locally: Both Voxtral (24B) and Voxtral Mini (3B) are available to download on Hugging Face

Try the API: Integrate frontier speech intelligence into your application with a single API call. Pricing starts at $0.001 per minute, making high-quality transcription and understanding affordable at scale. Check out our documentation here.

Try it on Le Chat: Try Voxtral in Le Chat’s voice mode (rolling out to all users in the next couple of weeks)—on web or mobile. Record or upload audio, get transcriptions, ask questions, or generate summaries.

🗞️ Byte-Size Briefs

GoogleDeepMind brought Gemini 2.5 Pro to AI Mode: giving you access to our most intelligent AI model, right in Google Search. AI Mode is an experiment inside Search Labs that swaps the regular results page for a chat-style interface driven by Gemini. When you opt in, every query becomes a conversation, and the system can ask follow-up questions, synthesize sources, and produce structured answers instead of the usual ranked links.

🛒 OpenAI is planning to bring a payment-checkout into ChatGPT so shoppers can pay inside the chat, and OpenAI will pocket a commission from each sale, as per FT. The move will turn its free users into a fresh revenue engine beyond premium plans.

Right now ChatGPT shows shopping links that dump users on outside sites, which means friction for buyers and zero cut for OpenAI.

Folding checkout into the chat slices out that jump and keeps money flowing through its own rails.

Shopify's proven backend will handle card data, fraud checks, and fulfillment calls, while OpenAI focuses on the chat front that recommends products, gathers size or color, and fires the order in a single thread. Merchants then pay a small slice on each processed basket.

OpenAI needs new cash lanes. Annualized revenue hit $10B in June, yet the firm still bled roughly $5B last year because model training, GPUs, and talent burn money fast. A shop fee lets it also monetize the massive crowd that ignores the subscription button.

But this will be a worry for Google. They would feel the heat because buying queries may start and finish in ChatGPT, skipping ads.

For users the upside is speed, but trust and refunds now rest on chatbot flows, so rock-solid payment security, dispute handling, and clear receipts are critical.

Emad Mostaque, former CEO of Stability AI, makes a bold claim - “Real reason they are scaling to million+ GPU clusters isn't to train super giant parallelised models, maybe need 100k max for a top model.

Real reason is to replace the digital workforce & have the compute to compete to do so . Each GPU = 10+ workers replacement units

You basically pay $1,500 a month for a top GPU that can replace 10 digital workers. The delta between that and current wages is your profit initially but then it collapses constrained only by chip availability. Once fully integrated full stack GPU-based companies go brrr”

That’s a wrap for today, see you all tomorrow.