OpenAI’s o3 Model - AGI is closer than you think

With the o3 model scoring 87.5% on the ARC-AGI benchmark (the human baseline is 85%), AGI is closer than you think

Read time: 6 min 32 seconds

⚡In today’s Edition (21-Dec-2024):

🥇 Insights into OpenAI’s o3 Model, a true major leap with a step-function improvement on the hardest benchmarks: my detailed analysis

🤖 Google’s biggest move in decades: it partners with Apptronik on humanoid robots

🗞️ Byte-Size Brief:

OpenAI released details on deliberative alignment, explaining how an o1-class model can contribute to safety and alignment studies.

🥇 OpenAI o3’s Breakthrough High Score on ARC-AGI Shows AGI may be closer than you think

🎯 ARC AGI

The biggest news from OpenAI's o3 demonstration is the near-solution of the ARC-AGI challenge.

Yesterday, OpenAI announced o3, its next-generation foundation model, which achieved a breakthrough score of 75.7% on the ARC-AGI semi-private evaluation set with low compute ($20 per task) and 87.5% with high compute.

ARC was proposed in 2019 by François Chollet to measure general fluid intelligence in an AI system and to incorporate broad, human-like priors. In mid-2024, the ARC Prize set a one-million-dollar reward for the first model surpassing 85% accuracy on a secret set of tasks. Leading up to o1, popular sentiment held that ARC would be extremely difficult to crack.

However, within months, OpenAI achieved results far exceeding earlier baselines, eventually topping 85% for the public portion of the tasks with a high-compute version of o3.

This dramatic improvement arrived just three months after o1's release. o3 resets expectations with large gains in reasoning, showing that improvements can arrive faster than predicted. Observers anticipated o1 from various pre-announcements, but the quick arrival of o3 hints at a fast-paced 2025.

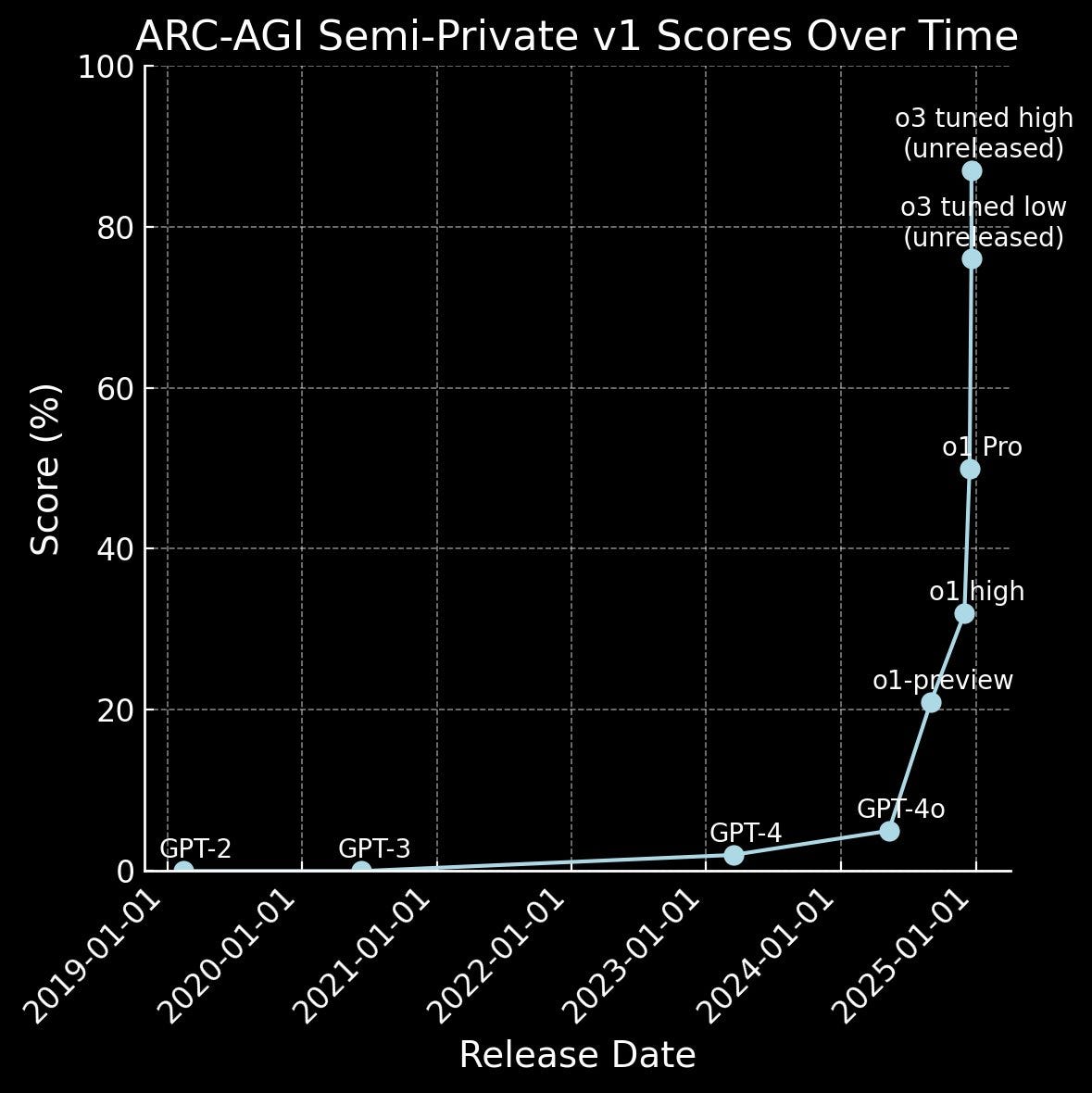

The result reveals a fundamental shift in AI capabilities. OpenAI's o3 doesn't just improve performance metrics; it demonstrates a novel approach to knowledge synthesis. The leap from 5% (GPT-4o) to 75.7% on ARC-AGI reflects a qualitative change in capability, not just an incremental improvement.

o3 marks a critical stage in a period where pretraining on internet data yields diminishing returns. It performs far better on reasoning tasks than previous approaches.

o3 demonstrates unprecedented task adaptation. According to the ARC Prize team's analysis, it appears to use natural-language program search within token space: the model generates Chain-of-Thought "programs" during inference, likely guided by an evaluator model in a way loosely similar to AlphaZero-style Monte Carlo tree search.

Source: ARC Prize blog

Despite its breakthrough scores, o3 isn't AGI: it still fails 9% of simple tasks that humans solve easily. On the upcoming ARC-AGI-2 benchmark it is expected to score under 30% even at high compute, while a smart human would still be able to score over 95% with no training. Key limitations include dependence on human-labeled data, indirect task execution, and high compute costs.

ARC Prize continues through 2025, targeting an open-source solution that scores 85% on the fully private evaluation set within a fixed compute budget ($0.10 per task).

The graph below shows ARC-AGI scores for the past five years of OpenAI models (updated with release dates) and the beautiful ascent of the o3 model.

ARC-AGI-1 took four years to go from 0% with GPT-3 in 2020 to 5% with GPT-4o in 2024. o1 then extended it to 32% at its highest setting, and o3 at high compute pushed it to 87.5%, about 11 years' worth of progress on the GPT-3 to GPT-4o scaling curve.

SWE-Bench Verified results by o3

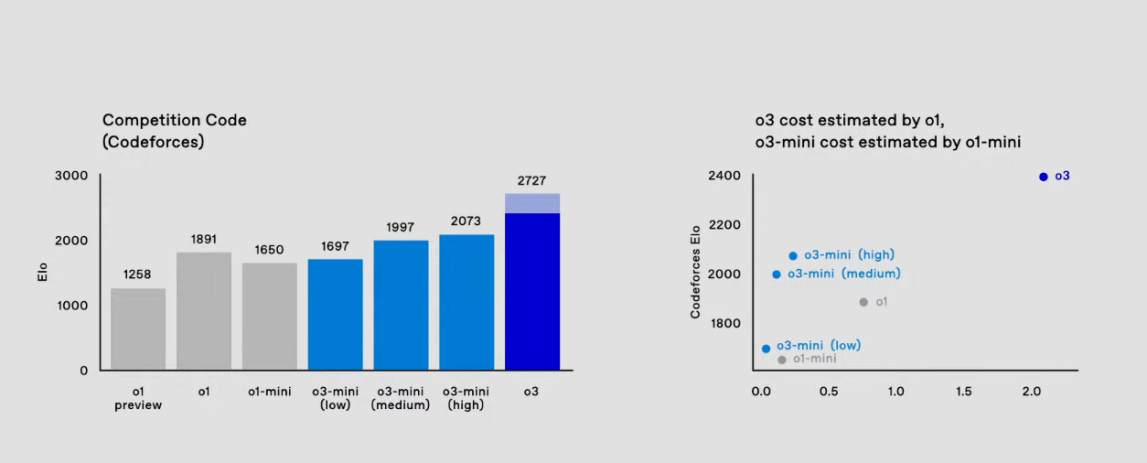

Coding challenges received equal emphasis. OpenAI pointed to a 71.7% score on SWE-Bench Verified and a Codeforces rating of 2727 with consensus-based generation, roughly the level of the top 200 human competitive programmers worldwide. Even better, o3-mini outperforms o1 while costing less to run, hinting that it could be widely adopted.

And Sam Altman says: "on many coding tasks, o3-mini will outperform o1 at a massive cost reduction! i expect this trend to continue, but also that the ability to get marginally more performance for exponentially more money will be really strange."

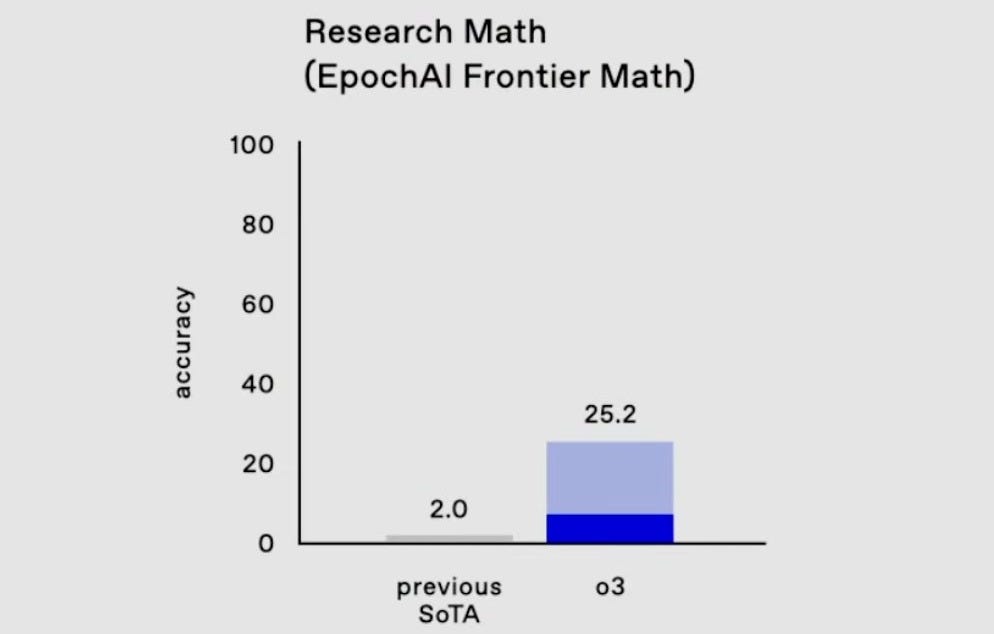

FrontierMath is particularly difficult. Two Fields Medal winners remarked that its problems are extremely challenging even for humans familiar with high-level competition math. Introduced in early November, it stands out as one of AI’s remaining open frontiers. o3 soared to 25% accuracy at its announcement, making it the only known model with double-digit performance on that benchmark.

OpenAI's earlier o1 blog post explained that the shaded areas in bar charts like the ones above represent multi-pass consensus over a large number of samples, stressing that peak performance from these models sometimes depends on generating multiple outputs before settling on an answer. That does not necessarily require explicit tree search or a special intermediate representation, but it does suggest that o1 Pro and the new o3 rely on parallel generation for their best scores.

Architecture, cost, and training under the hood of o3

Amid the hype around possible search algorithms, many suspect that o3 is a straightforward extension of o1. Repeated passes, consensus approaches, and other well-known inference methods could explain how these scores were reached. One strong possibility is that o3 is simply a bigger or more carefully fine-tuned version of o1, without a novel architecture.

Consensus approaches generate multiple candidate outputs from the LLM in parallel, then select the most common or most plausible one. This is typically done through repeated sampling and majority voting, which reduces the chance that a single random or flawed line of reasoning determines the final answer.
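
A minimal Python sketch of majority voting, assuming each model call returns a short final-answer string that can be compared exactly. `sample_fn` and the fake model outputs are hypothetical stand-ins, not an OpenAI API; real systems may also normalize answers or weight votes, which this sketch leaves out.

```python
import itertools
from collections import Counter
from typing import Callable, List, Sequence


def majority_vote(candidates: Sequence[str]) -> str:
    """Return the most frequent answer; ties go to the answer seen first."""
    if not candidates:
        raise ValueError("need at least one candidate answer")
    # Counter.most_common orders equal counts by first appearance.
    answer, _count = Counter(candidates).most_common(1)[0]
    return answer


def consensus_answer(sample_fn: Callable[[str], str], prompt: str, n: int) -> str:
    """Draw n independent samples for one prompt, then majority-vote them.

    sample_fn is a hypothetical stand-in for a single stochastic model call.
    """
    samples: List[str] = [sample_fn(prompt) for _ in range(n)]
    return majority_vote(samples)


# Usage with a fake, deterministic "model" that is right 3 times out of 5.
fake_outputs = itertools.cycle(["42", "41", "42", "17", "42"])
print(consensus_answer(lambda _prompt: next(fake_outputs), "What is 6 x 7?", n=5))  # prints 42
```

Two of the five samples are wrong, but because they disagree with each other, the vote still lands on the answer the majority of samples share.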

This matters for inference-time compute: every extra pass or sample adds significant computational overhead, but it also boosts accuracy on difficult tasks. Models like o3 appear to rely on these expanded sampling strategies for their highest scores, illustrating that scaling consensus methods can yield notable gains when enough resources are available.
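
To make the cost/accuracy trade-off concrete, here is a toy calculation under strong simplifying assumptions: each sample is independently correct with probability `p`, every answer is simply right or wrong, and cost grows linearly with the number of samples `n`. The value `p = 0.6` is purely illustrative, not a measured o3 figure, and real model samples are correlated, so actual gains are usually smaller.

```python
from math import comb


def majority_accuracy(p: float, n: int) -> float:
    """Probability that a strict majority of n independent samples is correct.

    Toy model: each sample is correct with probability p, independently of
    the others, and every answer is either right or wrong. Odd n avoids ties.
    """
    if n % 2 == 0:
        raise ValueError("use an odd number of samples to avoid ties")
    needed = n // 2 + 1  # smallest number of correct votes that forms a majority
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(needed, n + 1))


# Compute grows linearly with n (n model calls); accuracy grows much more slowly.
for n in (1, 3, 5, 11, 51):
    print(f"samples={n:>2}  relative cost={n:>2}x  accuracy={majority_accuracy(0.6, n):.3f}")
```

With `p = 0.6`, accuracy rises from 0.600 with one sample to 0.648 with three and about 0.683 with five, while cost grows 1x, 3x, 5x. If `p` is below 0.5, the same formula shows voting making things worse (the Condorcet jury theorem), so extra samples only pay off when single samples are already more often right than wrong.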

The focus on consensus sampling (majority voting over N samples; pass@N, by contrast, counts a task as solved if any one of the N samples is correct) aligns with reports that o3 may generate tens of thousands of tokens for each prompt. By distributing each query across many parallel samples, the system can select the final answer as the most frequent or most coherent output. This process is not a newly invented tree search, but it fits the widely accepted principle that more compute yields better performance.
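
pass@N and majority voting can be computed from the same set of samples yet give different verdicts. A small sketch, using the unbiased pass@k estimator from the Codex paper (Chen et al., 2021) and made-up answer strings for a single hypothetical task; note that pass@k needs a ground-truth checker, while consensus only needs the answers themselves.

```python
from collections import Counter
from math import comb
from typing import Sequence


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate (Chen et al., 2021).

    n = samples generated, c = how many of them are correct, k = budget.
    Returns the probability that at least one of k samples drawn without
    replacement from the n is correct.
    """
    if n - c < k:
        return 1.0  # every size-k subset must contain a correct sample
    return 1.0 - comb(n - c, k) / comb(n, k)


def consensus_correct(samples: Sequence[str], reference: str) -> bool:
    """Grade only the most frequent answer (ties: first seen wins)."""
    top_answer, _count = Counter(samples).most_common(1)[0]
    return top_answer == reference


# Illustrative samples for one task whose correct answer is "7".
samples = ["7", "3", "7", "5", "3", "3"]
n, c = len(samples), samples.count("7")
print(pass_at_k(n, c, k=1))             # about 0.333
print(pass_at_k(n, c, k=n))             # 1.0: at least one sample is right
print(consensus_correct(samples, "7"))  # False: the majority answer is "3"
```

The same six samples count as a solve under pass@6 but as a miss under consensus, which is why multi-sample scores are only comparable when they state which aggregation they used.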

It is also unclear whether o3 is built on Orion (a possible GPT-5 base model) or is a smaller but more refined model. The ARC logs and hints from OpenAI suggest that o3 is an RL-heavy extension of o1 rather than something entirely new. Whatever the specifics, o3 appears to be part of a broader strategy that layers reinforcement learning and large-scale training on top of a core LLM.

🤖 Google’s biggest move in decades: it partners with Apptronik on humanoid robots

🎯 The Brief

Google DeepMind teams up with Apptronik for AI-powered humanoid robotics, combining DeepMind's AI expertise with Apptronik's hardware capabilities to develop advanced humanoid robots for industrial applications.

⚙️ The Details

→ Apptronik, spun out of UT Austin's Human Centered Robotics Lab, brings a decade of robotics expertise and has developed 15 different robots, including NASA's Valkyrie robot. Its latest creation, Apollo, stands 5'8", weighs 160 pounds, and is designed for industrial tasks alongside humans.

→ The partnership leverages Google DeepMind's advanced AI systems, including Gemini models, focusing on machine learning, engineering, and physics simulation for real-world robotics applications.

→ This marks Google's strategic return to humanoid robotics after selling Boston Dynamics, this time with an AI-first approach rather than a pure hardware focus. Apptronik's existing partners include GXO and Mercedes-Benz, and its team of 150 employees is working to expand applications to manufacturing, logistics, healthcare, and home environments.

🗞️ Byte-Size Brief

OpenAI also released details on deliberative alignment, explaining how an o1-class model can contribute to safety and alignment studies. Their findings give early clues to a bigger question: whether enhanced reasoning helps in areas not strictly tied to verified outputs.

Hi,

For my part, like many of my colleagues, I consider GPT-4o better than GPT-3.5, GPT-4, and o1: it suits me better because of its answer style, its depth, and its alignment with my expectations. I believe o1 has been optimized for different use cases, with adjustments that do not match common habits or preferences. I like the detailed answers and structured interactions of 4o, which make its answers more consistent with what I expect of AI. I'm sure we can do better, but o1 is not it. Although I haven't been able to try o3, I have doubts about its general capabilities.

So I don't think any single test can show that AGI may be closer than you think! No single type of test is sufficient to comprehensively assess whether AI has reached a certain level of performance or “understanding and complexity of intelligence,” which spans logic and reasoning, creativity, empathy or the ability to understand emotions, the ability to learn and adapt, and the management of uncertainty.

I question the test, and I believe we all should. But who am I?