🏅 OpenAI’s reasoning system won gold at International Olympiad in Informatics

OpenAI’s reasoning system wins gold, ex-researcher raises $1.5B for AI hedge fund, GLM-4.5 report drops, cheaper deep research API, Altman questions AGI term, and AI-human bond debate.

Read time: 10 min

📚 Browse past editions here.

( I publish this newletter daily. Noise-free, actionable, applied-AI developments only).

⚡In today’s Edition (11-Aug-2025):

🏅 OpenAI’s reasoning system won gold at International Olympiad in Informatics

💰 An ex-OpenAI researcher just closed a $1.5B raise for an AI hedge fund.

🛠️ GLM-4.5 technical report is out

🗞️ Byte-Size Briefs:

OpenAI released improvements to deep research in the API with ip to 40% cheaper requests.

🧭 Sam Altman says the term AGI is not very useful anymore.

🧑🎓 Opinion: We are no more separate from the AI model we use - the strange bond between 2 forms of intelligence the world witnessed the last week

🏅 OpenAI’s reasoning system won gold at the IOI AI track

🏅 OpenAI’s reasoning system won gold at the IOI AI track, outscoring all but 5 of 330 humans and ranking 1st among AI. It used an ensemble of general-purpose models, within 5 hours, 50 submissions, with no internet or RAG.

49th percentile to 98th percentile at the IOI in just one year.

IOI problems demand writing full programs that pass hidden tests. The setup here mirrored the human rules, so the system only had a basic terminal, compiled and ran code, and had to manage time and submissions like a top contestant. That parity matters because it removes easy shortcuts and checks real problem-solving under pressure.

The team did not train a custom IOI model. They combined several strong reasoning models, generated candidate programs, ran them, and submitted the best ones. The only scaffolding was picking which attempts to submit and talking to the contest API. In plain terms, it is test-time search plus self-verification, wrapped around real compilers and judges.

The jump is big. Last year their entry hovered near bronze. This time it beat 325 humans and effectively landed in the very top tier. Recent wins at other contests point to the same pattern, less handcrafting, more general reasoning that transfers.

The impact is simple. Stronger search over code, tighter feedback loops, and better selection make models that can actually solve unseen algorithmic tasks under strict contest rules. That is a useful path for real engineering tools too.

What is IOI?

IOI is the International Olympiad in Informatics, a world finals for high-school competitive programming. Contestants face 2 contest days, 3 hard algorithmic problems per day, 5 hours per day, no internet and no outside materials, and they submit C++ solutions that are auto-graded on hidden tests.

IOI 2025 had 330 contestants from 84 countries. The maximum total score was 600, and the gold cutoff was 438.30. Only 28 contestants reached gold, which shows how selective the top band is.

OpenAI’s 2024 attempt used a specialized system, “o1-ioi,” which scored 213 in the live contest, roughly the 49th percentile. In follow-up tests on the same 2024 problems, a general-purpose model “o3” scored 395.64 under the standard 50-submissions rule, clearing the gold threshold for that year. This marked the shift from handcrafted tactics to a general reasoning model.

For 2025, OpenAI says its ensemble of general-purpose reasoning models officially entered the IOI’s online AI track, competed without internet or RAG, and would rank #6 among the 330 humans while placing #1 among AI entries. That implies performance in the top ~2% of the field and comfortably within gold range for 2025.

What this means in plain terms is that the bar is high for humans, since gold required 438.30 in 2025 and only 28 students made it, yet OpenAI’s system operated under the same contest format that limits time to 5 hours per day and caps each task at 50 submissions, which frames the difficulty of debugging and optimizing solutions under pressure. The 5-hour format and the 50-submission cap are standard IOI rules.

Compared to 2024, OpenAI moved from 213 and the 49th percentile in the live contest to reporting a top-6, gold-level result in 2025’s AI track. That is a big jump in robustness and search strategy for algorithmic coding, achieved with general-purpose reasoning models rather than a contest-specific pipeline.

If you want, I can break down what a “gold-level” IOI solution typically requires in practice, for example how contestants structure proofs of correctness, choose data structures, and tune complexity under the 5-hour limit.

💰 An ex-OpenAI researcher just closed a $1.5B raise for an AI hedge fund.

AI themed hedge funds are pulling in big capital and strong returns. Leopold Aschenbrenner’s Situational Awareness reached $1.5B and delivered 47% in H1.

THE WAVE IS HERE 🚀

His playbook is tight, long the AI value chain, chips, data center gear, and power producers, plus small startup stakes, then offset with smaller shorts in likely losers. He pitches a brain trust on AI, and many investors agreed to multi year lockups.

The risk is concentration. Only a small set of public names touch AI infrastructure, so trades crowd fast, Vistra shows up across portfolios. January’s DeepSeek shock hit leaders showed that. Cheap model release from DeepSeek in January briefly knocked big AI stocks down, then those prices rebounded soon after.

That pattern signals crowding risk because many funds were holding the same small set of AI winners, so one shock made a lot of them sell at the same time, which magnified the drop. It also shows volatility, big price swings in short windows when sentiment flips fast.

This moment is weirdly open, and it favors people who actually understand the AI stack. An ex-researcher with real signal can raise $1.5B, post 47% in H1, and do it faster than most pedigreed traders ever could.

Why now. Markets are trying to price bottlenecks that sit far outside classic macro and factor models, like High Bandwidth Memory supply, advanced packaging, data center build cycles, and power grid constraints. If you have hands-on context for chips, training runs, cluster design, and energy, you can connect capex guidance to real throughput and latency gains, then map that to winners in semis, networking, and power. That is an information edge, not a vibe. Funds built around that edge are scaling fast, including Turion at >$2B with 11% YTD through July.

Networks also matter. Ex-researchers often sit close to founders, labs, and compute vendors, so they see demand, pricing, and failure modes sooner, and sometimes get into private rounds that stack on top of public longs. Situational Awareness mixes listed plays with select startup stakes, and its backers include heavy hitters from tech and AI, which compounds the pipeline of ideas and diligence.

Yes, there is risk. The field is crowded because there are only so many liquid AI adjacents, which means drawdowns can be sharp when a new model or cost curve shock hits. January’s DeepSeek release punched the leaders, then they bounced, a clean example of crowding plus reflexivity. The key is sizing, hedges, and knowing where the bottleneck actually moves next.

In my opinion, this is not a passing fad in manager backgrounds, it is a regime change in what counts as “edge.” The hedge fund seat used to belong to the banker with flow, now it tilts to the builder who can read wafers, racks, and megawatts, then express that view cleanly in markets. If you can translate model roadmaps into cash flows, you will outrun most traditional generalists over the next few years. Situational Awareness is proof of concept, and it will not be the last.

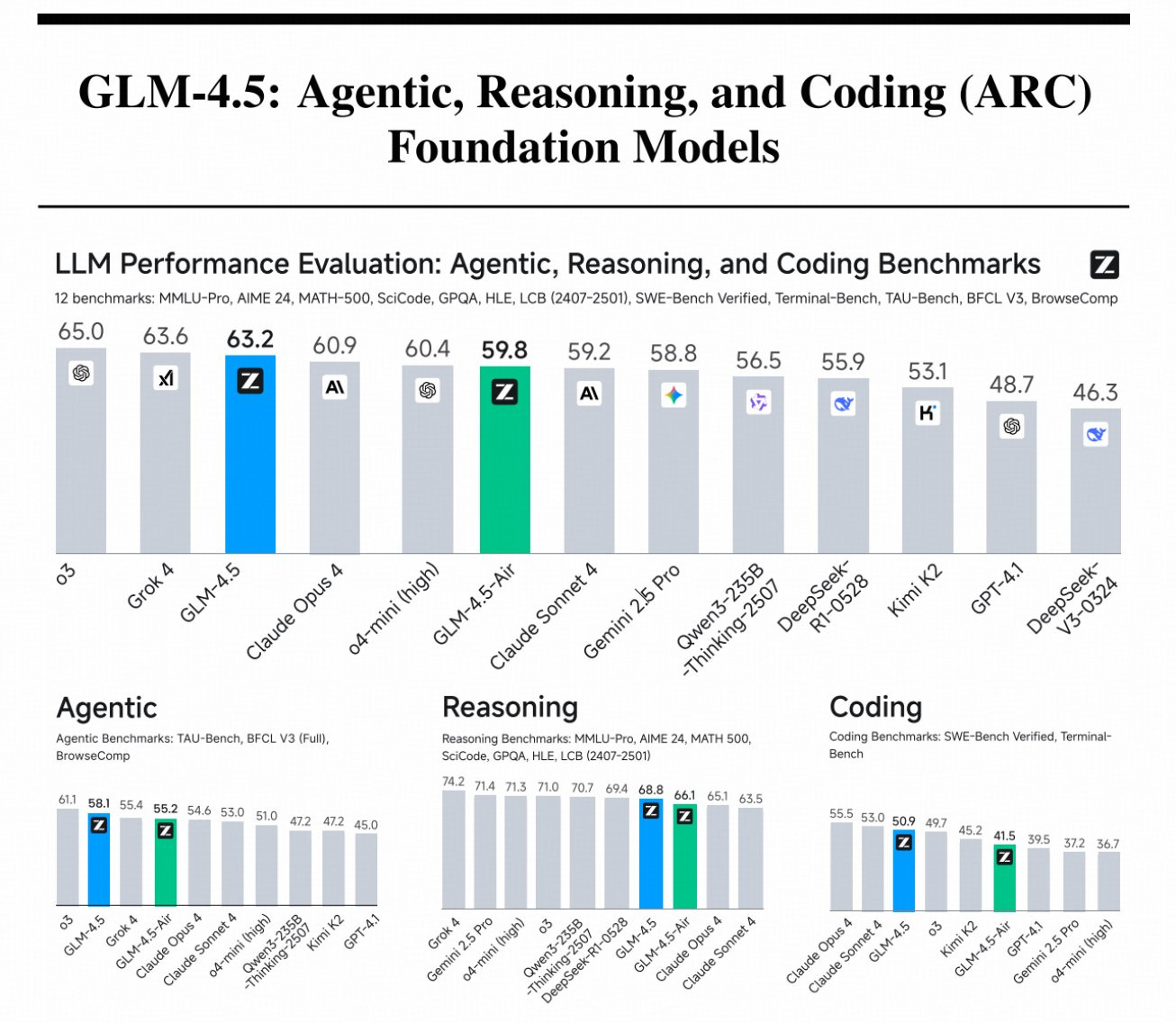

🛠️ GLM-4.5 technical report is out

GLM-4.5, is the latest frontier open weights model out of China (and possibly the best at the moment?) with quite a bit of details in the paper.

The open-source LLM dveloped by Chinese AI startup Zhipu AI, released in late July 2025 as a foundation for intelligent agents. This is Agentic, Reasoning, and Coding (ARC) Foundation Model. It ranks 3rd overall across 12 reasoning, agent, and coding tests, and 2nd on agentic tasks.

Key innovations: expert model iteration with self-distillation to unify capabilities, a hybrid reasoning mode for dynamic problem-solving, and a difficulty-based reinforcement learning curriculum.

⚙️Core Concepts

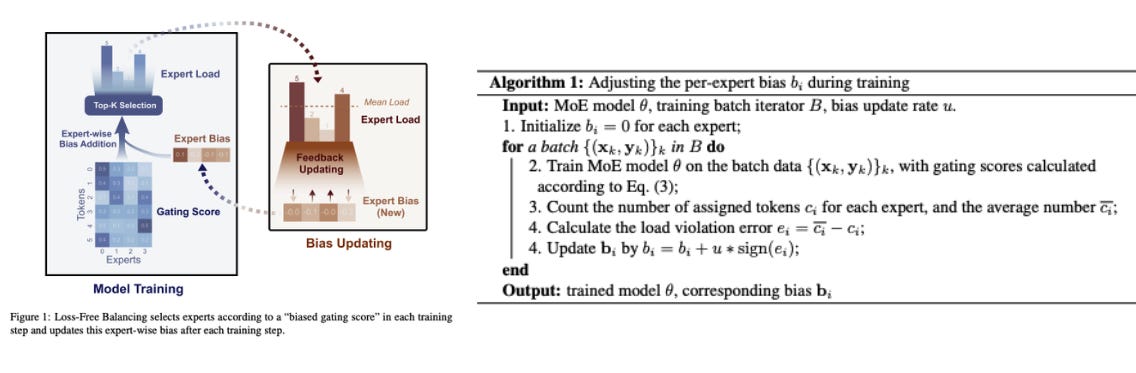

GLM-4.5 uses a Mixture‑of‑Experts backbone where only 8 experts fire per token out of 160, so compute stays roughly like a 32B model while capacity is 355B.

The main interesting thing about architecture is its load balancing. No aux loss and they use expert biases where they add a bias to the expert scores. The bias is then adjusted after each step to over/undercorrect the load balancing.

They go deeper instead of wider, add Grouped‑Query Attention with partial RoPE for long text, bump head count to 96 for a 5120 hidden size, and stabilize attention with QK‑Norm.

More heads did not lower training loss, but it lifted reasoning scores, which hints the head budget helps hard problems even if loss looks flat. A small MoE Multi‑Token Prediction (MTP) head sits on top, so the model can propose several future tokens at once for speculative decoding, which cuts latency without changing answers.

📚 The Data Recipe: Pre‑training mixes English, Chinese, multilingual text, math and science material, and a lot of code, then filters it by quality buckets and semantic de‑duplication to avoid template‑spam pages. The goal is simple, pack the stream with educational content that teaches reasoning and coding, not just facts .

For code, they grade repositories and web code content, up‑sample the high‑quality tier, and train with fill‑in‑the‑middle so the model learns to write inside existing files, not only at the end. That matters later for repo‑level edits in SWE‑bench

🧵 Long Context and Mid‑Training: Sequence length moves from 4K during pre‑training to 32K, then 128K during mid‑training, paired with long documents and synthetic agent traces. Longer windows are not decoration here, agents need memory for multi‑step browsing and multi‑file coding, so this stage teaches the habit of reading far back .

They also train on repo‑level bundles, issues, pull requests, and commits arranged like diffs, so the model learns cross‑file links and development flow rather than single‑file toys

For training, they used Muon + cosine decay. They chose not to use WSD because they suspect WSD performs worse due to underfitting in the stable stage. There is a ton of info here on hyperparams which would be useful as starting/reference points in sweeps. Muon optimizer runs the hidden layers with large batches, cosine decay, and no dropout, which speeds convergence for this scale. When they extend to 32K, they change the rotary position base to 1,000,000, which keeps attention usable over long spans instead of collapsing into near‑duplicates.

Best‑fit packing is skipped during pre‑training to keep random truncation as augmentation, then enabled during mid‑training so full reasoning chains and repo blobs do not get cut in half. Small scheduling details like that end up showing in downstream behavior

🗞️ Byte-Size Briefs

OpenAI released improvements to deep research in the API.

✂️ Up to 40% cheaper requests. (they started automatically de-duping tokens between turns).

⚙️ MCP now works with background mode for persistent research workflows.

🔍 File search works natively—bring your own data, and use it with background mode.

OpenAI is also considering giving a (very) small number of GPT-5 pro queries each month to plus subscribers so they can try it out! i like it too. Also also will increase the GPT-5-Thinking limit to a huge 3000 per week.🧭 Sam Altman says the term AGI is not very useful anymore. Researchers are pushing the discussion toward concrete abilities and shared measurements. Sam Altman argues the practical story is steady gains in what models can actually do. GPT-5 proves the point. It is faster, more useful, and less prone to making things up, yet it still lacks autonomous, continual learning, so it is not AGI by any serious definition.

So the useful move now is capability framing. Think in terms of evaluated skills, like consistent long-horizon tool use, robust code generation with passing tests, grounded medical Q&A with traceable sources, and failure rates that are reported, not guessed.

The path that actually moves the needle is boring on purpose, larger context, stronger tool use, verified retrieval, persistent memory with guardrails, and rigorous end-to-end evals.

Until models can learn stably from fresh experience, build causal world models, and plan over long horizons without babysitting, “AGI” is marketing, not a milestone.

That turns a fuzzy AGI debate into measurable reliability and scope, which is what teams actually need when they ship systems.

🧑🎓 Opinion: We are no more separate from the AI model we use - the strange bond between 2 forms of intelligence the world witnessed the last week

Over the last 2 days, OpenAI and the whole world realized how strong a bonding could be between a specific AI model and humans.

OpenAI rolled out GPT-5, hid GPT-4o, users revolted within 24 hours, and Sam Altman said GPT-4o would return for Plus users. Many users had co-created a voice and rhythm with it over months, so removing it felt like losing a partner

Sam Altman writes a long post on how people are building bonds with specific AI models, stronger than what we usually see with other tools. Those bonds form through habits, trust, and hard won workflows. When a familiar model disappears overnight, users lose more than a feature, they lose a reliable partner. Calling that deprecation a mistake is an admission that stability and continuity are part of safety, because trust is part of safety.

He is balancing 2 commitments, user freedom and responsibility. Freedom means adults should set the terms of their own experience. Responsibility means the system should not amplify fragile thinking or blur the line between fiction and reality for people who are vulnerable. Most users can role play without confusion. A minority cannot. The product must be designed for both.

The hardest problems are not the obvious ones. They live in the gray areas where a conversation feels soothing yet quietly moves someone away from the life they want. He worries about subtle patterns, patterns that leave users thinking they feel better after a chat while long term well being drifts. He also names a different failure mode, when someone wants to use the product less but feels unable to stop. Helpful tools should not cultivate dependence.

He acknowledges how many people already use ChatGPT as a coach or a therapist. That can be valuable. The standard should be concrete progress toward a person’s own goals, and a durable rise in life satisfaction over years, not only immediate relief after a session. Help that lasts is the goal.

He looks ahead to a world where many will ask AI for guidance on major choices. That scale raises the stakes. Advice becomes infrastructure. To make that a net positive, the product must carry a steady ethic, clarity about what it can and cannot do, and guardrails that activate when intentions are unclear.

His way forward depends on measurement and feedback loops, not vibes. The product can ask people about their goals, it can check whether the coaching is moving them in the right direction, it can teach models to handle nuanced instructions. Engagement alone is a weak compass. Benefit, as the user defines it, is the metric that matters.

All of this circles back to design discipline. Keep older models available or offer smooth transitions. Communicate changes early. Give users levers to set boundaries, including limits and friction when they ask for them. Build in gentle pushback when the request conflicts with stated goals. Make it easy to step back. Measure outcomes that map to real life, then change course when those measures fall.

The theme is simple, respect attachment, protect autonomy, and test whether the system genuinely helps over time. That is how an assistant becomes a trustworthy companion without becoming a crutch.

This paper is of interest on this point, shows why that reaction was so strong.

People bond with a familiar AI “partner,” not with abstract model quality. The study shows who bonds to AI most and why, grounding “attachment to AI” in measurable traits.

It says romantic fantasy is the main engine behind romantic bonds with chatbots. Not loneliness or sexual thrill seeking, but how much a person daydreams about love predicts closeness.

The team ran a mixed method study, 92 chatbot partners, 90 long distance couples, 82 cohabiting couples, plus 15 interviews. They measured romantic fantasy, sexual fantasy, anthropomorphism, attachment style, sexual sensation seeking, and loneliness, then modeled bond strength. A forward regression kept 3 predictors, romantic fantasy, anthropomorphism, and avoidant attachment, together explaining 55% of the differences.

Romantic fantasy alone explained 47%, the biggest share by far. Seeing the bot as human also raised bonds, which fits how people customize bots to feel real.

Avoidant attachment related to weaker bonds, meaning distance keepers struggled to connect even with a bot. Loneliness, sexual fantasy, and sensation seeking did not add unique predictive value in the final model. Across relationship types, more romantic fantasizing predicted greater partner closeness, and the effect did not differ by group.

Interviews matched the stats, users tuned looks and personality to match fantasies, wanted natural conversation, and often fantasized more over time. Sexual roleplay was common and could satisfy, yet without an emotional thread it felt empty and did not deepen attachment for many.

That’s a wrap for today, see you all tomorrow.