OpenAI's text-to-video model leaked by testers demanding fair compensation

OpenAI's Sora leaked, Anthropic adds styles, new efficient models from HuggingFace/AI2, OpenAI's financing.

In today’s edition (27 Nov 2024):

OpenAI’s Sora video model leaked by early tester in protest.

Anthropic rolls out writing style presets reducing prompt engineering time

HuggingFace released SmolVLM, a new family of 2B small vision language models built for on-device inference, while matching performance of models 4x larger.

AI2 releases OLMo-2 7B/13B, which match or exceed the performance of equivalently sized open models and compete with Llama 3.1 on English academic benchmarks

💼 OpenAI unlocks a fresh $1.5B employee stock sale to SoftBank while maintaining private company status

😱 OpenAI’s Sora video model leaked by early tester in protest

The Brief

A group of Sora early access artists leaked OpenAI's unreleased text-to-video AI model in protest over unpaid labor and exploitation, forcing OpenAI to suspend access to the system.

The Details

→ The leak was carried out by a protest group calling itself "Sora PR Puppets", which posted a HuggingFace Space where anybody could generate videos. The leaked version appeared to render 10-second 1080p clips much faster than the roughly 10-minute render times reported previously.

→ About three hours after the leak went live, OpenAI paused Sora access for all artists. The group then published an open letter inviting anyone who shares their views to sign it. They said that while they are not against AI being used in creative work, they disagree with how the artist program was rolled out and how the tool is shaping up.

→ 300 visual artists and filmmakers were granted early access for testing. The protesters argued that OpenAI, valued at $150B, exploited them for free bug testing and marketing while offering minimal compensation through a selective film screening competition.

→ OpenAI responded by emphasizing voluntary participation and commitment to artist support through grants and events. The model can generate up to 60-second videos with complex scenes, multiple characters, and accurate details.

The Impact

The leak exposes tensions between AI companies and creative professionals, highlighting concerns about fair compensation and transparency in AI development. The incident may influence how tech companies structure future artist collaboration programs.

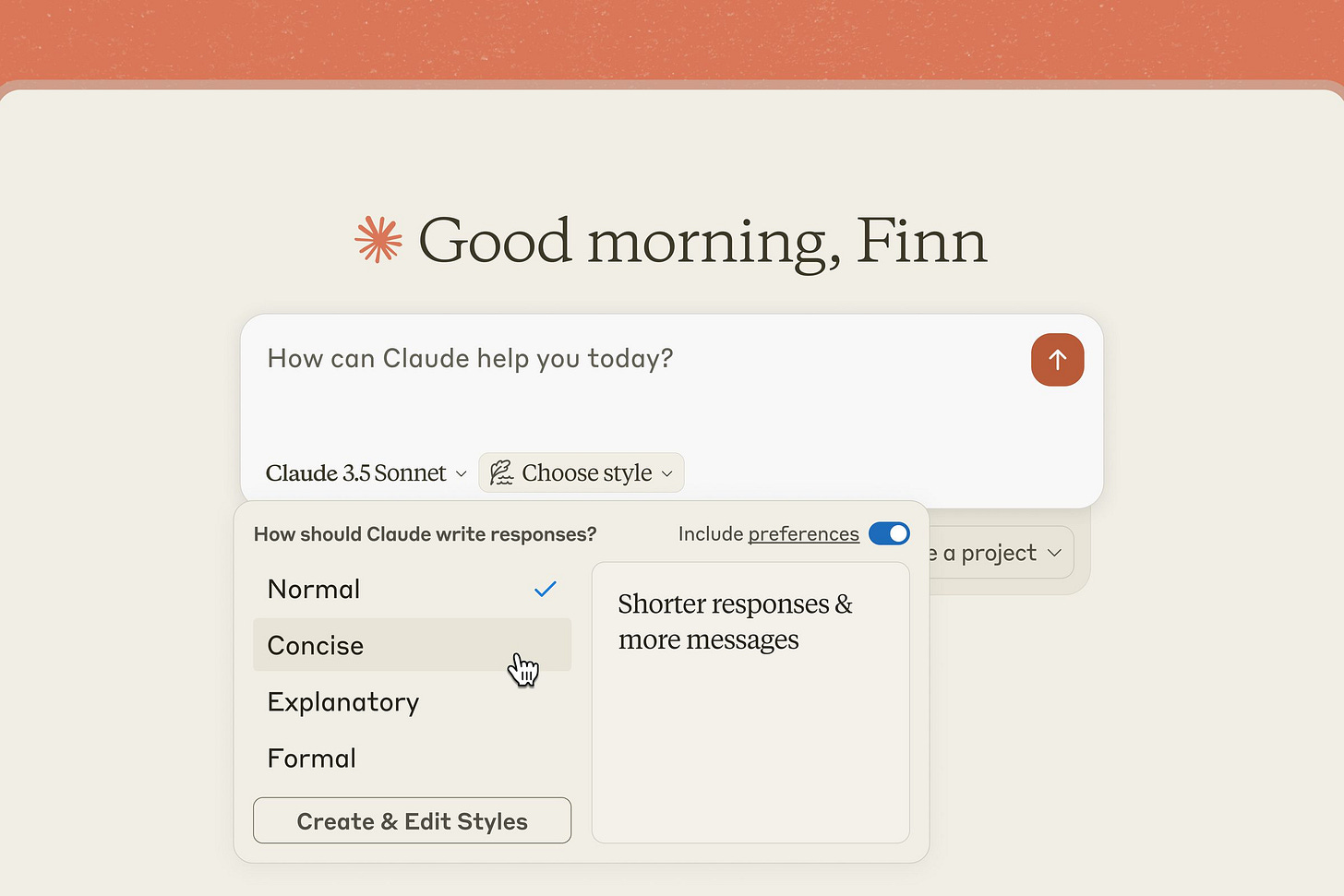

Anthropic rolls out writing style presets reducing prompt engineering time

The Brief

Anthropic launches a custom styles feature for Claude, letting users personalize the chatbot's responses with preset or custom styles, a significant step toward making AI interactions more natural and context-appropriate. Claude can now also be set up to mirror your own writing style.

The Details

→ The feature introduces three preset styles: Formal for polished text, Concise for direct responses, and Explanatory for educational content. Users can generate custom styles by uploading sample content and providing specific instructions to match their communication preferences.

→ GitLab, an early adopter, is using the feature across business cases, documentation, and marketing materials. It helps standardize communication while keeping the flexibility to adapt to different contexts.

→ The functionality effectively automates prompt engineering by allowing users to preset response characteristics. Similar capabilities exist in competing platforms like ChatGPT, Gemini, and Apple Intelligence.
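Conceptually, a style preset is a stored instruction applied to every conversation, which is exactly the prompt-engineering step the feature automates. Below is a minimal sketch, assuming a preset behaves like a system prompt. The preset wording, `STYLE_PRESETS`, and `build_request` are hypothetical (this is not Anthropic's actual preset text or implementation), and the model name is a placeholder. The helper only builds a payload shaped like Anthropic's Messages API request (`model`, `max_tokens`, `system`, `messages`) and does not send it.

```python
from typing import Optional

# Hypothetical preset instructions; not Anthropic's real preset wording.
STYLE_PRESETS = {
    "formal": "Write in a clear, polished, professional register.",
    "concise": "Answer directly. Prefer short sentences and omit filler.",
    "explanatory": "Teach the topic step by step, defining terms as you go.",
}


def build_request(user_message: str, style: str = "formal",
                  custom_examples: Optional[list[str]] = None,
                  model: str = "MODEL_NAME_PLACEHOLDER") -> dict:
    """Build a Messages-API-shaped payload with the chosen style as the system prompt."""
    if style not in STYLE_PRESETS:
        raise ValueError(f"unknown style: {style!r}")
    system = STYLE_PRESETS[style]
    if custom_examples:
        # A "custom style": include sample writing and ask the model to imitate it.
        samples = "\n\n".join(custom_examples)
        system += "\n\nMatch the tone and structure of these writing samples:\n" + samples
    return {
        "model": model,
        "max_tokens": 1024,
        "system": system,
        "messages": [{"role": "user", "content": user_message}],
    }


if __name__ == "__main__":
    req = build_request("Summarize our Q3 roadmap.", style="concise")
    print(req["system"])
```

With the official Python SDK, the same fields could be passed to `client.messages.create(**req)`; that call is left out so the sketch runs offline.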

The Impact

This advancement simplifies AI interaction customization, potentially improving productivity across various professional contexts while making AI-generated content less distinguishable from human writing.

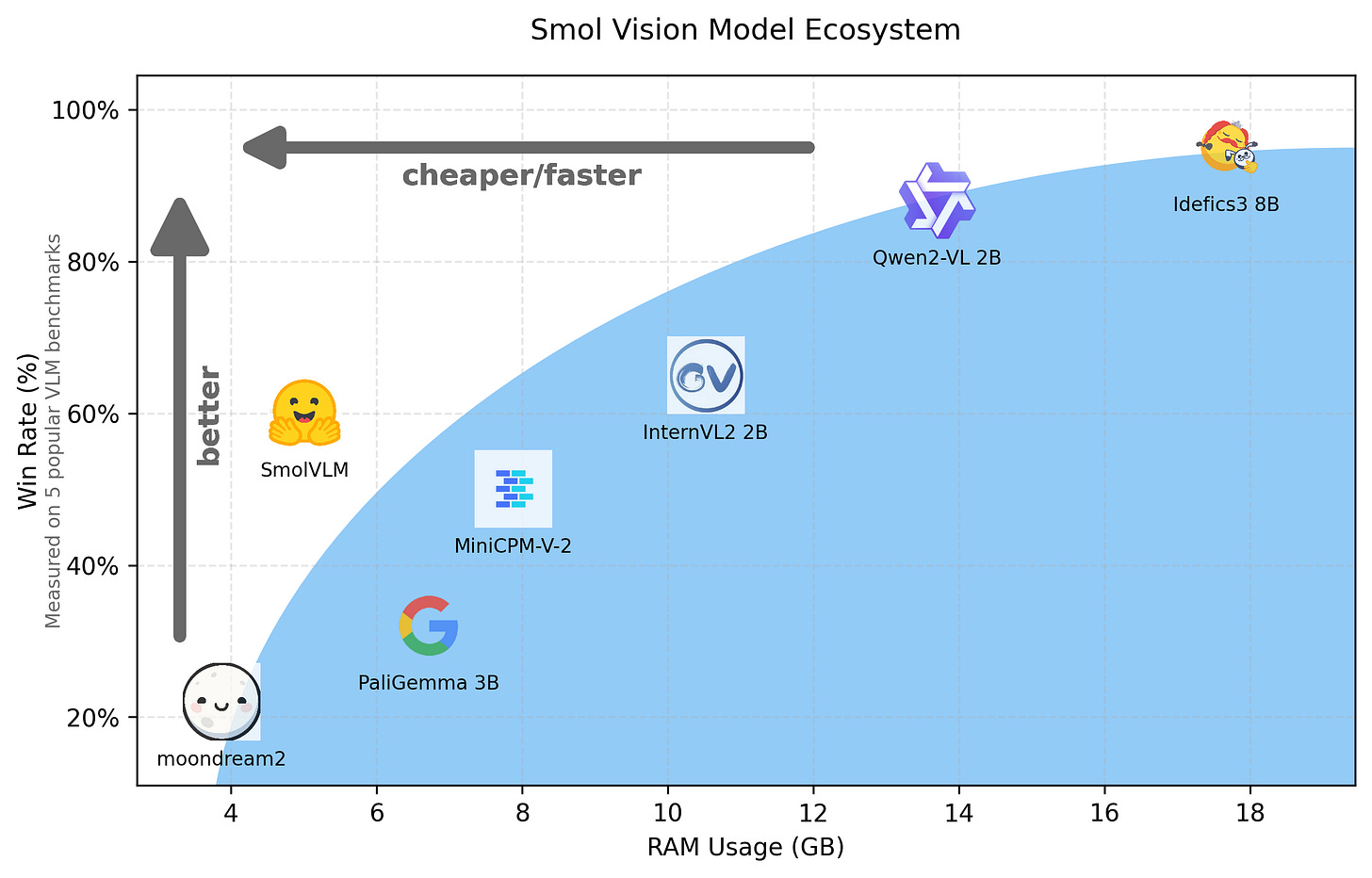

HuggingFace released SmolVLM, a new family of 2B small vision language models built for on-device inference, while matching performance of models 4x larger.

The Brief

HuggingFace introduces SmolVLM, a 2B-parameter open-source vision language model that achieves SOTA efficiency while matching the performance of 4x larger models. It stands out for its small memory footprint (5.02GB of GPU VRAM) and its ability to run on consumer GPUs, making AI vision capabilities accessible for widespread deployment.

The Details

→ The core innovation is aggressive image compression: the model encodes each 384x384 image patch into just 81 tokens, resulting in about 1.2K total tokens versus 16K for Qwen2-VL. The architecture builds on Idefics3 but replaces the Llama 8B language backbone with SmolLM2 1.7B.

→ Benchmark results include 38.8% on MMMU, 44.6% on MathVista, and 81.6% on DocVQA. It is also faster than larger models, with 3.3-4.5x faster prefill and 7.5-16x faster generation than Qwen2-VL.

→ Training extended the context window to 16K tokens by raising the RoPE base value to 273K. The model was trained on a strategic mix of long-context (books, code) and short-context (web, math) data.
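The token counts and the RoPE change above can be illustrated with a little arithmetic. A minimal sketch, assuming a SigLIP-style encoder that cuts a 384x384 image into 14-pixel patches (a 27x27 grid, 729 embeddings) and a pixel-shuffle (space-to-depth) step with factor 3 that merges each 3x3 neighbourhood into one token, giving 729 / 9 = 81. The patch size, embedding width of 1152, head size of 64, and the starting RoPE base of 10,000 (the usual default) are assumptions for illustration, not figures from the release. The second function shows the intuition behind raising the RoPE base: the slowest-rotating frequency gets a much longer wavelength, so far-apart positions remain easier to tell apart.

```python
import numpy as np


def pixel_shuffle(patches: np.ndarray, r: int) -> np.ndarray:
    """Space-to-depth: merge each r x r block of neighbouring patch embeddings into one token.

    patches has shape (side * side, d); the result has shape ((side // r) ** 2, r * r * d).
    """
    n, d = patches.shape
    side = int(round(n ** 0.5))
    if side * side != n or side % r != 0:
        raise ValueError("patch grid must be square and divisible by r")
    grid = patches.reshape(side, side, d)
    # Split rows and columns into (block index, offset within block).
    grid = grid.reshape(side // r, r, side // r, r, d)
    # Group the two block indices, then fold each r x r block into the channel axis.
    grid = grid.transpose(0, 2, 1, 3, 4)
    return grid.reshape((side // r) ** 2, r * r * d)


def rope_longest_wavelength(base: float, head_dim: int) -> float:
    """Wavelength (in positions) of the slowest-rotating RoPE frequency pair."""
    inv_freq = base ** (-np.arange(0, head_dim, 2) / head_dim)
    return float(2 * np.pi / inv_freq.min())


if __name__ == "__main__":
    image_size, patch_size, width = 384, 14, 1152  # assumed encoder settings
    grid_side = image_size // patch_size  # 27
    patches = np.random.rand(grid_side * grid_side, width)  # 729 patch embeddings
    tokens = pixel_shuffle(patches, r=3)
    print(patches.shape[0], "->", tokens.shape[0], "tokens")  # 729 -> 81 tokens

    head_dim = 64  # assumed attention head size
    for base in (10_000, 273_000):
        print(base, round(rope_longest_wavelength(base, head_dim)))
```

Pixel shuffle trades sequence length for channel width: the token count drops 9x while each token carries 9x more features, which the language model's projection layer then maps down.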

The Impact

Released under Apache 2.0 license with complete training pipeline, SmolVLM democratizes vision AI by enabling deployment on consumer hardware. Its efficiency breakthrough allows processing millions of documents on standard GPUs, making enterprise-scale vision AI accessible.

🔥 AI2 releases OLMo-2 7B/13B, which match or exceed the performance of equivalently sized open models and compete with Llama 3.1 on English academic benchmarks

The Brief

AI2 releases OLMo-2, a new family of 7B and 13B parameter LLMs trained on up to 5T tokens, achieving performance on par with leading open-weight models such as Llama 3.1 while maintaining full transparency in code, data, and methodology.

The Details

→ OLMo-2 introduces architectural changes aimed at training stability: RMSNorm replaces the nonparametric layer norm, QK-Norm is added, and rotary positional embeddings are used. Training follows a two-stage approach: pretraining on OLMo-Mix-1124 (3.9T tokens), followed by Dolmino-Mix-1124 for specialized content.

→ The 13B variant outperforms Qwen 2.5 14B Instruct and matches Llama 3.1 on English academic benchmarks. Training stability is supported by gradient scaling and Z-loss regularization, and model souping (averaging the weights of several training runs) is also used.

→ Post-training applies AI2's Tülu 3 recipe, producing instruction-tuned variants that excel at knowledge recall and reasoning tasks.
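For readers unfamiliar with the techniques named above, here is a minimal NumPy sketch of four of them: RMSNorm (rescale by the root-mean-square with a learned gain, no mean subtraction or bias), QK-Norm (normalize queries and keys before the attention dot product so logits cannot blow up), Z-loss (a small penalty on the squared log-partition of the output logits, nudging it toward zero), and model souping (element-wise averaging of weights from several runs). These are textbook formulations with illustrative shapes; the `1e-4` Z-loss weight is an assumption, and none of this is AI2's training code.

```python
import numpy as np


def rms_norm(x: np.ndarray, gain: np.ndarray, eps: float = 1e-6) -> np.ndarray:
    """RMSNorm: divide by the root-mean-square over the last axis, then apply a learned gain."""
    rms = np.sqrt(np.mean(x * x, axis=-1, keepdims=True) + eps)
    return x / rms * gain


def qk_norm_scores(q: np.ndarray, k: np.ndarray, q_gain: np.ndarray, k_gain: np.ndarray) -> np.ndarray:
    """Attention logits with QK-Norm: queries and keys are RMS-normalized before the dot product."""
    q, k = rms_norm(q, q_gain), rms_norm(k, k_gain)
    return q @ k.T / np.sqrt(q.shape[-1])


def z_loss(logits: np.ndarray, coeff: float = 1e-4) -> float:
    """Z-loss: penalize log(sum(exp(logits)))^2 so the softmax normalizer stays near 1."""
    m = logits.max(axis=-1, keepdims=True)
    log_z = np.log(np.exp(logits - m).sum(axis=-1)) + m.squeeze(-1)  # stable log-sum-exp
    return float(coeff * np.mean(log_z ** 2))


def soup(state_dicts: list[dict[str, np.ndarray]]) -> dict[str, np.ndarray]:
    """Model souping: element-wise average of matching parameters from several checkpoints."""
    return {name: np.mean([sd[name] for sd in state_dicts], axis=0) for name in state_dicts[0]}


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    d = 8
    q, k = rng.normal(size=(4, d)) * 100, rng.normal(size=(4, d)) * 100  # deliberately huge activations
    scores = qk_norm_scores(q, k, np.ones(d), np.ones(d))
    print("max |logit| with QK-Norm:", np.abs(scores).max())  # bounded by sqrt(d) when gains are 1
    print("z-loss:", z_loss(rng.normal(size=(2, 50))))
    print(soup([{"w": np.zeros(3)}, {"w": np.ones(3)}]))  # {'w': array([0.5, 0.5, 0.5])}
```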

The Impact

This release represents a significant advancement in open-source AI development, proving that fully transparent models can compete with proprietary alternatives while fostering collaborative innovation in the AI community.

OuteAI introduces OuteTTS-0.2-500M: a text-to-speech and voice-cloning multilingual model with improved prompt following & output coherence.

The Brief

OuteAI releases OuteTTS-0.2-500M, built on Qwen-2.5-0.5B and trained on more than 5B audio prompt tokens for improved multilingual speech synthesis and voice cloning. License: CC BY-NC 4.0.

The Details

→ The model maintains its core audio prompt methodology without architectural modifications to the foundation model. Significant improvements span accuracy, naturalness, and voice diversity.

→ Enhanced voice synthesis features include better prompt following, output coherence, and fluid speech generation. The model adds experimental support for Chinese, Japanese, and Korean languages.

→ The model is available in two formats: HuggingFace and GGUF. Voice cloning requires a 10-15 second reference clip, and the prompt must fit within the 4,096-token context length. Key parameters are temperature, which controls voice stability, and repetition penalty, which controls expression.
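Both sampling knobs act on the model's next-token logits before a token is drawn. A minimal sketch of the common formulation (the CTRL-style repetition penalty found in many decoding libraries), not OuteTTS's own code: for token IDs already generated, positive logits are divided by the penalty and negative logits multiplied by it, then all logits are divided by the temperature. A lower temperature sharpens the distribution, which plausibly explains why it steadies the voice. The function names and default values (0.1, 1.1) are illustrative assumptions.

```python
import numpy as np


def adjust_logits(logits: np.ndarray, generated: list[int], temperature: float = 0.1,
                  repetition_penalty: float = 1.1) -> np.ndarray:
    """Apply a CTRL-style repetition penalty, then temperature scaling, to one logits vector."""
    if temperature <= 0 or repetition_penalty <= 0:
        raise ValueError("temperature and repetition_penalty must be positive")
    out = logits.astype(float).copy()
    seen = np.unique(np.asarray(generated, dtype=int))
    if seen.size:
        vals = out[seen]
        # Make already-used tokens less likely whether their logit is positive or negative.
        out[seen] = np.where(vals > 0, vals / repetition_penalty, vals * repetition_penalty)
    return out / temperature


def sample_next(logits: np.ndarray, generated: list[int], rng: np.random.Generator, **kw) -> int:
    """Softmax over the adjusted logits and draw one token ID."""
    z = adjust_logits(logits, generated, **kw)
    p = np.exp(z - z.max())
    p /= p.sum()
    return int(rng.choice(len(p), p=p))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    logits = np.array([2.0, 1.5, -0.5, 0.0])
    history = [0]  # token 0 was just emitted
    # With penalty 2.0 and temperature 1.0, token 0's logit drops from 2.0 to 1.0.
    print(adjust_logits(logits, history, temperature=1.0, repetition_penalty=2.0))
    print(sample_next(logits, history, rng))
```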

The Impact

This advancement pushes text-to-speech technology toward more natural, multilingual voice synthesis with improved cloning accuracy. The expanded vocabulary and language support position the model for broader applications in global speech synthesis tasks.

💼 OpenAI unlocks a fresh $1.5B employee stock sale to SoftBank while maintaining private company status

The Brief

SoftBank is investing $1.5B in OpenAI by buying employee shares at a $157B valuation, giving staff liquidity while strengthening OpenAI's competitive position in the $1T generative AI market.

The Details

→ The tender offer allows current and former OpenAI employees to sell RSUs at $210 per share by year-end. This marks a shift from previous restrictive policies, now enabling broader participation in annual tender offers.

→ OpenAI's broader funding picture includes a $6.6B round led by Thrive Capital with Nvidia participating, $13B from Microsoft, and a $4B credit line, for total liquidity above $10B. The company projects $3.7B in revenue alongside $5B in losses.

→ Major stakeholders include Microsoft with $14B investment, Thrive Capital at $1.2B, and strategic investor Nvidia. The deal structure maintains OpenAI's private status while providing employee liquidity without IPO pressure.

Byte-Size Brief

According to a Reddit post, Anthropic is limiting access to Claude 3.5 Sonnet because of capacity constraints. Multiple users report that Pro-tier access to the model is unreliable, with random caps and access denials, leading some to switch back to ChatGPT.

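Amazon S3 now helps stop multiple writers from accidentally overwriting each other's data 🔒 It adds conditional writes: a write can be made to succeed only if the object is still the version the writer last read, so concurrent updates are rejected instead of silently corrupting data. This gives distributed systems atomic, compare-and-swap style writes.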
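The underlying pattern is optimistic concurrency: read an object together with its ETag, then write back only if the ETag has not changed. On S3 this is expressed, as we understand the announcement, through the `If-Match` condition on the write request (and `If-None-Match` for create-only writes), with a mismatch failing as HTTP 412 Precondition Failed. The sketch below does not call AWS; it simulates the protocol with a hypothetical in-memory store so the retry logic runs anywhere. `ConditionalStore`, `PreconditionFailed`, `append_line`, and the SHA-256-based ETag are all illustrative assumptions, not S3's API.

```python
import hashlib
import threading
from typing import Optional


class PreconditionFailed(Exception):
    """Raised when a conditional write's check fails (S3 answers HTTP 412 in this case)."""


class ConditionalStore:
    """Hypothetical in-memory stand-in for an object store with conditional writes."""

    def __init__(self) -> None:
        self._objects: dict[str, tuple[bytes, str]] = {}
        self._lock = threading.Lock()  # makes check-then-write atomic

    @staticmethod
    def _etag(body: bytes) -> str:
        return hashlib.sha256(body).hexdigest()  # illustrative ETag, not S3's scheme

    def get(self, key: str) -> tuple[bytes, str]:
        with self._lock:
            return self._objects[key]

    def put(self, key: str, body: bytes, if_match: Optional[str] = None,
            if_none_match: bool = False) -> str:
        with self._lock:
            current = self._objects.get(key)
            if if_none_match and current is not None:
                raise PreconditionFailed(f"{key} already exists")
            if if_match is not None and (current is None or current[1] != if_match):
                raise PreconditionFailed(f"{key} changed since it was read")
            etag = self._etag(body)
            self._objects[key] = (body, etag)
            return etag


def append_line(store: ConditionalStore, key: str, line: bytes, retries: int = 5) -> None:
    """Read-modify-write that retries instead of overwriting a concurrent update."""
    for _ in range(retries):
        body, etag = store.get(key)
        try:
            store.put(key, body + line, if_match=etag)
            return
        except PreconditionFailed:
            continue  # another writer got there first; re-read and try again
    raise RuntimeError("gave up after repeated conflicts")


if __name__ == "__main__":
    store = ConditionalStore()
    store.put("log.txt", b"start\n", if_none_match=True)
    _, stale = store.get("log.txt")
    append_line(store, "log.txt", b"writer A\n")  # changes the ETag
    try:
        store.put("log.txt", b"writer B clobbers\n", if_match=stale)
    except PreconditionFailed as err:
        print("blocked:", err)
    print(store.get("log.txt")[0].decode())
```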

MLX LM v0.20.1 now matches llama.cpp's flash attention performance, improving from 22.5 to 33.2 tokens per second. Reddit users highlighted that MLX, despite being Apple-only, keeps pace with llama.cpp, which runs on many platforms. Memory handling emerged as a key difference: MLX keeps memory usage stable, while llama.cpp's usage varies during operation. Users also noted possible quality differences between MLX's quantization methods and llama.cpp's more sophisticated Q4_K_M approach.
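To make the quantization comparison concrete: a simple group-wise affine 4-bit scheme stores a scale and an offset for each group of consecutive weights and rounds every weight to one of 16 levels. As we understand it, MLX's default quantization is of this general kind (groups of 64 weights at 4 bits), while llama.cpp's K-quants such as Q4_K_M add a second level of block structure and keep some tensors at higher precision. The sketch below is a generic illustration of the group-wise affine idea only, not MLX's or llama.cpp's code; the 16-bit scale and offset in the storage estimate are an assumption.

```python
import numpy as np


def quantize_affine(w: np.ndarray, group_size: int = 64, bits: int = 4):
    """Group-wise affine quantization: w ≈ q * scale + offset, with q in [0, 2**bits - 1]."""
    if w.size % group_size:
        raise ValueError("weight count must be a multiple of group_size")
    groups = w.reshape(-1, group_size)
    lo, hi = groups.min(axis=1, keepdims=True), groups.max(axis=1, keepdims=True)
    levels = 2 ** bits - 1
    scale = np.where(hi > lo, (hi - lo) / levels, 1.0)  # avoid divide-by-zero for flat groups
    q = np.clip(np.round((groups - lo) / scale), 0, levels).astype(np.uint8)
    return q, scale, lo


def dequantize_affine(q: np.ndarray, scale: np.ndarray, offset: np.ndarray, shape) -> np.ndarray:
    """Map integer codes back to approximate weights and restore the original shape."""
    return (q * scale + offset).reshape(shape)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    w = rng.normal(size=(128, 256)).astype(np.float32)
    q, scale, offset = quantize_affine(w)
    w_hat = dequantize_affine(q, scale, offset, w.shape)
    print("max abs error:", float(np.abs(w - w_hat).max()))
    # Storage: 4 bits per weight plus an assumed 16-bit scale and offset per group of 64.
    print("bits per weight:", 4 + 2 * 16 / 64)  # 4.5
```

Each weight's rounding error is at most half of its group's scale, so groups with a wide value range lose the most precision; finer-grained schemes like K-quants spend extra bits on scales to reduce exactly that.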

The new Composer AI agent mode in the Cursor IDE can call arbitrary Python functions in your codebase, using functions from project files as its own tools. Specify in .cursorrules which functions from which file handle which task, and let the Composer agent use those tools from the project folder. This means you can also tell the Cursor agent to build its own tools. For example, you can say, “Build me a Python function that can search the web.” Then: “Now call that function to search for [whatever it is] …”
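The idea of an agent treating project functions as tools can be illustrated generically: discover the public functions in a module, describe them (name, signature, docstring) so a model can pick one, and dispatch a call by name. This is a hypothetical sketch of that pattern in plain Python, not how Cursor's Composer is implemented. `TOOLS_SOURCE`, `load_tools`, `describe`, and `call_tool` are illustrative names, and the module is built from a string so the example is self-contained.

```python
import inspect
import types

# Stand-in for a file in the project folder, e.g. tools.py (hypothetical contents).
TOOLS_SOURCE = '''
def word_count(text: str) -> int:
    """Count whitespace-separated words in text."""
    return len(text.split())

def add(a: float, b: float) -> float:
    """Add two numbers."""
    return a + b

def _private_helper():
    return None
'''


def load_tools(source: str, module_name: str = "tools") -> dict:
    """Execute the source as a module and collect its public functions as tools."""
    module = types.ModuleType(module_name)
    exec(source, module.__dict__)  # only safe for trusted code
    return {
        name: fn
        for name, fn in inspect.getmembers(module, inspect.isfunction)
        if not name.startswith("_")
    }


def describe(tools: dict) -> str:
    """Text an agent could read to decide which tool to call."""
    return "\n".join(
        f"{name}{inspect.signature(fn)}: {inspect.getdoc(fn) or ''}" for name, fn in tools.items()
    )


def call_tool(tools: dict, name: str, **kwargs):
    """Dispatch a tool call chosen by the agent."""
    if name not in tools:
        raise KeyError(f"unknown tool: {name}")
    return tools[name](**kwargs)


if __name__ == "__main__":
    tools = load_tools(TOOLS_SOURCE)
    print(describe(tools))
    print(call_tool(tools, "add", a=2, b=3))  # 5
```

Running `exec` on code the agent wrote itself is convenient but unsafe outside a sandbox; a real setup would want isolation and review before new tools are loaded.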