Optimizing Encoder-Only Transformers for Session-Based Recommendation Systems

By masking the second-to-last item instead of the last one, transformers become better at predicting shopping behavior

Original Problem 🎯:

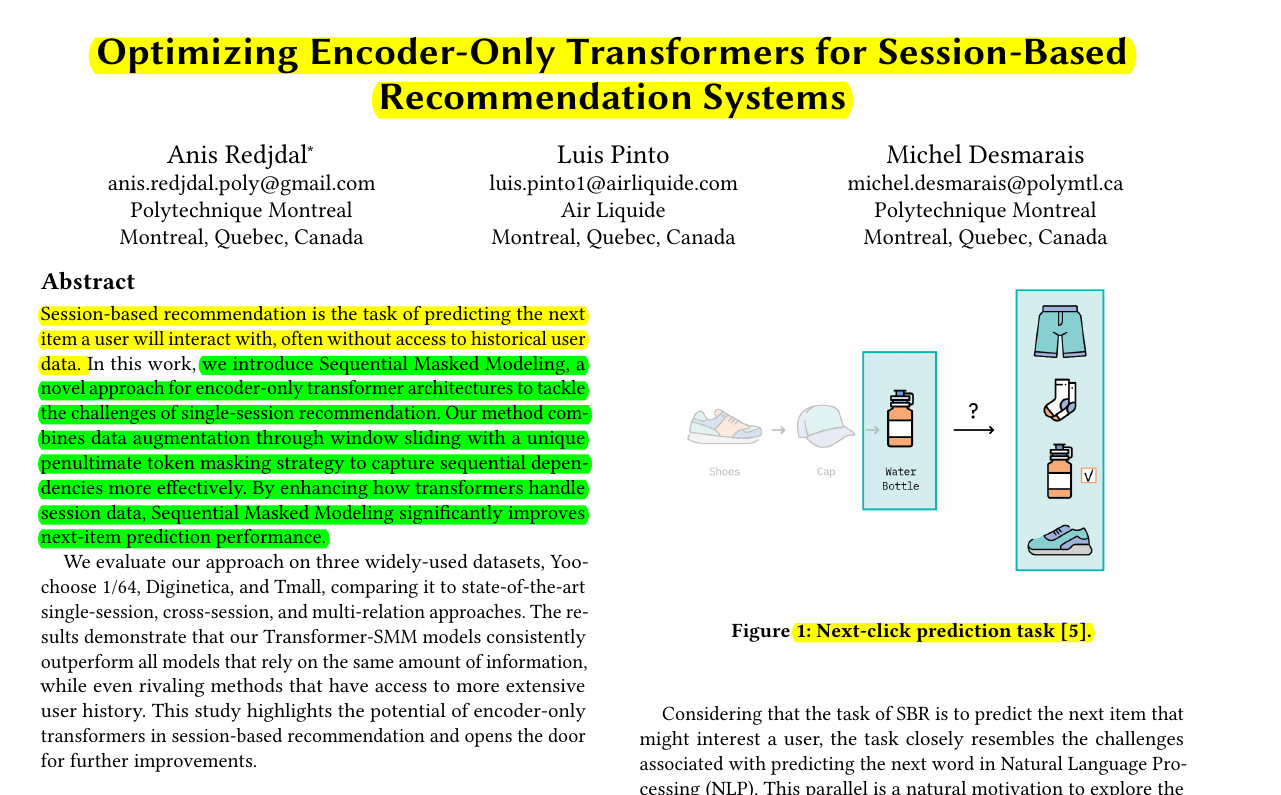

Session-based recommendation systems have little user data to work with: they must predict the next item using only the interactions within a single session. Traditional methods based on Markov chains and RNNs struggle to capture long-range dependencies in these sequences.

Solution in this Paper ⚡:

• Introduces Sequential Masked Modeling (SMM), a 2-step training approach for encoder-only transformers

• Uses sliding-window data augmentation to handle variable-length sequences

• Masks the penultimate (second-to-last) token instead of the conventional last token

• Optimizes architecture with weight tying, pre-layer normalization, and contextual positional encoding

• Applies SMM to both BERT and DeBERTa encoders to improve next-item prediction
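The two data-side ideas above, sliding-window augmentation and penultimate masking, can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the paper's implementation: the stride of 1, the window length, and the reserved `MASK_ID = 0` are all hypothetical choices, and items are plain integer IDs.

```python
from typing import List, Tuple

MASK_ID = 0  # hypothetical reserved item id standing in for the [MASK] token


def sliding_windows(session: List[int], window: int) -> List[List[int]]:
    """Split one session into overlapping sub-sequences (assumed stride of 1).

    Sessions no longer than `window` are kept whole.
    """
    if len(session) <= window:
        return [list(session)]
    return [session[i:i + window] for i in range(len(session) - window + 1)]


def mask_penultimate(seq: List[int]) -> Tuple[List[int], int, int]:
    """Replace the second-to-last item with MASK_ID.

    Returns (masked_sequence, masked_position, target_item) so a training
    loop can compute the loss only at the masked position.
    """
    if len(seq) < 2:
        raise ValueError("need at least 2 items to mask the penultimate one")
    pos = len(seq) - 2
    masked = list(seq)
    target = masked[pos]
    masked[pos] = MASK_ID
    return masked, pos, target


if __name__ == "__main__":
    session = [11, 12, 13, 14, 15]
    for w in sliding_windows(session, window=3):
        print(mask_penultimate(w))
```

Each window yields one training example in which the masked item still has context on both sides (the last item is visible), which is what a bidirectional encoder can exploit and a left-to-right model cannot.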

Key Insights 💡:

• Penultimate token masking outperforms traditional last-token masking in e-commerce scenarios

• Bidirectional attention in encoder-only models captures richer contextual information

• Sliding-window data augmentation preserves the continuity of item sequences

• Weight tying reduces parameter count while improving model generalization
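Weight tying means the output layer that scores candidate items reuses the input item-embedding table instead of learning a separate projection. The NumPy sketch below is illustrative only: the catalog size and model width are hypothetical, biases are ignored, and the parameter count covers just the embedding and output projection.

```python
import numpy as np


def count_params(num_items: int, d_model: int, tied: bool) -> int:
    """Parameters in the item embedding plus the output projection (biases ignored)."""
    embedding = num_items * d_model
    output = 0 if tied else d_model * num_items  # tying removes this whole matrix
    return embedding + output


rng = np.random.default_rng(0)
num_items, d_model = 1000, 64  # hypothetical sizes, not taken from the paper
E = rng.normal(scale=0.02, size=(num_items, d_model))  # shared item embedding table


def embed(item_ids: np.ndarray) -> np.ndarray:
    """Look up input embeddings: (seq_len,) -> (seq_len, d_model)."""
    return E[item_ids]


def tied_logits(hidden: np.ndarray) -> np.ndarray:
    """Score every item by dot product with its own input embedding.

    (seq_len, d_model) -> (seq_len, num_items); no separate output weights exist.
    """
    return hidden @ E.T
```

With these sizes, `count_params(1000, 64, tied=True)` gives 64,000 parameters versus 128,000 untied, so tying saves one full `num_items × d_model` matrix. In a real encoder, `hidden` would be the transformer's output at the masked position rather than a raw embedding.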

Results 📊:

• BERT-SMM achieves 53.49% P@20 on Diginetica (outperforming single-session baselines)

• Maintains competitive performance against models using multi-session data
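For reading the P@20 figure, the sketch below assumes the convention common in session-based recommendation work (for example, the NARM and SR-GNN evaluation code): each test case has one true next item, and P@20 is the share of test cases where that item appears among the top 20 recommendations. Whether this paper computes it identically is an assumption; the function name is hypothetical.

```python
from typing import Sequence


def precision_at_k(
    ranked_lists: Sequence[Sequence[int]],
    targets: Sequence[int],
    k: int = 20,
) -> float:
    """Share of test cases whose single target item is in the top-k list.

    Assumes one ranked recommendation list (best first) per target item.
    """
    if len(ranked_lists) != len(targets):
        raise ValueError("one ranked list per target is required")
    if not targets:
        return 0.0
    hits = sum(1 for ranked, t in zip(ranked_lists, targets) if t in ranked[:k])
    return hits / len(targets)
```

Under this convention, 53.49% P@20 means the true next item landed in the top 20 for roughly 53 of every 100 test cases.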