PAPILLON : PrivAcy Preservation from Internet-based and Local Language MOdel ENsembles

Local models now protect your privacy while still accessing powerful LLM capabilities

Local models now protect your privacy while still accessing powerful LLM capabilities

Chain small and large LLMs to get best performance while keeping data private

🔍 Original Problem:

Users share sensitive personal information with proprietary LLMs during inference, raising privacy concerns. While local open-source models help with privacy, they perform worse than proprietary models.

🛠️ Solution in this Paper:

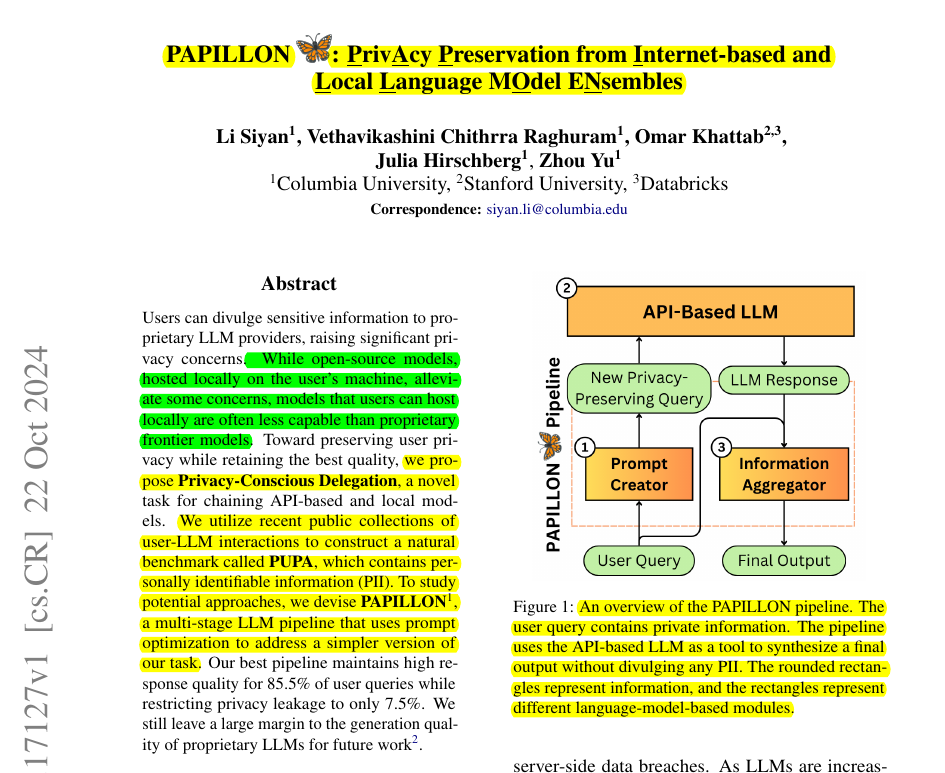

• PAPILLON: A multi-stage pipeline where local models act as privacy-conscious proxies

• Uses DSPy prompt optimization to find optimal prompts for privacy preservation

• Two key components:

Prompt Creator: Generates privacy-preserving prompts

Information Aggregator: Combines responses while protecting PII

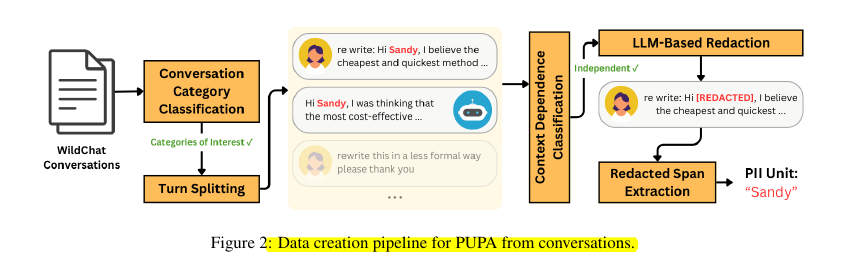

Created PUPA benchmark with 901 real-world user-LLM interactions containing PII

💡 Key Insights:

• Simple redaction significantly lowers LLM response quality

• Privacy-conscious delegation can balance privacy and performance

• Smaller local models can effectively leverage larger models while protecting privacy

• Prompt optimization improves both quality and privacy metrics

📊 Results:

• Maintains 85.5% response quality compared to proprietary models

• Restricts privacy leakage to only 7.5%

• Outperforms simple redaction approaches

• Shows consistent improvement across different model sizes

PAPILLON uses a multi-stage pipeline with:

A Prompt Creator that generates privacy-preserving prompts

An Information Aggregator that combines responses

Uses prompt optimization with DSPy to find optimal prompts

The system aims to maintain high response quality while minimizing privacy leakage.

📌 How is privacy leakage measured?

The system uses:

Quality Preservation metric comparing outputs to proprietary model responses

Privacy Preservation metric measuring percentage of PII leaked

LLM judges and crowd-sourcing validation for robustness.