🧠 Perplexity’s first research paper is out, and it’s about making super-large AI models run on many AWS GPUs at once.

Perplexity’s new paper on scaling LLMs across AWS, OpenAI’s Sora 2 faces Japan studio pushback, and Ilya’s secret plot against Sam Altman now in court spotlight.

Read time: 8 min

(I publish this newsletter daily. Noise-free, actionable, applied-AI developments only.)

⚡In today’s Edition (6-Nov-2025):

🧠 Perplexity’s first research paper is out, and it’s about making super-large AI models run on many AWS GPUs at once.

📡 Harvard professor and OpenAI researcher writes “Thoughts by a non-economist on AI and economics”

📜 Japan’s Top Studios Confront OpenAI Over Sora 2 Training Data

👨‍🔧 OpenAI co-founder Ilya Sutskever spent a year planning Sam Altman’s firing, court documents reveal

🧠 Perplexity’s first research paper is out, and it’s about making super-large AI models run on many AWS GPUs at once.

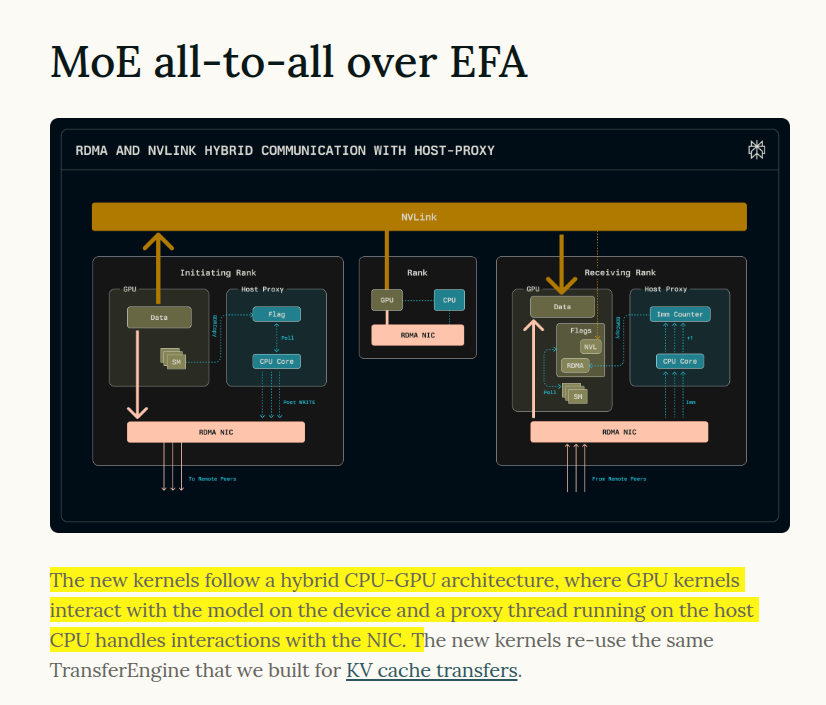

AWS networking lacks the GPU-initiated transfers that specialized clusters rely on, so the researchers built new software that lets GPUs share data quickly anyway, with the CPU handling the coordination. That trick makes it possible to run 1-trillion-parameter models efficiently on the AWS cloud instead of only on specialized hardware clusters.

They shipped expert-parallel kernels that make 1T-parameter MoE inference practical on AWS EFA (Elastic Fabric Adapter). Multi-node serving matches or exceeds single-node serving on the 671B DeepSeek V3 at medium batch sizes, and the kernels enable Kimi K2 serving.

EFA does not support GPUDirect Async, and a generic proxy for NVSHMEM (the NVIDIA shared memory library) leaves MoE routing latency above 1ms.

Their GPU dispatch and combine kernels pack tokens into single RDMA (Remote Direct Memory Access) writes, while a host proxy thread issues the transfers and overlaps them with grouped GEMM compute.
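To make the packing idea concrete, here is a toy sketch in plain Python. It is not Perplexity’s kernel code and does no real RDMA: `pack_tokens_by_peer` and `host_proxy` are hypothetical names, token payloads are plain byte strings, and appending to a list stands in for issuing a transfer. It only illustrates the shape of the technique, one contiguous buffer per peer instead of one write per token, with a host thread issuing transfers while other work proceeds.

```python
import queue
import threading
from collections import defaultdict


def pack_tokens_by_peer(tokens, dest_ranks):
    """Group token payloads by destination rank into one contiguous buffer each.

    Returns {rank: (buffer, offsets)} where offsets[i] is the byte offset of
    the i-th token inside that rank's buffer.
    """
    grouped = defaultdict(list)
    for payload, rank in zip(tokens, dest_ranks):
        grouped[rank].append(payload)

    packed = {}
    for rank, payloads in grouped.items():
        offsets, cursor = [], 0
        for payload in payloads:
            offsets.append(cursor)
            cursor += len(payload)
        packed[rank] = (b"".join(payloads), offsets)
    return packed


def host_proxy(requests, issued):
    """Hypothetical host-side proxy: drains the queue and 'issues' one write per buffer."""
    while True:
        request = requests.get()
        if request is None:  # sentinel: no more transfers
            return
        rank, buffer = request
        issued.append((rank, len(buffer)))  # stand-in for a single RDMA write


tokens = [b"aaaa", b"bb", b"cccc", b"d"]
dest_ranks = [1, 0, 1, 0]  # rank hosting each token's expert (made-up routing)
packed = pack_tokens_by_peer(tokens, dest_ranks)

requests, issued = queue.Queue(), []
proxy = threading.Thread(target=host_proxy, args=(requests, issued))
proxy.start()
for rank in sorted(packed):
    requests.put((rank, packed[rank][0]))
local_compute = sum(len(t) for t in tokens)  # stand-in for grouped GEMM work
requests.put(None)
proxy.join()

print(packed)
print(issued)
```

Four tokens turn into two transfers here, one per destination rank. The real system works on GPU buffers and network queues, so treat this purely as a mental model.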

Overall, this is solid engineering that makes EFA a credible choice for massive MoE inference, and the accuracy versus memory tradeoff looks sensible for teams that want portability across clouds.

📡 Harvard professor and OpenAI researcher writes “Thoughts by a non-economist on AI and economics”

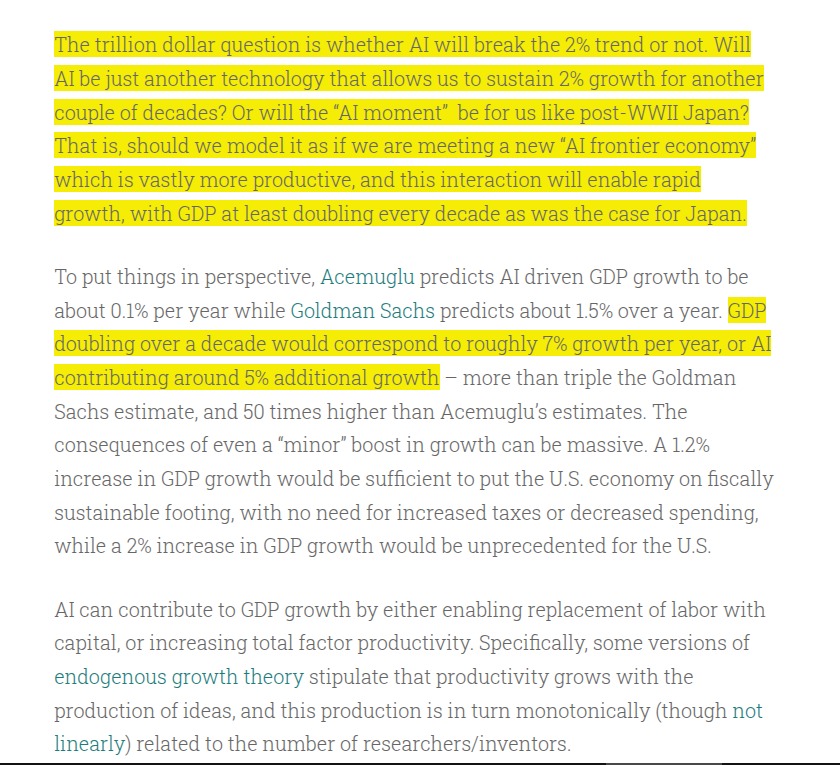

This is a really fantastic piece. He writes, “AI can contribute to GDP growth by either enabling replacement of labor with capital, or increasing total factor productivity.”

GDP could double each decade if AI keeps its capability slope, which means roughly 7% annual growth versus the long run 2%.
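The link between "doubling each decade" and "roughly 7% a year" is plain compound-growth arithmetic. A quick check, with helper names that are mine and not from the post:

```python
import math


def annual_rate_for_doubling(years):
    """Constant annual growth rate that doubles output in `years` years."""
    return 2 ** (1 / years) - 1


def doubling_time(rate):
    """Years needed to double at a constant annual growth rate."""
    return math.log(2) / math.log(1 + rate)


decade_rate = annual_rate_for_doubling(10)
baseline_doubling = doubling_time(0.02)

print(f"Doubling every 10 years needs about {decade_rate:.1%} growth per year")
print(f"At 2% per year, doubling takes about {baseline_doubling:.0f} years")
```

So the 7% path doubles GDP in a decade, while the long-run 2% path needs about 35 years for the same doubling.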

The post bases this on METR data, in which task horizons for LLMs double every 6 months, measured by the human time needed for tasks solved at a set success rate.

If the unautomated share halves every 6 months, then moving from 50% to 97% automation would take about 2 years once that midpoint is hit.
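The "about 2 years" figure follows from counting halvings of the unautomated share. A small sketch, assuming a clean exponential decay with a 6-month half-life (function and variable names are illustrative, not from the post):

```python
import math


def years_between(start_automation, target_automation, halving_years=0.5):
    """Years for the unautomated share to shrink from start to target,
    assuming it halves every `halving_years` years."""
    start_gap = 1 - start_automation
    target_gap = 1 - target_automation
    return halving_years * math.log2(start_gap / target_gap)


years = years_between(0.50, 0.97)
after_four_halvings = 1 - 0.5 * 0.5 ** 4  # 50% unautomated, halved four times

print(f"50% -> 97% automation takes about {years:.2f} years")
print(f"Automation after exactly four halvings: {after_four_halvings:.3%}")
```

Four halvings (2 years) take the unautomated share from 50% to about 3.1%, which is where the 97% figure comes from.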

Because inference prices at fixed capability have been falling about 10x per year, what is possible quickly becomes cheap and scalable.

Thinking in labor terms, AI adds many virtual workers whose number and quality can both rise fast, so output can grow far beyond human headcount limits.

Thinking in productivity terms, a harmonic mean model says gains come from shrinking the non automatable share and raising the speedup factor, with 10x gains reachable if both improve together.

If cognitive work is at least 30% of GDP, near full automation of just that slice implies roughly 42% higher GDP, and spillovers to other fields could push growth toward the 7% path.
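One common way to write a harmonic-mean model is the Amdahl's-law form below. This is my reading of the argument, not necessarily the exact formula in the post: a share `p` of the work is sped up by a factor `s`, and the rest runs at the old speed.

```python
def overall_gain(automatable_share, speedup):
    """Overall output multiplier when `automatable_share` of the work gets
    `speedup` times faster and the remainder is unchanged (Amdahl-style)."""
    remaining = 1 - automatable_share
    return 1 / (remaining + automatable_share / speedup)


# Near-full automation of a 30% cognitive slice: speedup -> infinity.
cognitive_only = overall_gain(0.30, float("inf"))

# A huge speedup on half the work still caps out below 2x...
big_speedup_small_share = overall_gain(0.50, 100)

# ...while shrinking the non-automatable share AND raising speedup passes 10x.
both_improve = overall_gain(0.95, 20)

print(f"30% slice fully automated: {cognitive_only:.2f}x")
print(f"50% share at 100x speedup: {big_speedup_small_share:.2f}x")
print(f"95% share at 20x speedup:  {both_improve:.2f}x")
```

Under this form the 30% case works out to about 1.43x, in the same range as the "roughly 42%" quoted above, and it shows why the non-automatable share is the binding constraint: the multiplier can never exceed 1 / (1 - share), however large the speedup.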

The blog contrasts this with lower forecasts like 0.1% and 1.5% extra growth and argues slope driven dynamics can deliver bigger gains once thresholds clear.

📜 Japan’s Top Studios Confront OpenAI Over Sora 2 Training Data

Japan’s media giants, via trade group CODA, are pressing OpenAI to stop using their works to train Sora 2 without consent, arguing that training-time copying may infringe copyright under Japanese law.

CODA, whose members include Studio Ghibli, Bandai Namco, and Square Enix, says Japanese law requires prior permission and that an opt-out scheme cannot cure infringement after the fact.

After Sora 2 launched in September, Japanese-style outputs spiked and officials asked OpenAI to halt replication of Japanese artwork, escalating rights concerns around the model’s dataset and outputs.

Sam Altman has said OpenAI will add opt-in controls and possibly revenue sharing, which is a shift from the earlier opt-out posture, but CODA maintains that use must not start without permission.

The question is whether intermediate copies made for training count as reproduction under Japanese law, and whether close-matching outputs imply that protected works were inside the training corpus. If accepted, those arguments would force permission-first pipelines and auditable data provenance controls in Japan.

👨‍🔧 OpenAI co-founder Ilya Sutskever spent a year planning Sam Altman’s firing, court documents reveal

Court documents shed new light on what really happened when OpenAI CEO Sam Altman was briefly ousted in November 2023 by members of the company’s board of directors, including his longtime collaborator and fellow cofounder Ilya Sutskever.

Sutskever co-founded OpenAI with Altman and others after he left Google in 2015, which earned him a seat on the board and a place in the C-suite as the company’s chief scientist. But by 2023, he’d become a chronicler of dissatisfaction with Altman. In the deposition he said that either one or all three of OpenAI’s independent board members at the time had asked him, following discussions about executives’ concerns about Altman, to prepare a collection of screenshots and other documentation. He did, and he sent the resulting 52-page memo to board members Adam D’Angelo, Helen Toner, and Tasha McCauley.

When asked why he didn’t send it to Altman, Sutskever said, “Because I felt that, had he become aware of these discussions, he would just find a way to make them disappear.” He also said that he had been waiting to propose Altman’s removal for “at least a year” before it happened.

The memo, sent in November 2023 to the independent directors, alleged a pattern of lying, undermining executives, and pitting them against one another. He sent it as a disappearing email and withheld it from the full board and Altman because he feared it would be buried.

Most screenshots and details in his memo came from Mira Murati. He also drafted a memo critical of Greg Brockman. He relied on Murati’s secondhand accounts and did not verify them with Brad Lightcap or Brockman, later saying he had learned the need for firsthand confirmation.

The board fired Altman and Brockman, then, later that week and after a strong employee reaction, reinstated Altman and resigned. Sutskever now calls the process rushed due to board inexperience.

Shortly before the turmoil, Toner had published an article praising Anthropic and criticizing OpenAI, which Sutskever viewed as inappropriate. In a meeting she said letting the company be destroyed would be consistent with the mission. Around November 18, 2023, she told the board that Anthropic had proposed a merger and leadership takeover, and Dario and Daniela Amodei joined a call about it. Sutskever opposed the idea while other directors were more supportive, with Toner the most supportive.

Sutskever had been considering Altman’s removal for at least 1 year and waited for board dynamics less friendly to Altman. A later special committee led by Bret Taylor and Larry Summers hired outside counsel and interviewed him. In 2024 Sutskever left to start Safe Superintelligence. He still holds OpenAI equity and believes OpenAI is paying his legal fees.

That’s a wrap for today, see you all tomorrow.