PHI-S: Distribution Balancing for Label-Free Multi-Teacher Distillation

A math trick using Hadamard matrices lets vision models share their knowledge without losing their unique strengths.

A math trick using Hadamard matrices lets vision models share their knowledge without losing their unique strengths.

Original Problem 🔍:

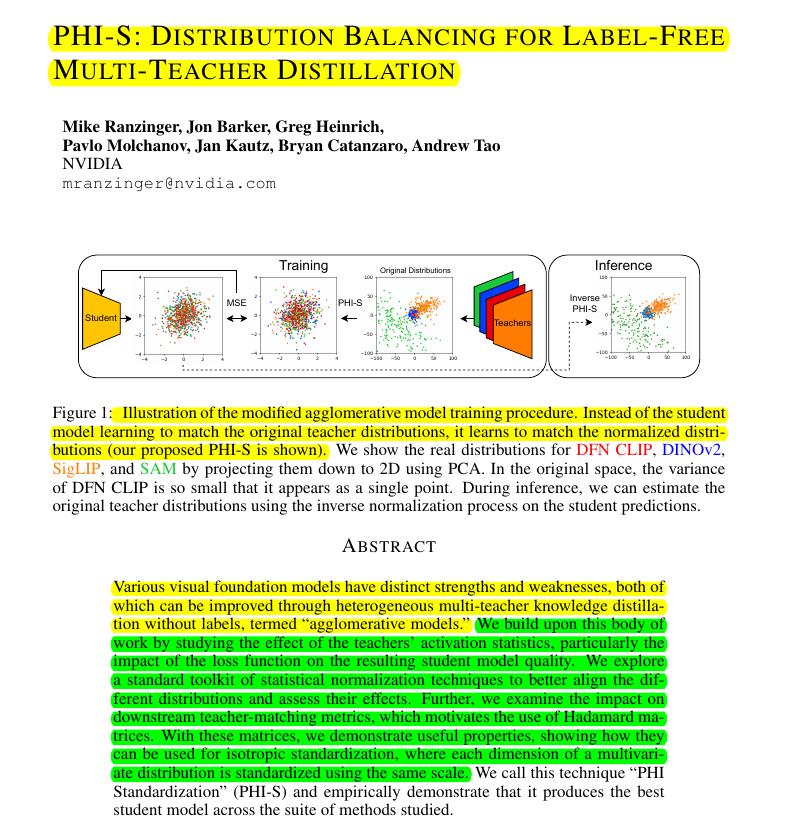

Agglomerative models fuse multiple visual foundation models through multi-teacher knowledge distillation. However, existing methods struggle with balancing the loss contributions from different teachers due to their distinct activation statistics.

Solution in this Paper 🧠:

• Introduces PHI Standardization (PHI-S) for normalizing teacher feature distributions

• Uses Hadamard matrices to rotate distributions for isotropic standardization

• Applies a single scalar value for standardization across all dimensions

• Maintains invertibility to estimate original teacher distributions

• Implements a multi-stage training process with increasing resolution

Key Insights from this Paper 💡:

• Teacher models have vastly different activation statistics

• Whitening methods can be problematic for low-rank distributions

• Isotropic normalization methods generally work best

• PHI-S balances information across all feature channels evenly

• PHI-S is robust to low-rank distributions unlike other whitening methods

Results 📊:

• PHI-S achieves lowest average rank across benchmark tasks for ViT-B/16 and ViT-L/16 students

• PHI-S produces more uniform error variances across feature channels