Prompt Tuning for Audio Deepfake Detection: Computationally Efficient Test-time Domain Adaptation with Limited Target Dataset

Inserting a small set of trainable prompts helps detect fake audio across different domains at low computational cost, adding only about 0.02% extra parameters.

Teaching AI to spot fake voices with just a whisper of extra parameters.

Original Problem 🔍:

Audio deepfake detection faces challenges in domain adaptation, limited target data, and high computational costs when fine-tuning large pre-trained models.

Solution in this Paper 🛠️:

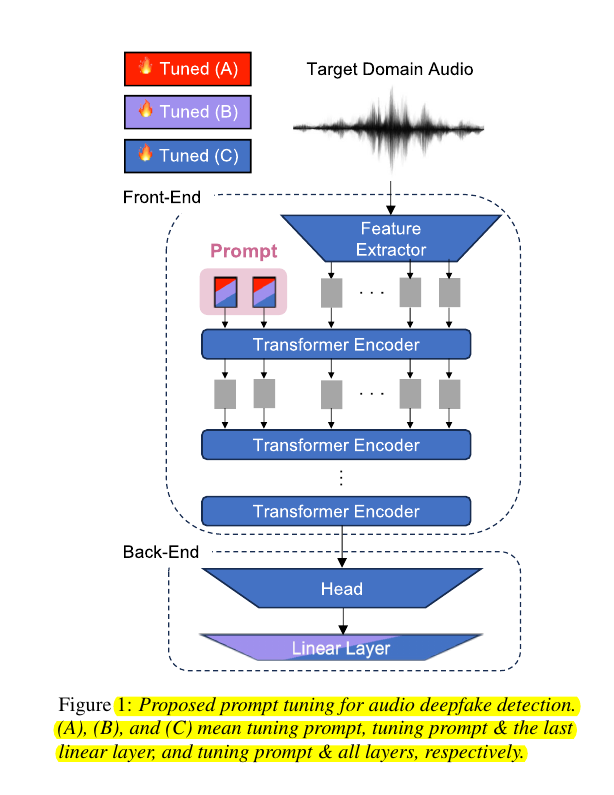

• Introduces prompt tuning for audio deepfake detection

• Inserts trainable prompt parameters into intermediate feature vectors

• Can be integrated with state-of-the-art transformer models

• Requires minimal additional trainable parameters (0.001-0.02%)

• Compatible with other fine-tuning approaches

• Effective with small prompt lengths (5-10)

Key Insights from this Paper 💡:

• Prompt tuning improves or maintains equal error rates across multiple target domains

• Performs well with as few as 10 target domain samples

• Outperforms full fine-tuning on very small target datasets

• Provides efficient domain adaptation with minimal computational resources

• Potentially applicable to other audio classification tasks facing similar challenges

Results 📊:

• Improves or maintains equal error rates (EERs) across 7 target domains for two SOTA models (W2V and WSP)

• Effective with limited target data (10-1000 samples)

• Minimal additional parameters: 0.00161% (W2V) and 0.0251% (WSP)

• Outperforms full fine-tuning on small datasets (e.g., 10 samples)

• Rapid performance saturation with short prompt lengths (5-10)
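The results above are reported as equal error rates. As a rough illustration of what that metric measures, the sketch below finds the threshold at which the false positive rate and false negative rate are closest and returns their average. The function name `equal_error_rate`, the label convention (1 = spoof, 0 = bona fide), and the "higher score means more spoof-like" convention are assumptions made for this example, not details taken from the paper.

```python
def equal_error_rate(scores, labels):
    """Approximate the EER by scanning thresholds at the observed scores.

    Assumed convention: label 1 = spoof, label 0 = bona fide,
    and a higher score means "more likely spoof".
    """
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("need at least one sample of each class")

    best_gap, eer = float("inf"), None
    for thr in sorted(set(scores)):
        # A sample is flagged as spoof when its score is >= thr.
        false_pos = sum(1 for s, y in zip(scores, labels) if s >= thr and y == 0)
        false_neg = sum(1 for s, y in zip(scores, labels) if s < thr and y == 1)
        fpr = false_pos / n_neg
        fnr = false_neg / n_pos
        # Keep the threshold where the two error rates are closest.
        if abs(fpr - fnr) < best_gap:
            best_gap, eer = abs(fpr - fnr), (fpr + fnr) / 2
    return eer


# Toy scores: one bona fide sample (0.8) scores above most spoofed samples.
toy_scores = [0.1, 0.2, 0.3, 0.8, 0.7, 0.9, 0.4, 0.6]
toy_labels = [0, 0, 0, 0, 1, 1, 1, 1]
print(equal_error_rate(toy_scores, toy_labels))
```

A lower EER means the two classes are easier to separate; perfectly separated scores give an EER of 0.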

How the Proposed Prompt Tuning Method Works 🛠️:

• Inserts trainable prompt parameters into the intermediate feature vectors of the model's front-end

• Fine-tunes only the prompt parameters and optionally the last linear layer on the target dataset

• Keeps most of the pre-trained model parameters frozen

• Can be combined with other fine-tuning approaches
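The steps above can be sketched in PyTorch. This is a minimal illustration under stated assumptions, not the authors' implementation: the class `PromptTunedDetector`, the toy transformer that stands in for a pre-trained front-end, the choice to prepend the prompts along the time axis, and all sizes and hyperparameters are hypothetical.

```python
import torch
import torch.nn as nn


class PromptTunedDetector(nn.Module):
    """Toy stand-in for a pre-trained detector with trainable prompts."""

    def __init__(self, feat_dim=64, prompt_len=5, n_layers=2, tune_head=True):
        super().__init__()
        # Hypothetical "pre-trained" front-end and classifier head.
        layer = nn.TransformerEncoderLayer(
            d_model=feat_dim, nhead=4, dim_feedforward=128,
            dropout=0.0, batch_first=True,
        )
        self.encoder = nn.TransformerEncoder(layer, num_layers=n_layers)
        self.head = nn.Linear(feat_dim, 2)  # bona fide vs. spoof

        # Freeze every pre-trained parameter.
        for p in self.parameters():
            p.requires_grad = False
        # Optionally unfreeze the last linear layer.
        for p in self.head.parameters():
            p.requires_grad = tune_head

        # Prompts are created after the freeze, so they stay trainable.
        self.prompt = nn.Parameter(torch.randn(prompt_len, feat_dim) * 0.02)

    def forward(self, feats):
        # feats: (batch, time, feat_dim) intermediate feature vectors.
        prompt = self.prompt.unsqueeze(0).expand(feats.size(0), -1, -1)
        x = torch.cat([prompt, feats], dim=1)  # assumed: prepend along time
        x = self.encoder(x)
        return self.head(x.mean(dim=1))


torch.manual_seed(0)
model = PromptTunedDetector()
trainable = [p for p in model.parameters() if p.requires_grad]
optimizer = torch.optim.Adam(trainable, lr=1e-3)

# Ten random tensors stand in for a tiny target-domain dataset.
feats = torch.randn(10, 50, 64)
labels = torch.randint(0, 2, (10,))

backbone_before = [p.detach().clone() for p in model.encoder.parameters()]
prompt_before = model.prompt.detach().clone()

for _ in range(5):
    optimizer.zero_grad()
    loss = nn.functional.cross_entropy(model(feats), labels)
    loss.backward()
    optimizer.step()

n_trainable = sum(p.numel() for p in trainable)
n_total = sum(p.numel() for p in model.parameters())
print(f"trainable share: {100 * n_trainable / n_total:.3f}%")
```

Because the optimizer only receives the prompt and head parameters, the backbone weights are left untouched. The trainable share printed for this toy model is far larger than the figures quoted in the summary, since the stand-in backbone is tiny compared with a real pre-trained model.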