PT-BitNet: Scaling up the 1-Bit Large Language Model with Post-Training Quantization

Pretty HUGE proposition in this paper - PT-BitNet: A post-training quantization method for ternary LLMs up to 70B parameters 🔥

Results 📊:

• Outperforms other low-bit PTQ methods in perplexity (e.g., 8.59 vs 11.06 for OmniQuant on LLaMA-2 7B)

• Scales to 70B with 61% average downstream accuracy (vs 51.2% for 3.9B BitNet)

• 5.7-6.3x memory compression

• ~91% reduction in computation energy

• ~50% inference latency reduction
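A rough back-of-the-envelope for the memory figure. This summary does not say how the 5.7-6.3x is computed, so the sketch below only shows how ratios in that range can arise. It assumes an FP16 baseline, ternary weights stored with simple 2-bit packing, and a hypothetical share of parameters (embeddings, norms, output head) left in FP16. The function name and the fractions are illustrative assumptions, not numbers from the paper.

```python
def compression_ratio(fp16_fraction: float, packed_bits: float = 2.0) -> float:
    """Whole-model compression relative to an all-FP16 baseline.

    fp16_fraction: share of parameters kept in 16-bit (assumed value).
    packed_bits:   storage bits per ternary weight (2.0 = plain 2-bit packing).
    """
    if not 0.0 <= fp16_fraction <= 1.0:
        raise ValueError("fp16_fraction must be between 0 and 1")
    average_bits = fp16_fraction * 16.0 + (1.0 - fp16_fraction) * packed_bits
    return 16.0 / average_bits


# Illustrative fractions only: the more parameters stay in FP16,
# the further the ratio drops below the ideal 8x of 2-bit packing.
for fraction in (0.0, 0.03, 0.06):
    print(f"fp16 share {fraction:.2f} -> {compression_ratio(fraction):.2f}x")
```

The point is simply that a small unquantized share pulls the whole-model ratio well below the per-weight ideal.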

--

Original Problem 🎯:

The original BitNet's ternary quantization, which avoids matrix multiplications, was great, but it had one big drawback: it was NOT a post-training quantization method.

So to get its full benefit you need to train the model from scratch, which is a hugely costly affair.

(A technique was recently proposed for fine-tuning a base language model into the BitNet 1.58b architecture without training from scratch. Still, in most cases the original BitNet required training from scratch.)

As a result, BitNet shows promise but has been limited to models under 3B parameters due to this training cost.

Solution in this Paper 🛠️:

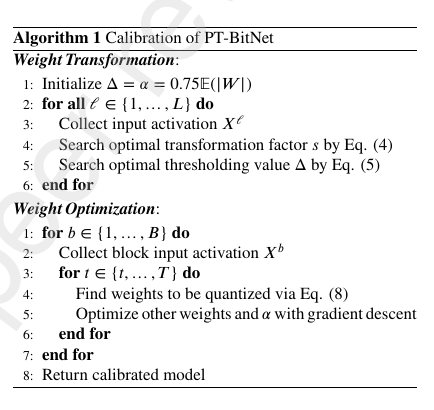

• Two-stage algorithm:

Stage 1, weight transformation: reshapes the weight distribution so that it is easier to quantize

Stage 2, weight optimization: block-wise optimization using a greedy algorithm

• Eliminates need for training from scratch

• Applies ternary (~1.58-bit) weight and 8-bit activation quantization
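To make "ternary weights with 8-bit activations" concrete, here is a minimal sketch. It uses the absmean weight rule and absmax activation rule described for BitNet b1.58, NOT PT-BitNet's own two-stage procedure, and all function names are illustrative. It also shows why matrix multiplications go away: with weights in {-1, 0, +1}, a dot product needs only integer adds and subtracts plus one final rescale.

```python
def ternarize_absmean(weights, eps=1e-8):
    """Map floats to {-1, 0, +1} using one shared scale (absmean rule)."""
    scale = sum(abs(w) for w in weights) / len(weights) + eps
    ternary = [max(-1, min(1, round(w / scale))) for w in weights]
    return ternary, scale


def quantize_int8_absmax(activations, eps=1e-8):
    """Map floats to signed 8-bit integers using one shared scale (absmax rule)."""
    scale = max(abs(a) for a in activations) / 127.0 + eps
    quantized = [max(-127, min(127, round(a / scale))) for a in activations]
    return quantized, scale


def ternary_dot(weights, activations):
    """Approximate sum(w * a) with integer adds/subtracts, then rescale once."""
    ternary, w_scale = ternarize_absmean(weights)
    quantized, a_scale = quantize_int8_absmax(activations)
    accumulator = 0
    for t, q in zip(ternary, quantized):
        if t == 1:
            accumulator += q      # +1 weight: add the activation
        elif t == -1:
            accumulator -= q      # -1 weight: subtract it; 0 weight: skip
    return accumulator * w_scale * a_scale


weights = [0.9, -0.05, -1.2, 0.4]
activations = [1.0, -0.5, 0.25, 2.0]
exact = sum(w * a for w, a in zip(weights, activations))
print("exact:", exact, "ternary approx:", ternary_dot(weights, activations))
```

On four weights the approximation is crude. The naive rule is shown only to illustrate the number formats, and closing the resulting accuracy gap on a pre-trained model is exactly what a PTQ method has to address.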

Key Insights from this Paper 💡:

• Post-training quantization enables scaling ternary LLMs beyond 3B parameters

• Two-stage optimization is crucial for maintaining performance under extreme quantization

• PT-BitNet combines BitNet's efficiency with larger pre-trained model performance

🧠 What is the two-stage algorithm proposed for PT-BitNet?

Stage 1: Weight Transformation

• Initializes the thresholding and projection parameters

• Searches for the optimal transformation factor and thresholding value so that the weight distribution becomes quantization-friendly
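The flavor of Stage 1 can be sketched as a small grid search. This summary does not spell out the paper's objective or how the transformation factor is defined, so the sketch makes its own assumptions: the "factor" is a single scale, candidates are evenly spaced thresholds, and the score is plain squared reconstruction error. `search_threshold` is a hypothetical name.

```python
def search_threshold(weights, num_candidates=50):
    """Grid-search a ternary threshold; return (threshold, scale, ternary, error)."""
    max_abs = max(abs(w) for w in weights)
    if max_abs == 0:
        raise ValueError("all weights are zero")
    best = None
    for k in range(1, num_candidates + 1):
        # Candidates stay strictly below max_abs, so at least one weight is kept.
        threshold = max_abs * k / (num_candidates + 1)
        ternary = [1 if w > threshold else -1 if w < -threshold else 0
                   for w in weights]
        kept = [abs(w) for w, t in zip(weights, ternary) if t != 0]
        # For a fixed ternary pattern, the least-squares scale is the mean
        # magnitude of the weights that were not zeroed out.
        scale = sum(kept) / len(kept)
        error = sum((w - scale * t) ** 2 for w, t in zip(weights, ternary))
        if best is None or error < best[3]:
            best = (threshold, scale, ternary, error)
    return best


threshold, scale, ternary, error = search_threshold(
    [0.9, -0.05, -1.2, 0.4, 0.02, -0.8])
print(ternary, round(scale, 4), round(error, 4))
```

A real implementation would run this per layer or per channel on tensors. The pure-Python version just keeps the search logic visible.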

Stage 2: Weight Optimization

• Iteratively quantizes and optimizes the weights block by block

• Uses a greedy algorithm to quantize weights to ternary values while minimizing the output error
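Here is a toy version of the Stage 2 idea, as a sketch under stated assumptions rather than the paper's algorithm. It handles one output unit, takes a fixed `scale`, and uses a small calibration batch `X`. Within each block it picks, one weight at a time, the ternary value that gives the lowest squared output error, then makes a second pass over the block. The names `greedy_ternary_row`, `block_size` and `passes` are all hypothetical.

```python
def greedy_ternary_row(w, X, scale, block_size=2, passes=2):
    """Greedily ternarize one weight row, minimising ||X w - X w_q||^2.

    w:     full-precision weights of one output unit (length d)
    X:     calibration inputs, a list of n rows, each of length d
    scale: shared magnitude, so quantized weights lie in {-scale, 0, +scale}
    """
    codes = [0] * len(w)
    work = list(w)                  # mix of quantized and still-float weights
    residual = [0.0] * len(X)       # target output minus current output
    for start in range(0, len(w), block_size):
        block = range(start, min(start + block_size, len(w)))
        for _ in range(passes):     # later passes revisit earlier choices
            for j in block:
                column = [row[j] for row in X]
                best = None
                for code in (-1, 0, 1):
                    delta = code * scale - work[j]
                    trial = [r - delta * x for r, x in zip(residual, column)]
                    err = sum(v * v for v in trial)
                    if best is None or err < best[0]:
                        best = (err, code, trial)
                _, codes[j], residual = best
                work[j] = codes[j] * scale
    return codes, sum(r * r for r in residual)


w = [0.9, -0.05, -1.2, 0.3]
X = [[1.0, 0.5, -0.2, 0.1], [0.3, -1.0, 0.8, 0.4], [-0.6, 0.2, 0.1, 1.0]]
codes, output_error = greedy_ternary_row(w, X, scale=0.8)
print(codes, output_error)
```

The difference from plain rounding is that each choice sees the output error left by earlier choices, so a later weight can partly compensate for an earlier one.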