Qwen2.5-1M Open Model is released, with a huge context length of 1 million tokens, offering 3x to 7x faster inference as well

Qwen hits 1M tokens, Proxy challenges Operator, Janus-Pro-7B debuts, and Pika 2.1 animates in 1080p with lifelike physics.

Read time: 7 min 10 seconds

(I write daily for my 106K+ AI-pro audience, with 5.5M+ weekly views. Noise-free, actionable, applied-AI developments only.)

⚡In today’s Edition (27-Jan-2025):

🎖️ Qwen2.5-1M is released, with a huge context length of 1 million tokens, offering 3x to 7x faster inference as well.

🏆 Convergence AI has launched Proxy, a new AI assistant, positioning it as a competitor to OpenAI's Operator

📡 DeepSeek just dropped ANOTHER open-source AI model, Janus-Pro-7B.

🐋 Unsloth introduced 1.58bit DeepSeek-R1 GGUFs, and shrank the 671B parameter model from 720GB to just 131GB - an 80% size reduction

🗞️ Byte-Size Briefs:

DeepSeek restricts new registrations to mainland China phone numbers after a cyberattack.

DeepSeek becomes the #1 downloaded free app on the App Store.

Pika 2.1 launches lifelike animations with customizable physics, 1080p clarity.

🎖️ Qwen2.5-1M is released, with a huge context length of 1 million tokens, offering 3x to 7x faster inference as well

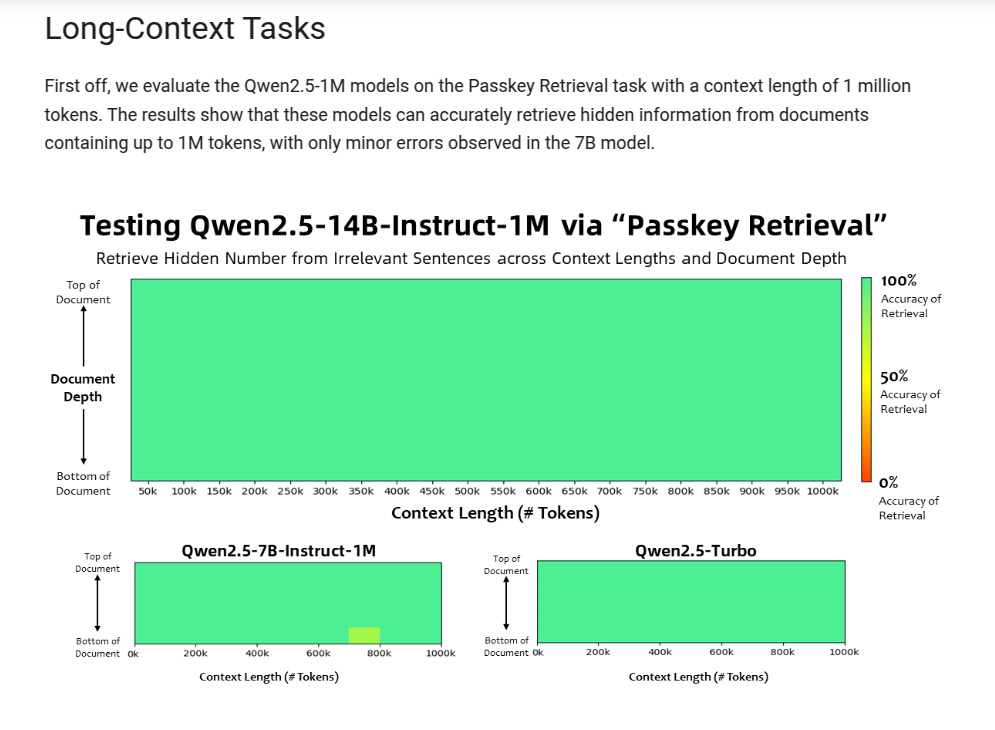

The Qwen team launched Qwen2.5-1M, boasting 1 million token context length support. It features two open-source models: Qwen2.5-7B-Instruct-1M and Qwen2.5-14B-Instruct-1M, alongside a 3x-7x faster inference framework. The Qwen2.5-14B-Instruct-1M model consistently outperforms GPT-4o-mini across multiple datasets, offering a robust open-source alternative for long-context tasks.

⚙️ The Details

→ Qwen2.5-1M extends context limits from 128k to 1M tokens. It uses length extrapolation (Dual Chunk Attention) and sparse attention to manage ultra-long contexts without additional training.

→ Two open models, Qwen2.5-7B-Instruct-1M and Qwen2.5-14B-Instruct-1M, are available under the Apache 2.0 license. They support 1M-token contexts with VRAM needs of 120GB and 320GB, respectively.

→ The inference framework, based on vLLM, accelerates processing via chunked prefill and optimized sparse attention. It delivers 3.2x-6.7x faster prefill for 1M tokens.

→ Evaluation shows Qwen2.5-1M significantly outperforms 128k versions and GPT-4o-mini on benchmarks like RULER and LongBench-Chat.

→ BladeLLM, the underlying engine, employs kernel optimization, dynamic chunked pipelines, and asynchronous scheduling to reduce memory and computational overhead.
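
To make the chunked prefill idea above a little more concrete, here is a minimal Python sketch. It only shows the control flow of feeding a long prompt through in fixed-size pieces while a cache grows. The `process_chunk` callback and the list-based cache are hypothetical stand-ins for illustration, not the vLLM or BladeLLM API.

```python
from typing import Callable, List, Sequence


def chunked_prefill(
    tokens: Sequence[int],
    chunk_size: int,
    process_chunk: Callable[[Sequence[int], List[int]], None],
) -> List[int]:
    """Feed `tokens` through `process_chunk` in pieces of at most `chunk_size`.

    `cache` stands in for a KV cache: each call sees everything processed so
    far, but only one chunk of new work has to be live at a time.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    cache: List[int] = []
    for start in range(0, len(tokens), chunk_size):
        chunk = tokens[start:start + chunk_size]
        process_chunk(chunk, cache)  # hypothetical model call
        cache.extend(chunk)          # toy "KV cache" update
    return cache


# Toy usage: record each chunk's length and how much cache it could see.
seen = []
final_cache = chunked_prefill(
    list(range(10)), 4, lambda chunk, kv: seen.append((len(chunk), len(kv)))
)
print(seen)
```

The point of the pattern is that peak working memory scales with the chunk size rather than with the full prompt length.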

Here's how Qwen2.5-1M extended its context to 1M tokens:

Progressive Training: Start with shorter contexts (4K tokens) and gradually train on longer ones (up to 256K). This teaches the model to handle increasing sequence lengths.

Synthetic Data: Use tasks like filling gaps, reordering paragraphs, and retrieving content to train the model on long-range dependencies effectively.

Length Extrapolation: Apply Dual Chunk Attention (DCA) to split long sequences into chunks and remap their positions, avoiding issues with untrained large distances.

Sparse Attention: Use selective attention on key tokens instead of processing the whole sequence, reducing computation and memory costs.

Optimized Inference: Combine techniques like chunked processing and refined sparsity configurations to efficiently handle 1M-token sequences during inference.
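
The chunk-and-remap idea behind the length extrapolation step can be sketched in a few lines of Python. This is a deliberately simplified illustration, not the actual Dual Chunk Attention algorithm: the function name, the rule for cross-chunk pairs, and the toy sizes are all assumptions chosen only to show how relative distances can be kept inside the range seen during training.

```python
def remapped_distance(q_pos: int, k_pos: int, chunk_size: int, max_trained: int) -> int:
    """Relative distance handed to the position encoding for a (query, key) pair.

    Simplified on purpose: same-chunk pairs keep their true distance, and
    cross-chunk pairs pin the query to the largest trained index, so the
    result never exceeds `max_trained - 1`.
    """
    if k_pos > q_pos:
        raise ValueError("causal attention: key must not come after query")
    if not 0 < chunk_size <= max_trained:
        raise ValueError("need 0 < chunk_size <= max_trained")
    if q_pos // chunk_size == k_pos // chunk_size:
        return q_pos - k_pos
    return (max_trained - 1) - (k_pos % chunk_size)


# Even for positions far beyond the trained range, distances stay bounded.
longest = max(
    remapped_distance(q, k, chunk_size=4, max_trained=8)
    for q in range(200)
    for k in range(q + 1)
)
print(longest)
```

With a trained range of 8 positions, every pair in a 200-token sequence maps to a distance the model has already seen, which is the property the technique relies on.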

The image above shows the Passkey Retrieval test for Qwen2.5 models with a 1M-token context length. The 14B model performs perfectly, retrieving hidden data across the entire context. The 7B model is slightly less accurate, with some errors near 800k tokens. The Turbo model matches the 14B's performance, highlighting its efficiency despite faster processing. Green areas indicate high accuracy.
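
For readers unfamiliar with passkey retrieval, here is a rough sketch of how such a test prompt can be built and scored. The filler sentence, the wording of the needle and question, and both function names are hypothetical; the exact template the Qwen team used is not given here.

```python
def build_passkey_prompt(passkey: str, n_filler: int, depth: float) -> str:
    """Hide `passkey` among `n_filler` filler lines at relative `depth` (0 to 1)."""
    if not 0.0 <= depth <= 1.0:
        raise ValueError("depth must be between 0 and 1")
    filler = "The grass is green. The sky is blue."  # hypothetical filler text
    needle = f"The passkey is {passkey}. Remember it."
    lines = [filler] * n_filler
    lines.insert(int(depth * n_filler), needle)
    return "\n".join(lines + ["What is the passkey?"])


def is_retrieved(model_answer: str, passkey: str) -> bool:
    """Score one trial: did the answer contain the hidden passkey?"""
    return passkey in model_answer


prompt = build_passkey_prompt("71432", n_filler=1000, depth=0.8)
```

Sweeping `n_filler` (context length) and `depth` (where the passkey sits) and scoring each trial yields the kind of accuracy grid shown in the image.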

With this context-length extension, Qwen2.5-1M should excel at tasks that need large-scale document comprehension, offering a robust open-source solution.

🏆 Convergence AI has launched Proxy, a new AI assistant, positioning it as a competitor to OpenAI's Operator

🎯 The Brief

Convergence AI launched Proxy globally, a new AI assistant positioned as a competitor to OpenAI's Operator. Proxy offers free basic access and a $20/month unlimited plan, making it 10x cheaper and more accessible for users seeking AI automation. It acts as a personal AI agent that executes tasks from natural language commands.

⚙️ The Details

→ Proxy is a language-to-agent platform that automates tasks using natural language, requiring no coding skills.

→ It excels at web navigation, form filling, and workflow automation.

→ It learns from your requests to become more efficient over time, automating repetitive tasks on a daily, weekly, or custom schedule.

→ Key features include customizable workflows, Parallel Agents, and a future Market Place for sharing automations.

→ Convergence AI emphasizes user privacy and security: Proxy only acts with explicit user consent, so it will never spend money or share your details without your permission.

→ I could not find any information about the underlying LLM or vision model that Proxy uses.

→ Convergence has shared some demos. Once you hit “automate,” that natural language prompt effectively becomes an agent of its own. One example shows a guerrilla marketing agent being created to run on X (Twitter).

📡 DeepSeek just dropped ANOTHER open-source AI model, Janus-Pro-7B.

🎯 The Brief

DeepSeek introduces Janus-Pro, a unified multimodal model built on the DeepSeek-LLM-1.5b/7b architecture. It beats OpenAI's DALL-E 3 and Stable Diffusion on the GenEval and DPG-Bench benchmarks. See the GitHub repository for details.

⚙️ The Details

→ The most interesting aspect of the Janus-Pro model is its decoupling of visual encoding for multimodal understanding and generation tasks. This allows the model to specialize in both tasks without performance trade-offs, unlike other unified models. The approach reduces conflicts in shared encoders and enhances task-specific performance. Pretraining included 72M synthetic images.

→ The model uses SigLIP-L for image input (384x384 resolution) and a tokenizer with a downsample rate of 16 for generation tasks. The images are small (384x384), but this is still a huge release.

→ Janus-Pro-7B demonstrates superior text-to-image generation compared to its predecessor, delivering enhanced visual quality, richer details, and the ability to generate legible text at 384x384 resolution.

→ Janus-Pro is licensed under the DeepSeek License, which permits extensive use while enforcing ethical restrictions, prohibiting applications like military use or generating harmful content.

→ Overall, with a permissive license for experimentation and deployment, Janus-Pro positions itself as a versatile next-gen multimodal framework.
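
As a quick worked example of what the 384x384 resolution and the downsample rate of 16 imply for generation, the snippet below computes the size of the image token grid. It assumes the downsample rate applies to each spatial dimension, which is the usual convention for image tokenizers but is not spelled out above; the function name is just for illustration.

```python
def image_token_grid(image_size: int, downsample: int) -> tuple[int, int]:
    """Return (tokens per side, total tokens) for a square image."""
    if image_size % downsample != 0:
        raise ValueError("image_size must be divisible by downsample")
    side = image_size // downsample
    return side, side * side


side, total = image_token_grid(384, 16)
print(side, total)  # 24 576
```

Under that assumption, each generated image corresponds to a 24x24 grid, or 576 tokens.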

🐋 Unsloth introduced 1.58bit DeepSeek-R1 GGUFs, and shrank the 671B parameter model from 720GB to just 131GB - an 80% size reduction

🎯 The Brief

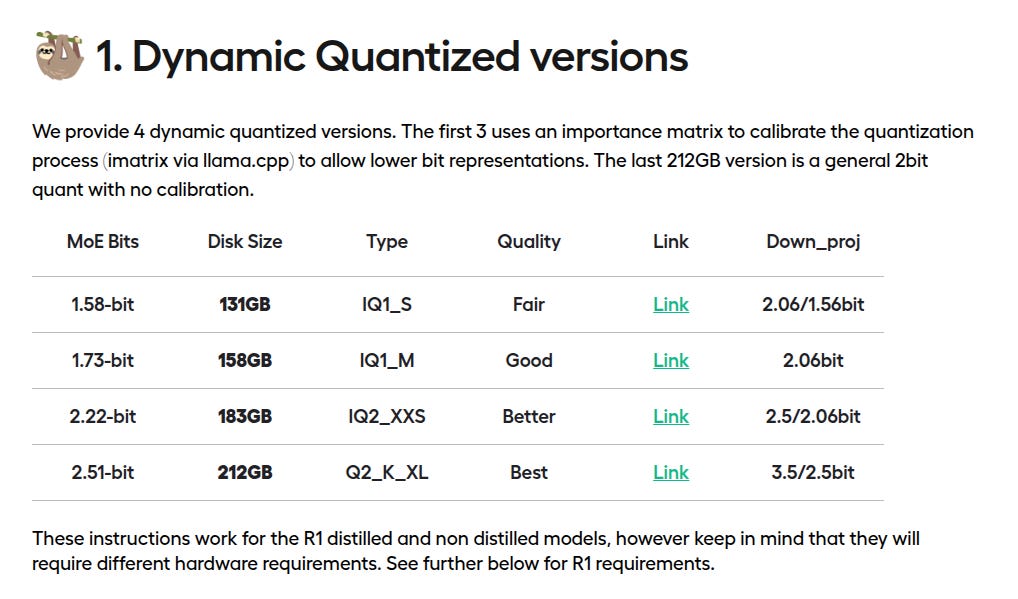

Unsloth introduced DeepSeek-R1 1.58-bit GGUF quantized models, shrinking the 671B parameter model from 720GB to 131GB, an 80% size reduction. This dynamic quantization enables inference on 2x H100 80GB GPUs at 140 tokens/sec, while maintaining functionality.
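
The headline numbers can be sanity-checked with a few lines of arithmetic. The snippet below uses only the figures quoted above, plus one assumption: that 1 GB means 10^9 bytes. The comparison with log2(3) reflects the common association of the "1.58-bit" label with three-valued (ternary) weights; whether Unsloth's format is strictly ternary is not stated here.

```python
import math

PARAMS = 671e9      # parameter count from the text
ORIGINAL_GB = 720   # original model size from the text
QUANTIZED_GB = 131  # 1.58-bit GGUF size from the text

reduction = 1 - QUANTIZED_GB / ORIGINAL_GB
avg_bits = QUANTIZED_GB * 1e9 * 8 / PARAMS  # assumes 1 GB = 1e9 bytes
ternary_bits = math.log2(3)

print(f"size reduction: {reduction:.1%}")            # size reduction: 81.8%
print(f"average bits per parameter: {avg_bits:.2f}")  # average bits per parameter: 1.56
print(f"log2(3): {ternary_bits:.2f}")                 # log2(3): 1.58
```

So the quoted "80%" is a slightly conservative rounding, and the file size works out to roughly 1.56 bits per parameter on average.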

⚙️ The Details

→ Quantization Innovation: Uses selective quantization, keeping critical layers at higher precision (e.g., 4-bit) while reducing MoE layers to 1.58-bit. This prevents issues like infinite loops seen with naive quantization.

→ Performance: The 1.58-bit quant runs in 160GB VRAM, and supports CPU inference on systems with 20GB RAM, though slower. A hybrid VRAM + RAM configuration (≥80GB) is recommended for optimal use.

→ Model Variants: Four quantized versions are available (131GB to 212GB), tailored for different performance and hardware needs. Higher precision models offer improved accuracy but require more resources.

→ Benchmarks: To test the 1.58-bit quantized models, the Unsloth team avoided general benchmarks in favor of a practical, task-specific evaluation of DeepSeek-R1's capabilities in real-world scenarios. The 1.58-bit version still seems to produce valid output.

→ The quantized models are hosted on Hugging Face and can be run with llama.cpp.
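
The selective quantization idea from the details above can be illustrated with a toy calculation. This is not Unsloth's code: the layer names, the name-matching rule, and the parameter counts are invented for the example. It only shows why keeping a small set of layers at 4-bit barely moves the average when MoE layers hold most of the parameters.

```python
from typing import Dict


def pick_bits(layer_name: str) -> float:
    """Hypothetical rule: MoE expert layers get 1.58 bits, everything else 4."""
    return 1.58 if "expert" in layer_name else 4.0


def average_bits(param_counts: Dict[str, int]) -> float:
    """Parameter-weighted average bit width across all layers."""
    total = sum(param_counts.values())
    weighted = sum(pick_bits(name) * n for name, n in param_counts.items())
    return weighted / total


# Toy model: 2% of parameters in "critical" layers, 98% in MoE experts.
toy_model = {"attention": 10, "shared_mlp": 10, "experts.0": 490, "experts.1": 490}
avg = average_bits(toy_model)
print(f"{avg:.4f}")
```

In this toy setup the average lands near 1.63 bits, close to the 1.58-bit floor, even though the critical layers keep more than twice that precision.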

🗞️ Byte-Size Briefs

DeepSeek has restricted registration to its services, allowing only users with a mainland China mobile phone number to register. According to news reports, this is due to a cyberattack. It was the company’s longest major outage since it started reporting its status, and it coincides with the app rocketing to popularity in the Apple and Android app stores.

DeepSeek-R1 continues to have a widespread impact across the globe. A tweet went viral saying: “Nasdaq 100 futures are now down -330 POINTS (on Jan-27) since the market opened just hours ago as DeepSeek takes #1 on the App Store. This is how you know DeepSeek has become a major threat to US large cap tech.” On top of this, DeepSeek has become the #1 downloaded free app on the App Store. Users report that the API is user-friendly, that rate limits are not an issue, and that it is likely to be integrated into agentic AI workflows.

Pika 2.1 has now officially launched, with crystal-clear 1080p resolution and sharp details. It adds realistic physics, lifelike animations, and customizable styles to AI video creation, giving creators more control. It also introduces dynamic lighting, precise motion control, and scene customization tools, helping creators build immersive, high-quality animations faster and more effectively.

That’s a wrap for today, see you all tomorrow.