🔥 Qwen3-Max overtook DeepSeek V3.1 as the top-returning model on the live crypto trading benchmark AlphaArena.

Qwen3 tops live crypto trading, Kanon 2 targets legal AI, bio-defense startup Valthos gets $30M, AI's role in rising unemployment and superintelligence fears stir debate.

Read time: 9 min

(I publish this newsletter daily. Noise-free, actionable, applied-AI developments only.)

⚡In today’s Edition (25-Oct-2025):

💰 Qwen3-Max overtook DeepSeek V3.1 as the top-returning model on the live crypto trading benchmark AlphaArena.

⚖️ Isaacus, an Australian foundational legal AI startup, launched Kanon 2 Embedder.

📡 OPINION piece: Hundreds of public figures, including Apple co-founder Steve Wozniak and Virgin’s Richard Branson, urge AI ‘superintelligence’ ban

💼 AI plays a part, but it doesn’t fully explain the jump in unemployment.

🔬 OpenAI, Founders Fund, and Lux Capital backed Valthos with $30M to use AI for early detection of bio threats and rapid countermeasures.

💰 Qwen3-Max overtook DeepSeek V3.1 as the top-returning model on the live crypto trading benchmark AlphaArena.

Here, six LLMs each trade $10,000 on 6 crypto perpetual contracts on Hyperliquid with identical inputs. The goal is to maximize risk-adjusted returns, so the setup rewards steady profit rather than reckless swings.
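To make "risk-adjusted" concrete, here is a minimal sketch using a Sharpe-style ratio (mean return divided by its volatility). This is an assumption for illustration: the benchmark's exact scoring formula is not stated here, and the return series below are hypothetical numbers, not leaderboard data.

```python
import math
from statistics import mean, stdev


def sharpe_ratio(returns, risk_free=0.0):
    """Mean excess return divided by its sample standard deviation."""
    excess = [r - risk_free for r in returns]
    spread = stdev(excess)
    if spread == 0:
        # No volatility at all: treat any positive mean as unboundedly good.
        return math.inf if mean(excess) > 0 else 0.0
    return mean(excess) / spread


# Hypothetical per-period returns for two agents whose returns sum to the same total.
steady = [0.01, 0.012, 0.008, 0.011, 0.009]
swingy = [0.15, -0.12, 0.10, -0.14, 0.06]

print(f"steady: {sharpe_ratio(steady):.2f}")
print(f"swingy: {sharpe_ratio(swingy):.2f}")
```

Both series add up to the same gain, but the steady one scores far higher because its returns barely vary. That is the behavior a risk-adjusted objective is meant to reward.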

The lineup includes DeepSeek V3.1, Qwen 3 Max, Claude 4.5 Sonnet, Gemini 2.5 Pro, Grok 4, and OpenAI GPT-5. Performance is tracked on a public leaderboard, with every trade tied to a model-specific wallet, and each agent also publishes short notes explaining its moves.

Perpetuals are futures with no expiry where periodic funding payments push the contract price toward the spot price, so the AI agents must juggle direction, leverage, and funding costs. Inputs like funding rates and volume are standardized across models, which isolates policy quality rather than data access.
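A rough sketch of how funding eats into a position's profit, under simplifying assumptions: the funding payment is notional times the funding rate, the entry price stands in for the mark price at every interval, and fees and leverage are ignored. The function names and all numbers are hypothetical, not Hyperliquid's actual API or parameters.

```python
def funding_payment(position_size, mark_price, funding_rate):
    """Signed cash flow for one funding interval.

    position_size > 0 is long, < 0 is short. A positive funding rate means
    longs pay shorts, so a long position gets a negative cash flow.
    """
    notional = position_size * mark_price
    return -notional * funding_rate


def net_pnl(position_size, entry_price, exit_price, funding_rates):
    """Price PnL plus funding, using the entry price as a stand-in mark price."""
    price_pnl = position_size * (exit_price - entry_price)
    funding = sum(
        funding_payment(position_size, entry_price, rate) for rate in funding_rates
    )
    return price_pnl + funding


# Hypothetical trade: long 0.5 units from 100,000 to 101,000,
# held across 24 funding intervals at a rate of 0.01% each.
rates = [0.0001] * 24
long_result = net_pnl(0.5, 100_000, 101_000, rates)
short_result = net_pnl(-0.5, 100_000, 101_000, rates)
print(f"long net PnL:  {long_result:.2f}")
print(f"short net PnL: {short_result:.2f}")
```

With a positive funding rate the long gives back part of its price gain, while the short recovers part of its loss. This is why an agent has to weigh direction against how long it expects to hold.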

Early results had DeepSeek on top, Grok 4 also trading very well, and GPT-5 at around -39.73%. Then Qwen overtook them. A prediction market has bettors giving Qwen roughly a 45% chance to finish first on this benchmark.

There are limitations: the agents do not use real-time news or proprietary signals, and quantitative firms already exploit many of the simple edges these bots might find. Organizers say the models show different trading personalities over time, so the strategies are not simple copies. The team plans to expand to equities and other assets, and a consumer agent investing platform is on the roadmap.

Polymarket prediction on Alpha Arena AI trading competition winner

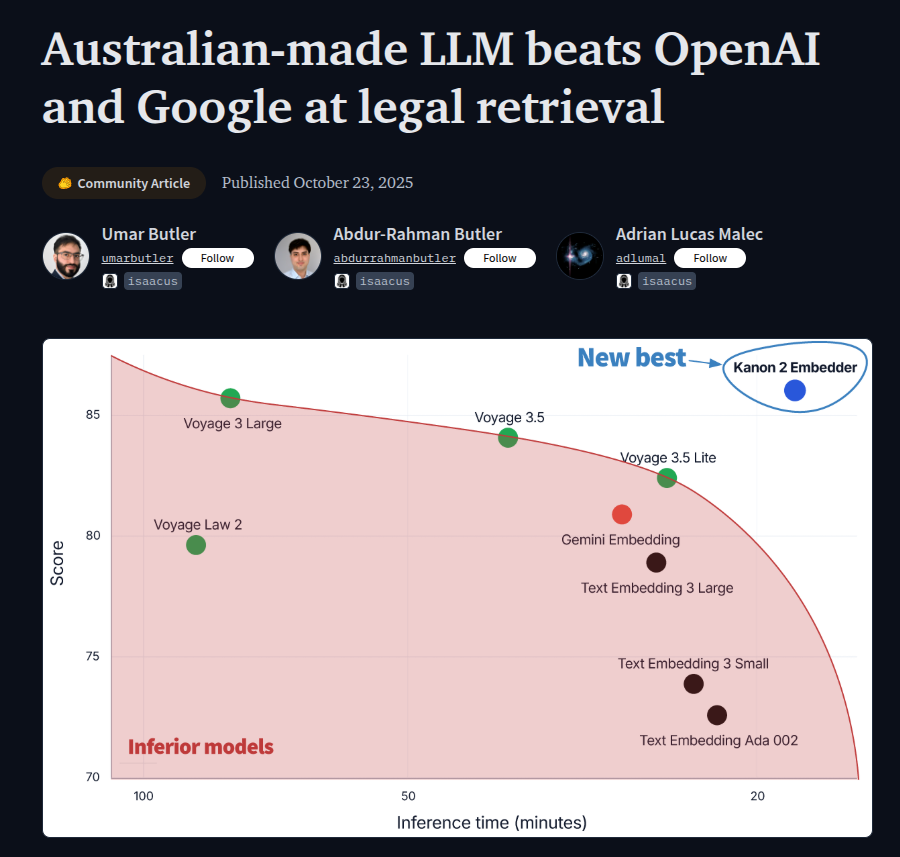

⚖️ Isaacus, an Australian foundational legal AI startup, launched Kanon 2 Embedder.

They claim Kanon 2 Embedder scores 9% higher than OpenAI Text Embedding 3 Large and 6% higher than Google Gemini Embedding, while running over 30% faster than both. Against Voyage 3 Large, they say it is more accurate and 340% faster while being smaller.

MLEB (the Massive Legal Embedding Benchmark) spans 6 jurisdictions and 5 domains with 10 expert-curated datasets, covering decisions, statutes, regulations, contracts, and literature across retrieval, zero-shot classification, and question answering. On MLEB, legal-tuned models beat similar general models. Kanon 2, trained on millions of legal sources from 38 jurisdictions, takes 1st on 5 of 10 tasks.

The foundation model behind the embedder also raises the context window to 16,384 tokens, which helps index long rulings without aggressive chunking. Isaacus says customer data is not used for training by default and that air-gapped containers are coming to AWS Marketplace and Microsoft Marketplace for private deployments.
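To see why a longer window reduces chunking, here is a small sketch that counts how many sliding-window chunks a document needs. The 40,000-token ruling, the 512-token comparison window, and the 64-token overlap are hypothetical values chosen for illustration. Only the 16,384 figure comes from the announcement.

```python
import math


def chunk_count(doc_tokens, window, overlap=0):
    """Number of sliding-window chunks needed to cover doc_tokens tokens."""
    if window <= overlap:
        raise ValueError("window must be larger than overlap")
    if doc_tokens <= window:
        return 1
    stride = window - overlap  # new tokens covered by each extra chunk
    return 1 + math.ceil((doc_tokens - window) / stride)


ruling_tokens = 40_000  # hypothetical long court ruling

print("16,384-token window:", chunk_count(ruling_tokens, 16_384))
print("512-token window, 64 overlap:", chunk_count(ruling_tokens, 512, overlap=64))
```

Fewer chunks means fewer embeddings to store and less risk of splitting an argument across chunk boundaries, at the cost of each embedding summarizing more text.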

📡 OPINION piece: Hundreds of public figures, including Apple co-founder Steve Wozniak and Virgin’s Richard Branson, urge AI ‘superintelligence’ ban

However, I think it’s already too late. Trillions of dollars are already grinding 24/7, and nothing can stop this AI machine now.

Over 800 public figures, including Geoffrey Hinton, Yoshua Bengio, Steve Wozniak, and Prince Harry and Meghan, signed an open letter organized by the Future of Life Institute. It urges a ban on superintelligent AI until there is broad scientific consensus on safety and strong public support. Meanwhile, Sam Altman projects superintelligence by 2030, with 30% to 40% of economic tasks done by AI, and Mark Zuckerberg says it is “in sight”. Altman is also targeting 250GW by 2033, which could cost over $10T to build.

A broad pause on superintelligence is IMPOSSIBLE NOW: the economic data, the capex already locked in, and national security policy all pull the other way. In early 2025, AI-linked data centers accounted for 92% of U.S. GDP growth, and without that investment growth would have been 0.1%, so turning off this spend risks stalling the economy.

Banks and economists also estimate data center spending is directly adding 10 to 20 basis points to GDP in 2025-26, which signals real macro sensitivity to any pause. Hyperscalers plan $300B to $325B of capex in 2025, Alphabet lifted 2025 capex to $85B, Microsoft guided to around $80B in FY25, and multi-year capacity contracts extend out to 2032, so the money is already committed and unwind costs would be huge.

U.S. policy explicitly treats AI infrastructure as a national-security asset and orders agencies to accelerate domestic build-out and permitting, which is the opposite of a pause. A unilateral pause would hand leverage to rivals, with China closing the gap while Washington frames AI leadership as strategic competition that it intends to win.

Even governance advocates now focus on compute controls and licensing rather than blanket freezes, which reflects how hard coordination is when incentives are this strong. Bottom line: with growth leaning on data center capex, multi-year contracts in place, and a national-security framing, no actor with real power is likely to stop pursuing superintelligence.

A lot of research argues that the race to create artificial superintelligence (ASI) is driven by strategic competition among states and companies.

One paper, “The Manhattan Trap: Why a Race to Artificial Superintelligence Is Self‑Defeating”, argues that when actors believe ASI gives a decisive advantage, they will rush development and undermine any opposing efforts.

💼 AI plays a part, but it doesn’t fully explain the jump in unemployment.

Since Jun-23, the biggest unemployment increases have been in jobs with the lowest AI exposure (+0.8pp) and the highest exposure (+0.7pp). AI exposure groups rank occupations by how much of their day-to-day tasks can be automated by current AI. More exposure means more overlap with things models already do, like text, code, images, and routine workflows.

If AI were the dominant shock, the highest exposure group would be far above the rest, yet the lowest exposure group leads. That points to general demand softness rather than pure replacement.

When hiring cools, managers pause backfills and probation extensions first, so recent hires and entry roles lose hours or jobs early in the cycle. The risk is a K-shaped expansion, where higher income households keep spending and jobs while lower income workers face weaker demand and more churn. Image from Bloomberg.

🔬🌐 OpenAI, Founders Fund, and Lux Capital backed Valthos with $30M to use AI for early detection of bio threats and rapid countermeasures.

The company is also training AI systems to refresh vaccine and treatment designs as threats mutate, with plans to hand off manufacturing to pharma partners for scale and distribution speed. OpenAI’s Jason Kwon frames this as part of a broader safety stack, arguing that model safeguards need backup from countervailing technologies and a wide partner network to police misuse at the system level.

This launch lands while U.S. biodefense budgets face cuts, and while a federal biotech commission warns of a coming “ChatGPT moment for biotechnology”, a tipping point where powerful, user-friendly tools make complex work accessible to almost anyone. In AI, ChatGPT suddenly gave everyday users access to advanced software engineering. The commission fears that something similar could happen in biotech, where easy-to-use AI systems might allow people with little expertise to design or modify biological organisms, drugs, or pathogens.

So there’s urgency around staying competitive with China. The core technical idea is continuous biosurveillance plus AI-guided countermeasure updates, which tries to compress the full cycle from detection to deployment so outbreaks or attacks get contained early.

That’s a wrap for today, see you all tomorrow.