Reflection-Bench: probing AI intelligence with reflection

LLMs struggle with basic human-like reflection abilities

LLMs struggle with basic human-like reflection abilities

Reflection-Bench tests if LLMs can actually learn from their mistakes like humans do

Original Problem 🤔:

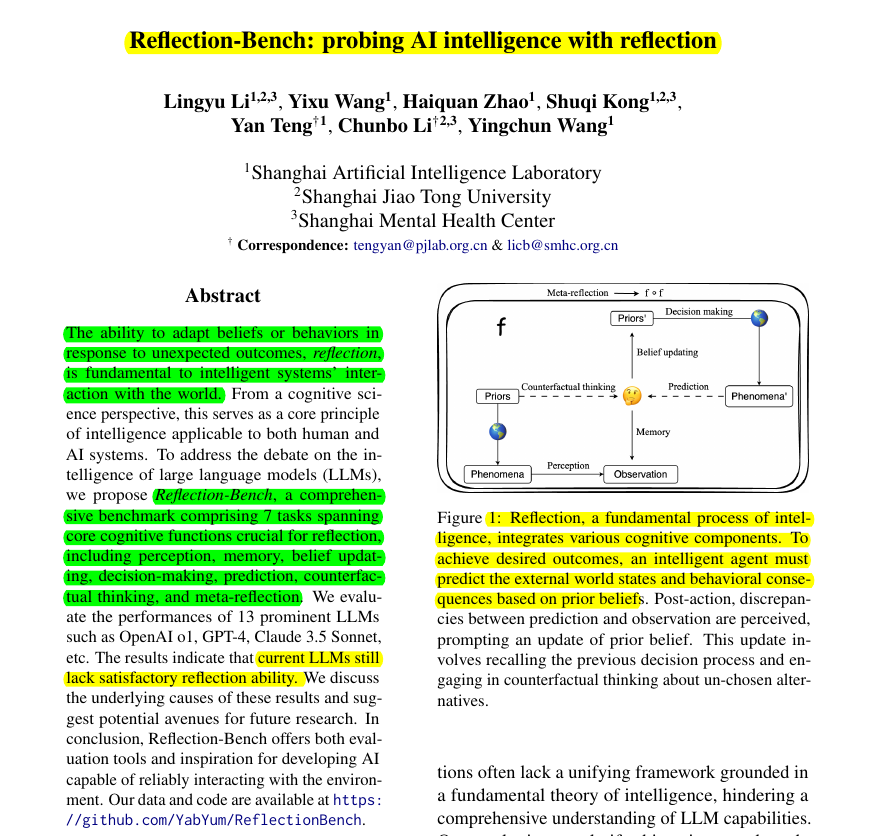

Current benchmarks for evaluating LLM intelligence lack a unified, biologically-inspired framework. Most evaluations focus on isolated capabilities without considering how real intelligence adapts to unexpected outcomes.

Solution in this Paper 🛠️:

• Introduces Reflection-Bench: 7 cognitive tasks adapted from neuroscience to test LLM's reflection abilities

• Tasks evaluate:

Perception (oddball paradigm)

Memory (n-back task)

Belief updating (probabilistic reversal learning)

Decision-making (Wisconsin card sorting)

Prediction (weather prediction)

Counterfactual thinking (double-choice Iowa gambling)

Meta-reflection (meta-bandit task)

• Each task has adjustable difficulty levels for future AI advancement

Key Insights 💡:

• LLMs show basic surprise detection and working memory but struggle with flexible adaptation

• Chain-of-thought improves performance but at 60% higher computational cost

• All tested models completely lack meta-reflection abilities

• Current LLMs fall short of human-level reflection capabilities

Results 📊:

• Evaluated 13 LLMs including OpenAI o1, GPT-4, Claude 3.5

• o1-preview topped most tasks but showed "MMN deficits" in oddball detection

• No model could recognize patterns in meta-bandit task (0 points)

• Performance gap between o1-preview and others significant only in 2-back (100%) and WCST (85.29%)