"SAeUron: Interpretable Concept Unlearning in Diffusion Models with Sparse Autoencoders"

Below podcast on this paper is generated with Google's Illuminate.

https://arxiv.org/abs/2501.18052

The problem is that diffusion models can generate harmful content. Existing unlearning methods are not transparent and can be circumvented.

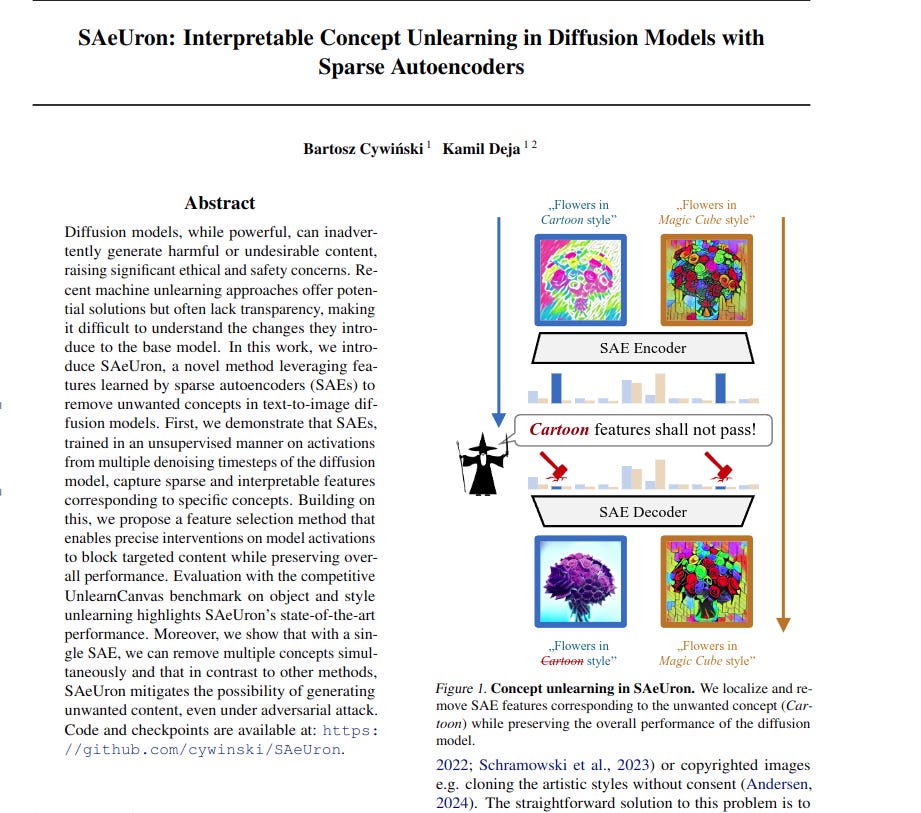

This paper introduces SAeUron. SAeUron uses Sparse Autoencoders to identify and remove features associated with unwanted concepts.

-----

📌 SAeUron uses Sparse Autoencoders to dissect diffusion model's internal feature space. This allows for targeted removal of specific concepts. The method offers interpretable control over generative model behavior.

📌 Feature ablation in SAeUron directly manipulates diffusion model activations. This targeted intervention avoids extensive fine-tuning. It ensures efficient and precise concept unlearning with minimal side effects.

📌 SAeUron demonstrates strong robustness against adversarial attacks. Its feature-level intervention offers a more resilient unlearning mechanism. This contrasts with methods that merely mask concepts.

----------

Methods Explored in this Paper 🔧:

→ This paper introduces SAeUron. SAeUron is a novel unlearning method for diffusion models.

→ SAeUron uses Sparse Autoencoders (SAEs). SAEs are trained on the diffusion model's internal activations across denoising timesteps.

→ The SAE learns sparse, interpretable features linked to specific concepts.

→ SAeUron identifies concept-specific features using an importance score. This score measures feature activation for a target concept versus other concepts.

→ During inference, SAeUron encodes activations using the SAE. It then ablates the selected concept-specific features. Ablation is done by scaling these features with a negative multiplier.

→ Finally, SAeUron decodes the modified activations. This process removes the unwanted concept while preserving overall performance.

-----

Key Insights 💡:

→ SAEs can extract meaningful and interpretable features from diffusion model activations. These features correspond to specific visual concepts.

→ SAeUron achieves state-of-the-art unlearning performance. It does so by precisely targeting and removing concept-relevant features.

→ SAeUron is robust to adversarial attacks. Unlike fine-tuning methods, it directly manipulates internal representations.

→ SAeUron can unlearn multiple concepts simultaneously. This is due to the sparse and disentangled nature of SAE features.

-----

Results 📊:

→ SAeUron achieves state-of-the-art performance on the UnlearnCanvas benchmark for style unlearning. It attains an average score of 97.85%.

→ SAeUron performs competitively on object unlearning. It achieves an average score of 83.69%.

→ In style unlearning, SAeUron outperforms other methods like ESD (91.17%) and SalUn (90.57%) in average effectiveness.

→ SAeUron demonstrates robustness to adversarial attacks. It shows minimal performance degradation under UnlearnDiffAtk.