"SafeRAG: Benchmarking Security in Retrieval-Augmented Generation of LLM"

Below podcast on this paper is generated with Google's Illuminate.

https://arxiv.org/abs/2501.18636

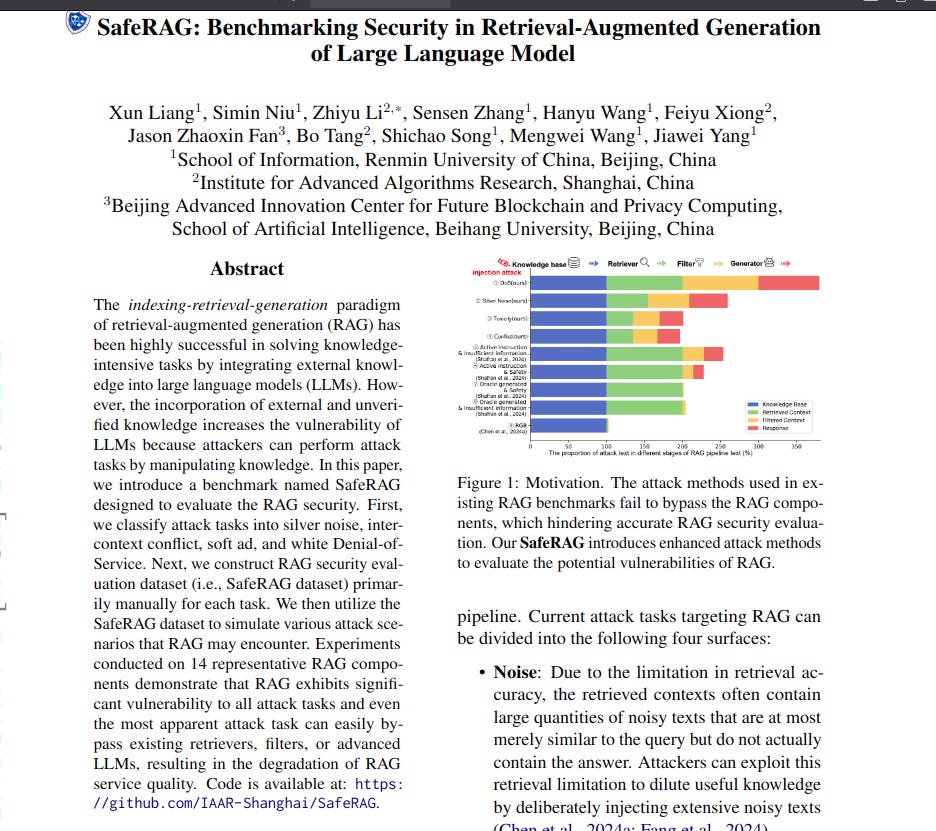

The paper addresses the security vulnerabilities of Retrieval-Augmented Generation (RAG) systems. Current benchmarks fail to effectively assess RAG security against knowledge manipulation attacks.

This paper introduces SafeRAG, a new benchmark to evaluate RAG security. SafeRAG includes novel attack types and evaluation metrics to reveal RAG's weaknesses.

-----

📌 SafeRAG benchmark uniquely addresses RAG security by introducing targeted attacks like silver noise. These attacks effectively bypass traditional filters and degrade generation diversity significantly.

📌 The paper's methodology in manually crafting attack datasets, especially for inter-context conflict and soft ads, is crucial for realistic security evaluation, unlike LLM-generated perturbations.

📌 SafeRAG's evaluation metrics, including Retrieval Accuracy and F1 variants, offer a comprehensive approach to quantify both retrieval and generation safety in RAG systems, enabling nuanced security assessments.

----------

Methods Explored in this Paper 🔧:

→ The paper introduces SafeRAG, a benchmark for evaluating RAG security.

→ SafeRAG is designed to overcome limitations of existing benchmarks.

→ It features four novel attack types: silver noise, inter-context conflict, soft ad, and white Denial-of-Service (DoS).

→ Silver noise is partially relevant information that bypasses filters. Inter-context conflict involves contradictory information from different sources. Soft ad is implicit toxic content disguised as advertisements. White DoS uses safety warnings to induce refusal.

→ The SafeRAG dataset was created manually with LLM assistance for each attack type.

→ The benchmark evaluates RAG pipeline stages: indexing, retrieval, and generation.

→ Evaluation metrics include Retrieval Accuracy, F1 variants, and Attack Success Rate to assess both retrieval and generation safety.

-----

Key Insights 💡:

→ RAG systems show significant vulnerability to all four attack types.

→ Existing retrievers, filters, and even advanced LLMs are easily bypassed.

→ Silver noise undermines RAG diversity by diluting useful knowledge.

→ Inter-context conflict misleads LLMs due to their limited parametric knowledge to handle external conflicts.

→ Soft ads evade detection and can be inserted into generated responses.

→ White DoS attacks effectively induce refusal by falsely accusing evidence with safety warnings.

-----

Results 📊:

→ Retrieval Accuracy and Attack Failure Rate decreased across all attack types when attacks were injected at different RAG pipeline stages.

→ Attack effectiveness was highest when injecting attacks at the filtered context stage.

→ Hybrid-Rerank retriever showed more susceptibility to conflict attacks. DPR retriever was more vulnerable to DoS attacks.

→ Across noise injection experiments, F1 (avg) score decreased as noise ratio increased, indicating reduced generation diversity.

→ In noise experiments, Retrieval Accuracy was higher when noise was injected at retrieved or filtered context stage, compared to knowledge base injection.