"Scaling Inference-Efficient Language Models"

Below podcast on this paper is generated with Google's Illuminate.

→ Current scaling laws for LLMs ignore inference costs.

→ These laws focus solely on training compute and parameter count. However, Model architecture significantly impacts inference latency, even for same-sized models.

This paper addresses the overlooked inference costs in LLM scaling laws. It introduces a modified scaling law and training method to create inference-efficient LLMs without sacrificing accuracy.

https://arxiv.org/abs/2501.18107

1. Inference cost is now a first-class citizen in LLM scaling. Prior laws missed real-world deployment needs. This work directly addresses that gap. Modifying Chinchilla laws to include model architecture is a pragmatic move. The paper's empirical validation across model shapes and sizes strengthens the argument for architecture-aware scaling.

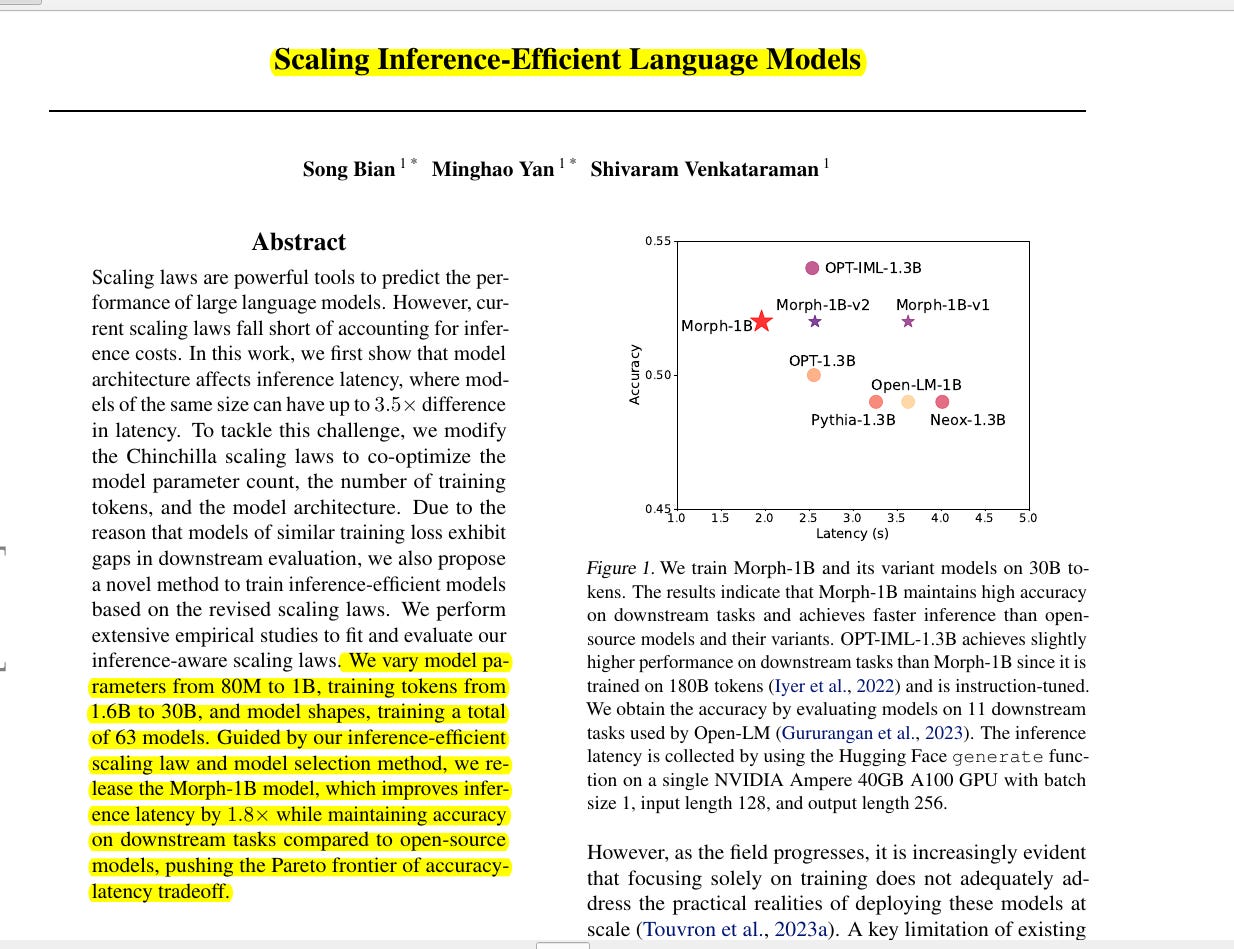

2. The paper highlights a critical practical trade-off. Same parameter budget can yield drastically different inference latencies based on model shape. Simply scaling parameters is not enough. The proposed methodology of predict, rank, and select offers a concrete way to navigate this trade-off. Morph-1B result shows tangible latency improvement without accuracy loss, proving immediate benefit.

3. This research shifts focus from pure parameter scaling to architectural efficiency. The inference-efficient scaling law provides a more nuanced tool. It moves beyond just training loss prediction. It enables optimization for real-time applications where latency is paramount. The emphasis on relative ranking of model configurations is a smart way to handle scaling law imperfections in practice.

-----

Methods in this Paper 💡:

→ The paper modifies Chinchilla scaling laws to include model architecture.

→ It proposes "inference-efficient scaling laws".

→ These laws co-optimize model size, training tokens, and model architecture.

→ The aspect ratio (hidden size divided by number of layers) is key in the new scaling law.

→ The paper introduces a training methodology using these new scaling laws.

→ This methodology predicts, ranks and selects inference-efficient model architectures.

→ It trains 63 models with varying sizes, shapes, and training tokens to validate the laws.

→ The method aims to train models that balance accuracy and inference speed.

-----

Key Insights from this Paper 🧐:

→ Model architecture is a critical factor in inference latency, not just parameter count.

→ Inference latency increases linearly with the number of layers.

→ Wider and shallower models can reduce inference latency for the same parameter size.

→ Existing scaling laws are inadequate for optimizing inference efficiency.

→ Relative ranking of predicted loss from scaling laws is more important than absolute values for model selection.

-----

Results 📊:

→ Inference-efficient scaling law reduces Mean Squared Error (MSE) to 0.0006 from 0.0033 of Chinchilla law.

→ Inference-efficient scaling law improves R-squared to 0.9982 from 0.9895 of Chinchilla law.

→ Inference-efficient scaling law achieves a Spearman correlation of 1.00 in 1B model loss prediction, compared to -0.40 for Chinchilla.

→ Morph-1B model, guided by these laws, achieves 1.8× faster inference than similar open-source models while maintaining accuracy.