Search-Based LLMs for Code Optimization

Search-Based LLMs for Code Optimization (SBLLM) is a framework that iteratively refines LLM-generated code. It demonstrates significant performance gains in code optimization across multiple programming languages and LLMs.

Original Problem 🔍:

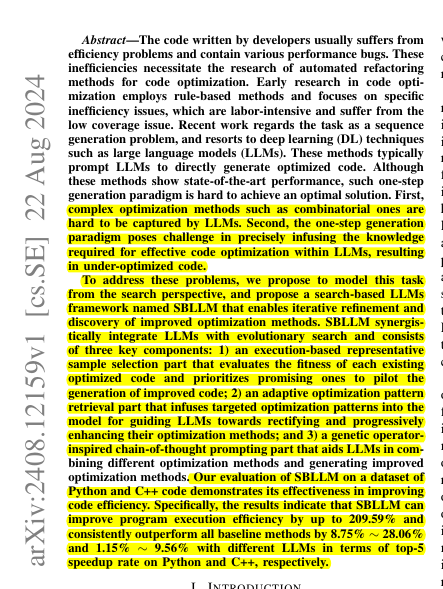

LLM-based code optimization methods typically generate optimized code in a single step. This makes it hard for them to capture complex optimizations and to precisely infuse the knowledge they need, so the resulting code is often under-optimized.

Key Insights 💡:

• Execution feedback helps prioritize effective optimization strategies

• Combining different optimization methods can lead to superior results

• Adaptive pattern retrieval can enhance LLMs' optimization capabilities

Solution in this Paper 🛠️:

• SBLLM: A search-based LLM framework for iterative code optimization

• Components:

Execution-based representative sample selection

• Evaluates fitness of optimized code

• Prioritizes promising samples for further optimization
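To make the selection step concrete, here is a minimal Python sketch. It assumes, for illustration only, that fitness is a candidate's speedup over the original program (zero for code that fails its tests) and that "representative" means the fittest candidate from each distinct fitness level, so near-duplicates do not crowd out the next round. The names `Candidate`, `fitness`, and `select_representatives` are hypothetical, not part of SBLLM.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    code: str
    passed_tests: bool  # result of running the candidate against test cases
    runtime_s: float    # measured execution time in seconds


def fitness(cand: Candidate, baseline_runtime_s: float) -> float:
    """Assumed fitness: speedup over the original; incorrect code scores 0."""
    if not cand.passed_tests or cand.runtime_s <= 0:
        return 0.0
    return baseline_runtime_s / cand.runtime_s


def select_representatives(cands, baseline_runtime_s, k=3, precision=1):
    """Return up to k (fitness, candidate) pairs, best first.

    Zero-fitness candidates are dropped, and only the fittest candidate per
    rounded fitness level is kept, so the survivors stay diverse.
    """
    scored = sorted(
        ((fitness(c, baseline_runtime_s), c) for c in cands),
        key=lambda pair: pair[0],
        reverse=True,
    )
    chosen, seen_levels = [], set()
    for fit, cand in scored:
        level = round(fit, precision)
        if fit <= 0 or level in seen_levels:
            continue
        seen_levels.add(level)
        chosen.append((fit, cand))
        if len(chosen) == k:
            break
    return chosen


if __name__ == "__main__":
    pool = [
        Candidate("A", True, 1.0),
        Candidate("B", True, 0.5),
        Candidate("C", True, 0.505),  # almost exactly as fast as B
        Candidate("D", False, 0.1),   # fast but wrong
    ]
    for fit, cand in select_representatives(pool, baseline_runtime_s=2.0):
        print(cand.code, round(fit, 2))
```

Run as a script, this prints `B 4.0` and then `A 2.0`: C is skipped because its fitness rounds to the same level as B, and D is skipped because it fails its tests.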

Adaptive optimization pattern retrieval

• Retrieves targeted optimization patterns and infuses them into the prompt to guide the LLM

• Considers both similar and different patterns
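The retrieval step can be sketched as follows. This is a hedged illustration, not SBLLM's retriever. It substitutes a simple token-set Jaccard similarity for whatever similarity measure the paper uses. It treats "similar" patterns as the closest matches to the current code and "different" patterns as the least similar ones, which point toward directions the current code has not explored. `Pattern` and `retrieve_patterns` are hypothetical names, and the toy pattern library is invented for the example.

```python
import re
from dataclasses import dataclass


@dataclass
class Pattern:
    name: str
    slow_example: str  # code before the optimization
    fast_example: str  # code after the optimization


def tokens(code: str) -> set:
    """Identifiers plus individual non-space symbols, as a set."""
    return set(re.findall(r"[A-Za-z_]\w*|\S", code))


def jaccard(a: set, b: set) -> float:
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def retrieve_patterns(code, library, n_similar=1, n_different=1):
    """Return (similar, different) pattern lists for the given code."""
    query = tokens(code)
    ranked = sorted(
        library,
        key=lambda p: jaccard(query, tokens(p.slow_example)),
        reverse=True,
    )
    similar = ranked[:n_similar]
    # Least similar of the remaining patterns, most dissimilar first.
    different = ranked[n_similar:][::-1][:n_different]
    return similar, different


if __name__ == "__main__":
    library = [
        Pattern("string-join", "s = ''\nfor x in xs:\n    s += x", "s = ''.join(xs)"),
        Pattern("set-membership",
                "for x in queries:\n    if x in items_list:\n        count += 1",
                "items = set(items_list)\nfor x in queries:\n    if x in items:\n        count += 1"),
        Pattern("modular-pow", "r = (a ** b) % m", "r = pow(a, b, m)"),
    ]
    code = "out = ''\nfor w in words:\n    out += w"
    similar, different = retrieve_patterns(code, library)
    print([p.name for p in similar], [p.name for p in different])
```

For the string-building loop above, the closest pattern is `string-join` and the most distant is `modular-pow`. A real system would likely use a stronger retriever, but the split into "exploit what looks relevant" and "explore what looks different" is the point of the sketch.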

Genetic operator-inspired chain-of-thought prompting

• Helps LLMs combine different optimization methods

• Uses crossover and mutation-like operations
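A prompt in this spirit might look like the sketch below. The wording and section layout are assumptions made for illustration, not the paper's actual template. The "crossover" step asks the model to merge the effective changes from the selected versions, and the "mutation" step asks it to apply a retrieved pattern that none of them uses yet. `build_ga_cot_prompt` is a hypothetical helper. Samples are (fitness, code) pairs and patterns are (name, before, after) triples.

```python
def build_ga_cot_prompt(original_code, samples, similar_patterns, different_patterns):
    """Assemble a chain-of-thought prompt with crossover- and mutation-style steps.

    samples: list of (fitness, code) pairs, e.g. from a selection step.
    *_patterns: lists of (name, before_code, after_code) triples.
    """
    lines = [
        "Optimize the program below for execution speed without changing its output.",
        "",
        "### Original program",
        original_code,
        "",
        "### Previously optimized versions",
    ]
    for i, (fit, code) in enumerate(samples, start=1):
        lines += [f"[Version {i}] speedup={fit:.2f}x", code, ""]

    for title, patterns in (("Similar optimization patterns", similar_patterns),
                            ("Different optimization patterns", different_patterns)):
        lines.append(f"### {title}")
        for name, before, after in patterns:
            lines += [f"- {name}", "Before:", before, "After:", after, ""]

    lines += [
        "### Think step by step",
        "1. Crossover: list the optimizations that made each version faster and "
        "combine the compatible ones into a single program.",
        "2. Mutation: apply at least one pattern above that none of the versions uses yet.",
        "3. Check that the result still produces the same output as the original.",
        "4. Output only the final optimized program.",
    ]
    return "\n".join(lines)


if __name__ == "__main__":
    prompt = build_ga_cot_prompt(
        original_code="s = ''\nfor x in xs:\n    s += x",
        samples=[(1.8, "s = ''.join(x for x in xs)")],
        similar_patterns=[("string-join", "s += x  # in a loop", "s = ''.join(xs)")],
        different_patterns=[("modular-pow", "r = (a ** b) % m", "r = pow(a, b, m)")],
    )
    print(prompt)
```

Keeping the prompt builder a pure function of its inputs makes it easy to test and to plug into whichever LLM client the search loop uses.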

Results 📊:

• Improves program execution efficiency by up to 209.59%

• Outperforms baselines by 8.75% to 28.06% (Python) and 1.15% to 9.56% (C++) in top-5 speedup rate

• Achieves higher OPT@1 improvements on more powerful LLMs (e.g., 9.19% on GPT-4 vs 2.37% on CodeLlama)

• Generates 110 and 21 unique optimized code snippets for Python and C++, respectively
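The results above rest on speedup-style metrics. As a rough illustration of how such metrics are commonly computed, the sketch below makes several assumptions. A candidate's speedup is old runtime divided by new runtime. Incorrect candidates are ignored, and the original program is kept if nothing beats it (speedup 1.0). Top-k takes the best of the first k candidates. A program counts as "optimized" for OPT@k when that speedup reaches a hypothetical 1.1 threshold. These definitions are assumptions, the paper's exact formulas may differ, and the toy runtimes are invented for the example rather than taken from SBLLM's results.

```python
from statistics import mean
from typing import Optional, Sequence


def top_k_speedup(old_runtime: float,
                  candidate_runtimes: Sequence[Optional[float]],
                  k: int) -> float:
    """Best speedup among the first k candidates.

    A runtime of None marks an incorrect candidate. If no candidate beats the
    original, the original program is kept, so the speedup is 1.0.
    """
    best = 1.0
    for runtime in candidate_runtimes[:k]:
        if runtime is not None and runtime > 0:
            best = max(best, old_runtime / runtime)
    return best


def opt_at_k(programs, k: int, threshold: float = 1.1) -> float:
    """Fraction of programs whose top-k speedup reaches the (assumed) threshold."""
    hits = [top_k_speedup(old, cands, k) >= threshold for old, cands in programs]
    return sum(hits) / len(hits)


if __name__ == "__main__":
    # Toy data: (original runtime, runtimes of ranked candidates; None = wrong).
    programs = [
        (2.0, [1.0, None, 0.5]),
        (1.0, [None, 1.2]),
        (3.0, [2.9]),
    ]
    for k in (1, 5):
        speedups = [top_k_speedup(old, cands, k) for old, cands in programs]
        print(f"k={k}: OPT@k={opt_at_k(programs, k):.2f}, mean speedup={mean(speedups):.2f}")
```

Raising k can only keep or improve each program's best speedup, which is why top-5 figures are typically higher than top-1 figures for the same method.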