"Self-supervised Quantized Representation for Seamlessly Integrating Knowledge Graphs with LLMs"

Below podcast on this paper is generated with Google's Illuminate.

https://arxiv.org/abs/2501.18119

The challenge lies in bridging the gap between structured Knowledge Graphs and the sequential nature of LLMs.

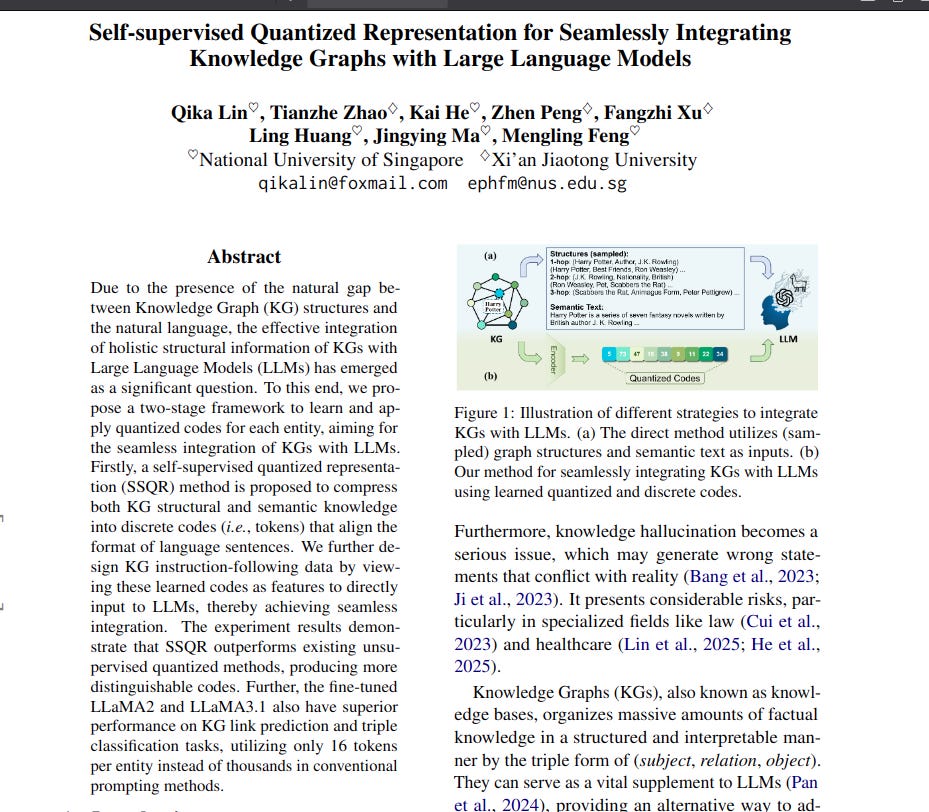

This paper introduces Self-Supervised Quantized Representation to address this. It compresses KG structural and semantic information into discrete codes, making KGs seamlessly integrable with LLMs via instruction data.

-----

📌 Knowledge Graphs are tokenized via Self-Supervised Quantized Representation. This method bridges graph-sequence gap for LLMs. LLM vocabulary expansion enables direct Knowledge Graph integration.

📌 Instruction tuning is crucial. Quantized codes enable direct LLM use for Knowledge Graph tasks like link prediction. This approach reuses LLM architecture effectively.

📌 Self-Supervised Quantized Representation uses only 16 tokens per entity, drastically reducing input size. Fine-tuned LLMs achieve superior Knowledge Graph task performance. This demonstrates efficiency and effectiveness.

----------

Methods Explored in this Paper 🔧:

→ The paper proposes Self-Supervised Quantized Representation method called SSQR.

→ SSQR utilizes a Graph Convolutional Network to capture KG structural information.

→ Vector Quantization is employed to convert entity embeddings into discrete codes.

→ Semantic knowledge is incorporated by aligning entity codes with text embeddings from a pre-trained LLM.

→ The model is trained using a combination of quantization loss, structure reconstruction loss, and semantic distilling loss.

-----

Key Insights 💡:

→ SSQR learns compressed and discrete codes representing both KG structure and semantics.

→ These codes, acting as tokens, enable direct input into LLMs after vocabulary expansion.

→ Instruction-following data is designed to leverage these codes for KG tasks within LLMs.

-----

Results 📊:

→ SSQR outperforms unsupervised quantization methods on KG link prediction tasks.

→ Fine-tuned LLaMA2 with SSQR achieves superior performance compared to existing KG embedding and LLM-based methods. For example, on WN18RR, it achieves 0.591 MRR, outperforming MA-GNN's 0.565 MRR.

→ On FB15k-237, SSQR-LLaMA2 reaches 0.449 MRR, significantly higher than AdaProp's 0.417 MRR.

→ In triple classification on FB15k-237N, SSQR-LLaMA2 achieves 0.794 accuracy, exceeding KoPA's 0.777 accuracy.