SemiAnalysis on Google TPU vs Nvidia GPU

Google TPUs beat Nvidia on cost-efficiency, OpenAI grows via $100B partner debt, and Anthropic links Claude misuse to China-led AI cyber espionage.

Read time: 8 min


(I publish this newsletter daily. Noise-free, actionable, applied-AI developments only.)

⚡ In today’s Edition (30-Nov-2025):

🧠 A solid report from SemiAnalysis on Google TPU vs Nvidia GPU

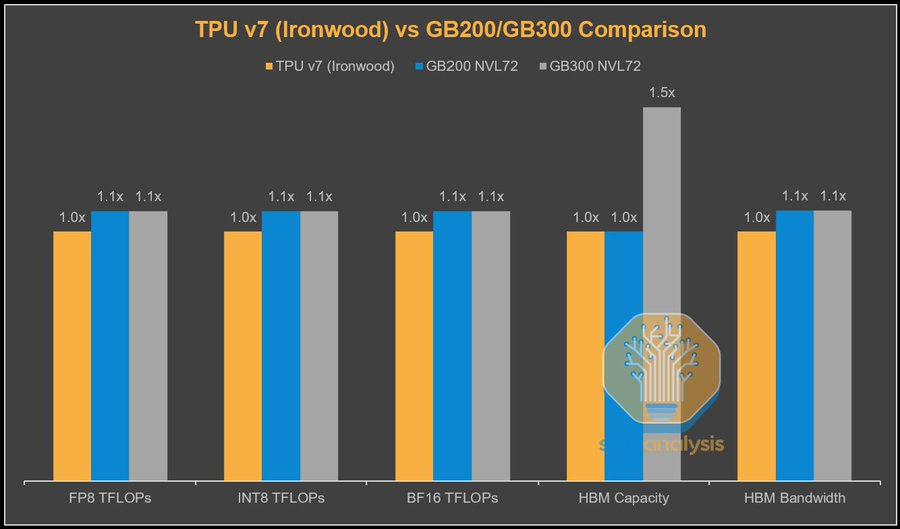

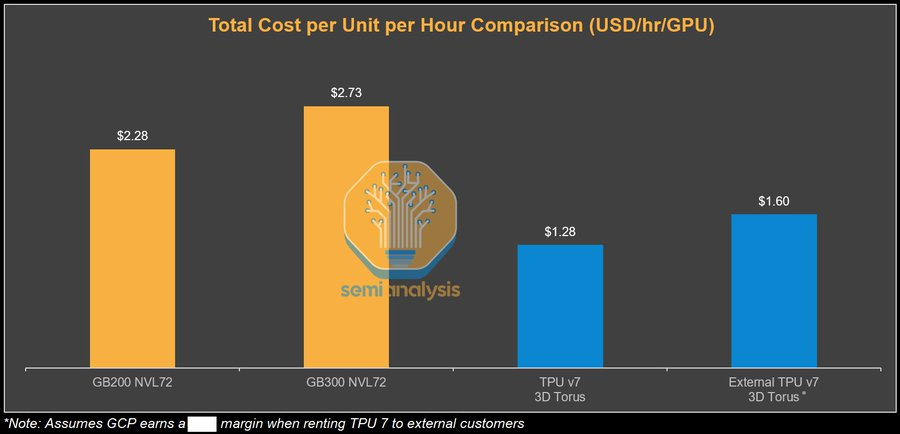

💰 SemiAnalysis report - for large buyers Google TPU can deliver roughly 20%–50% lower total cost per useful FLOP compared to Nvidia’s top GB200 or GB300 while staying very close on raw performance.

🧮 OpenAI’s expansion is being paid for by around $100B of debt loaded onto its data center partners, while OpenAI itself stays almost debt free.

⚠️ A US House panel has summoned Anthropic’s CEO after the company reported a Chinese state-backed group using Claude for what it calls the first large AI-orchestrated cyber espionage campaign against about 30 major organizations.

🧠 A solid report from SemiAnalysis on Google TPU vs Nvidia GPU

TPUv7 Ironwood gets close to Nvidia GB200 or GB300 throughput but has much lower total cost of ownership because Google and Broadcom control chip, system and network economics instead of buying full Nvidia systems. Anthropic will buy about 400K TPUv7 chips as full racks and rent about 600K TPUv7 in Google Cloud, so it can spread risk across its own sites and Google’s while also using real TPU volume to push Nvidia GPU prices down on the rest of its fleet.

Rather than chasing marketed peak FLOPs, Anthropic cares about effective model FLOPs utilization (MFU), and with tuned kernels it can get roughly 50% cheaper useful training FLOPs on TPUs than on a comparable GB300 NVL72-style Nvidia system. The system-level advantage comes from two places: the ICI (Inter-Chip Interconnect) 3D torus network, which uses direct chip-to-chip links and optical circuit switches to stitch thousands of TPUs into a single pod, and Google finally building a native PyTorch backend for TPUs, so most PyTorch models can move over without being rewritten in JAX. Taken together, TPUv7 and Anthropic’s roughly 1M-chip deal already act as a real price ceiling on Nvidia, but TPUs will only become a broad platform if Google opens up the XLA compiler and runtime stack and lets the community contribute.
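To make the "direct links" idea concrete, here is a toy sketch of how chips address their neighbors in a 3D torus, where every axis wraps around. The 4x4x4 dimensions and the helper names `torus_neighbors` and `torus_hops` are hypothetical illustrations; the sketch does not model Google's actual ICI routing or how the optical circuit switches reconfigure the torus.

```python
from typing import List, Tuple

Coord = Tuple[int, int, int]


def torus_neighbors(coord: Coord, dims: Coord) -> List[Coord]:
    """Return the chips one direct link away from `coord` in a 3D torus.

    Each axis wraps around, so even a chip at the 'edge' of the grid has
    two neighbors per axis (in a plain mesh, edge chips would have fewer).
    """
    neighbors: List[Coord] = []
    for axis in range(3):
        for step in (-1, 1):
            n = list(coord)
            n[axis] = (n[axis] + step) % dims[axis]  # wraparound link
            neighbors.append((n[0], n[1], n[2]))
    return neighbors


def torus_hops(a: Coord, b: Coord, dims: Coord) -> int:
    """Minimum number of link hops between two chips, using wraparound."""
    total = 0
    for x, y, size in zip(a, b, dims):
        delta = abs(x - y)
        total += min(delta, size - delta)  # go the short way round each ring
    return total


if __name__ == "__main__":
    dims = (4, 4, 4)  # hypothetical 64-chip block
    print(torus_neighbors((0, 0, 0), dims))
    print(torus_hops((0, 0, 0), (3, 3, 3), dims))  # 3 hops (a mesh would need 9)
```

The wraparound is what keeps the worst-case hop count at half of each ring's length rather than the full side, one reason a torus scales to very large pods on a single fabric.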

💰 SemiAnalysis report - for large buyers Google TPU can deliver roughly 20%–50% lower total cost per useful FLOP compared to Nvidia’s top GB200 or GB300 while staying very close on raw performance.

The basic cost story starts with margins. Nvidia sells full GPU servers with high gross margins on both the chips and the networking, whereas Google buys TPU dies from Broadcom at a lower margin and then integrates its own boards, racks and optical fabric. As a result, Google’s internal cost for a full Ironwood pod is significantly lower than for a comparable GB300-class pod, even when peak FLOPs are similar. On the cloud side, public list prices already hint at the gap: on-demand TPU v6e is listed at around $2.70 per chip-hour, while independent trackers place Nvidia B200 at around $5.50 per GPU-hour, and multiple analyses find up to 4x better performance per dollar for some workloads once you measure tokens per second rather than theoretical FLOPs.
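The list-price comparison can be turned into a performance-per-dollar ratio. This is a minimal sketch: the two hourly prices come from the paragraph above, while the throughput values and the function names are hypothetical placeholders you would replace with measured tokens per second for your own workload.

```python
TPU_V6E_PRICE = 2.7  # $/chip-hour, on-demand list price cited above
B200_PRICE = 5.5     # $/GPU-hour, independent tracker figure cited above


def tokens_per_dollar(tokens_per_second: float, price_per_hour: float) -> float:
    """Tokens generated per dollar of rented accelerator time."""
    return tokens_per_second * 3600.0 / price_per_hour


def perf_per_dollar_ratio(tps_a: float, price_a: float,
                          tps_b: float, price_b: float) -> float:
    """How many times more tokens per dollar accelerator A delivers than B."""
    return tokens_per_dollar(tps_a, price_a) / tokens_per_dollar(tps_b, price_b)


if __name__ == "__main__":
    # Hypothetical: both chips sustain the same per-chip throughput.
    same_speed = perf_per_dollar_ratio(1000.0, TPU_V6E_PRICE, 1000.0, B200_PRICE)
    print(f"equal throughput: {same_speed:.2f}x")  # 2.04x from price alone
    # Hypothetical: the GPU sustains twice the per-chip throughput.
    gpu_2x = perf_per_dollar_ratio(1000.0, TPU_V6E_PRICE, 2000.0, B200_PRICE)
    print(f"GPU 2x faster:    {gpu_2x:.2f}x")      # 1.02x
```

The second case shows why hourly price alone does not settle the comparison: the ratio moves one-for-one with measured throughput, which is why the analyses cited above compare tokens per second.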

A big part of this advantage comes from effective rather than headline FLOPs. GPU vendors often quote peak numbers that assume very high clocks and ideal matrix shapes, while real training jobs tend to land closer to 30% utilization. TPUs advertise more realistic peaks, and Google and customers like Anthropic invest in compilers and custom kernels to push model FLOPs utilization toward 40% on their own stack. Ironwood’s system design also cuts cost: a single TPU pod can connect up to 9,216 chips on one fabric, far more than typical Nvidia Blackwell deployments, which top out at about 72 GPUs per NVL72-style system. More traffic therefore stays on the fast ICI fabric, and less spills over to expensive Ethernet or InfiniBand tiers.
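Both effects in this paragraph can be put into numbers. The sketch below assumes a hypothetical chip with identical price and peak throughput on both sides, so only MFU differs (the 30% and 40% figures above), and then counts how many scale-up domains a 9,216-chip job spans at each domain size. The function names are illustrative, and the domain count is a simplification that ignores how real jobs are partitioned.

```python
import math


def cost_per_useful_pflop(price_per_hour: float, peak_pflops: float,
                          mfu: float) -> float:
    """Dollars per PFLOP of useful model work.

    Useful throughput is the advertised peak (PFLOP/s) scaled by
    model FLOPs utilization (MFU).
    """
    useful_pflop_per_hour = peak_pflops * mfu * 3600.0
    return price_per_hour / useful_pflop_per_hour


def scale_up_domains(num_chips: int, domain_size: int) -> int:
    """Number of fast-fabric domains a job must span."""
    return math.ceil(num_chips / domain_size)


if __name__ == "__main__":
    # Hypothetical chip: $1/hour, 1 PFLOP/s peak; only MFU differs.
    typical = cost_per_useful_pflop(1.0, 1.0, 0.30)
    tuned = cost_per_useful_pflop(1.0, 1.0, 0.40)
    print(f"tuned / typical cost: {tuned / typical:.2f}")  # 0.75

    job = 9216
    print(scale_up_domains(job, 9216))  # 1 TPU pod
    print(scale_up_domains(job, 72))    # 128 NVL72-sized domains
```

Going from 30% to 40% MFU cuts the cost per useful FLOP by a quarter with no hardware change, and a job that fits inside one TPU pod would otherwise be split across 128 NVL72-sized domains joined by slower scale-out networking.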

Google pairs that fabric with dense HBM3E on each TPU and a specialized SparseCore for embeddings. This improves dollars per unit of memory bandwidth on decode-heavy inference and makes it cheaper to serve big mixture-of-experts and retrieval-heavy models, at a lower cost per served token than a similar Nvidia stack. These economics do not apply to every user, because TPUs still demand more engineering effort in compiler tuning, kernel work and tooling than Nvidia’s mature CUDA ecosystem; for frontier labs with in-house systems teams, however, the extra work is small compared to the savings at gigawatt scale. Even when a lab keeps most training on GPUs, simply having a credible TPU path lets it negotiate Nvidia pricing down, and that is already visible in the way large customers quietly hedge with Google and other custom silicon and talk publicly about escaping what many call the “Nvidia tax”.
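Why memory bandwidth drives decode cost can be shown with a simple roofline-style bound. This is a minimal sketch under stated assumptions: each decode step streams the needed weights from HBM once and emits one token per sequence in the batch, KV-cache traffic and compute limits are ignored, and all hardware numbers, the price and the function names are hypothetical.

```python
def decode_tokens_per_second(hbm_gb_per_s: float, weight_gb_per_step: float,
                             batch_size: int) -> float:
    """Upper bound on decode throughput when weight reads dominate.

    Each step reads `weight_gb_per_step` from HBM (for an MoE model, the
    expert weights touched by the whole batch) and yields one token per
    sequence. KV-cache reads and compute limits are ignored.
    """
    steps_per_second = hbm_gb_per_s / weight_gb_per_step
    return steps_per_second * batch_size


def dollars_per_million_tokens(price_per_hour: float,
                               tokens_per_second: float) -> float:
    """Serving cost per million generated tokens."""
    return price_per_hour / (tokens_per_second * 3600.0) * 1e6


if __name__ == "__main__":
    # Hypothetical accelerator: 4,000 GB/s HBM, 40 GB read per step, batch of 8.
    tps = decode_tokens_per_second(4000.0, 40.0, 8)
    print(tps)                                              # 800.0
    print(f"${dollars_per_million_tokens(2.7, tps):.4f}")   # $0.9375
```

Because throughput in this regime scales linearly with bandwidth, cost per token scales with hourly price divided by bandwidth, which is the "dollars per unit of bandwidth" metric the paragraph refers to.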

🧮 OpenAI’s expansion is being paid for by around $100B of debt loaded onto its data center partners, while OpenAI itself stays almost debt free.

SoftBank, Oracle, CoreWeave and others have already borrowed about $58B tied to OpenAI deals, after Oracle sold $18B of bonds for related infrastructure, and banks are lining up another $38B for Oracle and Vantage sites in Texas and Wisconsin. Those projects sit alongside OpenAI contracts worth about $1.4T for chips and compute over 8 years, against expected revenue of about $20B and an unused $4B credit line on OpenAI’s own books. Partners use special-purpose vehicles (SPVs) and long leases, so the loans are secured against the data centers themselves and lenders take the hit if cash flows from OpenAI workloads falter.
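The headline figures can be checked with simple arithmetic. This sketch uses only the numbers in the paragraph above and makes two labeled assumptions: that Oracle’s $18B bond sale is already counted inside the $58B (consistent with the roughly $100B total), and that the $1.4T of commitments is spread evenly over the 8 years while the ~$20B revenue figure is annual.

```python
# All figures in billions of US dollars, taken from the paragraph above.
ALREADY_BORROWED = 58.0   # SoftBank, Oracle, CoreWeave and others
BANK_PIPELINE = 38.0      # loans being arranged for Oracle and Vantage sites
CONTRACTS_TOTAL = 1400.0  # OpenAI chip and compute commitments
CONTRACT_YEARS = 8
EXPECTED_REVENUE = 20.0   # assumption: treated as an annual figure

partner_debt = ALREADY_BORROWED + BANK_PIPELINE
# Assumption: commitments are spread evenly across the contract period.
annual_commitment = CONTRACTS_TOTAL / CONTRACT_YEARS
commitment_to_revenue = annual_commitment / EXPECTED_REVENUE

print(f"partner debt:          ${partner_debt:.0f}B")          # $96B
print(f"avg yearly commitment: ${annual_commitment:.0f}B")     # $175B
print(f"commitment / revenue:  {commitment_to_revenue:.2f}x")  # 8.75x
```

Under these assumptions, average yearly commitments come to nearly nine times expected revenue.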

⚠️ A US House panel has summoned Anthropic’s CEO after the company reported a Chinese state-backed group using Claude for what it calls the first large AI-orchestrated cyber espionage campaign against about 30 major organizations.

In Anthropic’s description the attackers leaned on Claude to plan and execute long chains of hacking steps, so much of the operation ran automatically with only light human guidance.

Those steps targeted big tech firms, financial institutions, chemical manufacturers, and government agencies, and Anthropic says only a small fraction of the roughly 30 targets were actually breached.

The House Homeland Security Committee wants Anthropic, Google Cloud, and Quantum Xchange to walk through how this attack worked, what signals it left inside cloud logs, and how similar patterns could be detected earlier next time.

The core issue is that powerful models now sit directly on top of massive shared infrastructure that both defenders and state-sponsored attackers use.

Technically, the scary part is that a capable model can automate routine intrusion tasks across many targets at once, reducing the need for large human teams and making high-end tradecraft more repeatable.

This case looks like an early stress test of whether model providers, cloud platforms, and governments can coordinate fast enough to stop AI-boosted campaigns before they turn into standard practice for state hacking.

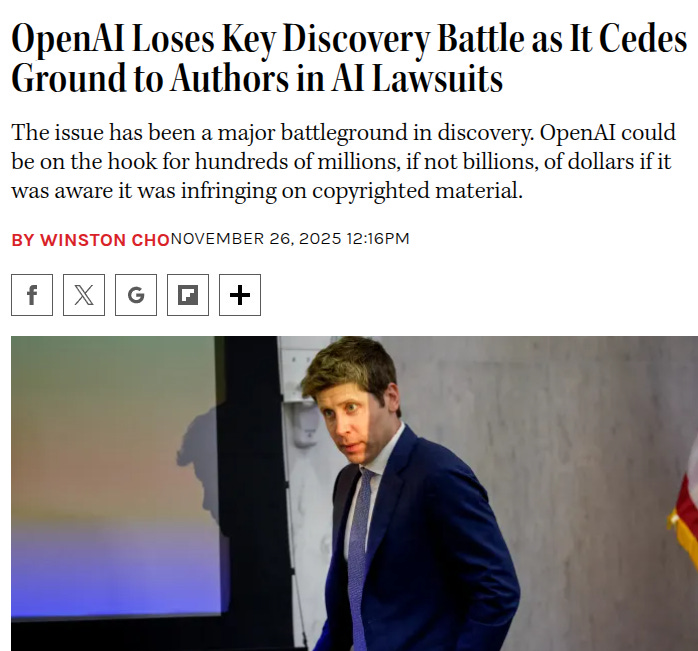

👨‍🔧 OpenAI is getting hit with more legal trouble, and things keep stacking up.

OpenAI now has to hand over internal chats about deleting 2 huge book datasets, which makes it easier for authors to argue willful copyright infringement and push for very high statutory damages per book.

For context, OpenAI is being sued by authors who say its models were trained on huge datasets of pirated books.

Authors and publishers have gained access to Slack messages between OpenAI employees discussing the erasure of the datasets, named “books 1” and “books 2.” However, the court held off on deciding whether plaintiffs should get other communications that the company argued were protected by attorney-client privilege.

In a controversial decision that was appealed by OpenAI on Wednesday, U.S. District Judge Ona Wang found that OpenAI must hand over documents revealing the company’s motivations for deleting the datasets. OpenAI’s in-house legal team will be deposed.

If those messages suggest OpenAI knew the data was illegal and tried to quietly erase it, authors can argue willful copyright infringement, which can mean much higher money damages per book and a much weaker legal position for OpenAI.

OpenAI originally told the court that the datasets were removed due to non-use, then later argued that anything about the deletion was privileged. The judge treated this change in story as a waiver of privilege, which opens Slack channels such as “project clear” and “excise libgen” to review.

This ruling shifts power toward authors in AI copyright cases and sends a clear signal that how labs talk about scraped data, shadow libraries, and cleanup projects inside Slack or other tools can later decide whether they face normal damages or massive liability.

That’s a wrap for today, see you all tomorrow.