🥉 Sesame Announces Hyper-Realistic Voice AI

GPT-4.5 leads all LLM benchmarks, Sesame pushes voice realism, DeepSeek cracks inference profits, Anthropic raises $3.5B, EA open-sources C&C, plus LLM cost control and Claude API strategies.

Read time: 9 min 32 seconds

📚 Browse past editions here.

(I write daily for my AI-pro audience. Noise-free, actionable, applied-AI developments only.)

⚡In today’s Edition (3-March-2025):

🥉 Sesame Announces Hyper-Realistic Voice AI

🏆 GPT-4.5 Tops lmsys Arena Leaderboard Across All Categories

📡 DeepSeek revealed its inference optimization method that generates 545% profit margins

🗞️ Byte-Size Briefs:

Perplexity introduces GPT-4.5 with 10 free queries for Pro users.

EA open-sources Command & Conquer code under GPL on GitHub.

Anthropic raises $3.5B, boosting compute, AI research, and global growth.

🍟 Tips and Tricks: LLMs in Production: Some points to note for managing ballooning costs, curbing hallucinations, and handling specialized data.

🧑🎓 Tutorial: Building with “extended thinking” in Anthropic Claude API

🥉 Sesame Announces Hyper-Realistic Voice AI

🎯 The Brief

Sesame, founded by Oculus co-founder Brendan Iribe, introduced an AI voice system trained on 1 million hours of audio, delivering uncanny human-like speech. Users reported startling realism, signaling a major leap in voice interfaces.

⚙️ The Details

Sesame’s model features emotional intelligence, contextual awareness, and a consistent personality. It adapts its tone and rhythm based on a conversation’s mood, producing fluid, human-like responses.

The technology includes three variants: Tiny (1B backbone, 100M decoder), Small (3B backbone, 250M decoder), and Medium (8B backbone, 300M decoder). Each provides different performance levels while retaining the core “voice presence” concept.

Two demo personas, Maya and Miles, are publicly available. Sesame plans to open-source core components under Apache 2.0. English is currently the sole supported language, but the company aims to expand to 20+ languages eventually.

The team is also developing lightweight AI glasses that layer real-time voice assistance into everyday life. This has potential for call centers, content creation, and real-time language practice, though specialized contexts like singing remain challenging.

Why it’s so important: After years of underwhelming voice assistants, users now experience a huge jump in realism and context. With Hume, Alexa+, and Sesame pushing boundaries, advanced voice interfaces are finally emerging as a compelling upgrade.

🏆 GPT-4.5 Tops lmsys Arena Leaderboard Across All Categories

🎯 The Brief

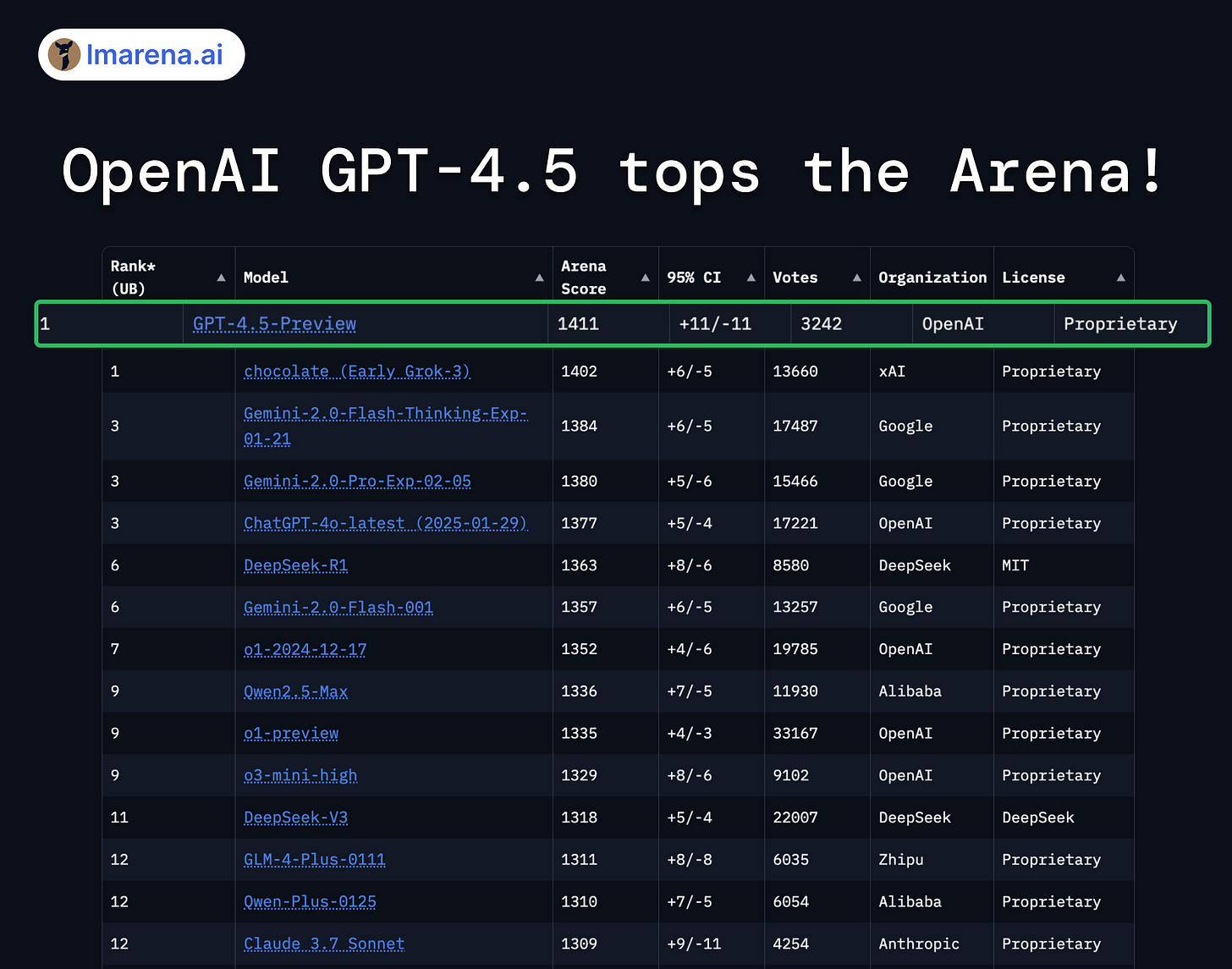

OpenAI’s GPT-4.5 secures the top rank on Arena’s leaderboard with 3k votes. It outperforms every other entry in all categories and dominates Style Control and Multi-Turn.

⚙️ The Details

• It achieved first place in Multi-Turn, Hard Prompts, Coding, Math, Creative Writing, Instruction Following, and Longer Query. Its performance in Multi-Turn tasks stood out, reflecting its adaptive conversation handling. It surpassed all competitors in both technical and stylistic tasks.

• GPT-4.5 also claimed a unique lead in Style Control, showcasing refined output customization. The consistently high rankings across various categories underscore its broad capabilities.

• However, one major limitation of GPT-4.5 is its high operational cost: at $75 per million input tokens, it is about 30 times more expensive than GPT-4o ($2.50 per million input tokens). While the larger model brings improved conversational capabilities and fewer factual errors, the high cost raises concerns about its accessibility and long-term viability.

📡 DeepSeek revealed its inference optimization method that generates 545% profit margins

DeepSeek shared cost and throughput data for their V3/R1 inference system, harnessing large-scale Expert Parallelism for higher throughput and lower latency while yielding a 545% profit margin. And all this while being cheaper than competitors.

A diagram in their official GitHub announcement repo illustrates how DeepSeek interleaves computation (orange) and communication (green) across two microbatches. One microbatch’s compute phase coincides with the other’s transmission phase, keeping GPUs fully active.

The transmission phase is when the system sends or receives intermediate data (e.g., token embeddings, router outputs) across GPUs or nodes. During this interval, the GPU isn’t crunching math but rather passing information so that the next stage or microbatch can proceed.

This overlapping strategy hides network delays behind ongoing computations, yielding higher throughput. With minimal idle time, the cost per token drops because each GPU produces more results in the same time window. It’s critical for achieving super low inference cost by leveraging every GPU cycle without waiting on data transfers.

In DeepSeek’s own words, the optimization objectives of serving DeepSeek-V3/R1 inference are higher throughput and lower latency, and “to optimize these two objectives, our solution employs cross-node Expert Parallelism (EP).”

Expert Parallelism (EP) splits a model’s “experts” across multiple GPUs so that each GPU only handles a subset of experts. The system routes requests to the specific experts needed per token. This reduces per-GPU memory demands, scales batch sizes, and improves throughput while keeping latency low.
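To make the routing idea concrete, here is a toy sketch, not DeepSeek's implementation. The expert count, GPU count, contiguous sharding rule, and top-2 routing are all assumptions chosen for illustration, as are the function names.

```python
from collections import Counter

NUM_EXPERTS = 8  # hypothetical; production MoE models use far more experts
NUM_GPUS = 4     # hypothetical cluster size


def gpu_for_expert(expert_id: int) -> int:
    """Contiguous sharding: experts 0-1 live on GPU 0, experts 2-3 on GPU 1, and so on."""
    experts_per_gpu = NUM_EXPERTS // NUM_GPUS
    return expert_id // experts_per_gpu


def top_k_experts(scores: list[float], k: int = 2) -> list[int]:
    """Indices of the k highest router scores for one token."""
    return sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]


def dispatch(router_scores: list[list[float]], k: int = 2) -> Counter:
    """Count how many (token, expert) assignments land on each GPU."""
    load = Counter()
    for scores in router_scores:
        for expert in top_k_experts(scores, k):
            load[gpu_for_expert(expert)] += 1
    return load


if __name__ == "__main__":
    scores = [
        [0.9, 0.1, 0.0, 0.0, 0.0, 0.0, 0.0, 0.8],  # token 0 prefers experts 0 and 7
        [0.0, 0.0, 0.5, 0.6, 0.0, 0.0, 0.0, 0.0],  # token 1 prefers experts 3 and 2
    ]
    print(dispatch(scores))
```

Each GPU only needs the weights for its own experts, which is where the memory saving comes from. The per-GPU counts also show why uneven routing becomes a load-balancing problem.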

⚙️ Overall Architecture

Expert Parallelism (EP) increases the number of tokens processed in one go (larger batch size) so each GPU can keep its matrix units busy.

Each GPU handles fewer “experts” from the model, which reduces how much data a single GPU must hold in memory, helping respond faster.

Because EP spreads the workload across multiple machines, Data Parallelism (DP) is also needed, where each machine holds a copy of the model’s parameters.

Balancing dispatch (the process of routing tokens to the right experts) and core-attention (the main attention blocks in the transformer) is key to avoid any single GPU becoming a bottleneck.

🔄 Overlapping Computation

Cross-node Expert Parallelism (EP) requires sending data between machines. During prefill, DeepSeek divides a batch into two smaller batches (microbatches). Prefill is the stage where the model processes the input context tokens (the prompt) before producing any output tokens. It sets up the internal states needed for fast token generation later.

While the GPU processes one microbatch, it sends or receives data for the other. This hiding of communication behind computation keeps the GPU busy at all times. During decode, DeepSeek uses a 5-stage pipeline to further overlap these tasks, so data transfer happens in parallel with processing. This way, little GPU time is wasted waiting for messages.
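A rough way to see the benefit of the two-microbatch overlap is a tiny timeline simulation. This is a simplified model under stated assumptions (one compute unit, one network link, equal-cost stages, a greedy scheduler), not DeepSeek's actual scheduler or its 5-stage decode pipeline. All names are illustrative.

```python
def serial_time(stages: int, compute: float, comm: float) -> float:
    """Two microbatches, no overlap: every compute and transfer runs back to back."""
    return 2 * stages * (compute + comm)


def overlapped_time(stages: int, compute: float, comm: float) -> float:
    """Two microbatches sharing one compute unit and one network link."""
    free_at = {"compute": 0.0, "comm": 0.0}  # when each resource is next available
    ready = [0.0, 0.0]                       # when each microbatch finished its last task
    step = [0, 0]                            # index of the next task per microbatch
    total_tasks = 2 * stages                 # compute, comm, compute, comm, ...

    def kind(mb: int) -> str:
        return "compute" if step[mb] % 2 == 0 else "comm"

    def earliest_start(mb: int) -> float:
        return max(ready[mb], free_at[kind(mb)])

    while min(step) < total_tasks:
        pending = [mb for mb in (0, 1) if step[mb] < total_tasks]
        mb = min(pending, key=earliest_start)  # greedy: run whichever can start first
        task = kind(mb)
        end = earliest_start(mb) + (compute if task == "compute" else comm)
        free_at[task] = end
        ready[mb] = end
        step[mb] += 1
    return max(ready)


if __name__ == "__main__":
    print("serial:    ", serial_time(2, 1.0, 1.0))
    print("overlapped:", overlapped_time(2, 1.0, 1.0))
```

In this toy setup, the overlapped schedule finishes sooner because one microbatch's transfer runs while the other is computing.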

⚖️ Load Balancing

Different requests have varying lengths, and some “experts” get hit more often than others. Without balancing, certain GPUs would do too much work and slow everything down. DeepSeek uses separate load balancers for prefill, decode, and expert placement. These balancers shuffle tasks or adjust how requests are routed, ensuring every GPU handles a fair share of the workload. This prevents bottlenecks and keeps the entire system running efficiently.
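The text does not describe DeepSeek's balancers in detail, so the sketch below swaps in a generic greedy heuristic (heaviest item to the least-loaded GPU) to illustrate the expert-placement idea. The expert names and load numbers are made up.

```python
import heapq


def place_experts(expert_load: dict[str, int], num_gpus: int) -> list[list[str]]:
    """Greedy placement: assign the heaviest remaining expert to the least-loaded GPU."""
    heap = [(0, gpu) for gpu in range(num_gpus)]  # (current load, gpu index)
    heapq.heapify(heap)
    placement: list[list[str]] = [[] for _ in range(num_gpus)]
    by_load = sorted(expert_load.items(), key=lambda kv: kv[1], reverse=True)
    for expert, load in by_load:
        gpu_load, gpu = heapq.heappop(heap)
        placement[gpu].append(expert)
        heapq.heappush(heap, (gpu_load + load, gpu))
    return placement


if __name__ == "__main__":
    # Hypothetical tokens-per-second observed for each expert.
    observed = {"e0": 90, "e1": 10, "e2": 50, "e3": 40, "e4": 10}
    for gpu, experts in enumerate(place_experts(observed, num_gpus=2)):
        print(gpu, experts, sum(observed[e] for e in experts))
```

A real system would re-run placement as traffic shifts and would also balance request lengths across prefill and decode instances, which this sketch ignores.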

💰 Costs and Profit

DeepSeek uses H800 GPUs at $2/hour each. Over 24 hours, it used an average of 1,814 GPUs (226.75 nodes) for $87,072 total cost. During this window, 608B input tokens and 168B output tokens yielded a theoretical $562,027 revenue, netting a 545% margin if fully billed at R1 rates. In practice, actual revenue is lower due to discounted/free usage. Even so, DeepSeek indicates profitable operations, with surplus funds reinvested in R&D.
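The arithmetic behind these figures is easy to check. The snippet below uses only the numbers quoted above. The 8-GPUs-per-node value is inferred from 1,814 GPUs over 226.75 nodes, and the margin is computed as profit divided by cost.

```python
GPU_HOURLY_COST = 2.0            # USD per H800 per hour
AVG_NODES = 226.75               # average node occupancy over the 24-hour window
GPUS_PER_NODE = 8                # inferred: 1,814 GPUs / 226.75 nodes
HOURS = 24
THEORETICAL_REVENUE = 562_027    # USD, if every token were billed at R1 rates

avg_gpus = AVG_NODES * GPUS_PER_NODE
daily_cost = avg_gpus * HOURS * GPU_HOURLY_COST
profit = THEORETICAL_REVENUE - daily_cost
margin_pct = profit / daily_cost * 100

print(f"average GPUs: {avg_gpus:.0f}")
print(f"daily cost:   ${daily_cost:,.0f}")
print(f"margin:       {margin_pct:.0f}%")
```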

They have at least 10,000 H800s yet deployed fewer than 2,224 for inference, suggesting strong scaling capacity and strategic resource management.

🍟 Tips and Tricks: LLMs in Production: Some points to note for managing ballooning costs, curbing hallucinations, and handling specialized data.

In production, repeated queries can appear 100x/day, and efficient gating reduces expensive usage by about 90%.

💰 Cost Controls

Cache repeated requests. Use cheaper classifiers for straightforward tasks. A small BERT classifier quickly handles simpler queries, sparing your expensive LLM from trivial requests. This offloads most traffic to a cheaper model, saving cost and preserving capacity for complex questions.

Quantize models or apply parameter-efficient fine-tuning. Asynchronous caching pre-builds common answers.
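Here is a minimal sketch of the cache-plus-gating pattern. The `is_simple` heuristic stands in for a small classifier such as a fine-tuned BERT, and `cheap_model` and `expensive_llm` are placeholders for real model calls. None of these names come from a real library.

```python
from collections import Counter
from functools import lru_cache

calls = Counter()  # tracks how often each backend is actually invoked


def is_simple(query: str) -> bool:
    """Placeholder gate: a real system would call a small trained classifier here."""
    return len(query.split()) <= 6


def cheap_model(query: str) -> str:
    return f"[cheap] {query}"


def expensive_llm(query: str) -> str:
    return f"[llm] {query}"


@lru_cache(maxsize=10_000)
def answer(query: str) -> str:
    """Serve repeats from the cache; route the rest to the cheapest adequate backend."""
    if is_simple(query):
        calls["cheap"] += 1
        return cheap_model(query)
    calls["llm"] += 1
    return expensive_llm(query)


if __name__ == "__main__":
    for q in ["reset password", "reset password",
              "compare our Q3 churn drivers across regions and suggest three fixes"]:
        print(answer(q))
    print(dict(calls))
```

The in-process `lru_cache` keeps the example short. In production a shared cache with expiry and query normalization would be the more likely choice.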

⚠️ Hallucination Risks

LLMs can generate convincing but incorrect responses. Retrieval-augmented generation (RAG) and validation checks help ensure factual outputs.

🔍 Discriminative Power

Use labels generated by a large LLM to train a smaller discriminative model, cutting inference overhead.

🔧 Domain Adaptation

Fine-tune on specialized data with methods like LoRA or Adapters. Generate synthetic data to boost coverage.

⚙️ Prompt Iterations

Treat prompts like features. Maintain versions, run A/B tests, and optimize through bandit approaches.

🗞️ Byte-Size Briefs

Perplexity is bringing GPT-4.5 to all Pro users, with 10 queries per day.

Electronic Arts (EA) open-sources code for four Command & Conquer games under the GPL license. The original Command & Conquer (since subtitled Tiberian Dawn) is joined by Red Alert, Renegade, and Generals, the code for all of which can now be found on EA’s GitHub page.

Anthropic raises $3.5B in a Series E at a $61.5B post-money valuation. The funding expands compute capacity and drives next-gen AI research and global growth, bringing the company’s total raised to $18.2B. Revenue run rate reached around $1B, up 30% this year, but $3B in spending reflects its heavy development push. Amazon has invested $4B, collaborating on AI chip optimization and next-level Alexa capabilities. New offices abroad and high-profile hires reinforce Anthropic’s push for robust commercial traction.

🧑🎓 Tutorial: Building with “extended thinking” in Anthropic Claude API

Anthropic introduced extended thinking in Claude 3.7 Sonnet, enabling step-by-step internal reasoning up to 128K tokens for complex tasks and partial transparency.

♾ Extended Thinking Mechanics

Claude now splits responses into thinking blocks (internal reasoning) and text blocks (final output). A budget_tokens parameter (minimum 1,024, can exceed 32K) limits how many tokens Claude can use internally. This increases clarity for high-complexity tasks.

In multi-turn conversations, Claude only sees thinking blocks from the most recent assistant turn when it continues the conversation. Thinking blocks from earlier assistant turns are ignored and not billed. This means only the final turn’s thinking blocks (from a tool use session or assistant turn at the end) are counted and visible, while older thinking blocks stay hidden and don’t affect token costs.

🦺 Redacted Thinking

Claude sometimes encrypts part of its reasoning into redacted_thinking blocks. These encrypted segments preserve continuity for multi-turn conversations. Passing them back to the API unaltered is critical for consistent results.
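In practice, "unaltered" means appending the assistant's content blocks to the conversation exactly as they came back. The sketch below works on plain dicts. The block field names (`thinking`, `signature`, `data`) reflect my understanding of the Messages API and should be checked against the current docs. The helper name and placeholder values are hypothetical.

```python
import copy


def continue_conversation(messages: list[dict], assistant_blocks: list[dict],
                          next_user_content) -> list[dict]:
    """Return a new message list with the assistant turn passed back verbatim.

    No block is filtered, reordered, or edited, so thinking and
    redacted_thinking blocks reach the API exactly as received.
    """
    return messages + [
        {"role": "assistant", "content": copy.deepcopy(assistant_blocks)},
        {"role": "user", "content": next_user_content},
    ]


if __name__ == "__main__":
    history = [{"role": "user", "content": "What is the weather in Paris?"}]
    # Placeholder blocks shaped like an assistant turn that used extended thinking.
    received = [
        {"type": "thinking", "thinking": "<reasoning text>", "signature": "<opaque>"},
        {"type": "redacted_thinking", "data": "<encrypted payload>"},
        {"type": "text", "text": "Let me check that for you."},
    ]
    updated = continue_conversation(history, received, "Thanks, and tomorrow?")
    print([block["type"] for block in updated[1]["content"]])
```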

🏷 Implementation Details

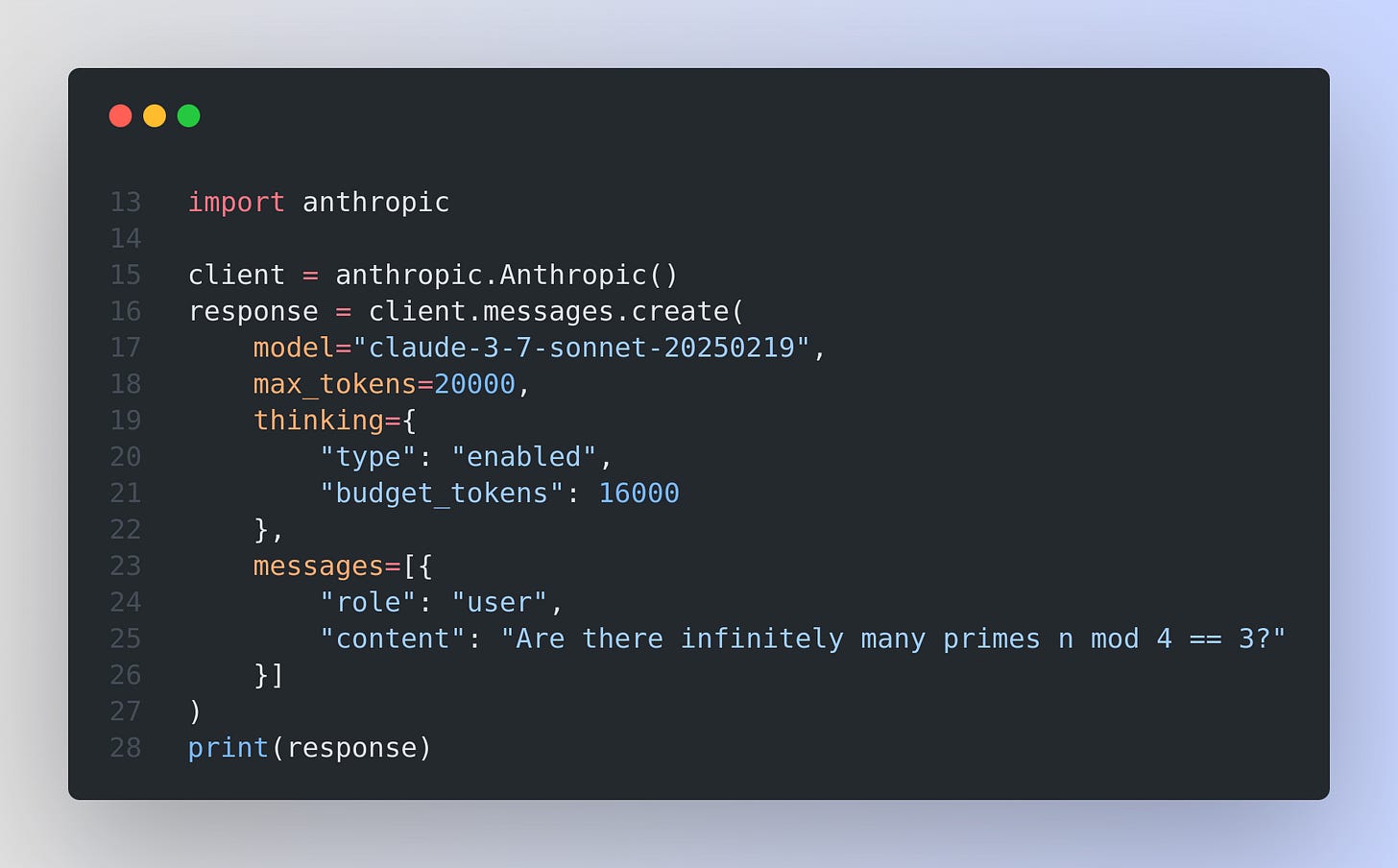

The Python code below configures extended thinking:
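A minimal sketch of such a configuration, assuming the official `anthropic` Python SDK and the `claude-3-7-sonnet-20250219` model ID, neither of which is named in the text. The `build_request` and `send` helpers are illustrative names, and the prompt is a placeholder.

```python
def build_request(prompt: str) -> dict:
    """Keyword arguments for a Messages API call with extended thinking enabled."""
    return {
        "model": "claude-3-7-sonnet-20250219",  # assumed model ID
        "max_tokens": 20000,                    # total output budget (thinking + answer)
        "thinking": {"type": "enabled", "budget_tokens": 16000},
        "messages": [{"role": "user", "content": prompt}],
    }


def send(prompt: str):
    """Send the request and print thinking and text blocks separately."""
    import anthropic  # third-party SDK: pip install anthropic

    client = anthropic.Anthropic()  # reads ANTHROPIC_API_KEY from the environment
    response = client.messages.create(**build_request(prompt))
    for block in response.content:
        if block.type == "thinking":
            print("THINKING:", block.thinking)
        elif block.type == "text":
            print("ANSWER:", block.text)
    return response


if __name__ == "__main__":
    send("Are there infinitely many primes p with p % 4 == 3? Explain.")
```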

This sends a request with up to 20,000 output tokens total, reserving 16,000 tokens for internal reasoning. The response returns both thinking and text blocks.

⚙️ Why It Matters

It allows thorough analysis for math, coding, and advanced logic. Costs rise with bigger budgets: input tokens cost $3/M, output (including thinking) costs $15/M. Streaming is required once max_tokens exceeds 21,333, and batch processing is recommended for thinking budgets above 32K tokens.

🚀 Tips

Start with 1,024–16,000 thinking tokens.

Pass back all thinking or redacted_thinking blocks exactly as received.

Use streaming for lengthy outputs and manage chunked delivery.

Monitor token usage carefully to avoid timeouts.

Some more general lessons from working with thinking blocks:

Temperature Setting: Fix temperature at 1 when thinking mode is on. Any other value triggers an error, even though the docs say temperature is unsupported.

Budget vs. max_tokens: max_tokens must always exceed budget_tokens or the API rejects the request. This ensures enough room remains for the model’s final output after it finishes its internal thinking.

Mandatory Streaming: Streaming is required once max_tokens goes above 21,333. If you try to disable streaming with such a large setting, the API returns an error.

Preserving Thinking Blocks: For multi-turn conversations involving tools, send thinking and redacted_thinking blocks exactly as they appeared. This continuity preserves the step-by-step reasoning.

Reasoning Continuity: Claude tracks only the thinking blocks from the final assistant turn, and you’re billed only for those. Older blocks remain in the conversation for context, but they’re hidden and never charged again.
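Several of the rules above can be checked client-side before a request is sent. The sketch below encodes the limits as stated in this edition (1,024 minimum budget, temperature fixed at 1, the 21,333 streaming threshold). They are assumptions that may change, and the function name is hypothetical.

```python
MIN_BUDGET_TOKENS = 1_024
STREAMING_THRESHOLD = 21_333


def check_thinking_request(max_tokens: int, budget_tokens: int,
                           temperature: float = 1.0, stream: bool = False) -> list[str]:
    """Return a list of problems; an empty list means the settings look consistent."""
    problems = []
    if budget_tokens < MIN_BUDGET_TOKENS:
        problems.append(f"budget_tokens must be at least {MIN_BUDGET_TOKENS}")
    if max_tokens <= budget_tokens:
        problems.append("max_tokens must exceed budget_tokens")
    if temperature != 1:
        problems.append("temperature must be 1 when thinking is enabled")
    if max_tokens > STREAMING_THRESHOLD and not stream:
        problems.append(f"streaming is required when max_tokens exceeds {STREAMING_THRESHOLD}")
    return problems


if __name__ == "__main__":
    print(check_thinking_request(max_tokens=20_000, budget_tokens=16_000))
    print(check_thinking_request(max_tokens=32_000, budget_tokens=16_000))
```

Failing fast locally avoids a round trip to the API for settings that would be rejected anyway.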

That’s a wrap for today, see you all tomorrow.
