Some lesser-known and super important facts about the K-Nearest Neighbors (KNN) algorithm.

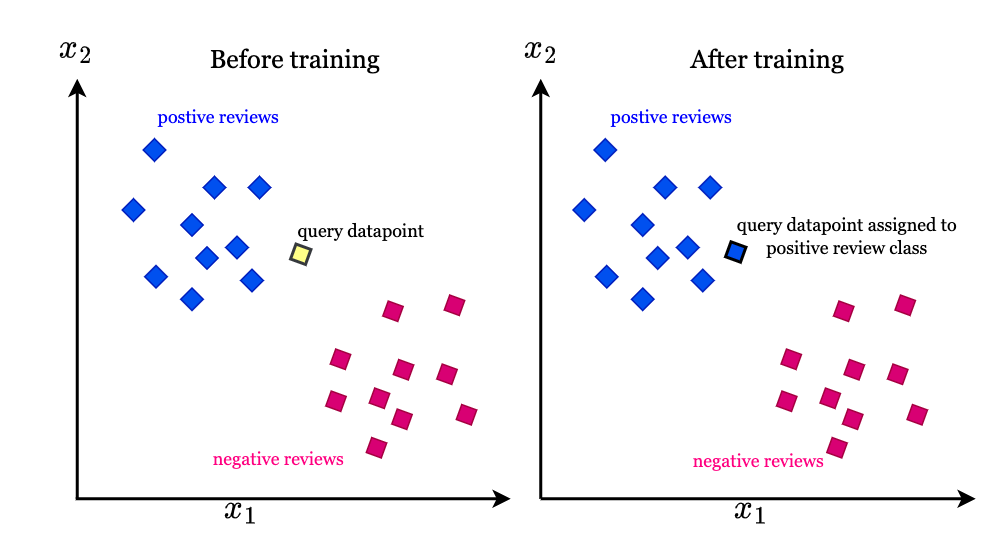

🟠 Instance-Based Learning: Unlike many other machine learning algorithms that build a general model during the training phase, KNN is an instance-based algorithm. It doesn't really learn a model, i.e. KNN does not learn any discriminative function BUT memorizes the entire training dataset instead.

The "learning" happens when a prediction is needed. Each time a new data point comes in and we want to make a prediction, the KNN algorithm will search for the nearest neighbors in the entire training set. This makes KNN fall under the category of lazy learning algorithms.

🟠 Non-parametric Nature: KNN is a non-parametric algorithm, which means it makes no assumptions about the underlying data distribution. This property makes KNN particularly useful for non-linear data since it doesn't try to fit a pre-determined distribution to the data.

🟠 Effect of Scaling: KNN is very sensitive to scaling because it uses distances between data points to determine their similarity. For example, imagine a dataset with two features (age of a person in years and an income variable) : one ranges from 0 to 100, and the other ranges from 0 to 200,000.

The distance metric will be heavily influenced by the second feature, leading to a model that doesn't perform as well. So Feature scaling is must.

Just as an Example - The Euclidean distance between observation 1 and 2 may be given as:

Euclidean Distance = [(100000–80000)^2 + (30–25)^2]^(1/2) => 20,000

The high magnitude of income affected the distance between the two points. This will impact the performance of all distance based model as it will give higher weightage to variables which have higher magnitude (income in this case).

🟠 Curse of Dimensionality: In high-dimensional spaces, KNN can struggle because the volume of the space increases so rapidly. This can mean that even the nearest neighbors are far away in the space, making predictions less reliable.

🟠 Class Imbalance Problem: KNN can struggle with class imbalance, where one class of data far outweighs another. This can lead to biased predictions because the algorithm will be more likely to classify a new instance according to the majority class. Meaning, when we have a new data-point, on which I need to do Inferencing, and say from the training data we have 2 close-by categories (by some distance-metric), and both those categories, lets say are of equivalent distance from the new data-point.

Now how do we decide which class that new data-point belongs to? We simply decide it to be the the category with the most frequent occurrences.

🟠 Weighted Voting: A lesser-known tweak to KNN is to use weighted voting. In traditional KNN, each of the 'k' neighbors has an equal vote in how a new point is classified. With weighted KNN, points are given weights inverse to their distance, so nearer neighbors contribute more to the final vote than the more distant ones.

🟠 Choosing the Right 'K': The choice of 'k' is critical. A small 'k' reduces the bias, but at the cost of increasing the variance (overfitting). Conversely, a large 'k' reduces variance but increases bias (underfitting). There is no universal rule to choose the optimal 'k', and it often requires cross-validation to select the best 'k'.

🟠 Non-Euclidean Distance Metrics: Although Euclidean distance is a common choice for KNN, depending on the problem, other distance metrics like Manhattan, Minkowski, or even a domain-specific custom function can be used.

🟠 Use in Outlier Detection: While KNN is usually discussed in the context of classification or regression, it's also used for outlier detection. If the minimum distance from a point to its 'k' neighbors is significantly larger than for other points, that point could be considered an outlier.

🟠 Versatility of KNN: KNN isn't just for numeric data. It can work with categorical data (using a suitable distance metric), time-series data, text data (with some preprocessing like TF-IDF), and even with mixed data types.

🟠 Use in VectorStore Engines: A variant of KNN algorithm, called Approximate k-NN, trades some accuracy for speed in large datasets, by reducing distance calculations. Its HNSW and FAISS implementations are used as engines in vector databases, efficiently handling high-dimensional data for fast searches. Each vector database utilizes its own version of the Approximate k-NN to enhance the traditional nearest neighbor search process. While not all specifically use HNSW or FAISS, there are various other algorithms based on same idea. The premise is to accept a small probability of error in return for a significant speedup. These algorithms boost speed by reducing distance calculations either by clustering data or using tree structures for efficient organization and quicker searches.

-----------------------

If you enjoyed this post:

1. Follow me @rohanpaul_ai on Twitter for more of these every day and

2. Also, check out my MachineLearning YouTube channel