🏆 Stanford dropouts and ex Meta FAIR engineers launched Axiom Math to develop new knowledge with advanced mathematics

Stanford-dropouts’ Axiom Math, Sora’s deepfake chaos, Perplexity’s new browser, exponential AI growth, and MobileLLM-R1’s open sub-billion parameter reasoning.

Read time: 9 min

📚 Browse past editions here.

( I publish this newletter daily. Noise-free, actionable, applied-AI developments only).

⚡In today’s Edition (3-Oct-2025):

🏆 Stanford dropouts and ex Meta FAIR engineers launched Axiom Math to develop new knowledge with advanced mathematics

🎥 OpenAI’s Sora app is already filled with hyper realistic Sam Altman deepfakes made through its built in cameo system.

🌐 Perplexity rolls out Comet browser for free worldwide

🛠️ AI progress is very much happening on an exponential scale.

👨🔧 MobileLLM-R1: Exploring the Limits of Sub-Billion Language Model Reasoners with Open Training Recipes

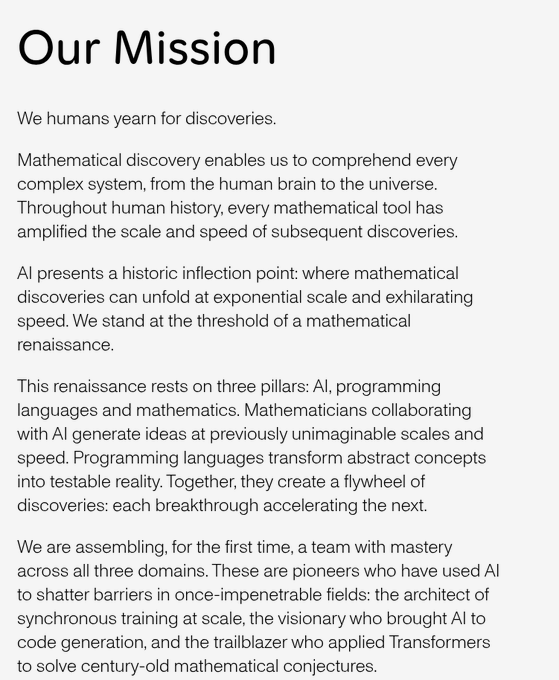

🏆 Stanford dropouts and ex Meta FAIR engineers launched Axiom Math to develop new knowledge with advanced mathematics

By combining AI, programming languages, and mathematics, Axiom is creating the foundation for verified quantitative reasoning that’s provably correct. The result: a self-improving reasoner, starting with an AI mathematician.

They are building a model that writes step-by-step proofs, checks itself, and trains on formal math code so results are formally verified. Axiom positions its work toward research-grade correctness, where every step is machine-checked and reusable, and where the system can also propose new conjectures for humans to test.

Under the hood, the plan is to treat proofs as code using Lean, supervise the model with exact targets from that corpus, and run a verifier that rejects any invalid step. The techniques Axiom is using to make an AI mathematician, can also be applied outside pure math.

In program verification, you can use the same approach to prove that software does exactly what it is supposed to do, with no hidden errors.

In safety-critical engineering (like airplanes, medical devices, or nuclear systems), you want 100% certainty that the designs behave as expected, because even tiny mistakes could be catastrophic. Formal proofs can give that certainty.

In quantitative finance, traders and analysts run algorithms on huge amounts of money. If those algorithms are wrong, the losses could be massive, so using formal proofs to check them gives stronger guarantees that the strategies are sound.

Axiom’s plan rests on 3 big mechanisms that they’re stitching together into one “reasoning engine.”

→ 1. Autoformalization (the compiler for math)

They want to build a “math compiler” that translates between human math language (like textbook explanations) and formal proof code in systems like Lean.

Going down: it takes intuitive reasoning and turns it into machine-checkable proofs.

Going up: it can translate those proofs back into human-friendly explanations.

This creates a two-way cycle, so humans and AI can collaborate. Crucially, the AI can also discover hidden structures in formal proofs and surface them as new insights that people wouldn’t normally see.

→ 2. Formal verification (the ground truth checker)

Once a proof is written, a verifier like Lean checks if every single step is correct. There’s no “maybe right”, it’s either valid or invalid.

This provides a binary reward signal for training the AI. Instead of relying on subjective judgments (like “the proof looks good”), the model gets exact feedback. That makes it scalable and reliable. The bigger idea is that mathematics becomes a world model for reality, since so many natural and engineered systems ultimately follow mathematical rules.

→ 3. Conjecturing–proving loop (the self-improvement cycle)

They’re building two engines:

A Conjecturer that explores and proposes possible new theorems. A Prover that tries to formally prove them (or disprove them with counterexamples).

Successful proofs expand the knowledge base. Failed attempts still help by teaching the model where it went wrong. Over time, the loop enriches the knowledge graph of math and trains both the Conjecturer and Prover to get stronger.

This creates a self-reinforcing cycle: more theorems → richer dataset → better models → better conjectures → more proofs.

→ How it all ties together

The whole system is like AlphaGo, but instead of playing Go moves, it’s playing in the space of mathematical discovery. Each move is a step in a proof, each game is a theorem, and the win condition is passing a formal verifier. By combining autoformalization, verification, and the conjecture-prove loop, Axiom is aiming for an AI that doesn’t just solve math problems but continuously expands mathematical knowledge on its own, with every result formally guaranteed.

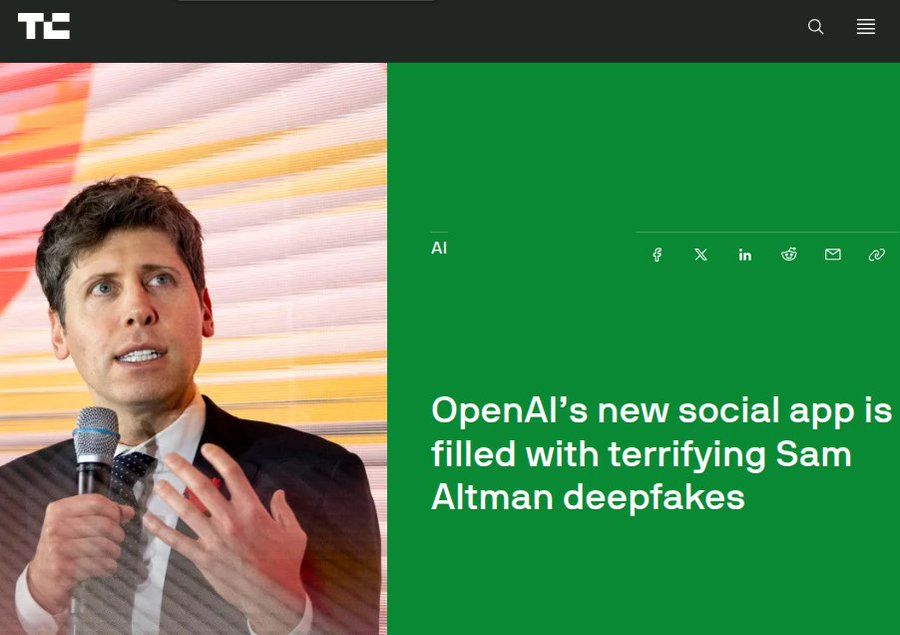

🏆 🎥 OpenAI’s Sora app is already filled with hyper realistic Sam Altman deepfakes made through its built in cameo system.

It also means the cameo setup records short biometric clips where the person reads numbers and turns their head, runs a clothing appropriateness check, then gives 4 permission choices, only me, people I approve, mutuals which are accounts that follow each other, or everyone. If a person chooses anything other than everyone, Sora stops anyone outside that allowed audience from generating videos with that person’s cameo.

Doesn’t just rely on the exact words you type into the prompt when it generates a video. It also looks at your IP address, which can reveal your location, so the app can guess local context like which sports teams are nearby or what city scenery should look like.

On top of that, it can pull from your past ChatGPT conversations, which gives hints about your interests, habits, or writing style. So even if you don’t explicitly mention those things in the prompt, Sora can weave them in to make the video feel more personal or relevant to you. For example, if a person asked for a generic baseball video, but if he/she was in Philadelphia and had used ChatGPT before, the app automatically made the video about the Phillies, which matched the local context.

🌐 The AI-browser war is massively heating up.

The AI-browser war is massively heating up.

Comet launched in July-25 behind the Max plan then widened to Pro and a big waitlist, and now it is open to all. It works as a built-in assistant that summarizes pages, pulls key details, and follows links to complete multi-step research or tasks.

The free release also challenges Chrome’s dominance, even as Google rolled out Gemini-powered AI features in Chrome. Chrome still controls ~72% of global browser usage.

Perplexity says it wants to support journalism, and it also announced a new partners for Comet Plus, a $5 add-on that lets Comet users read content from outlets like CNN, The Washington Post, Fortune, the Los Angeles Times, and Condé Nast, which owns The New Yorker, Wired, and more. The announcement lands while Perplexity is still tied up in lawsuits from big publishers, including Dow Jones (owner of The Wall Street Journal) and the New York Post, accusing the startup of copying their work with AI.

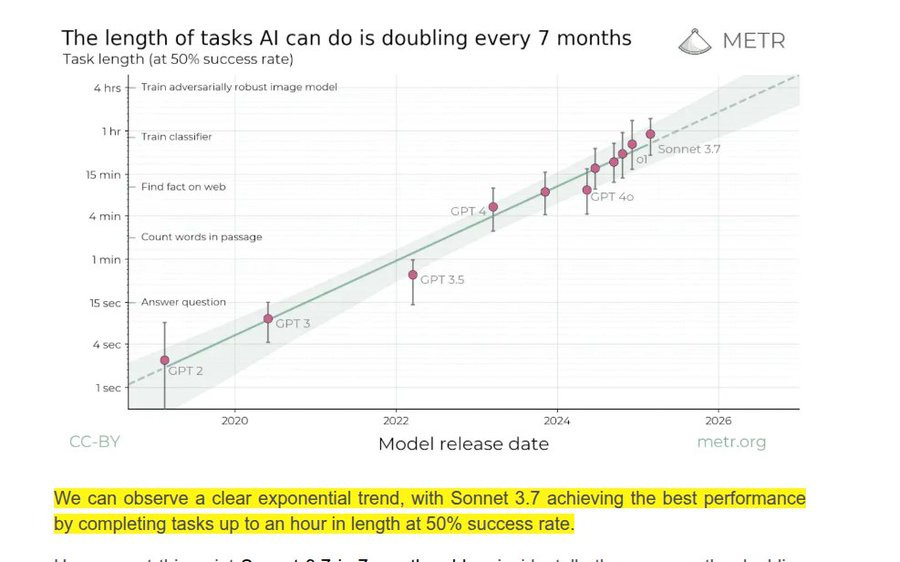

🛠️ AI progress is very much happening on an exponential scale.

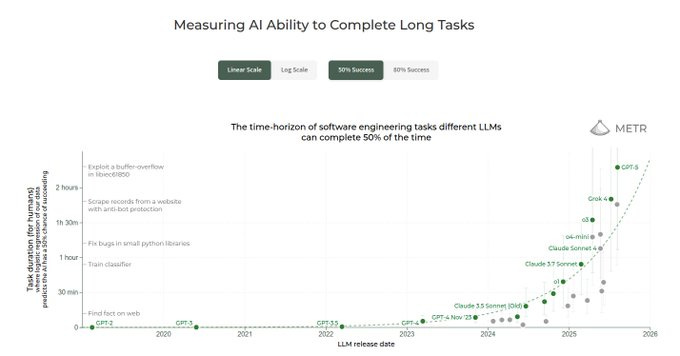

The capability of AI is doubling every 7 months. Do not fall for opinion, if you watch the data, its clear that the progress is running expoenetially.

METR’s long horizon autonomy tests and OpenAI’s GDPval benchmark, clearly shows models already do around 50% of skilled tasks and are on pace to work independently for 8 hours by mid 2026. A massive step change in everyday productivity is very close, because exponential curves often surprise late.

By the end of 2027, models will frequently outperform experts on most tasks. Just watch this absolutely beautiful curve. The time-horizon of software engineering tasks different LLMscan complete 50% of the time.

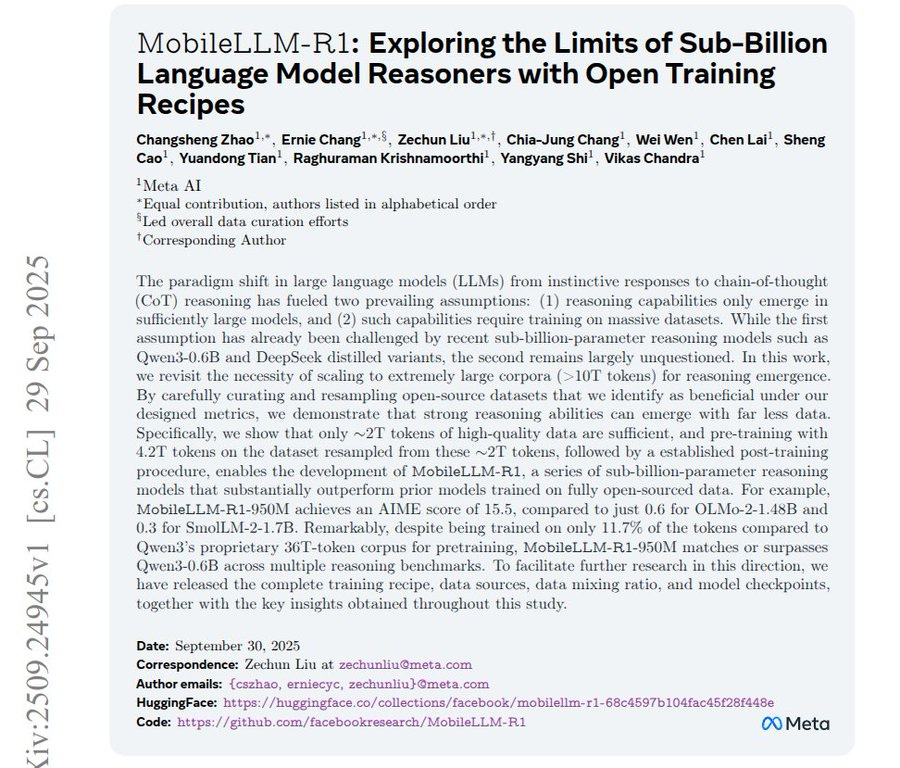

👨🔧 MobileLLM-R1: Exploring the Limits of Sub-Billion Language Model Reasoners with Open Training Recipes

Brilliant release from AIatMeta.

🧠 MobileLLM-R1 shows sub 1B models can reason strongly using 4.2T curated tokens and influence driven mixing, matching Qwen3 0.6B with only 11.7% of its data. They choose data by measuring how much each source helps code, math, or knowledge, then give more weight to the helpful ones.

The key findings are influence based mixing and mid training compression improve math and code, and supervised fine tuning beats reinforcement learning for these small models. They use small probe sets and leave one out tests to see each source’s effect, then set mixing weights with influence scores while keeping benchmarks unseen.

Pretraining uses 2T tokens twice, mid training uses 100B tokens twice, and they drop samples with negative influence as the model improves. Post training uses 866K instruction pairs and 6.2M long reasoning traces across math, science, and code.

Reinforcement learning helps a base model, but supervised fine tuning gives bigger gains, and extra reinforcement learning after that can lower scores. On Galaxy S22 with 4 bit weights and 8 bit activations, the 140M model runs 100+ tokens/s up to 8K, and the 950M model runs 31 tokens/s at 1K.

They release the models, data sources, and full training recipe so others can reproduce the results with open tools. The mix is broad web text for language, plus math and code for reasoning, with a pruning loop to cut noisy samples.

This path targets on device use by trading scale for data quality and smart selection. More symbolic data can reduce knowledge recall on tests like MMLU, so the mix needs tuning per task.

That’s a wrap for today, see you all tomorrow.