🌠 Stargate: The Most Ambitious And Expensive Project of AI Era Begins with OpenAI, SoftBank As Key Players

The biggest AI project, OpenAI's Stargate, launches, Google tops Chatbot Arena with Gemini 2.0, Anthropic predicts AI dominance, ByteDance debuts UI-TARS, and LM Studio enables local Qwen-7b on laptops.

Read time: 8 min 57 seconds

(I write daily for my 106K+ AI-pro audience, with 3.5M+ weekly views, covering noise-free, actionable applied-AI developments.)

⚡In today’s Edition (22-Jan-2025):

🌠 Stargate: The Most Ambitious And Expensive Project of AI Era Begins with OpenAI, SoftBank Being Key Players

🏆 Google released Gemini 2.0 Flash Thinking model with 1mn context window, Ranked No-1 in Chatbot Arena

📡 Anthropic CEO says "AI will surpass almost all humans at almost all tasks in 2-3 years."

ByteDance releases UI-TARS, a fine-tuned GUI agent that integrates reasoning and action capabilities into a single vision-language model

🗞️ Byte-Size Briefs:

Google invests $1B+ in Anthropic, revenue grows 10x in 1 year.

LM Studio runs Deepseek Qwen-7b locally on 16GB MacBook.

🌠 Stargate: The Most Ambitious And Expensive Project of AI Era Begins with OpenAI, SoftBank As Key Players

🎯 The Brief

OpenAI, in collaboration with SoftBank, Oracle, and NVIDIA, has announced Project Stargate, a $500 billion initiative to build cutting-edge AI infrastructure in the U.S. It aims to construct 20 massive data centers, create hundreds of thousands of jobs, and secure U.S. dominance in AI while delivering global economic benefits.

⚙️ The Details

→ Stargate is set to invest $100 billion immediately, with the total reaching $500 billion over four years. It focuses on building physical and virtual infrastructure, supporting AI advancements, and achieving AGI.

→ The project is backed by SoftBank (financial lead) and OpenAI (operational lead). Oracle, NVIDIA, MGX, and Microsoft are key technology partners.

→ Construction has begun in Texas, with 10 data centers, each spanning 500,000 square feet. Additional sites across the U.S. are under consideration.

→ Stargate incorporates energy innovations like next-gen nuclear reactors and sustainable solutions to meet the immense power demands of AI infrastructure.

→ About Masayoshi Son. He is the visionary who turned a $20 million bet on Alibaba into $60 billion, rebuilt Japan’s telecom infrastructure, and sold an electronic translator to Sharp for $1.7 million while still in college. When Son makes moves, they’re bold, calculated, and game-changing.

→ Impact on other industries. Project Stargate’s $500 billion AI infrastructure spending will heavily benefit the semiconductor, data center, networking, and energy sectors. Companies like NVIDIA, AMD, TSMC, and Micron will gain from increased demand for chips and memory. Networking giants like Cisco and Broadcom will see a surge in orders for switches and custom accelerators. Meanwhile, data center real estate and power providers will thrive, supporting this massive buildout.

→ Mushrooming demand for data centers. In a report last year, McKinsey said that global demand for data centre capacity would more than triple by 2030, growing between 19% and 27% annually. To meet that demand, the consultancy estimated, developers would have to build at least twice the capacity by 2030 that has been constructed since 2000.
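A quick sanity check on the McKinsey figures: the sketch below compounds the quoted annual growth rates to see whether they are consistent with capacity "more than tripling". The 7-year window (2023 to 2030) is an assumption made here for illustration; the report's actual base year is not stated above.

```python
def growth_multiple(annual_rate: float, years: int) -> float:
    """Return the capacity multiple after compounding annual_rate for years."""
    return (1.0 + annual_rate) ** years


# Assumption: the growth window runs from 2023 to 2030, i.e. 7 years.
YEARS = 7

low = growth_multiple(0.19, YEARS)   # lower bound of the quoted range
high = growth_multiple(0.27, YEARS)  # upper bound of the quoted range

print(f"19% a year for {YEARS} years: {low:.2f}x")
print(f"27% a year for {YEARS} years: {high:.2f}x")
```

Under that assumption, both ends of the range land above 3x (roughly 3.4x to 5.3x), which matches the "more than triple" claim.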

🏆 Google released Gemini 2.0 Flash Thinking model with 1mn context window, Ranked No-1 in Chatbot Arena

🎯 The Brief

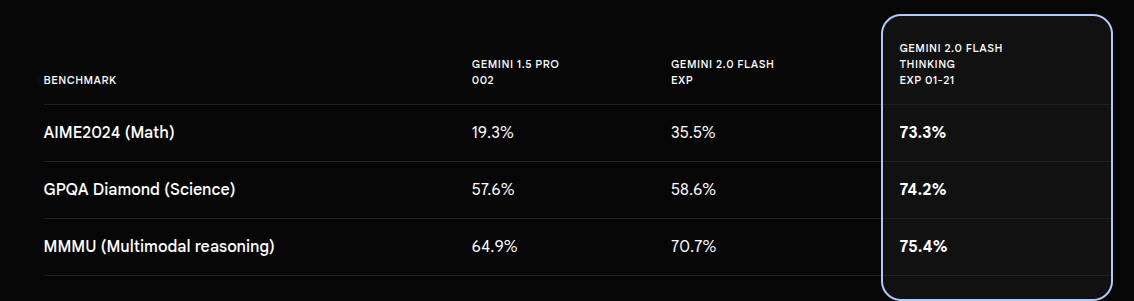

Google launched Gemini 2.0 Flash Thinking, featuring a 1M token context window, code execution, and improved performance on reasoning benchmarks. It achieves the #1 ranking on Chatbot Arena, outperforming competitors with improvements in math (73.3%), science (74.2%), and multimodal (75.4%) tasks, enabling deeper and more complex reasoning. The maximum output is 64k tokens, and the knowledge cutoff is August 2024.

Play with the Model for Free in Google AI Studio

⚙️ The Details

→ With a massive 1M token context window, the model can process long-form inputs such as multiple research papers or extensive datasets, significantly expanding its analytical capabilities.

→ The update supports native code execution, where the model can write and run Python code during interactions, enhancing its ability to solve complex computational problems.

→ Performance improvements include 73.3% on AIME, 74.2% on GPQA, and 75.4% on MMMU, demonstrating strong reasoning across math, science, and multimodal benchmarks.

→ It ranks #1 in Chatbot Arena, overtaking Gemini-Exp-1206 and gaining 17 points over the previous Flash Thinking-1219 checkpoint. It shows notable improvements in coding, creativity, and reasoning, while still trailing slightly in style control.

→ The model is available for free in beta on AI Studio and via the API under the exp-01-21 variant, with a limit of 10 RPM (requests per minute, i.e. the number of API calls a user may make to the model each minute).

→ Users report low latency, longer output generation, and reduced contradictions between the model’s thoughts and answers, making it more reliable for complex use cases.
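With a 10 RPM cap, a script that loops over many prompts will hit the limit quickly. Here is a minimal sketch of a client-side throttle that spaces calls out using a sliding 60-second window. The `RpmLimiter` class is a hypothetical helper written for this newsletter, not part of any Google SDK, and the clock and sleep functions are injectable only so it can be tried without waiting.

```python
import time
from collections import deque


class RpmLimiter:
    """Hypothetical helper: allow at most max_requests calls per window."""

    def __init__(self, max_requests=10, window_seconds=60.0,
                 clock=time.monotonic, sleep=time.sleep):
        self.max_requests = max_requests
        self.window = window_seconds
        self.clock = clock
        self.sleep = sleep
        self.sent = deque()  # timestamps of recent requests, oldest first

    def wait_time(self):
        """Seconds to wait before the next request is allowed."""
        now = self.clock()
        # Drop timestamps that have aged out of the window.
        while self.sent and now - self.sent[0] >= self.window:
            self.sent.popleft()
        if len(self.sent) < self.max_requests:
            return 0.0
        return self.window - (now - self.sent[0])

    def acquire(self):
        """Block until a request slot is free, then record the request."""
        delay = self.wait_time()
        if delay > 0:
            self.sleep(delay)
        self.sent.append(self.clock())
```

Call `limiter.acquire()` right before each API request. The first ten calls go through immediately, and the eleventh waits until the oldest one is 60 seconds old.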

How to use Gemini 2.0 Flash Thinking with API

To use an experimental model, specify the model code when you initialize the generative model. For example:

model = genai.GenerativeModel(model_name="gemini-2.0-flash-exp")

By default, the Gemini API doesn't return thoughts in the response. To include thoughts in the response, you must set the include_thoughts field to true.

One of the main considerations when using a model that returns the thinking process is how much information you want to expose to end users, as the thinking process can be quite verbose.

This example uses the new Google Genai SDK which is useful in this context for parsing out "thoughts" programmatically. To use the new thought parameter, you need to use the v1alpha version of the Gemini API.
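A minimal sketch of such a call, assuming the `google-genai` package is installed and that the model code is `gemini-2.0-flash-thinking-exp-01-21` (inferred from the exp-01-21 variant name, so verify it in AI Studio). The `request_parts` and `split_thoughts` helpers are names made up for this illustration. The demo at the bottom uses stand-in parts, so it runs without an API key or network access.

```python
from types import SimpleNamespace

# Assumption: model code inferred from the "exp-01-21" variant name.
MODEL_NAME = "gemini-2.0-flash-thinking-exp-01-21"


def request_parts(prompt: str, api_key: str):
    """Call the Gemini API (v1alpha) with thoughts enabled; return the parts."""
    # Imported here so split_thoughts stays usable without the SDK installed.
    from google import genai

    client = genai.Client(api_key=api_key,
                          http_options={"api_version": "v1alpha"})
    response = client.models.generate_content(
        model=MODEL_NAME,
        contents=prompt,
        config={"thinking_config": {"include_thoughts": True}},
    )
    return response.candidates[0].content.parts


def split_thoughts(parts):
    """Separate the model's thought text from its final answer text."""
    thoughts, answers = [], []
    for part in parts:
        text = getattr(part, "text", None)
        if not text:
            continue  # skip non-text parts
        if getattr(part, "thought", False):
            thoughts.append(text)
        else:
            answers.append(text)
    return "\n".join(thoughts), "\n".join(answers)


# Offline demonstration with stand-in parts (no network call is made).
demo_parts = [
    SimpleNamespace(text="Compare both options first.", thought=True),
    SimpleNamespace(text="Option B is cheaper.", thought=False),
]
thoughts, answer = split_thoughts(demo_parts)
print(answer)
```

In a real run you would pass `request_parts("your prompt", api_key)` into `split_thoughts`, then decide whether to show the thoughts to end users or only log them.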

A key point regarding their pricing is that you are not billed for the usage of experimental models. This means that developers can utilize these models without incurring costs, making it an excellent opportunity to explore new functionalities.

However, note that Flash Thinking, being an experimental model, has the following limitations:

Text and image input only

Text only output

No JSON mode or Search Grounding

📡 Anthropic CEO says "AI will surpass almost all humans at almost all tasks in 2-3 years."

🎯 The Brief

Anthropic CEO Dario Amodei predicts AI will surpass almost all humans at almost all tasks within 2-3 years, emphasizing the rapid pace of AI development and the need for serious consideration of its societal impacts.

⚙️ Other Key Takeaways from the Interview

→ Anthropic is prioritizing enterprise clients but acknowledges consumer needs, with web access and voice mode for Claude coming soon. Image and video generation are not currently prioritized due to safety concerns and limited enterprise demand.

→ A surge in demand has led to 10X revenue growth in the last year, straining compute resources and causing rate limit issues. Anthropic is aggressively expanding compute capacity, including a future cluster with hundreds of thousands of Trainium 2 chips, aiming for over a million chips by 2026.

→ Anthropic views reasoning in models as a continuous spectrum enhanced by reinforcement learning, not a separate method. They are developing new models, aiming for a smooth user experience that fluidly combines reasoning with other AI capabilities.

→ Amodei acknowledges potential job displacement but emphasizes human adaptability and the importance of designing AI for complementarity rather than replacement.

→ Anthropic focuses on talent density over mass and prioritizes core values like safety and societal responsibility to attract top talent.

🛠️ DeepSeek’s ARC Prize results came out: it's on par with lower o1 models, but for a fraction of the cost

🎯 The Brief

DeepSeek models achieved performance on par with lower o1 models on the ARC-AGI benchmark at a fraction of the cost, while team "The ARChitects" won the ARC Prize with a 53.5% score, showcasing advancements in LLM reasoning.

⚙️ The Details

→ ARC Prize is a $1,000,000+ public competition to beat and open source a solution to the ARC-AGI benchmark. It tests LLMs on their ability to perform abstract reasoning. It specifically challenges AI systems with complex visual puzzles that require reasoning and adaptation to new situations, which is considered a key aspect of achieving Artificial General Intelligence (AGI).

→ DeepSeek V3 achieved 7.3% on the Semi-Private and 14% on the Public Eval of ARC-AGI, costing only $0.002 per task.

→ DeepSeek Reasoner scored 15.8% on Semi-Private and 20.5% on Public Eval, with a cost of $0.06 and $0.05 per task respectively.

→ The purpose of the 100 Semi-Private tasks is to provide a secondary hold-out test set score. The Semi-Private tasks are like a surprise quiz with 100 brand new questions the model hasn't seen, which shows how well it truly generalizes. Public Eval tasks, with 400 questions from 2019, are like practice tests that many models might have already studied. Since these older questions are public and may be used in training, good performance here might be partly due to memorization. Using both test types helps researchers see if a model is genuinely smart (good at new problems) or just good at remembering old answers. The difference in scores reveals this distinction.

→ In comparison, OpenAI’s o3 cost $20 per task in low-compute mode and $3,440 per task in high-compute mode.
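To make the two comparisons above concrete, here is a small sketch that computes the Public-minus-Semi-Private gap for each DeepSeek model and how many times cheaper each is per task than o3 in low-compute mode. All numbers come from the figures quoted above. Using the Semi-Private cost for DeepSeek Reasoner, and treating V3's single quoted cost the same way, is an assumption made here.

```python
# Scores (percent) and per-task cost (USD) as quoted above.
# Assumption: "cost" is the Semi-Private per-task cost for both models.
results = {
    "DeepSeek V3": {"semi_private": 7.3, "public": 14.0, "cost": 0.002},
    "DeepSeek Reasoner": {"semi_private": 15.8, "public": 20.5, "cost": 0.06},
}
O3_LOW_COST = 20.0  # USD per task, o3 low-compute mode


def public_gap(entry):
    """Percentage points by which Public Eval exceeds Semi-Private."""
    return round(entry["public"] - entry["semi_private"], 1)


def cost_ratio(entry):
    """How many times cheaper per task than o3 in low-compute mode."""
    return O3_LOW_COST / entry["cost"]


for name, entry in results.items():
    print(f"{name}: gap {public_gap(entry)} pts, "
          f"about {cost_ratio(entry):,.0f}x cheaper than o3 (low)")
```

A larger gap hints that more of the Public Eval score may come from familiarity with the public tasks. The cost ratio says nothing about accuracy, only about price per attempt.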

ByteDance releases UI-TARS, a fine-tuned GUI agent that integrates reasoning and action capabilities into a single vision-language model

🎯 The Brief

ByteDance has introduced UI-TARS, an advanced GUI agent that integrates perception, reasoning, and action capabilities into a single vision-language model. It achieves state-of-the-art performance, scoring 82.8% on VisualWebBench, outperforming GPT-4 and Claude. Available in three sizes (2B, 7B, and 72B parameters), it automates GUI tasks with precision, reasoning, and adaptability.

⚙️ The Details

→ UI-TARS is a native agent model designed for seamless GUI interaction, bypassing modular frameworks with an end-to-end architecture. It processes GUI screenshots and autonomously generates actions, such as clicks, typing, and navigation.

→ The model excels at perception, grounding, and reasoning, using curated datasets, reasoning augmentation, and iterative improvement methods. It employs system-1 for fast actions and system-2 for deliberate, multi-step decision-making.

→ UI-TARS-72B leads benchmarks like AndroidWorld (46.6) and OSWorld (24.6 in 50 steps).

→ Released under the Apache 2.0 license, UI-TARS is accessible on Hugging Face, enabling further development and deployment.

Models: https://huggingface.co/bytedance-research/UI-TARS-72B-DPO

Desktop App: https://github.com/bytedance/UI-TARS-desktop

🗞️ Byte-Size Briefs

Google just made a fresh investment of more than $1bn into Anthropic.

The new funding comes in addition to more than $2 billion that Google has already invested in Anthropic. And last week, Anthropic was also on the cusp of securing a further $2bn, in a separate deal at a valuation of about $60bn. Anthropic’s revenue hit an annualised $1bn in December, up roughly 10 times on a year earlier.

LM Studio demonstrated running Deepseek-R1-distill-Qwen-7b-mlx locally.

It ran on a 16GB M1 MacBook Pro at 36 tok/sec, using about 4.3GB of RAM when loaded. This is a big deal for open-source, local-LLM enthusiasts, as DeepSeek has proven so powerful and intelligent since its release two days ago.