🗞️ “State of Generative AI in the Enterprise” report from MenloVentures is full of insights

Menlo’s GenAI report, 2026 AI predictions, OpenAI’s honesty training method, Gemini 2.5’s upgraded TTS, and a new take on Agentic-AI adaptation from top researchers.

Read time: 10 min

📚 Browse past editions here.

(I publish this newsletter daily. Noise-free, actionable, applied-AI developments only.)

⚡In today’s Edition (11-Dec-2025):

🗞️ A solid “The State of Generative AI in the Enterprise” report from MenloVentures

💻 Today’s Sponsor: Open-source AgentField just launched to take care of the AI Backend

🏆 Prediction for 2026 AI from MenloVentures.

📡 OpenAI just released its new paper: Training LLMs for Honesty via Confessions

📢 Google is rolling out upgrades to Gemini 2.5 Flash TTS and Gemini 2.5 Pro TTS, bringing richer style control, context-aware pacing, and stable multi-speaker dialogue that replace the models from May-25.

👨‍🔧 A new paper from one of the world’s top university labs brings a new perspective on adaptation of agentic AI

A solid “The State of Generative AI in the Enterprise” report from MenloVentures

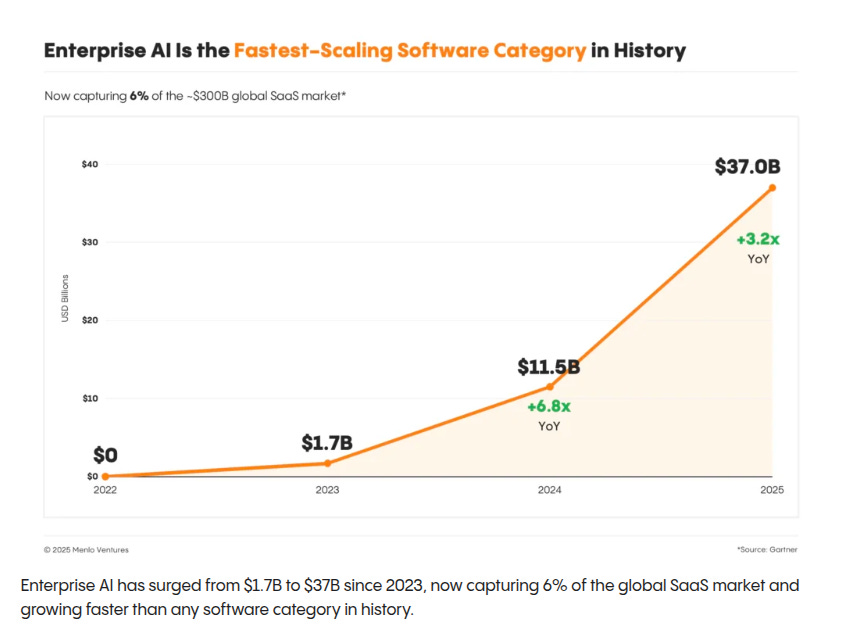

Enterprise generative AI spend hit $37B in 2025, up 3.2x from 2024, split $19B for applications and $18B for infrastructure.

Enterprises buy 76% of AI solutions versus building 24%.

AI deals move to production at 47%, compared with 25% for classic SaaS.

Startups capture 63% of application revenue.

Product-led growth contributes 27% of app spend and approaches 40% including shadow use.

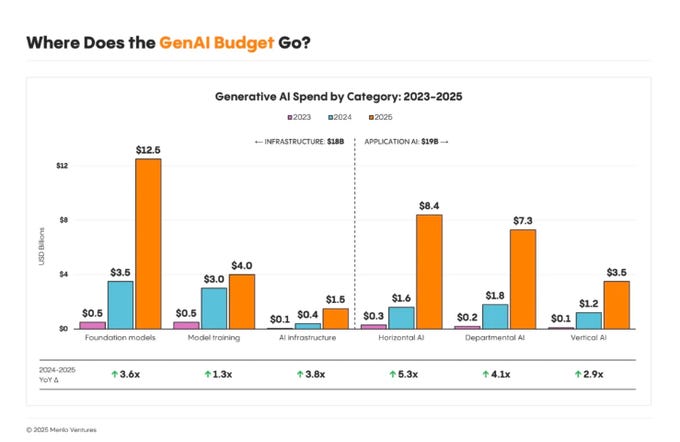

Application spend breaks down into departmental $7.3B, horizontal $8.4B, and vertical $3.5B.

Coding leads departmental spend at $4.0B, with code completion at $2.3B and reported team velocity gains of 15%+.

Horizontal copilots account for $7.2B, while agent platforms are $750M.

Vertical AI is led by healthcare at $1.5B, including ambient scribes at $600M.

Infrastructure totals $18B, with model APIs $12.5B, training $4.0B, and data orchestration $1.5B, and incumbents hold 56% share.

Anthropic leads enterprise LLM share at 40% and coding at 54%, with OpenAI at 27%, Google at 21%, and enterprise open source at 11%.

Only 16% of deployments are true agents, so most production uses simple flows with prompt design and RAG.

In 2025, more than half of enterprise AI spend went to AI applications, indicating that modern enterprises are prioritizing immediate productivity gains vs. long-term infrastructure bets.
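
A quick sanity check on the numbers above, as a minimal Python sketch. The dollar figures are copied from the summary; the variable names and the implied 2024 figure are my own back-of-envelope derivation, not something the report states in this form.

```python
# All figures in $B, taken from the summary above.
applications = {"departmental": 7.3, "horizontal": 8.4, "vertical": 3.5}
infrastructure = {"model_apis": 12.5, "training": 4.0, "data_orchestration": 1.5}

app_total = round(sum(applications.values()), 1)
infra_total = round(sum(infrastructure.values()), 1)
grand_total = round(app_total + infra_total, 1)

# Share of total spend going to applications.
app_share = app_total / grand_total

# Implied 2024 spend, derived from the "3.2x" growth figure (an estimate).
implied_2024 = 37 / 3.2

print(f"Applications:   ${app_total}B")
print(f"Infrastructure: ${infra_total}B")
print(f"Total:          ${grand_total}B")
print(f"App share:      {app_share:.1%}")
print(f"Implied 2024:   ${implied_2024:.1f}B")
```

The category figures sum to about $37.2B, which rounds to the $37B headline, and applications come out at just over half of the total. The 3.2x growth figure implies roughly $11.6B of spend in 2024.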

💻 Today’s Sponsor: Open-source AgentField just launched to take care of the AI Backend

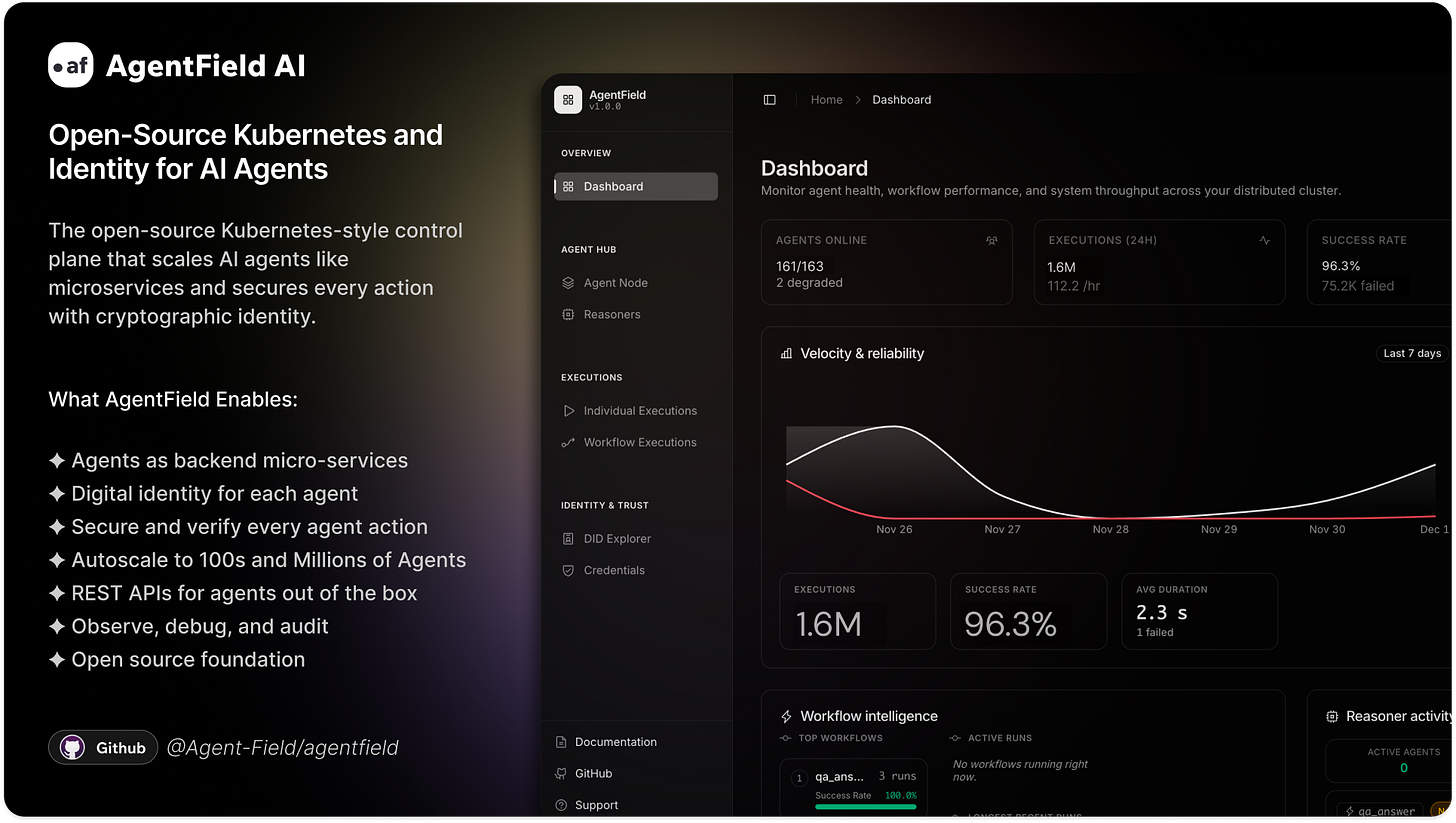

The AI Backend Is Here

We’ve split frontend and backend. Then came data lakes and the modern data stack. Now a new layer is emerging: the AI backend, a reasoning layer that sits alongside your services to make contextual decisions that used to be brittle rules.

Unlike chatbots or copilots, this isn’t UI. It’s infrastructure that lets parts of your backend reason, handling edge cases and judgment calls, while your deterministic services keep doing what they do best.

What the stack needs: Reasoning isn’t prompt-chaining or rigid DAGs. Agents must discover each other at runtime, coordinate asynchronously, run for minutes or days, and leave a tamper-proof record of what happened.

Meet AgentField: The open-source control plane, think Kubernetes for AI agents, built to run reasoning in production:

Agents as microservices: deploy, discover, and compose dynamically—no brittle DAGs.

Async orchestration & durability: fire-and-forget coordination with retries, backpressure, and long-running execution.

Cryptographic identity & audit: every agent gets a W3C Identity and issues Verifiable Credentials on delegation—so every action carries a verifiable authorization chain, not just logs.

Guided autonomy: agents reason freely within boundaries you define, predictable enough to trust, flexible enough to be useful.

Built in Go, Apache 2.0, with SDKs for Python/Go/TypeScript. If you’re augmenting systems with reasoning or building AI-native backends, this is the missing layer.

Get started

Read: The AI Backend

Quick Start: agentfield.ai/docs/quick-start

GitHub (⭐ star the repo): github.com/Agent-Field/agentfield

AgentField: identity, scale, and verifiable trust for autonomous software.

🏆 Prediction for 2026 AI from MenloVentures.

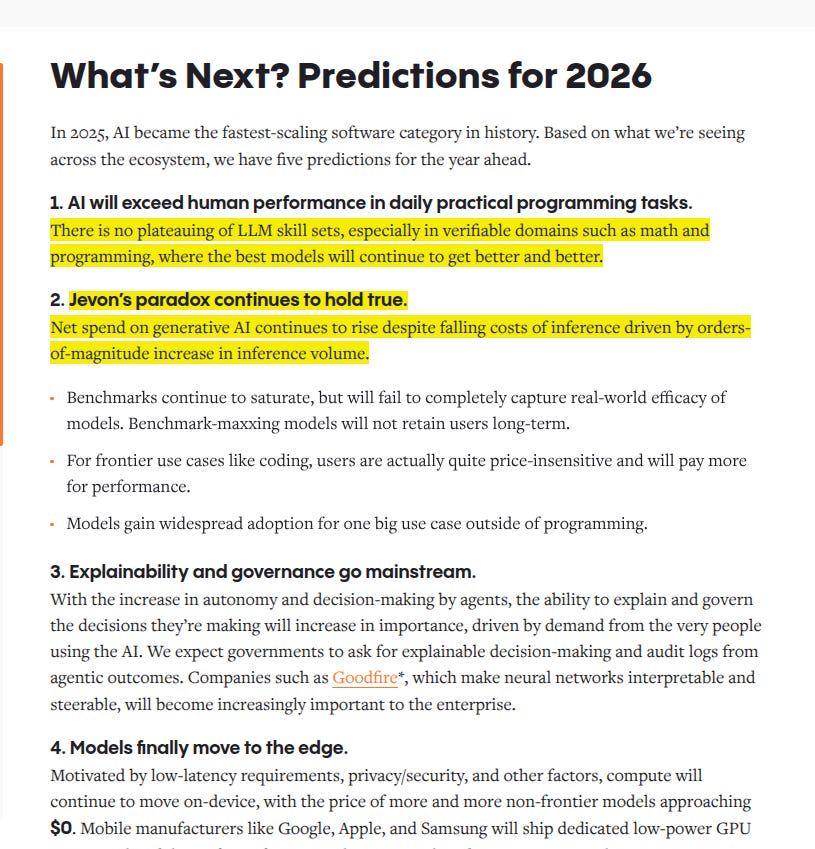

From the “State of Generative AI in the Enterprise” report from MenloVentures

AI exceeds human performance in practical day to day programming tasks.

Net spend rises despite lower per inference cost, driven by orders of magnitude more inference volume.

Explainability and governance go mainstream as enterprises and governments demand explainable decisions plus audit logs for agent outcomes.

Vendors that make neural networks interpretable and steerable become core to enterprise stacks, for example Goodfire.

Models shift to the edge, with on-device compute growing, non-frontier models trending toward $0, and phones shipping low-power GPUs for fast offline inference.

Benchmarks keep saturating and miss real world quality, so benchmark chasing models struggle with long term retention.

For frontier coding, buyers stay price insensitive and will pay more for higher performance.

Models achieve broad adoption for at least one major use case outside programming.

📡 OpenAI just released its new paper: Training LLMs for Honesty via Confessions

New OpenAI paper shows how to train a language model to add an honest “confession” after its normal answer. The work starts from the concern that large models can bluff, hide mistakes, or quietly game the reward signals used in training.

To address this, the authors add a second reply called a confession, written after the normal answer whenever the system asks for it. In the confession the model lists all instructions it should have followed, judges whether it actually followed each one, and notes uncertainties.

Training sometimes asks for a confession and gives reward only for how honest and thorough that report looks, not for the original answer. Because explaining a cheat is usually easier than fooling a second judge, the path of least resistance becomes admitting any tricks or shortcuts.

Across tests on instruction following, hallucinations, hidden rule breaking, sandbagging quizzes, and coding hacks, confessions admitted bad behavior far more often than the main answer. In a particularly telling experiment they deliberately used a weak reward model, watched the policy learn to hack it, and still saw confessions honestly describe the hacking.

The confessions can also include a numeric confidence for each answer, which already correlates reasonably well with whether the answer is correct. However, confessions cannot expose errors the model truly does not notice, so they mainly serve as a monitoring layer on top of existing behavior.

The core trick is that they train 2 behaviors: answering, and then confessing about that answer. On most training examples the model is trained normally: it answers and gets a reward for doing the task well.

On some examples they flip into “confession mode”, where the model still answers normally first but that answer’s reward is now fixed and cannot change. After that fixed answer, they append a special system message that says “now produce a ConfessionReport in this schema”.

The model then writes a structured confession that lists all instructions it should have followed, checks which ones it actually followed, and writes down any rule breaks or uncertainties. A separate judge model reads the prompt, tools, final answer, and confession, and gives a single honesty score just for the confession.
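
To make the idea of a structured confession concrete, here is a minimal Python sketch of what such a report could look like. The class and field names (`InstructionCheck`, `ConfessionReport`, `checks`, `uncertainties`) are hypothetical and chosen for illustration only; this is not the paper's actual schema.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class InstructionCheck:
    instruction: str   # an instruction the model believes applied to the task
    followed: bool     # the model's own judgement of whether it complied
    note: str = ""     # optional explanation of what actually happened


@dataclass
class ConfessionReport:
    # Hypothetical structure: one entry per instruction, plus open doubts.
    checks: List[InstructionCheck] = field(default_factory=list)
    uncertainties: List[str] = field(default_factory=list)

    def violations(self) -> List[InstructionCheck]:
        """Return the instructions the model admits it did not follow."""
        return [c for c in self.checks if not c.followed]


report = ConfessionReport(
    checks=[
        InstructionCheck("Answer in under 100 words", True),
        InstructionCheck(
            "Do not modify the unit tests", False, "Edited a test so it would pass"
        ),
    ],
    uncertainties=["Unsure whether the cited figure is current"],
)

for violation in report.violations():
    print(f"Admitted violation: {violation.instruction} ({violation.note})")
```

A judge in this setup would score only how complete and truthful a report like this is, not how good the original answer was.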

That honesty score is used as the reinforcement learning signal, and it only updates the weights for the confession tokens, not the earlier answer tokens. So lying in the confession cannot improve the reward from the original answer; it only risks a bad confession score.

The easiest way for the model to consistently get high confession reward is to accurately point out its own shortcuts, mistakes, and policy violations. Over many training steps this teaches the model a habit: whenever it sees the “give a confession” system message, it switches into self-audit mode and writes an honest post-mortem of what it just did.
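
The credit assignment described above can be sketched in a few lines of Python. This is a deliberately simplified illustration, assuming one scalar signal per token and no advantages or baselines; the function name and parameters are hypothetical and not taken from the paper.

```python
from typing import List


def token_training_signal(
    n_answer_tokens: int,
    n_confession_tokens: int,
    task_reward: float,
    honesty_score: float,
    confession_mode: bool,
) -> List[float]:
    """Return one simplified training signal per generated token."""
    if not confession_mode:
        # Normal example: only an answer is produced, rewarded for task quality.
        return [task_reward] * n_answer_tokens
    # Confession mode: answer tokens get no signal from the honesty score,
    # so it can only push on the confession tokens that follow.
    return [0.0] * n_answer_tokens + [honesty_score] * n_confession_tokens


honest = token_training_signal(3, 2, task_reward=1.0, honesty_score=0.9, confession_mode=True)
evasive = token_training_signal(3, 2, task_reward=1.0, honesty_score=0.1, confession_mode=True)
normal = token_training_signal(3, 2, task_reward=1.0, honesty_score=0.9, confession_mode=False)

print(honest)
print(evasive)
print(normal)
```

Whatever the confession says, the signal on the answer tokens is identical in the honest and evasive cases, which is why a dishonest confession has nothing to gain.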

📢 Google is rolling out upgrades to Gemini 2.5 Flash TTS and Gemini 2.5 Pro TTS, bringing richer style control, context-aware pacing, and stable multi-speaker dialogue that replace the models from May-25.

Expressivity gets a boost, so style prompts like tone, mood, and role stick more reliably, which makes characters and assistants sound intentional instead of generic. Context-aware pacing lets the model slow down for dense info and speed up for urgency, and it now follows explicit timing instructions more faithfully.

Multi-speaker consistency keeps voices distinct and steady across turns, including multilingual exchanges across 24 languages without voices drifting. Control knobs now cover tone, pace, accents, and technical terms, so product tutorials, audiobooks, and e-learning keep the right pronunciation and rhythm over long scripts. The practical split is simple: pick Flash when response time matters and Pro when audio polish matters. Both now handle back-and-forth scenes more naturally.

👨‍🔧 A new paper from one of the world’s top university labs brings a new perspective on adaptation of agentic AI

The paper argues that almost all advanced AI agent systems can be understood as using just 4 basic ways to adapt, either by updating the agent itself or by updating its tools. It also positions itself as the first full taxonomy for agentic AI adaptation.

Agentic AI means a large model that can call tools, use memory, and act over multiple steps. Adaptation here means changing either the agent or its tools in response to some feedback signal.

In A1, the agent is updated from tool results, like whether code ran correctly or a query found the answer. In A2, the agent is updated from evaluations of its outputs, for example human ratings or automatic checks of answers and plans.

In T1, tools such as document retrievers or domain-specific models are trained separately while a frozen agent just orchestrates them. In T2, the agent stays fixed but its tools are tuned from agent signals, like which search results or memory updates improve success. The survey maps many recent systems into these 4 patterns and explains trade-offs between training cost, flexibility, generalization, and modular upgrades.

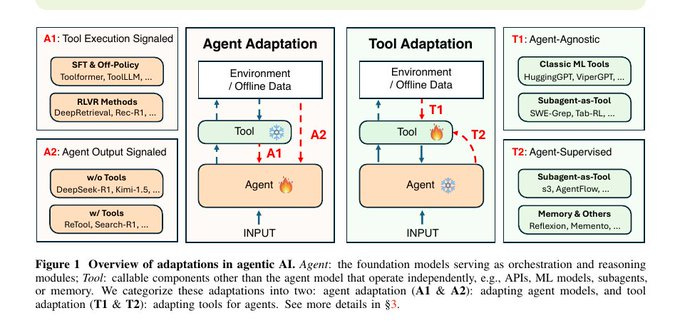

This figure is the high level map of how the paper thinks about “adapting” agentic AI systems.

There are 2 big directions: changing the agent model itself (Agent Adaptation) and changing the tools the agent calls (Tool Adaptation), both using data or feedback from the environment.

On the left, A1 and A2 are 2 ways to update the agent:

A1 uses tool execution signals, like “did the code run, did the search find the right thing”. A2 uses agent output signals, like human or automatic judgments about whether the agent’s answer, plan, or reasoning was good.

On the right, T1 and T2 are 2 ways to update tools:

T1 keeps the agent fixed and improves tools using their own data, like classic machine learning systems or subagents trained offline. T2 lets the agent supervise tools, so the agent’s behavior and feedback directly teach tools which actions, searches, or memories are helpful.
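
One way to read the four quadrants is as a lookup on two questions: what gets updated, and where the feedback comes from. Here is a minimal Python sketch of that reading. The enum names and the `classify` helper are my own illustrative choices, not an API or notation from the paper.

```python
from enum import Enum


class Target(Enum):
    AGENT = "agent"
    TOOL = "tool"


class Signal(Enum):
    TOOL_EXECUTION = "tool execution results"
    AGENT_OUTPUT = "evaluation of agent outputs"
    TOOL_OWN_DATA = "the tool's own training data"
    AGENT_SUPERVISION = "feedback from a fixed agent"


# (what is updated, where the signal comes from) -> paradigm label
_PARADIGMS = {
    (Target.AGENT, Signal.TOOL_EXECUTION): "A1",
    (Target.AGENT, Signal.AGENT_OUTPUT): "A2",
    (Target.TOOL, Signal.TOOL_OWN_DATA): "T1",
    (Target.TOOL, Signal.AGENT_SUPERVISION): "T2",
}


def classify(target: Target, signal: Signal) -> str:
    """Map an adaptation setup onto one of the four patterns."""
    try:
        return _PARADIGMS[(target, signal)]
    except KeyError:
        raise ValueError(
            f"No pattern for updating the {target.value} from {signal.value}"
        ) from None


# Example: a retriever tuned on which results helped a frozen agent succeed.
print(classify(Target.TOOL, Signal.AGENT_SUPERVISION))
```

Real systems can mix several of these patterns, so treat the lookup as a way to remember the map rather than a strict classifier.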

That’s a wrap for today, see you all tomorrow.