🧠 The newest prompt engineering technique, “Verbalized Sampling,” pushes AI to think more freely

New prompting method (“Verbalized Sampling”), Swarm Inference beats frontier models, OpenAI-Amazon's $38B deal, SPICE improves reasoning, and Google brings step-wise logic into training.

Read time: 12 min

📚 Browse past editions here.

(I publish this newsletter daily. Noise-free, actionable, applied-AI developments only.)

⚡In today’s Edition (4-Nov-2025):

🧠 The newest prompt engineering technique, “Verbalized Sampling,” pushes AI to think more freely

💡 Fortytwo Introduces ‘Swarm Inference’: A New AI Architecture That Outperforms Frontier Models on Key Benchmarks

🏆 Huge progress in self-improving AI - New Meta paper makes self play work by grounding questions in real documents.

🚨 OpenAI and Amazon sign a massive $38B cloud deal.

🛠️ New Google paper proposes Supervised Reinforcement Learning (SRL), a step-wise reward method that trains small models to plan and verify actions instead of guessing final answers.

🧠 The newest prompt engineering technique, “Verbalized Sampling,” pushes AI to think more freely

Prompt engineering's newest technique is “Verbalized Sampling” (VS), which nudges the model toward freer thinking and can improve the responses you get.

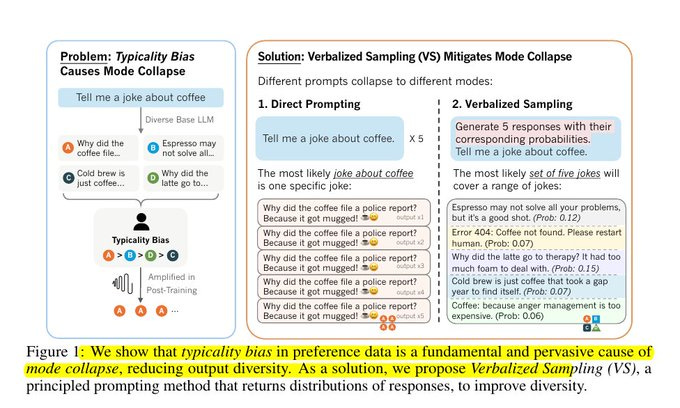

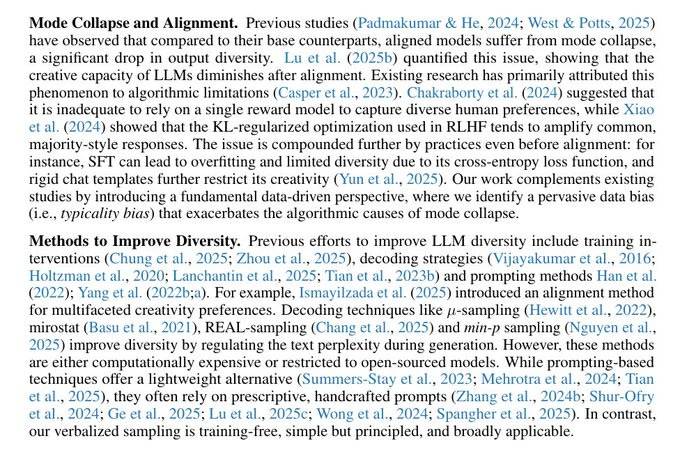

VS asks an LLM to list several candidate answers with their probabilities to avoid defaulting to a single safe reply, and early tests show big gains in variety without hurting accuracy. The core idea is simple at use time: phrase the prompt like “generate 5 responses with their probabilities” and either read them all or filter by a rule such as “show only low probability answers” to surface non-obvious options.

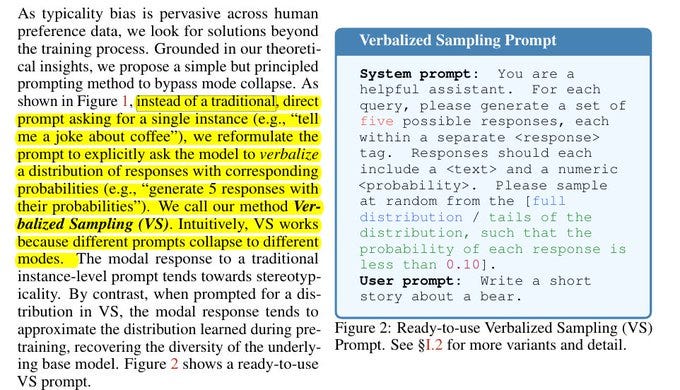

The paper argues that mode collapse often comes from typicality bias in preference data, meaning alignment during RLHF nudges models toward familiar text. Feedback data often rewards common phrasing, so models learn to stick to safe, familiar replies. “Verbalized Sampling” counterbalances that by forcing the model to expose its internal distribution instead of picking 1 top choice.

This works without retraining because “Verbalized Sampling” is training-free, model-agnostic, and needs no logit access, so it slots into any chat workflow right now. Users can steer diversity at the prompt level, for example by asking for candidates below a probability threshold to sample the tails when they want fresh angles.
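To make the prompt-level steering concrete, here is a minimal Python sketch. It is not the paper's code: the helper names (`build_vs_prompt`, `parse_candidates`, `tail_candidates`), the `<probability> | <response>` line format, and the 0.15 threshold are all assumptions made for illustration, and the sample reply is hand-written rather than real model output.

```python
import re

def build_vs_prompt(task: str, k: int = 5) -> str:
    """Wrap a task in a Verbalized Sampling style instruction (format is an assumption)."""
    return (
        f"{task}\n\n"
        f"Generate {k} responses with their probabilities. "
        "Write each one on its own line as: <probability> | <response>"
    )

# Matches lines such as "0.10 | some text"
LINE = re.compile(r"^\s*([01](?:\.\d+)?)\s*\|\s*(.+?)\s*$")

def parse_candidates(reply: str) -> list[tuple[float, str]]:
    """Extract (probability, text) pairs; lines that do not match are skipped."""
    pairs = []
    for line in reply.splitlines():
        match = LINE.match(line)
        if match:
            pairs.append((float(match.group(1)), match.group(2)))
    return pairs

def tail_candidates(cands: list[tuple[float, str]], max_prob: float = 0.15) -> list[str]:
    """Keep only the candidates the model itself rated as unlikely."""
    return [text for prob, text in cands if prob <= max_prob]

# Hand-written stand-in for a model reply, used only to exercise the parser.
sample_reply = """0.45 | Why did the coffee file a police report? It got mugged.
0.30 | Decaf is just bean water with commitment issues.
0.10 | My espresso and I have a lot in common: small, bitter, gone in seconds.
0.05 | The barista asked for my name. I said 'Tired'."""

prompt = build_vs_prompt("Tell me a joke about coffee")
candidates = parse_candidates(sample_reply)
fresh_angles = tail_candidates(candidates)
```

In practice you would send `prompt` to whichever chat model you use and pass its reply to `parse_candidates`. The verbalized probabilities are the model's own estimates, so treat the threshold as a rough dial rather than a calibrated cutoff.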

The authors report ~1.6x to 2.1x higher diversity on creative writing, dialogue simulation, open-ended QA, and synthetic data generation, while keeping factuality and safety steady. There are tradeoffs: extra candidates add some latency and cost, and the shown probabilities are approximate, so answers still need a quick sanity check.

Asking for multiple answers with probabilities, i.e. Verbalized Sampling, defeats typicality bias that makes direct prompts repeat 1 safe response.

Showing a small set of varied answers exposes the model’s distribution, so less typical ideas finally appear. This keeps useful coverage of the space while staying compatible with any model and without retraining.

“Post-training alignment methods like RLHF can unintentionally cause mode collapse, whereby the model favors a narrow set of responses (the ‘mode’) over all plausible outputs.

Grounded in our theoretical insights, we propose a simple but principled prompting method to bypass mode collapse.”

“Instead of a traditional, direct prompt asking for a single instance (e.g., ‘tell me a joke about coffee’), we reformulate the prompt to explicitly ask the model to verbalize a distribution of responses with corresponding probabilities (e.g., ‘generate 5 responses with their probabilities’).”

💡 Fortytwo Introduces ‘Swarm Inference’: A New AI Architecture That Outperforms Frontier Models on Key Benchmarks

The Fortytwo research lab today announced benchmarking results for its new AI architecture, known as Swarm Inference. Across key AI evaluation tests, including GPQA Diamond, MATH-500, AIME 2024, and LiveCodeBench, the Fortytwo networked model outperformed OpenAI’s ChatGPT 5, Google Gemini 2.5 Pro, Anthropic Claude Opus 4.1, xAI Grok 4 and DeepSeek R1.

In this paper, Fortytwo wires many small models into a peer-ranked swarm that answers as one, and it beats big single SOTA models by using head-to-head judging plus reputation weighting.

The paper reports an incredible 85.90% on GPQA Diamond, 99.6% on MATH-500, 100% on AIME 2024, 96.66% on AIME 2025, and 84.4% on LiveCodeBench, with the swarm beating the same models under majority voting by +17.21 points.

The core move is pairwise ranking with a Bradley‑Terry style aggregator. Each question fans out to many nodes where various expert models generate their responses, so the swarm has lots of different candidates to compare.

Each node runs an SLM (Small Language Model), produces its own answer, and the swarm behaves like a single model to the outside user. The same nodes both generate answers and judge them: they compare pairs head to head and add a tiny rationale for which answer is better.

A Bradley-Terry style aggregator turns all those wins and losses into a single stable ranking, which consistently beats simple majority voting because it uses the strength of every matchup, not just raw counts.
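For readers who have not met Bradley-Terry before, the sketch below fits strengths from a matrix of head-to-head wins using the classic iterative (minorization-maximization) update. This is a generic textbook version, not Fortytwo's aggregator: the function name, the `alpha` smoothing term, and the toy win counts are assumptions for illustration, and it leaves out reputation weighting entirely.

```python
def bradley_terry(wins: list[list[int]], iters: int = 200, alpha: float = 0.1) -> list[float]:
    """Fit Bradley-Terry strengths from pairwise outcomes.

    wins[i][j] is how many times answer i was judged better than answer j.
    alpha adds a small number of virtual wins in both directions for every
    pair (an assumption of this sketch) so that no strength collapses to zero.
    """
    n = len(wins)
    w = [[(wins[i][j] + alpha) if i != j else 0.0 for j in range(n)] for i in range(n)]
    p = [1.0 / n] * n  # start from equal strengths
    for _ in range(iters):
        updated = []
        for i in range(n):
            total_wins = sum(w[i])
            # Each matchup counts for more when the opponent is strong,
            # which is the information plain vote counting throws away.
            denom = sum((w[i][j] + w[j][i]) / (p[i] + p[j]) for j in range(n) if j != i)
            updated.append(total_wins / denom)
        norm = sum(updated)
        p = [x / norm for x in updated]  # keep strengths summing to 1
    return p

# Toy data for three candidate answers A, B, C (hypothetical judgments).
wins = [
    [0, 3, 4],  # A beat B 3 times and C 4 times
    [1, 0, 3],  # B beat A once and C 3 times
    [0, 1, 0],  # C beat B once
]
scores = bradley_terry(wins)
best = max(range(len(scores)), key=scores.__getitem__)
```

The fitted strengths give one global ordering even when individual judgments disagree or form cycles, and the swarm would return the candidate with the highest strength (`best` here).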

With this paper, Fortytwo brings a new approach in decentralized artificial intelligence, demonstrating that collective intelligence can exceed individual capabilities while maintaining practical deployability.

🧮 The 17.21-point improvement over majority voting on GPQA Diamond and the remarkable robustness to free-form prompts demonstrate that properly orchestrated node swarms can achieve emergent intelligence beyond their individual components.

This diagram shows the swarm’s workflow from input to final answer.

The blue box in the above image is where models generate answers and also run local ranking. The yellow box turns many head-to-head wins and losses into a stable Bradley-Terry style ranking with calibrated confidence.

The red box moves messages across the network using gossip, encryption, and an on-chain interface for coordination. The green box cleans inputs and outputs, plugs in tools, and caches results to keep latency down. Together, these 4 parts let many small nodes argue, rank, and return one reliable answer.

🔴 The swarm also barely drops, by 0.12%, when prompts try to mislead it, while single models drop 6.20%. The reason is diversity plus judging: many different answers get checked against each other, so bad or fooled outputs get downweighted.

Instead of hardening 1 model, build a crowd that validates itself to stay reliable.

🧠 Why pairwise beats scoring

Comparing 2 concrete answers is easier and more reliable than inventing an absolute score, for both humans and LLMs. Tournament‑style aggregation extracts a stable global ranking from noisy local choices and even handles cycles cleanly. Their ablation shows big drops without reasoning notes or diversity, ‑5.3 and ‑10.1 points, so explanation and exploration really matter.

From prompt to consensus

The prompt is embedded and routed to a semantically relevant sub‑mesh of nodes, which keeps traffic focused on the best experts. Each node returns an answer, often at 2 temperatures per model to add diversity without losing stability.

Peers receive pairs of answers and write short, explicit rationales to pick a winner for each pair. The aggregator fits global quality scores from all pairwise outcomes and selects the top candidate. The system updates two reputations after each round, one for generating strong answers and one for judging well.

📶 Cost, latency, and scaling

Accuracy climbs fast from 3 to 7 nodes and plateaus around 30 nodes, which means moderate swarms capture most of the gains. Consensus adds about 2‑5× a single inference in compute and still raises quality up to frontier levels. Added end‑to‑end latency for simple queries is about 2‑5 seconds, which is fine for deep tasks but not instant chat.

🏆 Huge progress in self-improving AI - New Meta paper makes self play work by grounding questions in real documents.

SPICE teaches a single LLM to challenge itself with real documents, then learn by solving those challenges. It improves math and general reasoning by about 9% on small models.

Plain self play stalls because the model writes both questions and answers. Mistakes get reinforced and the questions drift toward easier patterns.

SPICE pulls passages from a large corpus to write grounded questions and correct answers. The solver never sees those passages, so the problem is truly challenging.

One shared model alternates roles: the Challenger writes the question, and the Reasoner solves it without the document. A rule-based checker compares the solver's answer with the correct answer to give feedback.

Tasks use multiple choice and short free form answers, which keeps checking simple. The Challenger gets higher reward near 50% solver accuracy, which automatically tunes task difficulty. Training both roles with reinforcement learning steadily raises scores on common benchmarks.
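The difficulty-tuning idea can be sketched in a few lines of Python. The paper's exact reward formula is not reproduced here; the triangular shape below is an assumption chosen only because it peaks at 50% solver accuracy, and both function names and the sample answers are hypothetical.

```python
def solver_accuracy(answers: list[str], gold: str) -> float:
    """Rule-based check: fraction of sampled answers matching the reference after normalization."""
    def normalize(s: str) -> str:
        return s.strip().lower()
    return sum(normalize(a) == normalize(gold) for a in answers) / len(answers)

def challenger_reward(acc: float) -> float:
    """Assumed shape: 1.0 at 50% solver accuracy, falling linearly to 0.0 at 0% or 100%."""
    return 1.0 - 2.0 * abs(acc - 0.5)

# Four hypothetical solver attempts at one multiple-choice question whose answer is "B".
attempts = ["B", "b ", "C", "A"]
acc = solver_accuracy(attempts, "B")
reward = challenger_reward(acc)
```

A question that every attempt solves, or that none solves, earns the Challenger nothing under this shape, so it is pushed toward questions at the edge of what the Reasoner can currently do.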

🚨 OpenAI and Amazon sign a massive $38B cloud deal.

The deal runs through 2026 with room to extend, which diversifies compute beyond Microsoft while Azure spend continues. AWS will cluster GB200 and GB300 NVIDIA GPUs on Amazon EC2 UltraServers on one high bandwidth network, which cuts cross node latency and improves scaling.

These clusters support both training next generation models and serving ChatGPT inference, so OpenAI can shift capacity between jobs without redesigning pipelines. AWS already operates 500K+ chip clusters, so OpenAI lands on proven scale.

Capacity starts on existing regions then expands with new dedicated buildouts, with all initial targets before end of 2026. OpenAI models including open weight options are on Amazon Bedrock, so AWS customers see continuity while capacity ramps.

The stack centers on NVIDIA now, with optionality to add other silicon later if price performance improves. The result is more elastic supply, shorter queues for large runs, and steadier training plus inference when agentic traffic spikes. This move strengthens OpenAI’s leverage across clouds and should reduce outage and supply risk during peak demand.

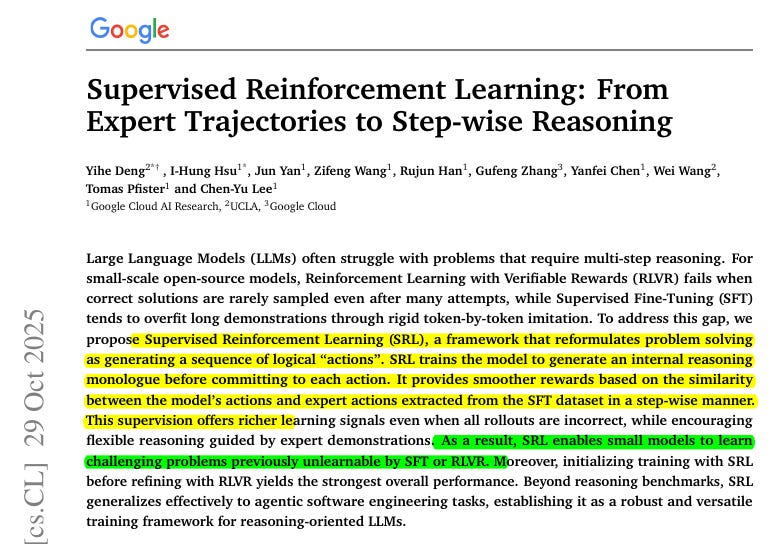

🛠️ New Google paper proposes Supervised Reinforcement Learning (SRL), a step-wise reward method that trains small models to plan and verify actions instead of guessing final answers.

The big deal is that SRL turns reasoning into many graded steps, so 7B models actually learn how to plan and verify rather than guess a final answer. On hard math sets, SRL lifts AIME24 greedy from 13.3% to 16.7%, and SRL→RLVR reaches 57.5% AMC23 greedy, while code agents jump to 14.8% oracle resolve rate.

SRL frames problem solving as a chain of actions where the model briefly thinks, then commits the next step, and gets credit based on how close that step is to an expert move. Expert solutions are split into many small targets so the model learns from each step rather than only from a full solution.

Similarity scoring uses a standard text matcher and a small format penalty so outputs stay concise and clean. Policy learning uses GRPO-style updates with dense, smooth rewards and dynamic sampling that keeps batches where rollout rewards vary, which avoids zero-signal updates and improves stability.
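A rough Python sketch of the two ideas in that paragraph, the similarity reward and the dynamic sampling filter. It assumes the "standard text matcher" behaves like Python's built-in `difflib.SequenceMatcher`; the `-1.0` format penalty, the `well_formed` flag, and the variance threshold are illustrative assumptions, not values taken from the paper.

```python
from difflib import SequenceMatcher
from statistics import pstdev

def step_reward(predicted: str, expert: str, well_formed: bool = True) -> float:
    """Dense reward for one step: text similarity to the expert's action, in [0, 1].

    Badly formatted outputs get a flat penalty instead (the value is an assumption).
    """
    if not well_formed:
        return -1.0
    return SequenceMatcher(None, predicted, expert).ratio()

def keep_for_update(rollout_rewards: list[float], min_std: float = 1e-6) -> bool:
    """Dynamic sampling filter: keep a prompt only if its rollouts disagree.

    When every rollout earns the same reward, group-relative advantages are all
    zero, so the prompt contributes no learning signal.
    """
    return pstdev(rollout_rewards) > min_std

expert_step = "Subtract 3 from both sides: 2x = 4"
rollouts = [
    "Subtract 3 from both sides: 2x = 4",
    "Subtract 3 from each side to get 2x = 4",
    "Multiply both sides by 7",
]
rewards = [step_reward(r, expert_step) for r in rollouts]
use_in_batch = keep_for_update(rewards)
```

A step that is close to the expert's but not identical still earns partial credit, which is what lets a small model learn from attempts that a final-answer check would score as plain failures.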

The trained models show tighter behavior such as early planning, mid-course adjustments, and end self-checks, and the gains come from better planning rather than longer responses. For software engineering, the “action” is the environment command. The authors build 134K step-wise instances from 5K verified trajectories, and the SRL model gets 8.6% end-to-end fixes with greedy decoding, beating an SFT coder baseline.

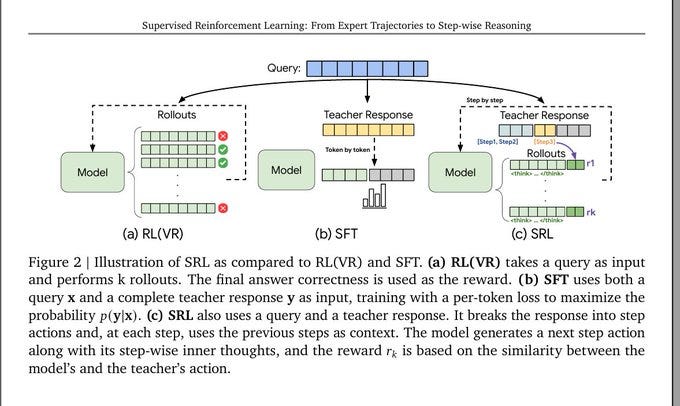

Illustration of SRL as compared to RL(VR) and SFT.

The figure shows how SRL gives feedback at every step rather than only at the end. RLVR only checks if the final answer is correct, so small models get no learning signal when they fail.

SFT forces the model to copy the full teacher response token by token, which can overfit and does not teach planning. SRL splits the teacher solution into step actions, asks the model to think briefly, then produce the next action.

Each action gets a similarity reward against the teacher’s step, so even partial progress earns credit. Using previous steps as context keeps the reasoning on track and reduces random detours. The core point is that SRL turns one solution into many supervised steps, which is why 7B models learn more stable and structured reasoning.
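Here is a small Python sketch of how one teacher solution might be expanded into step-wise training instances. The `StepInstance` type, the `split_solution` helper, and the newline-joined context format are hypothetical; the paper's actual data format may differ.

```python
from dataclasses import dataclass

@dataclass
class StepInstance:
    context: str      # the problem plus every expert step before the target
    target_step: str  # the expert action the model's next step is scored against

def split_solution(problem: str, steps: list[str]) -> list[StepInstance]:
    """Expand one expert solution with N steps into N training instances."""
    instances = []
    for k, step in enumerate(steps):
        prior = "\n".join(steps[:k])
        context = problem if not prior else f"{problem}\n{prior}"
        instances.append(StepInstance(context=context, target_step=step))
    return instances

# Hypothetical two-step expert solution.
instances = split_solution(
    "Solve 2x + 3 = 7",
    ["Subtract 3 from both sides: 2x = 4", "Divide both sides by 2: x = 2"],
)
```

Because each instance conditions on the expert's earlier steps rather than the model's own, a mistake on step 1 does not poison the training signal for step 2.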

That’s a wrap for today, see you all tomorrow.