The Same But Different: Structural Similarities and Differences in Multilingual Language Modeling

LLMs use identical neural circuits to process similar grammar patterns across different languages.

Basically, the paper says: one brain circuit fits all. LLMs handle shared grammar the same way in any language.

Original Problem 🔍:

LLMs excel at multilingual tasks, but little is known about how they handle different languages internally. This study investigates whether LLMs use shared or distinct circuitry for similar linguistic processes across languages.

Solution in this Paper 🧠:

• Employs mechanistic interpretability tools to analyze LLM circuitry

• Focuses on English and Chinese for comparison

• Examines two tasks: Indirect Object Identification (IOI) and past tense generation

• Uses multilingual models (BLOOM-560M, Qwen2-0.5B-instruct) and monolingual models (GPT2-small for English, CPM-distilled for Chinese)

• Applies two circuit-analysis techniques: path patching and information flow routes
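Path patching asks how much of a behavior travels along one specific edge between two components, rather than through a component as a whole. The sketch below is a hypothetical three-node toy, not BLOOM-560M or Qwen2-0.5B-instruct. The component names, the weights, and the scalar "logit difference" are all assumptions chosen to keep the arithmetic easy to follow. The sketch runs the model on a clean input and on a corrupted input. It then reruns the clean input, feeding the corrupted sender value into the sender → receiver edge only.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Run:
    sender: float      # output of a hypothetical upstream head
    receiver: float    # output of a hypothetical downstream head
    logit_diff: float  # toy stand-in for logit(IO) - logit(S)


def forward(x: float, sender_into_receiver: Optional[float] = None) -> Run:
    """Toy forward pass with two paths from sender to the output:
    a direct path (weight 0.5) and a path through the receiver (weight 3.0).

    If sender_into_receiver is given, only the receiver reads that patched
    value; the direct sender -> output path still sees the live value.
    """
    sender = 2.0 * x
    receiver_input = sender if sender_into_receiver is None else sender_into_receiver
    receiver = 3.0 * receiver_input
    logit_diff = 0.5 * sender + receiver
    return Run(sender, receiver, logit_diff)


def path_patching_effect(clean_x: float, corrupt_x: float) -> float:
    """Fraction of the clean-to-corrupt change in logit_diff that is
    reproduced by patching the sender -> receiver edge alone."""
    clean = forward(clean_x)
    corrupt = forward(corrupt_x)
    patched = forward(clean_x, sender_into_receiver=corrupt.sender)
    return (patched.logit_diff - clean.logit_diff) / (corrupt.logit_diff - clean.logit_diff)


if __name__ == "__main__":
    # clean run: 0.5*2 + 3*2 = 7; corrupt run: 0; patched run: 0.5*2 + 0 = 1
    print(path_patching_effect(clean_x=1.0, corrupt_x=0.0))  # 6/7 ≈ 0.857
```

In this toy, the sender → receiver edge accounts for 6/7 of the effect and the direct path accounts for the remaining 1/7. In a real model, the same comparison would require caching per-head outputs and recomputing only the downstream receivers, averaged over many clean/corrupted prompt pairs.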

Key Insights from this Paper 💡:

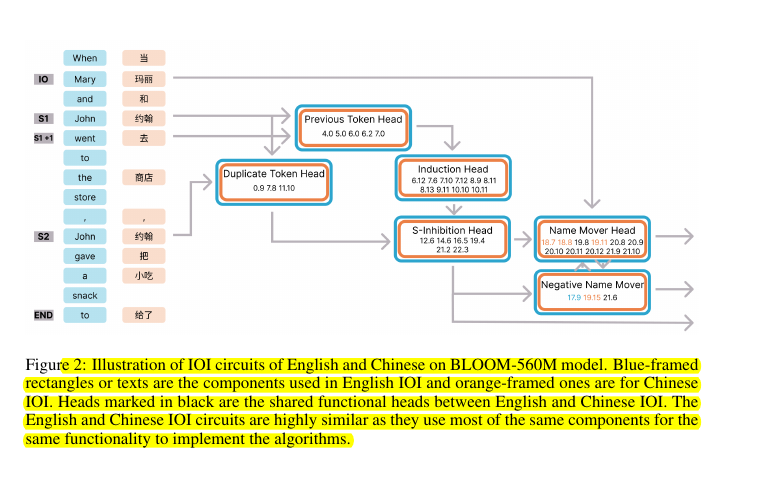

• LLMs use shared circuitry for similar linguistic processes across languages

• Monolingual models independently develop similar circuits for common tasks

• Language-specific components are employed for unique linguistic features

• Multilingual models balance common structures and linguistic differences

Results 📊:

• IOI task: BLOOM-560M achieves 100% accuracy in English and 95% in Chinese

• Past tense task: Qwen2-0.5B-instruct reaches 96.8% accuracy in English

• Shared circuitry identified for IOI task across languages and models

• Language-specific components (e.g., past tense heads) activated only when needed
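The IOI accuracies above are percentages. This summary does not state the paper's exact scoring criterion, so the following is an assumption. A common way to score an IOI prompt is to count it as correct when the next-token logit for the indirect object (IO) exceeds the logit for the repeated subject (S), and to report the mean logit difference alongside. The prompts and logit values below are invented for illustration. They are not outputs of BLOOM-560M or any other model.

```python
from typing import Dict, List, NamedTuple


class IOIExample(NamedTuple):
    prompt: str
    logits: Dict[str, float]  # hypothetical next-token logits for the candidate names
    io: str                   # indirect object (correct answer)
    subject: str              # repeated subject (distractor)


def logit_diff(ex: IOIExample) -> float:
    """logit(IO) - logit(S); positive means the model prefers the right name."""
    return ex.logits[ex.io] - ex.logits[ex.subject]


def ioi_accuracy(examples: List[IOIExample]) -> float:
    """Fraction of prompts where the IO name outscores the subject name."""
    return sum(logit_diff(ex) > 0 for ex in examples) / len(examples)


examples = [
    IOIExample("When Mary and John went to the store, John gave a drink to",
               {"Mary": 5.1, "John": 2.3}, io="Mary", subject="John"),
    IOIExample("当玛丽和约翰去商店时，约翰把一杯饮料递给了",
               {"玛丽": 4.0, "约翰": 4.4}, io="玛丽", subject="约翰"),
]

print(ioi_accuracy(examples))  # 0.5
print(sum(logit_diff(e) for e in examples) / len(examples))
```

The same scoring function works for both languages because it compares only the two candidate names. In practice, those names also have to map cleanly onto tokens in each model's vocabulary.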

🧩 When faced with tasks requiring language-specific morphological processes, multilingual models:

• Still invoke a largely overlapping circuit.

• Employ language-specific components as needed. For example, in the past tense task, the model performs most of the task with a circuit made up primarily of attention heads, but it recruits feed-forward networks only in English. These networks carry out morphological marking (e.g., adding "-ed") that English requires; Chinese does not inflect verbs for tense.
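One simple way to operationalize "language-specific component" is to ablate a component and compare the accuracy drop in each language. This is a hypothetical criterion, not necessarily the paper's procedure. Under this criterion, a component counts as English-only if removing it hurts English past-tense accuracy but leaves Chinese essentially unchanged. The component names, accuracies, and 5-point threshold below are made up for illustration.

```python
from typing import Dict

# Hypothetical past-tense accuracies (fraction correct) with each component ablated.
# "none" is the unablated baseline; all numbers are invented, not from the paper.
ACCURACY: Dict[str, Dict[str, float]] = {
    "none":         {"en": 0.95, "zh": 0.90},
    "head_L9H3":    {"en": 0.40, "zh": 0.35},  # both languages drop
    "mlp_layer_20": {"en": 0.55, "zh": 0.89},  # only English drops
    "head_L2H7":    {"en": 0.94, "zh": 0.90},  # neither drops meaningfully
}


def classify(component: str, threshold: float = 0.05) -> str:
    """Label a component by which languages lose more than `threshold`
    accuracy when it is ablated."""
    base = ACCURACY["none"]
    ablated = ACCURACY[component]
    hurt = [lang for lang in base if base[lang] - ablated[lang] > threshold]
    if len(hurt) == len(base):
        return "shared"
    if hurt:
        return "specific to " + ", ".join(hurt)
    return "not needed"


for name in ACCURACY:
    if name != "none":
        print(name, "->", classify(name))
```

A real analysis would also need to choose an ablation method (for example, zero- or mean-ablating a head or MLP output) and justify the threshold. The sketch only shows that "activated only when needed" is a per-language comparison of causal effects.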