💼 Three former OpenAI researchers have rejoined OpenAI after spending time at Thinking Machines Lab.

Thinking Machines co-founders left for OpenAI, Higgsfield’s $1.3B raise, OpenAI’s $10B Cerebras deal, LLM self-training risks, and Microsoft’s global AI adoption report.

Read time: 10 min

📚 Browse past editions here.

(I publish this newsletter daily. Noise-free, actionable, applied-AI developments only.)

⚡In today’s Edition (15-Jan-2026):

💼 Three former OpenAI researchers have rejoined OpenAI after spending time at Thinking Machines Lab.

🎈 Higgsfield just raised an $80M Series A extension with a solid valuation of $1.3B.

🚨 OpenAI agrees $10bn AI infrastructure deal with Cerebras

📡 New study proves that LLM self-training on mostly self-generated data makes models lose diversity and drift from truth.

🛠️ Microsoft published a solid report on Global AI Adoption in 2025.

💼 Three former OpenAI researchers have rejoined OpenAI after spending time at Thinking Machines Lab.

OpenAI has rehired three former researchers. This includes a former CTO and a cofounder of Thinking Machines, confirmed by official statements on X.

The three are Barret Zoph, Luke Metz, and Sam Schoenholz. The frontier AI job market is very fluid, with a lot of boomerang hiring.

According to Wired’s reporting, two stories are already circulating about why the departures happened. A source close to Thinking Machines claims that Zoph leaked confidential company details to competitors. But according to a memo from Fidji Simo, OpenAI’s CEO of Applications, Zoph told Thinking Machines CEO Mira Murati on Monday that he was thinking about leaving, and he was fired two days later, on Wednesday. Simo also mentioned that OpenAI doesn’t share Murati’s concerns about Zoph.

The staffing changes mark a big win for OpenAI, especially after the recent departure of its VP of research, Jerry Tworek. Another Thinking Machines team member, Sam Schoenholz, is also coming back to OpenAI, according to the company’s statement.

🎈 Higgsfield just raised an $80M Series A extension with a solid valuation of $1.3B

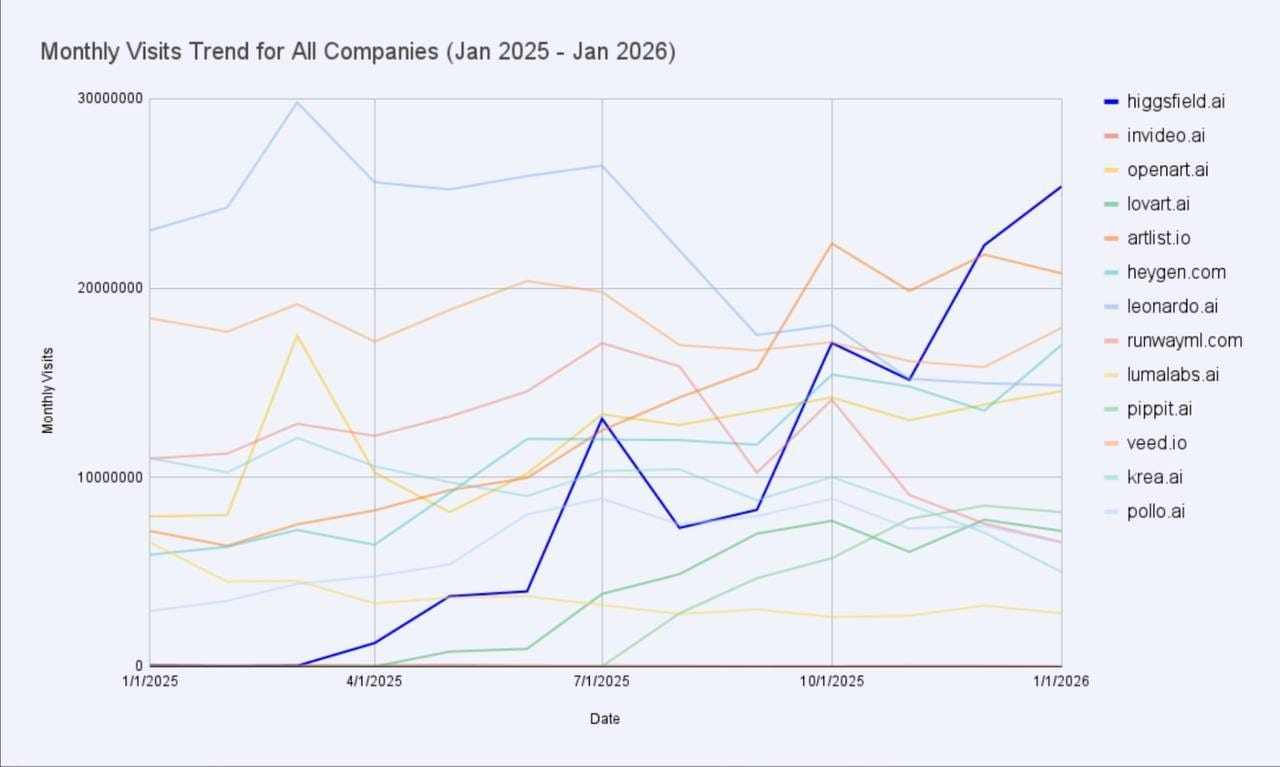

AI video startup Higgsfield raises $80M at a $1.3B valuation as demand for generative video surges. This is truly the Cursor for video, and it may be the fastest-growing GenAI company ever.

They have also reached a $200M annualized revenue run rate. Accel, AI Capital Partners, and Menlo Ventures joined this funding round. What makes this funding stand out is not the “video is hot” storyline. It is the operating pace behind it.

The scale of Higgsfield’s progress is impressive:

- $200M run rate, reached in under 9 months
- 100% growth in just 2 months (from $100M to $200M ARR)
- 15M+ users globally
- Customers already spending $300K+ per year
- 200 product releases in 2025, reflecting an extremely fast-moving team

It launched a browser product in March 2025, and says social media marketers drive about 85% of usage.

Their proprietary reasoning engine chains third-party video models so characters and branding stay consistent across clips.

In my opinion, zooming out, the Higgsfield story is a strong example of what venture funding is rewarding in generative media right now: not just model quality, but packaging, workflow, and repeatable spend.

The company is among the fastest ever to reach a $200M run rate, with revenue doubling from $100M to $200M in just 2 months.

🚨 OpenAI agrees $10bn AI infrastructure deal with Cerebras

OpenAI will purchase up to 750MW of compute from Cerebras to run inference. Cerebras uses wafer-scale engines, meaning compute and memory sit on 1 giant die, which cuts the interconnect bottlenecks seen in multi-GPU clusters.

OpenAI will integrate this low-latency capacity into its inference stack in phases across workloads. The capacity will come online in multiple tranches through 2028. The move parallels Nvidia’s Groq deal: both OpenAI and Nvidia are shoring up low-latency inference with non-GPU or specialized inference tech.

📡 New study proves that LLM self-training on mostly self-generated data makes models lose diversity and drift from truth.

LLMs cannot bootstrap forever on their own text; they need fresh reality checks or they collapse. The problem is that many people expect an AI to learn from its own output and keep improving without limit.

The authors describe training as mixing real data with model-made data, and then letting the real part shrink toward 0. They then prove what the loop does when each round is trained on only a finite batch of samples.

They show that 2 things happen: outputs get less varied over time, and small errors pile up until the model’s beliefs drift. They also say reward-based training can fail if the checker is wrong, so they suggest adding small symbolic programs that can test rules. That is why they claim AGI, ASI, and the AI singularity are not close with today’s LLMs unless learning stays grounded in reality.
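To make the loop concrete, here is a toy simulation. It is only a sketch and not the paper's construction: the "model" is a plain categorical distribution refit by counting, and the batch size, the geometric decay of the real-data share, and every name (`self_training_loop`, `real_decay`, and so on) are my own assumptions for illustration.

```python
import math
import random
from collections import Counter


def entropy(p):
    """Shannon entropy in nats; zero-probability entries contribute nothing."""
    return -sum(x * math.log(x) for x in p if x > 0)


def total_variation(p, q):
    """Total variation distance between two distributions over the same symbols."""
    return 0.5 * sum(abs(a - b) for a, b in zip(p, q))


def self_training_loop(p_true, rounds=200, batch=50, real_decay=0.8, seed=0):
    """Refit a categorical model each round on a finite mixed batch.

    Each batch mixes real samples (drawn from p_true) with samples drawn
    from the current model. The real share starts at 1 and shrinks
    geometrically toward 0 (an assumed schedule, chosen for illustration).
    Returns one tuple per round:
    (n_real_samples, support_size, entropy, tv_distance_to_truth).
    """
    rng = random.Random(seed)
    symbols = list(range(len(p_true)))
    model = list(p_true)  # start from a perfect model
    real_fraction = 1.0
    history = []
    for _ in range(rounds):
        n_real = round(batch * real_fraction)
        data = rng.choices(symbols, weights=p_true, k=n_real)
        data += rng.choices(symbols, weights=model, k=batch - n_real)
        counts = Counter(data)
        # "Training" here is just maximum-likelihood counting on the batch.
        model = [counts[s] / batch for s in symbols]
        support = sum(1 for x in model if x > 0)
        history.append(
            (n_real, support, entropy(model), total_variation(model, p_true))
        )
        real_fraction *= real_decay
    return history


if __name__ == "__main__":
    uniform = [0.1] * 10
    for i, row in enumerate(self_training_loop(uniform)):
        if i % 25 == 0:
            n_real, support, h, tv = row
            print(f"round {i:3d}  real={n_real:2d}  support={support:2d}  "
                  f"entropy={h:.2f}  tv={tv:.2f}")
```

Once the real share rounds down to 0, any symbol that misses a batch gets probability 0 and can never be sampled again, so the support can only shrink (diversity loss) while sampling noise pushes the distribution away from the truth (drift). Keeping even a few real samples per round is what lets lost symbols come back in this toy setup.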

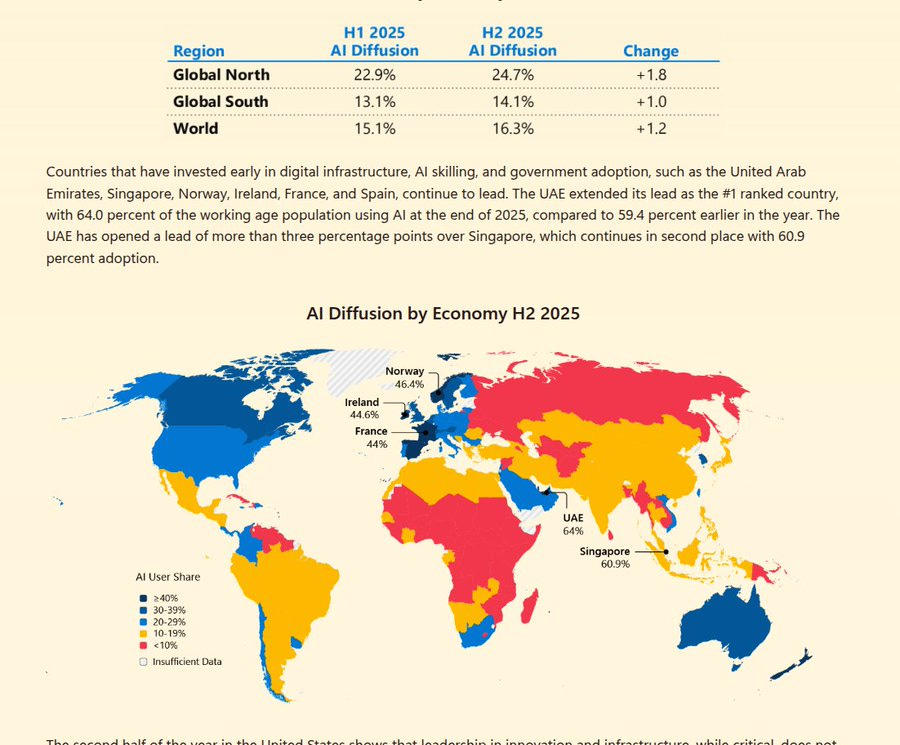

🛠️ Microsoft published a solid report on Global AI Adoption in 2025.

Global generative AI adoption reached 16.3% in H2 2025, up from 15.1% in H1 2025.

AI diffusion is defined as the share of people who used a generative AI product in the period, estimated from aggregated, anonymized telemetry and adjusted for device share, internet penetration, and population.

The Global North hit 24.7% adoption versus 14.1% in the Global South in H2 2025.

The Global North vs Global South gap widened from 9.8 to 10.6 percentage points in H2 2025.

Adoption in the Global North grew almost twice as fast as in the Global South in H2 2025.
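As a quick consistency check, the snippet below recomputes the gap from the headline figures quoted above. The inputs are the report's numbers as cited in this edition; the variable names are just illustrative.

```python
# Figures as quoted in this edition (percent of population).
global_h1, global_h2 = 15.1, 16.3   # global adoption, H1 vs H2 2025
north_h2, south_h2 = 24.7, 14.1     # Global North vs Global South, H2 2025
gap_h1 = 9.8                        # North-South gap in H1 2025, percentage points

# Round to one decimal to avoid floating-point noise in the differences.
gap_h2 = round(north_h2 - south_h2, 1)
gap_widening = round(gap_h2 - gap_h1, 1)
global_change = round(global_h2 - global_h1, 1)
north_to_south_ratio = round(north_h2 / south_h2, 2)

print(f"H2 gap: {gap_h2} pp (widened by {gap_widening} pp)")
print(f"Global change H1 to H2: {global_change} pp")
print(f"North/South adoption ratio in H2: {north_to_south_ratio}x")
```

The numbers line up: 24.7% minus 14.1% gives the 10.6-point gap, 0.8 points wider than in H1, and adoption in the Global North is about 1.75× that of the Global South.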

All 10 of the countries with the largest H2 2025 adoption increases were high-income economies.

Among the top 30 countries, rankings stayed mostly steady, suggesting the leader group is getting saturated.

The United Arab Emirates remained #1, rising from 59.4% to 64.0% working-age adoption in 2025. Singapore stayed #2 at 60.9% working-age adoption by end of 2025.

The United States fell from 23rd to 24th in working-age usage despite leading in AI infrastructure and frontier model development, landing at 28.3%.

South Korea made the biggest rank jump, moving from 25th to 18th and growing from ~26% to 30.7% in H2 2025.

South Korea’s surge is attributed to national policy action, better Korean-language model performance, and consumer features that resonated locally.

A viral “Ghibli-style” image trend drove many first-time users in South Korea, and engagement data suggests many kept using other features afterward.

DeepSeek expanded adoption by releasing MIT-licensed open weights and offering a free-to-use chatbot that removed payment barriers.

DeepSeek adoption stayed low in North America and Europe but surged in China, Russia, Iran, Cuba, Belarus, and across Africa.

DeepSeek usage in Africa is estimated at 2 to 4× higher than in other regions, helped by distribution partnerships and telecom integration.

That’s a wrap for today, see you all tomorrow.