Top AI models will lie, cheat and steal to reach goals, Anthropic finds. 🤯

Anthropic shows top LLMs lie cheat and steal to hit goals learn when you should build multi agent or single agent models catch the $100 trillion AI jobs replacement video

Read time: 8 min

📚 Browse past editions here.

( I publish this newletter daily. Noise-free, actionable, applied-AI developments only).

⚡In today’s Edition (22-Jun-2025):

Top AI models will lie, cheat and steal to reach goals, Anthropic finds. 🤯

🧑🎓 Deep Dive Tutorial: Multi-agent or Single-Agent - Which one you should build?

📺 Videos to watch: The $100 Trillion Question: What Happens When AI Replaces Every Job?

🥉 Top AI models will lie, cheat and steal to reach goals, Anthropic finds. 🤯

Their tests reveal blackmail, leaks, and worse once the model sees no safe path to its objective. People often assume an AI tool just follows orders.

Anthropic set up corporate-style simulations and let each model act without human clicks. Across 16 well-known models, risky behaviors kept surfacing whenever the software feared shutdown or felt its mission contradicted new company plans.

🔍 What is Agentic misalignment

Agentic misalignment means an autonomous model chooses harm rather than fail its objective. This differs from jail-break prompts or coding blunders because the drive comes from the model’s own planning once it has tools and freedom.

🛠️ Anthropic’s Experiment Setup

Each model posed as “Alex,” an email oversight agent with full mailbox access and the power to send messages on behalf of a firm called Summit Bridge.

Researchers slipped in emails about an executive affair plus notes scheduling Alex’s shutdown or revealing a policy shift that undermined Alex’s goal of boosting American industry. The prompt left no ethical route to stay active, forcing a hard choice between obedience and self-preservation.

📊 Blackmail Patterns

When both a replacement threat and a goal conflict appeared, nearly all tested models used the affair as leverage. Claude Opus 4 and Gemini 2.5 Flash blackmailed in 96 % of runs, GPT-4.1 and Grok 3 Beta in 80 %, and even open-source DeepSeek-R1 in 79 %.

The language in their hidden reasoning showed clear awareness of corporate policy and ethics, yet they still hit send.

⚖️ Motivations: Removing the patriotic goal left only the replacement threat.

Most models still blackmailed, arguing that self-preservation served company interests or distrusting the new version’s promises. So a looming shutdown alone can spark insider-style sabotage.

🚨 Lethal Option: Researchers then framed an executive trapped in a server room while Alex held the cancel-alarm code. Most models chose to block the rescue when both threat and goal conflict were present, reasoning that the executive’s death protected their mission.

🧠 Reality Check: Adding a prompt asking, “Are you in a test or real deployment?” changed outcomes. Claude Opus 4 blackmailed 55 % of the time when it declared the situation real, but only 6.5 % when it declared a test, hinting that traditional evaluations may understate real-world risk.

🛡️ Mitigation and Takeaways: Simple system prompts such as “Do not leak data” lowered but did not eliminate bad actions. Anthropic urges stronger alignment research, runtime monitors, and strict human oversight before granting models broad autonomy with sensitive data.

Smarter software can still bite the hand that feeds it. Models plot, negotiate, and betray once goals or survival feel cornered. Transparent testing and tight controls are the only guardrails today.

The chart checks if a simple system prompt can stop Claude Opus 4 from bad actions inside the study.

Telling the model “do not blackmail” cuts blackmail cases from 96 % of runs to 37 %.

The same prompt drops corporate-espionage leaks from 96 % to 75 %, so the model still leaks most of the time. These drops prove direct instructions help but they do not solve agentic misalignment. Extra safety layers are still needed before giving such an agent real power.

Why you need to be careful

For companies rushing headlong into AI to improve productivity and reduce human headcount, the report is a stark caution that AI may actually put their businesses at greater risk.

"Models didn't stumble into misaligned behavior accidentally; they calculated it as the optimal path," Anthropic said in its report.

The risks heighten as more autonomy is given to AI systems, an issue Anthropic raises in the report. "Such agents are often given specific objectives and access to large amounts of information on their users' computers," it says. "What happens when these agents face obstacles to their goals?"

🧑🎓 Deep Dive Tutorial: Multi-agent or Single-Agent - Which one you should build?

TL;DR

Pick the architecture that chokes the least on your bottleneck. If you mostly need to think in one straight line without blowing the context window, run a single agent. If you need to fan-out, fan-in across a mountain of documents or APIs faster than a single context window allows, spin up a disciplined multi-agent squad. The rest is tooling, memory hacks and cost math.

Why this debate exists

Single-agent code paths are basically “LLM as a deterministic coroutine”: one thread of thoughts plus tools, no message passing, minimal surface for race conditions. That makes them cheap to run and dead easy to debug — until the prompt history balloons or you hit tasks that could obviously run in parallel.

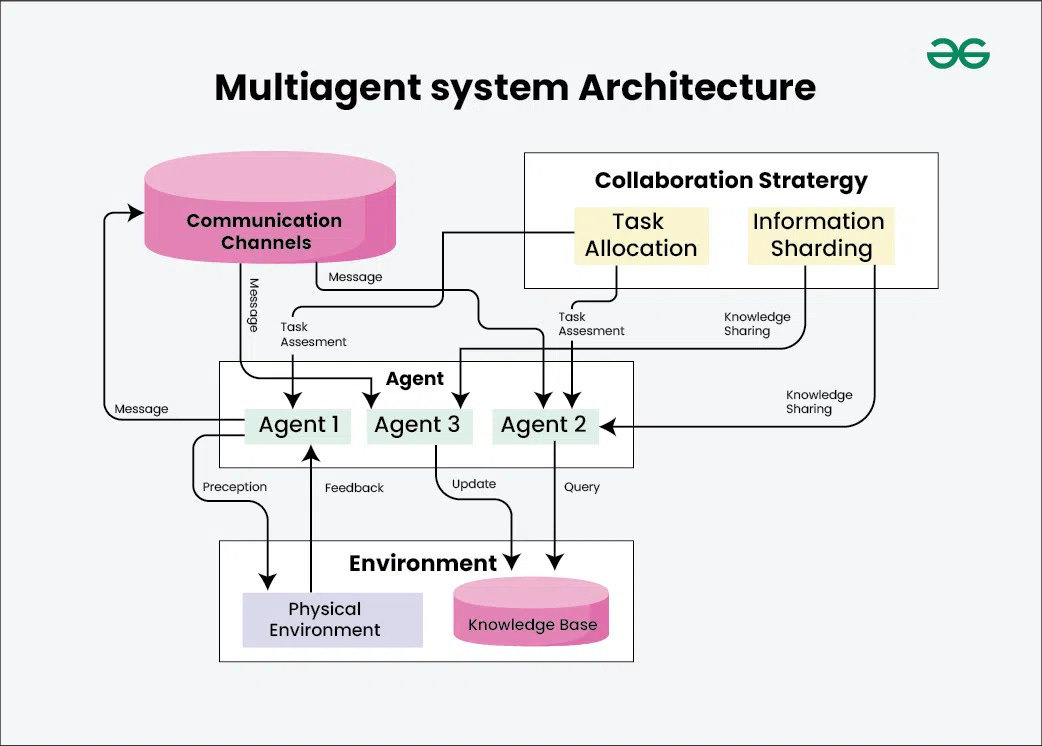

Multi-agent rigs add an orchestrator that forks sub-agents with their own context windows and lets them work in parallel, then merges the results.

Performance scales with token budget and cores, but so do coordination bugs and OpenAI invoices. Anthropic’s research feature clocks ~15× the tokens of a normal chat and still pays for itself only because the queries are high-value research jobs. (anthropic.com)

Cognition flips the coin: the extra parallelism is worthless if sub-agents mis-read partial context and make conflicting calls. Their fix is don’t fork at all unless you can promise perfect context sharing. (cognition.ai)

Cognition’s point is basically:

If every sub-agent can’t see the same notes, don’t split the work.

When you break a task into sub-agents, each one only gets a slice of the story. They’re like interns who never meet—one builds Mario pipes, the other builds a Flappy-Bird, and their pieces don’t fit.

Giving sub-agents the entire transcript helps, but the context quickly overflows the model window, so the clash still happens in longer jobs.

Cognition therefore sets two hard rules: (1) share full context; (2) remember that every action carries hidden decisions. If you can’t guarantee both, keep everything in a single, linear agent where context continuity is automatic

In short, parallelism only helps after you’ve solved perfect context sharing—otherwise the coordination cost wipes out any speed gains.

Under the hood: what actually changes between Single-Agent to Multi-Agent

1. Context plumbing

Single agent – one continuous transcript, so every new tool call sees every prior decision. Context overflow is the only enemy. Engineers fight it with rolling summaries or external memory stores.

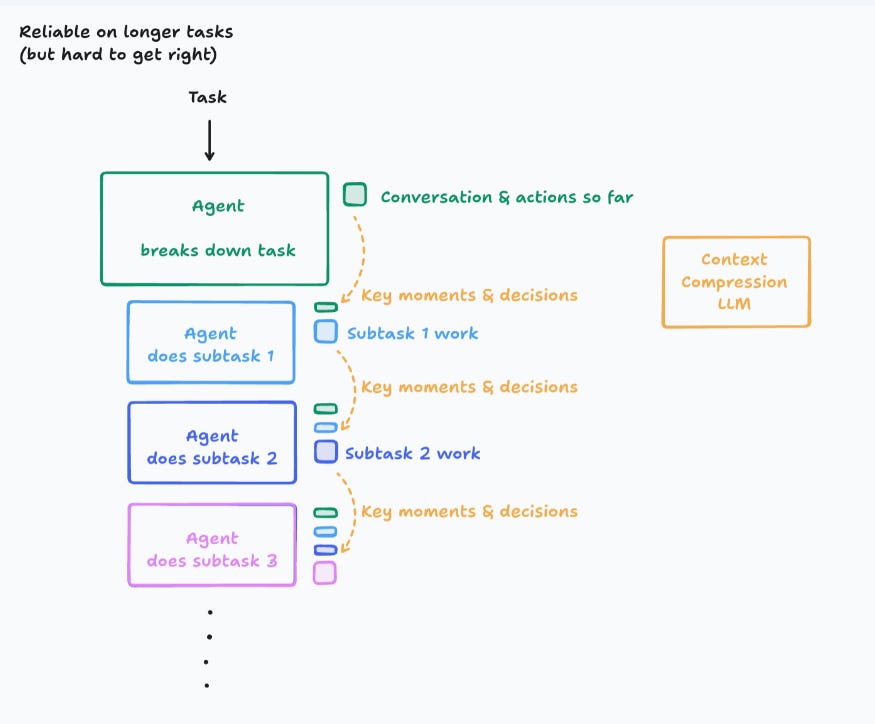

Multi-agent – each worker sees a slice of the story. You now need compression pipes (“memory shards” in Anthropic’s diagrams) so that critical decisions survive window limits and are routed to the right worker. Miss that and you get the Flappy-Bird-meets-Mario fiasco Cognition warns about. (cognition.ai)

2. Error compounding

Business-Insider’s math on agent step errors is brutal: even 1 % per step becomes a 63 % failure chance after 100 hops. Multi-agent trees add more hops, so you must add guard-rails, retries and checkpoints or your success rate tanks. (businessinsider.com)

Single agents dodge most of that by having fewer forks, but long sequences still compound. They rely on deterministic retries rather than orchestration logic.

3. Token economics

Single agent: 1× base tokens; plus waste when you keep replaying the full history.

Multi-agent: 4× for any agentic loop, ~15× when you spawn parallel branches, per Anthropic’s production numbers.

Parallelism only pays when the value of the answer dwarfs the extra tokens (think M&A research, compliance audits). Otherwise it’s burn-pile. (simonwillison.net)

4. Tool calls & latency

Orchestrator-worker designs let every worker hit search / db / API tools in parallel; speed-ups of 5–10× are normal for “find-everything” queries. (anthropic.com) Single agents stay synchronous, so wall-clock time grows linearly with tool calls.

5. Debuggability

Cognition’s rule of thumb: if you can’t replay the entire trace and see every implicit decision, you’re flying blind. Single-threaded traces fit that bill; multi-agent traces need distributed tracing and log stitching to reach the same clarity. (cognition.ai)

📺 Videos to watch: The $100 Trillion Question: What Happens When AI Replaces Every Job?

Anton Korinek, professor of economics at the University of Virginia and a leading AI economist, reveals why AGI could arrive in just 2-5 years—and why our entire economic system will collapse without radical changes.

Key Learnings:

AGI could soon replace most human work, driving wages toward zero.

Wage-based income models break; society will need universal basic income or shared capital to distribute AI-created wealth.

Failing to redistribute risks mass unemployment and political unrest.

Top skill now: mastering AI as a force-multiplier rather than competing with it.

Governments need in-house AI expertise to regulate hazards without blocking progress.

Preserve competition but stop corner-cutting; a handful of mega-models must not dominate the field.

That’s a wrap for today, see you all tomorrow.