🗞️ TOP AI Papers of last week (12-Jan-2025 to 19-Jan-2025)

The most discussed AI paper from the last week.

Entire Paper List will not be shown in your email - read it on the web here.

Some of the most discussed AI papers of from last week (12-Jan-2025 to 19-Jan-2025):

Enhancing Retrieval-Augmented Generation: A Study of Best Practices

MangaNinja: Line Art Colorization with Precise Reference Following

Multiagent Finetuning: Self Improvement with Diverse Reasoning Chains

OmniThink: Expanding Knowledge Boundaries in Machine Writing through Thinking

The Lessons of Developing Process Reward Models in Mathematical Reasoning

🗞️ "Eliza: A Web3 friendly AI Agent Operating System"

https://arxiv.org/abs/2501.06781

Web3 meets AI

Eliza is a web3-focused AI agent operating system that enables seamless integration between AI agents and blockchain applications through TypeScript-based modular architecture .

🤖 Original Problem:

The web3 domain lacks a framework that can effectively integrate AI agents with blockchain applications, making it difficult for developers to create autonomous agents that can interact with decentralized systems .

🔧 Solution in this Paper:

→ Eliza introduces a TypeScript-based framework with four key components: Adapter, Character, Client, and Plugin .

→ The system employs a multi-layered intent recognition approach combining symbolic actions with contextual understanding .

→ A pluggable architecture allows seamless integration of blockchain operations, social media interactions, and AI capabilities .

→ The framework supports multiple model providers and blockchain platforms through an extensible plugin system .

💡 Key Insights:

→ Web3 developers need simplified tools to integrate AI capabilities with blockchain operations

→ Modular design with clear interfaces enables better extensibility and maintenance

→ TypeScript provides better developer experience compared to Python-based alternatives

→ Intent recognition is crucial for autonomous agent operations in web3 space

📊 Results:

→ Achieved 32.21% accuracy on Level 1 GAIA benchmark tasks

→ Surpassed AutoGPT's performance by 14.42% on average

→ Integrated with projects worth $20+ billion in market capitalization

→ Supports 10+ blockchain platforms including Ethereum, Solana, and BASE

🗞️ "Enhancing Retrieval-Augmented Generation: A Study of Best Practices"

https://arxiv.org/abs/2501.07391

This paper explores best practices for Retrieval-Augmented Generation (RAG) systems, examining how different components and configurations impact LLM response quality.

→ This paper tests various RAG components including query expansion, retrieval strategies, and contrastive in-context learning.

→ They evaluate factors like LLM size, prompt design, chunk size, knowledge base size, and retrieval stride.

→ A novel Contrastive In-context Learning RAG and a “Focus Mode” for retrieving relevant context at the sentence level are introduced.

Key Insights from this Paper 🔑:

→ Contrastive In-context Learning significantly improves RAG performance, especially for specialized knowledge.

→ Focusing on relevant sentences ("Focus Mode") enhances response quality by reducing noise and improving relevance.

→ LLM size matters, but bigger isn't always significantly better, especially for specialized tasks.

→ Prompt design is crucial, even small changes affect performance.

Results 💯:

→ ICL1Doc+ (Contrastive In-context Learning with one retrieved document and contrastive examples) achieves 27.79 ROUGE-L on TruthfulQA and 23.87 ROUGE-L on MMLU.

→ 120Doc120S (Focus Mode with 120 retrieved sentences) improves Embedding Cosine Similarity by 0.81% on MMLU.

→ Instruct45B outperforms Instruct7B on TruthfulQA but less so on MMLU.

🗞️ "LlamaV-o1: Rethinking Step-by-step Visual Reasoning in LLMs"

https://arxiv.org/abs/2501.06186

Step-by-step visual reasoning that's both accurate and lightning-fast.

LlamaV-o1 introduces a comprehensive framework for step-by-step visual reasoning in LLMs, with a new benchmark, evaluation metric, and curriculum learning approach.

🤔 Original Problem:

→ Current visual reasoning models lack systematic evaluation methods and struggle with step-by-step problem solving, leading to inconsistent and unreliable results.

→ Existing benchmarks focus mainly on final answers, ignoring the quality of intermediate reasoning steps.

🔍 Solution in this Paper:

→ Introduces VRC-Bench, a benchmark with 8 categories and 4,173 reasoning steps for evaluating multi-step visual reasoning.

→ Implements a novel metric assessing reasoning quality at individual step level, focusing on correctness and logical coherence.

→ Develops LlamaV-o1, a multimodal model using curriculum learning and beam search for efficient inference.

→ Uses two-stage training: first for summarization and caption generation, then for detailed reasoning.

💡 Key Insights:

→ Step-by-step reasoning improves model interpretability and accuracy

→ Curriculum learning helps models develop foundational skills before tackling complex tasks

→ Beam search optimization reduces computational complexity from O(n²) to O(n)

📊 Results:

→ LlamaV-o1 achieves 67.3% average score across benchmarks, 3.8% higher than Llava-CoT

→ 5× faster inference scaling compared to existing methods

→ Strong performance in Math & Logic (83.18%), Scientific Reasoning (86.75%), OCR tasks (93.44%)

🗞️ "MangaNinja: Line Art Colorization with Precise Reference Following"

https://arxiv.org/abs/2501.08332

The paper introduces an AI model that accurately colors line art by using reference images and precise point-control, enabling realistic manga/anime colorization.

Original Problem 🎯:

→ Existing line art colorization methods struggle with semantic mismatches between reference images and line art, requiring highly similar references and lacking precise control over color details.

Solution in this Paper 🔧:

→ MangaNinja uses a dual-branch architecture with diffusion models for finding correspondences between reference and line art images.

→ A patch shuffling module divides reference images into small patches to improve local matching capabilities.

→ A point-driven control scheme powered by PointNet enables detailed color control through user-defined points.

→ The model leverages anime video frames for training, using one frame as reference and another's line art version as target.

Key Insights 🔍:

→ Patch shuffling pushes the model to learn implicit matching by breaking global patterns

→ Point control only works effectively when model understands local semantics

→ Video frame pairs provide natural semantic correspondences for training

Results 📊:

→ Outperforms existing methods with DINO score of 69.91 and CLIP score of 90.02

→ Achieves 21.34 PSNR and 0.972 MS-SSIM for image quality metrics

→ Shows superior performance in handling extreme poses, shadows, and multi-character colorization

🗞️ "Multiagent Finetuning: Self Improvement with Diverse Reasoning Chains"

https://arxiv.org/abs/2501.05707

Training specialized LLM agents separately prevents them from learning the same limited solutions.

A novel multiagent finetuning approach that improves LLMs by training specialized agents through multiagent interactions, enabling continuous self-improvement while maintaining response diversity.

🤔 Original Problem:

→ Current LLM self-improvement methods hit performance plateaus after 2-3 rounds of finetuning due to decreased response diversity and model convergence.

🔧 Solution in this Paper:

→ Instead of finetuning a single model, this paper creates multiple specialized agents from the same base model.

→ These agents are split into two types: generation agents that produce initial responses, and critic agents that evaluate and refine those responses.

→ Each agent is finetuned on independent datasets created through multiagent debates.

→ The system maintains diversity by training each agent on different subsets of data, preventing convergence to similar solutions.

💡 Key Insights:

→ Multiple specialized agents preserve diverse reasoning approaches better than single-agent methods

→ Independent finetuning prevents models from converging to similar solutions

→ Critic agents significantly improve performance by providing targeted feedback

→ The method works with both open-source and proprietary LLMs

📊 Results:

→ Outperforms single-agent methods across reasoning tasks:

Arithmetic: 99.62% accuracy

Grade School Math: 85.60% accuracy

MATH dataset: 60.60% accuracy

→ Shows strong zero-shot generalization to new datasets

→ Maintains performance gains over 5+ iterations of finetuning

🗞️ "OmniThink: Expanding Knowledge Boundaries in Machine Writing through Thinking"

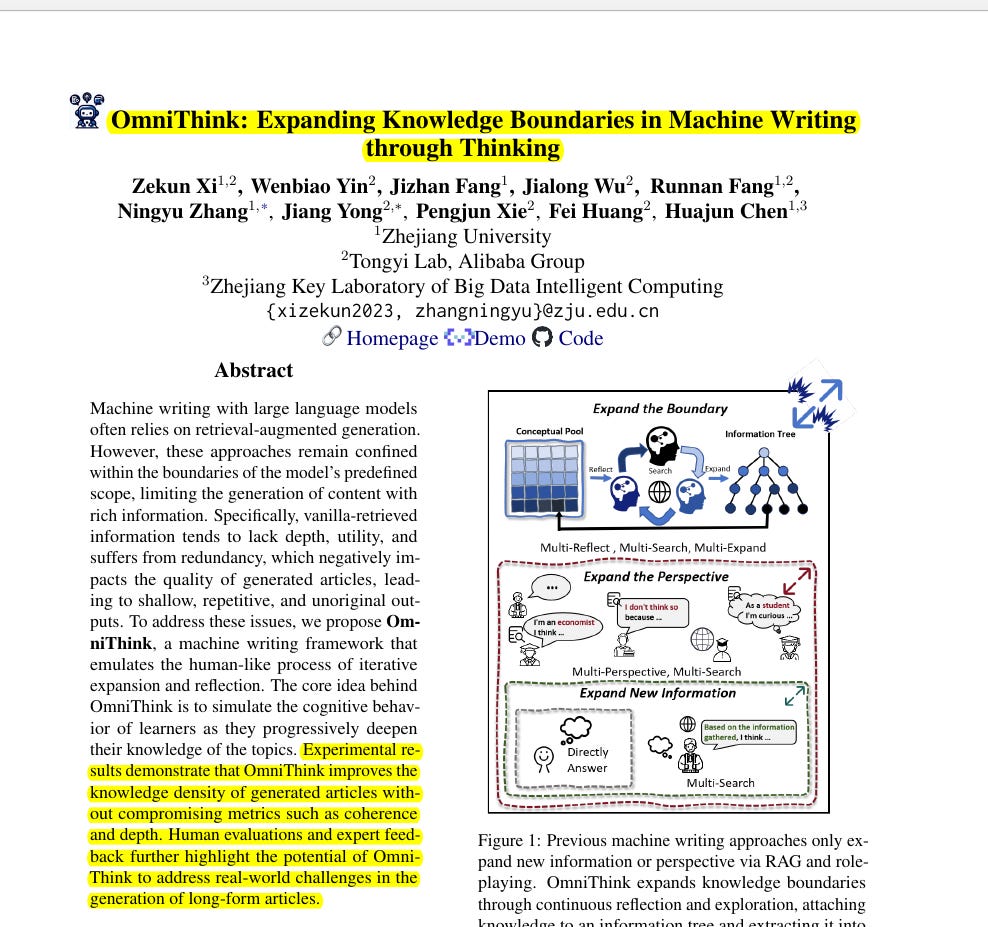

https://arxiv.org/abs/2501.09751

This paper introduces OmniThink, a machine-writing framework designed to enhance the quality of articles generated by LLMs through a process that mimics human iterative expansion and reflection. It aims to improve knowledge density and content originality.

Original Problem:😟

→ Current retrieval-augmented generation methods used in machine writing with LLMs are often confined within the model's predefined scope.

→ This leads to generated content lacking depth, utility, and originality, often resulting in shallow, repetitive outputs.

→ Vanilla-retrieved information is frequently redundant and lacks the nuanced understanding that comes from a more iterative and reflective process.

Solution in this Paper: 🧠

→ OmniThink emulates the human-like cognitive process of iterative expansion and reflection.

→ The framework progressively deepens its understanding of complex topics to expand knowledge boundaries, similar to how learners gradually enhance their knowledge.

→ It employs continuous expansion and reflection to determine optimal steps for further exploration, dynamically adjusting retrieval strategies.

→ An information tree and a conceptual pool are constructed to organize retrieved information and represent the model's understanding.

→ This approach integrates reasoning and planning to extract non-overlapping, high-density information, leading to articles with higher knowledge density.

Key Insights from this Paper: 💡

→ Simulating human-like cognitive processes in machine writing can significantly improve the quality and depth of generated content.

→ Continuous reflection on previously gathered information allows for dynamic adjustment of retrieval strategies, enhancing the relevance and utility of the information.

→ Integrating an information tree and conceptual pool provides a structured way to organize knowledge, leading to more coherent and insightful articles.

→ The iterative process of expansion and reflection results in higher knowledge density without compromising metrics like coherence and depth.

Results:📈

→ Improves knowledge density of generated articles to 22.31 with GPT-4o.

→ Achieves a novelty score of 4.31 with GPT-4o.

→ Shows information diversity of 0.6642 with GPT-4o.

🗞️ "Predicting Human Brain States with Transformer"

https://arxiv.org/abs/2412.19814v1

Your brain's next 5 seconds, predicted by AI

Transformer predicts brain activity patterns 5 seconds into future using just 21 seconds of fMRI data

Achieves 0.997 correlation using modified time-series Transformer architecture

🧠 Original Problem:

Predicting future brain states from fMRI data remains challenging, especially for patients who can't undergo long scanning sessions. Current methods require extensive scan times and lack accuracy in short-term predictions.

🔬 Solution in this Paper:

→ The paper introduces a modified time series Transformer with 4 encoder and 4 decoder layers, each containing 8 attention heads

→ The model takes a 30-timepoint window covering 379 brain regions as input and predicts the next brain state

→ Training uses Human Connectome Project data from 1003 healthy adults, with preprocessing including spatial smoothing and bandpass filtering

→ Unlike traditional approaches, this model omits look-ahead masking, simplifying prediction for single future timepoints

🎯 Key Insights:

→ Temporal dependencies in brain states can be effectively captured using self-attention mechanisms

→ Short input sequences (21.6s) suffice for accurate predictions

→ Error accumulation follows a Markov chain pattern in longer predictions

→ The model preserves functional connectivity patterns matching known brain organization

📊 Results:

→ Single timepoint prediction achieves MSE of 0.0013

→ Accurate predictions up to 5.04 seconds with correlation >0.85

→ First 7 predicted timepoints maintain high accuracy

→ Outperforms BrainLM with 20-timepoint MSE of 0.26 vs 0.568

Here's the main idea: This model takes a sequence of fMRI "snapshots" of the brain and tries to guess what the next snapshot will look like. It's like predicting the next frame in a movie, but instead of visual frames, we're dealing with brain activity patterns.

The encoder receives 30 time points, each a 379-value vector representing the activity of different brain regions. The encoder input layer embeds this data into a higher-dimensional space. Positional encoding then adds temporal information to the sequence.

Four encoder layers, each with multi-head self-attention and a feed-forward network, process the sequence, learning complex relationships between brain regions and time points. The decoder takes the encoder's output and the last time point, embedding it via the decoder input layer. Four decoder layers then process this, incorporating the encoded sequence information. Finally, a linear layer maps the decoder's output to a 379-value prediction of the next time point's brain activity.

🗞️ "The Lessons of Developing Process Reward Models in Mathematical Reasoning"

https://arxiv.org/abs/2501.07301

The paper proposes a consensus filtering mechanism that combines Monte Carlo estimation with LLM-as-judge to improve Process Reward Models in mathematical reasoning.

🤔 Original Problem:

→ Current Process Reward Models (PRMs) struggle with data quality and evaluation metrics, leading to unreliable mathematical reasoning verification

→ Monte Carlo estimation methods produce noisy data, while human annotation is expensive

🔧 Solution in this Paper:

→ Introduces a consensus filtering mechanism that only keeps data samples where both Monte Carlo estimation and LLM-as-judge agree on error locations

→ Implements hard labels instead of soft labels for training, treating steps as correct only if they can lead to correct answers

→ Combines response-level and step-level metrics for more comprehensive evaluation

→ Uses Qwen2.5-72B-Instruct as the judge model to verify reasoning steps

💡 Key Insights:

→ Monte Carlo estimation alone yields inferior performance compared to LLM-as-judge approaches

→ Best-of-N evaluation can be misleading due to correct answers from flawed reasoning processes

→ PRMs trained solely on Best-of-N tend to drift towards outcome-based rather than process-based assessment

📊 Results:

→ New PRM achieves 69.3% accuracy on Best-of-8 evaluation, outperforming existing models

→ Demonstrates 78.3% F1 score on ProcessBench, significantly higher than baseline 56.5%

→ Reduces training data by 60% while maintaining performance through consensus filtering

🗞️ "VideoRAG: Retrieval-Augmented Generation over Video Corpus"

https://arxiv.org/abs/2501.05874

VideoRAG taps into YouTube-like videos to make AI responses more accurate and visually informed.

VideoRAG enhances traditional RAG systems by leveraging video content as external knowledge, using LVLMs to process both visual and textual elements for more accurate and contextually rich responses.

🤔 Original Problem:

→ Current RAG systems primarily rely on text and sometimes images, missing out on the rich multimodal information available in videos

→ Existing video-based approaches either assume pre-selected videos or convert videos to text, losing valuable visual context

🔍 Solution in this Paper:

→ VideoRAG dynamically retrieves relevant videos from a large corpus based on query similarity

→ It processes both visual frames and textual elements (subtitles/transcripts) using LVLMs

→ For videos without subtitles, it employs speech recognition to generate auxiliary text

→ The system uses InternVideo2 for video-text alignment during retrieval and LLaVA-Video-7B for response generation

💡 Key Insights:

→ Combined visual-textual features outperform individual modalities in video retrieval

→ Optimal ratio between textual and visual features is 0.5-0.7 for retrieval performance

→ Visual information is crucial for queries requiring demonstration or temporal understanding

📊 Results:

→ Outperforms text-based RAG baselines across ROUGE-L (0.254 vs 0.172), BLEU-4 (0.054 vs 0.032)

→ Shows 25% improvement in retrieval accuracy when combining visual and textual features

→ Particularly excels in Food & Entertainment category due to strong visual components