Top LLM Papers of the past week

The top influential papers released during 14-Aug to 23-Aug 2024

Highlighting the following research papers for the pervious week (14-Aug to 23-Aug 2024)

[1] Automated Design of Agentic Systems

📚 https://arxiv.org/abs/2408.08435

Github - https://github.com/ShengranHu/ADAS

Automated agent design significantly surpasses manual approaches in performance and generalizability.

The Paper proposes 🔬:

• Introduces Automated Design of Agentic Systems (ADAS), a new research area for automatic creation of powerful agentic system designs

• Represents agents in code, enabling a meta agent to program increasingly better agents

• Proposes Meta Agent Search algorithm:

Iteratively generates new agents based on an evolving archive of previous discoveries

Uses Foundation Models to create agents, evaluate performance, and refine designs • Encompasses a search space including all possible components of agentic systems:

Prompts

Tool use

Control flows • Theoretically enables discovery of any possible agentic system

Results📊:

• Outperforms state-of-the-art hand-designed agents across multiple domains • Improves F1 scores on reading comprehension (DROP) by 13.6/100 • Increases accuracy on math tasks (MGSM) by 14.4% • Demonstrates strong transferability:

25.9% accuracy improvement on GSM8K after domain transfer

13.2% accuracy improvement on GSM-Hard after domain transfer • Maintains superior performance when transferred across dissimilar domains and models

[2]. Automating Thought of Search

📚 https://arxiv.org/pdf/2408.11326

Automated agent design significantly surpasses manual approaches in performance and generalizability.

The Paper proposes 🔬:

• Introduces Automated Design of Agentic Systems (ADAS), a new research area for automatic creation of powerful agentic system designs • Represents agents in code, enabling a meta agent to program increasingly better agents • Proposes Meta Agent Search algorithm:

Iteratively generates new agents based on an evolving archive of previous discoveries

Uses Foundation Models to create agents, evaluate performance, and refine designs • Encompasses a search space including all possible components of agentic systems:

Prompts

Tool use

Control flows • Theoretically enables discovery of any possible agentic system

Results📊:

• Outperforms state-of-the-art hand-designed agents across multiple domains • Improves F1 scores on reading comprehension (DROP) by 13.6/100 • Increases accuracy on math tasks (MGSM) by 14.4% • Demonstrates strong transferability:

25.9% accuracy improvement on GSM8K after domain transfer

13.2% accuracy improvement on GSM-Hard after domain transfer • Maintains superior performance when transferred across dissimilar domains and models

[3]. Customizing Language Models with Instance-wise LoRA for Sequential Recommendation

📚 https://arxiv.org/pdf/2408.10159

From LoRA to iLoRA 😆 - Nice proposal in this paper.

iLoRA personalizes LLM recommendations by integrating LoRA with Mixture of Experts for improved accuracy.

Instance-wise LoRA tailors recommendations to individual users, enhancing sequential recommendation performance.

Original Problem 🚩:

Sequential recommendation systems using uniform LoRA modules fail to capture individual user variability, leading to suboptimal performance and negative transfer between disparate sequences.

Key Insights from this Paper 💡:

• User behaviors exhibit substantial individual variability

• Uniform LoRA application overestimates relationships between sequences

• iLoRA mitigates negative transfer in LLM-based recommendations through personalized expert activation.

• Mixture of Experts can tailor LLMs for individual variability in recommendations

• Sequence representations can guide expert participation

Solution in this Paper 🛠️:

• Introduces Instance-wise LoRA (iLoRA)

• Integrates LoRA with Mixture of Experts framework

• Creates diverse array of experts capturing specific user preference aspects

• Implements sequence representation guided gate function

• Generates enriched representations to guide gating network

• Produces customized expert participation weights

• Maintains same total parameters as standard LoRA

• Fine-tunes LLM (LLaMA-2) on hybrid prompts with personally-activated LoRA

Results 📊:

• Outperforms existing methods on three benchmark datasets

• Demonstrates superior performance in capturing user-specific preferences

• Improves recommendation accuracy compared to uniform LoRA approaches

• Mitigates negative transfer between discrepant sequences

• Dynamically adapts to diverse user behavior patterns

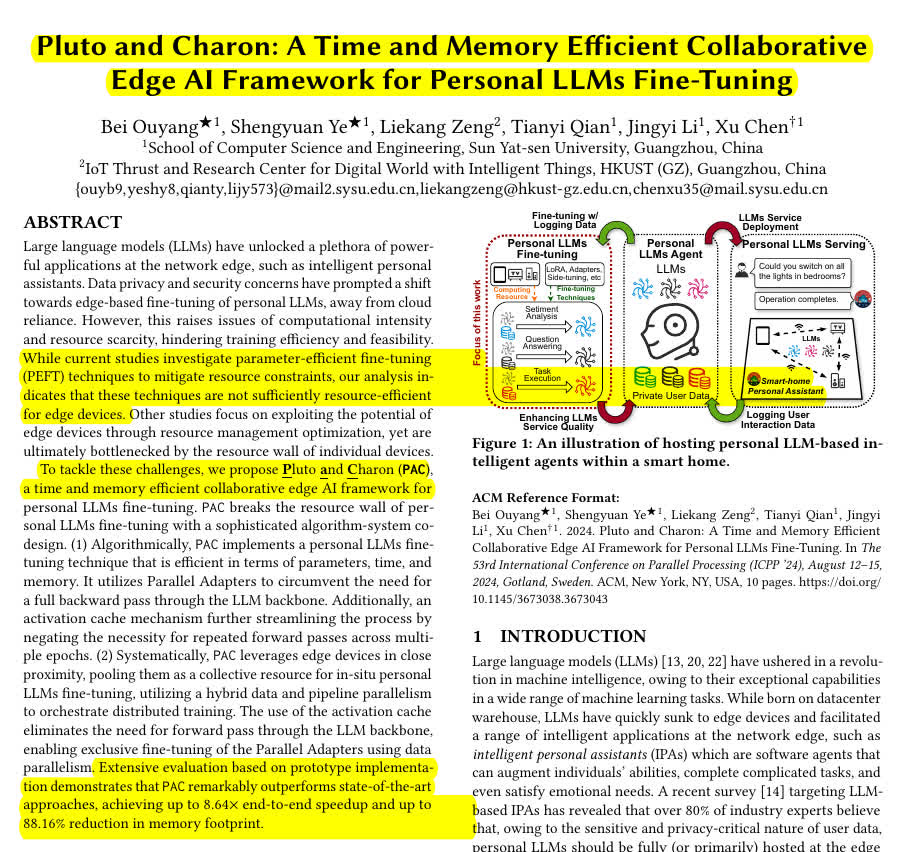

[4]. Pluto and Charon A Time and Memory Efficient Collaborative Edge AI Framework for Personal LLMs Fine-Tuning

📚 https://arxiv.org/pdf/2408.10746

Great new efficiency technique achieves significant speedup and memory reduction in LLM fine-tuning.

Up to 8.64× end-to-end speedup compared to state-of-the-art approaches🤯

And 88.16% reduction in memory footprint

Key Insights from this Paper 💡:

• Collaborative edge computing can break single-device resource limitations • Parallel Adapters enable efficient fine-tuning without full LLM backpropagation • Activation caching significantly reduces computational overhead across epochs • Hybrid parallelism optimizes resource utilization in edge environments

Solution in this Paper 🛠️:

• Pluto and Charon (PAC) framework for collaborative edge AI LLM fine-tuning • Parallel Adapters: Lightweight, separate network for parameter-efficient tuning • Activation cache: Stores invariant LLM backbone activations for reuse • Hybrid parallelism: Combines data and pipeline parallelism across devices • Two-phase fine-tuning: Initial epoch with hybrid parallelism, subsequent epochs with data parallelism and cached activations

Results 📊:

• Comparable or superior performance to full model fine-tuning and PEFT techniques • 31.94% to 56.24% reduction in average sample training time without cache • Up to 96.39% reduction in average sample training time with activation cache.

A general workflow of Pluto and Charon (PAC) framework for collaborative edge AI LLM fine-tuning

1. Equip the target LLM with Parallel Adapters

2. Profile the LLM using a calibration dataset on edge devices

3. Use the PAC planner to generate parallel configurations based on profiling results

4. Configure Parallel Adapters as trainable while freezing LLM backbone parameters

5. Apply parallel configurations to edge devices for hybrid data and pipeline parallelism fine-tuning

6. Leverage activation cache in subsequent epochs to accelerate fine-tuning

[5]. PolyRouter: A Multi-LLM Querying System

📚 https://arxiv.org/abs/2408.12320v1

Intelligent query routing in PolyRouter, to choose the best optimal model, will give you 30% cost reduction compared to no routing 🤯

Original Problem 🔍:

No single LLM outperforms all others across every task. The challenge lies in efficiently routing queries to the most suitable LLM expert while balancing query execution throughput, costs, and model performance.

Key Insights 💡:

• Multi-LLM routing can significantly improve efficiency and reduce costs • Learning the embedding space of query prompts is crucial for effective routing • Soft labels based on BERT similarity scores enhance routing model training

Solution in this Paper 🛠️:

• PolyRouter: A multi-LLM querying system that routes queries to expert models

• Predictive routing methods trained on benchmark datasets:

Random-Router: Randomly selects an expert

1NN-Router: Uses cosine similarity in embedding space

MLP-Router: 2-layer perceptron with Bag-of-Words input

BERT-Router: Fine-tuned BERT model for sequence classification

• Soft label approach using scaled BERT similarity scores for training

• Evaluation across multiple dimensions: cost, throughput, BERT similarity, negative log-likelihood

Results 📊:

• BERT-Router outperforms standalone experts and other routing methods:

30% cost reduction compared to no routing

40% query inference throughput increase

11% improvement in BERT similarity score

6% reduction in negative log-likelihood

• Routing methods learned embedding space (MLP, BERT) outperform naive methods

The system to efficiently route queries to the most suitable LLM expert while optimizing for cost, throughput, and performance.

1. Router Data Preparation 📊:

• Collects domain-specific instruction datasets and model experts • Performs forward pass over each expert model to gather metrics • Generates soft labels using BERT similarity scores • Prepares final training and testing dataset

2. Router Training 🧠:

• Passes instruction records through embedding model (e.g., BERT) • Uses generated embeddings to train prompt-to-expert classifier • Employs various approaches like kNN, MLP, or BERT-based models

3. Router Deployment 🚀:

• Deploys trained router as standalone endpoint • Processes incoming queries by tokenizing and encoding • Predicts most relevant expert model for each query • Forwards query to selected expert and returns response to user

[6]. RAGLAB: A Modular and Research-Oriented Unified Framework

📚 https://arxiv.org/abs/2408.11381

👨🏽💻 https://github.com/fate-ubw/RAGLab

RAGLAB, great for standardizing RAG research, modular design and fair comparisons.

Original Problem 🔍:

RAG research faces challenges due to lack of fair comparisons between algorithms and limitations of existing open-source tools, hindering development of novel techniques.

Solution in this Paper 🛠️:

• Introduces RAGLAB, an open-source library for RAG research • Features:

Modular architecture for each RAG component

Reproduces 6 existing RAG algorithms

Standardizes key experimental variables: • Generator fine-tuning • Instructions • Retrieval configurations • Knowledge bases • Benchmarks

Comprehensive evaluation ecosystem

Interactive mode and user-friendly interface

Results 📊:

• Conducts fair comparison of 6 RAG algorithms across 10 benchmarks • Demonstrates RAGLAB's effectiveness in:

Enhancing research efficiency (85% of users reported)

Facilitating algorithm comparison and development • 90% of users willing to recommend RAGLAB to other researchers

[7] TurboEdit: Instant text-based image editing

📚 https://arxiv.org/pdf/2408.08332

A new research from Adobe. TurboEdit enables real-time, high-quality image editing using few-step diffusion models and detailed text prompts.

Original Problem 🔍:

Existing image editing techniques for few-step diffusion models struggle with precise image inversion and disentangled editing, limiting their real-time application potential.

Key Insights 💡:

Detailed text prompts enable better disentangled control in few-step diffusion models

Freezing noise maps while modifying text prompts allows targeted attribute changes

Iterative inversion with encoder-based techniques improves reconstruction quality

Solution in this Paper 🛠️:

Encoder-based iterative inversion network conditioned on input and previous reconstructions

Automatic generation of detailed text prompts for disentangled control

Freeze noise maps and modify single attributes in text prompts for targeted editing

Linear interpolation of text embeddings for editing strength control

Integration with LLMs for instruction-based editing

Results 📊:

Inversion: 8 NFEs (one-time cost)

Editing: 4 NFEs per edit

Speed: <0.5 seconds per edit (vs >3 seconds for multi-step methods)

Outperforms state-of-the-art in background preservation and CLIP similarity