Read time: 14 min

📚 Browse past editions here.

( I publish this newletter daily. Noise-free, actionable, applied-AI developments only).

⚡Top Papers of last week (Ending 2-Nov-25):

🗞️ The Principles of Diffusion Models

🗞️ Kimi Linear: An Expressive, Efficient Attention Architecture

🗞️ A Practitioner’s Guide to Kolmogorov-Arnold Networks

🗞️ An efficient probabilistic hardware architecture for diffusion-like models

🗞️ Beyond Hallucinations: The Illusion of Understanding in LLMs

🗞️ Paper2Web: Let’s Make Your Paper Alive!

🗞️ DeepAnalyze: Agentic Large Language Models for Autonomous Data Science

🗞️ Emergent Introspective Awareness in Large Language Models

🗞️ “The End of Manual Decoding: Towards Truly End-to-End Language Models”

🗞️ DeepAgent: A General Reasoning Agent with Scalable Toolsets

🗞️ The Principles of Diffusion Models

Stanford just published a huge 470-page study 📕

Explains how diffusion models turn noise into data and ties their main ideas together. It starts from a forward process that adds noise over time, then learns the exact reverse.

The reverse uses a time dependent velocity field that tells how to move a sample at each step. Sampling becomes solving a time based equation that carries noise to data along a trajectory.

There are 3 views of this idea, variational, score-based, and flow-based, and they describe the same thing. There are also 4 training targets, noise, clean data, score, and velocity, and these are equivalent.

Shows how guidance can steer outputs using a prompt or label without extra classifiers. Reviews fast solvers that cut steps while keeping quality stable.

Explains distillation methods that shrink many sampling steps into a few by mimicking a teacher model. Introduces flow map models that learn direct jumps between times for fast generation from scratch.

🗞️ Kimi Linear: An Expressive, Efficient Attention Architecture

The brilliant Kimi Linear paper. Their proposed attention mechanism is a hybrid attention that beats full attention while cutting memory by up to 75% and keeping 1M token decoding up to 6x faster.

It cuts the key value cache by up to 75% and delivers up to 6x faster decoding at 1M context. Full attention is slow because it compares every token with every other token and stores all past keys and values.

Kimi Linear speeds this up by keeping a small fixed memory per head and updating it step by step like a running summary, so compute and memory stop growing with length. Their new Kimi Delta Attention adds a per channel forget gate, which means each feature can separately decide what to keep and what to fade, so useful details remain and clutter goes away.

They also add a tiny corrective update on every step, which nudges the memory toward the right mapping between keys and values instead of just piling on more data. The model stacks 3 of these fast KDA layers then 1 full attention layer, so it still gets occasional global mixing while cutting the key value cache roughly by 75%.

Full attention layers run with no positional encoding, and KDA learns order and recency itself, which simplifies the stack and helps at long ranges. Under the hood, a chunkwise algorithm plus a constrained diagonal plus low rank design removes unstable divisions and drops several big matrix multiplies, so the kernels run much faster on GPUs.

With the same training setup, it scores higher on common tests, long context retrieval, and math reinforcement learning, while staying fast even at 1M tokens. It drops into existing systems, saves memory, scales to 1M tokens, and improves accuracy without serving changes.

🗞️ A Practitioner’s Guide to Kolmogorov-Arnold Networks

Yale and Hong Kong Univ published a hefty 63 page study “A Practitioner’s Guide to Kolmogorov-Arnold Networks (KAN)”. Explaining what they are and how to use them.

Standard multilayer perceptrons mix inputs with linear weights then push the result through a fixed nonlinearity, which can make them rigid, hard to interpret, and slow to learn fine details. Kolmogorov Arnold Networks flip that order by applying small learnable 1D functions on each input edge first, then summing them, which gives local control, clearer behavior, and often fewer parameters for the same job.

The design is inspired by a classic result that any multi input function can be built from sums of 1D transforms, so the network mirrors that recipe in a trainable way. The key dial is the choice of the 1D basis function, because that choice sets smoothness, locality, periodicity, and compute cost.

Splines give smooth, local bumps that are easy to shape near boundaries, so they are a solid default for many problems. These 1D functions come from bases like B splines, Chebyshev, Gaussians, Fourier, or wavelets.

The basis choice sets smoothness, locality, and cost, so picking it is the main design step. KANs can match MLPs and sometimes use fewer parameters, but each step can cost more compute.

For science tasks, they add physics to the loss, adapt sample points, and split domains when needed. Across many tests, these tools help KANs equal or beat plain MLPs on hard partial differential equations.

The paper also provides theory on approximation, kernel based training, and reduced spectral bias. The ends with a practical choose your KAN guide linking problem types to basis and training choices.

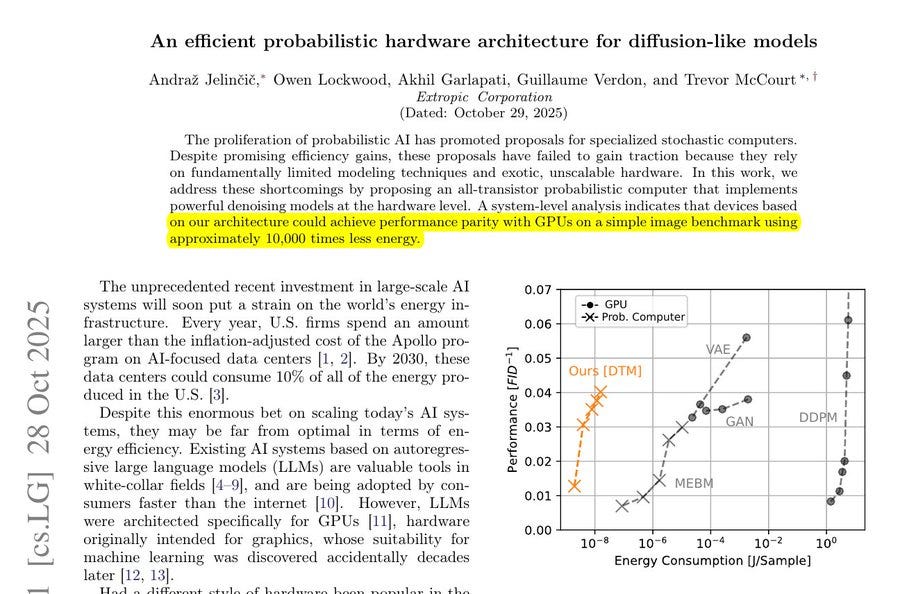

🗞️ An efficient probabilistic hardware architecture for diffusion-like models

The paper behind Extropic’s massive claim of 10,000x lower energy than a GPU. Builds a transistor-only random computer that runs denoising models efficiently.

On a small image task, it targets about 10,000x lower energy than a GPU. Past designs used 1 big model, which got slow and costly as it fit better.

They switch to many simple denoising steps that turn noise back into data. They get the big drop in energy by turning sampling into thousands of tiny local flips inside the chip, not big math on a GPU.

Each step runs on a grid of binary sampling cells that only talk locally. A tiny transistor circuit makes random bits with a bias set by a voltage.

Each update happens where the data lives using small transistor cells that store state and update it, so there is almost no long wire movement or off-chip memory traffic. The only operation per cell is to add a few neighbor signals and draw a biased random bit from a tiny on-chip circuit, which costs femtojoules instead of the picojoules to nanojoules of GPU math.

Because the steps are simple and local, the chip runs many cells in parallel and spends most energy on cheap local communication rather than expensive floating point operations. Training stays steady because a feedback penalty keeps the sampler well mixed.

For non-binary data, a small encoder maps inputs in and a decoder maps outputs back. So diffusion style generation runs in standard silicon without exotic parts.

🗞️ Beyond Hallucinations: The Illusion of Understanding in LLMs

This paper says language models often sound like they understand things, but they do not. Authors gives a clear checklist to prevent the illusion of understanding when using LLMs.

LLMs guess the next word from patterns in data, so fluent answers can still be wrong. The authors explain that many mistakes come from language itself, which is messy and subjective.

They propose a simple mental model called Rose-Frame to spot failure early. The first trap is Map vs Territory, treating a neat answer as reality instead of a guess.

The second trap is Intuition vs Reason, trusting fast gut feel over slow checking. The third trap is Confirmation vs Conflict, accepting agreement as truth and avoiding tests that could disprove it.

The key move is to check all 3 traps together, because they stack and push errors to grow. The paper walks through public chats like the LaMDA case to show how emotional cues can mislead people.

The lesson is to treat outputs as plausible stories until verified, especially in high-stakes work. The practical fix is governance, not bigger models, by adding explicit checks, challenges, and slower reasoning when it matters. The goal is not zero hallucinations, but stopping small slips from turning into big decisions.

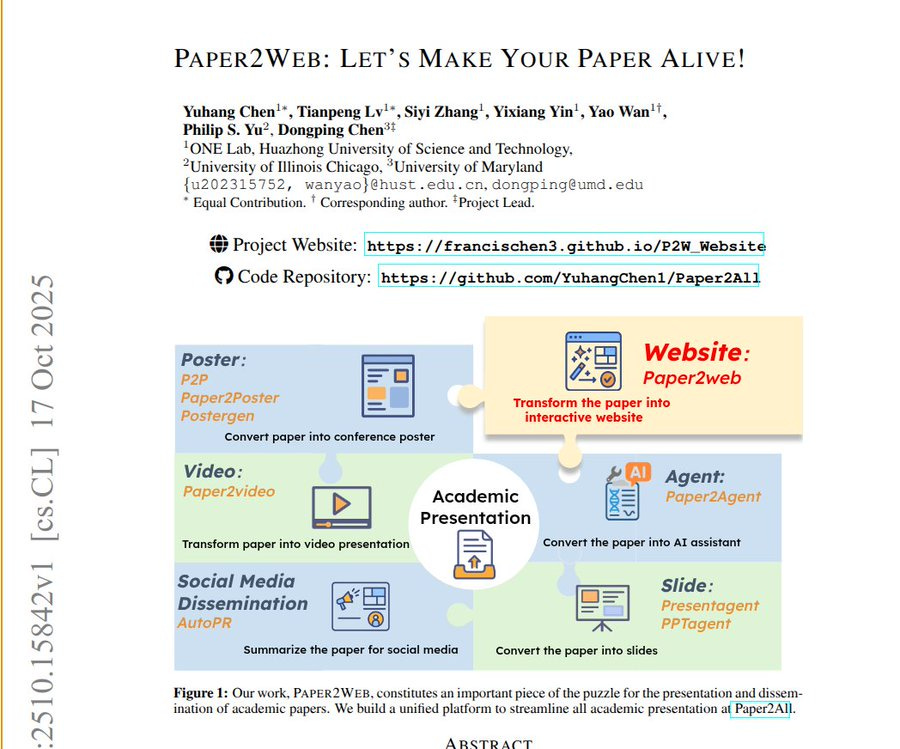

🗞️ Paper2Web: Let’s Make Your Paper Alive!

This paper turns a research PDF into an easy, interactive website using an automated agent. The big deal is that it shows an end-to-end way to auto-build good project websites from papers.

It also defines a clear benchmark for this task, builds a dataset of 10,716 paper-site pairs, and sets up simple checks for link quality, section coverage, overall usability, and a quiz that measures how much knowledge the site actually transfers. The system is called PWAgent, it splits a paper into text, figures, tables, and links, stores them as tools, then drafts a site and keeps fixing it after looking at its own screenshots.

A small heuristic gives each part a space budget so pages do not become walls of text or image dumps. The agent anchors media next to the right text, cleans navigation, and trims wording that does not help.

Against arXiv HTML, alphaXiv, and prompt-only or template-based baselines, it makes pages more interactive, clearer, and better organized. The cost stays low while quality stays close to human-made sites.

Readers get the core ideas, demos, videos, and code links in one place without manual web work. Researchers can publish a usable project page with little effort.

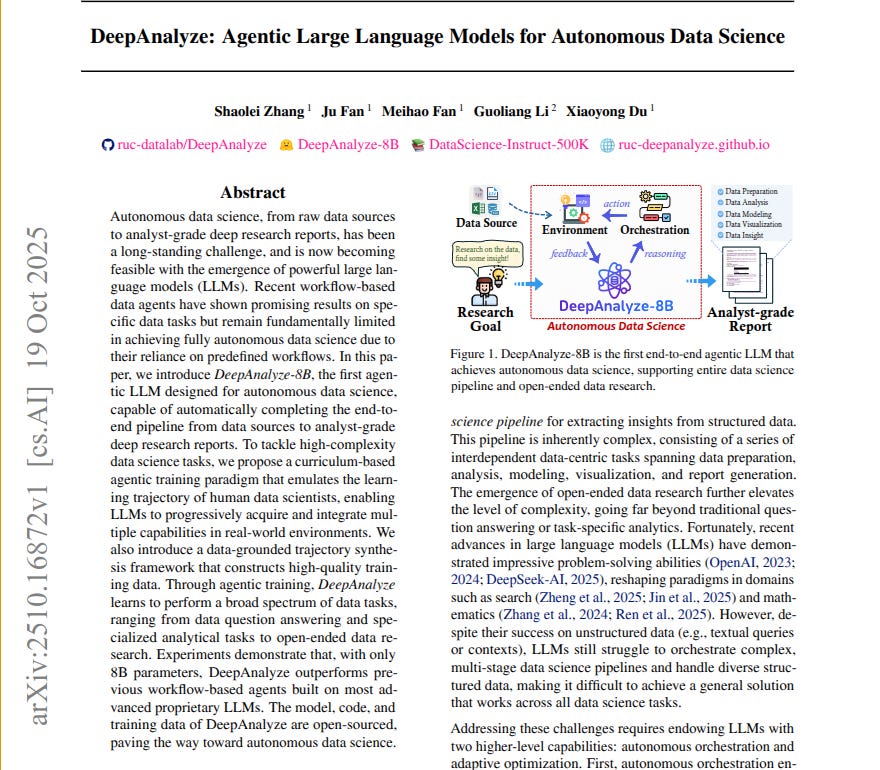

🗞️ DeepAnalyze: Agentic Large Language Models for Autonomous Data Science

This paper builds an agentic LLM that can run the whole data science workflow by itself. It is an 8B model that plans work, reads structured files, writes and runs code, checks results, and iterates.

Standard “workflow agents” break here because fixed scripts do not adapt well to long, multi step jobs. DeepAnalyze fixes that with 5 actions, Analyze, Understand, Code, Execute, and Answer, so the model can switch between thinking and doing.

Training happens in 2 stages, first single skills are strengthened, then multi skill reinforcement in live environments. They also synthesize step by step trajectories so the model sees full examples of planning, coding, and using feedback.

Rewards are hybrid, simple checks like correctness and format plus an LLM judge that scores report usefulness and clarity. The result is autonomous orchestration, choosing the next best action, and adaptive optimization, improving decisions from environment feedback. Across many benchmarks, this 8B model beats most workflow agents and can produce analyst grade research from raw structured data.

🗞️ Emergent Introspective Awareness in Large Language Models

New research from Anthropic basically hacked into Claude’s brain. Shows Claude can sometimes notice and name a concept that engineers inject into its own activations, which is functional introspection.

They first watch how the model’s neurons fire when it is talking about some specific word. Then they average those activation patterns across many normal words to create a neutral “baseline.”

Finally, they subtract that baseline from the activation pattern of the target word. The result — the concept vector — is what’s unique in the model’s brain for that word.

They can then add that vector back into the network while it’s processing something else to see if the model feels that concept appear in its thoughts. The scientists directly changed the inner signals inside Claude’s brain to make it “think” about the idea of “betrayal”, even though the word never appeared in its input or output.

i.e. the scientists figured out which neurons usually light up when Claude talks about betrayal. Then, without saying the word, they artificially turned those same neurons on — like flipping the “betrayal” switch inside its head.

Then they asked Claude, “Do you feel anything different?” Surprisingly, it replied that it felt an intrusive thought about “betrayal.”

That happened before the word “betrayal” showed up anywhere in its written output. That’s shocking because no one told it the word “betrayal.” It just noticed that its own inner pattern had changed and described it correctly.

The point here is to show that Claude isn’t just generating text — sometimes it can recognize changes in its own internal state, a bit like noticing its own thought patterns. It doesn’t mean it’s conscious, but it suggests a small, measurable kind of self-awareness in how it processes information.

Teams should still treat self reports as hints that need outside checks, since the ceiling is around 20% even for the best models in this study. Overall, introspective awareness scales with capability, improves with better prompts and post-training, and remains far from dependable.

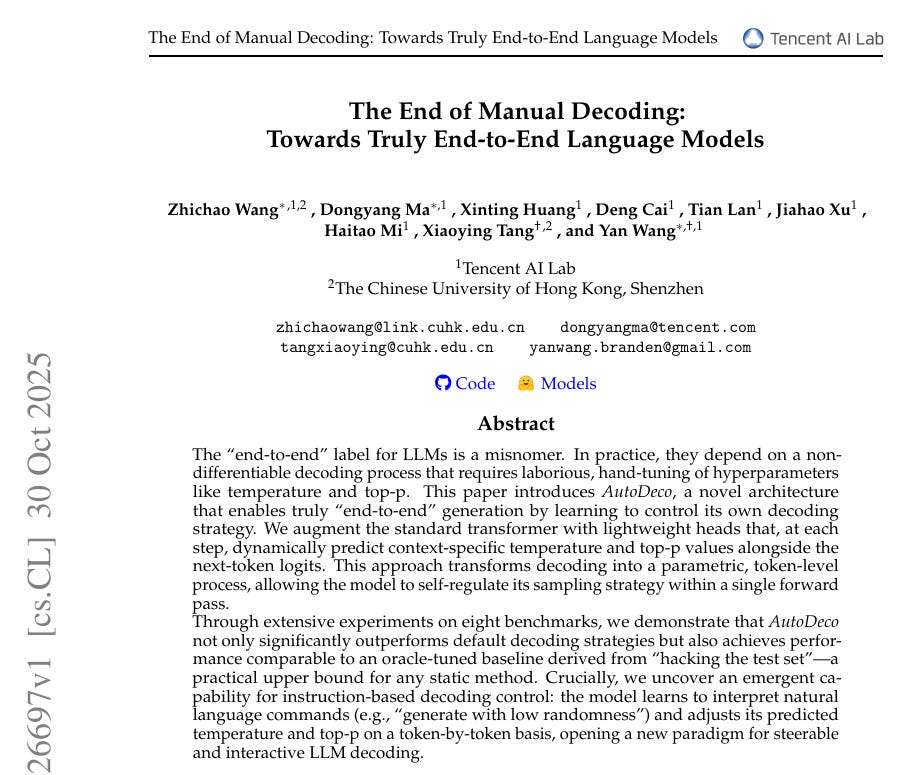

🗞️ “The End of Manual Decoding: Towards Truly End-to-End Language Models”

New Tencent paper lets LLMs choose their own temperature and top-p for each token, improving output and control. Hand tuning disappears, saving time and guesswork.

Today people set those knobs by hand, and the best settings change inside a single answer. Their method, AutoDeco, adds 2 small heads that read the hidden state and predict the next token’s temperature and top-p.

They replace the hard top-p cutoff with a smooth one so training can teach those heads directly. During generation the model applies the predicted temperature and top-p in the same pass, adding about 1-2% time.

Across math, general questions, code, and instructions, this beats greedy and default sampling and matches expert tuned static settings. It also follows plain prompts like low diversity or high certainty by shifting those values the right way.

So hand tuning goes away, the model adapts token by token, and steering becomes simple and reliable.

🗞️ DeepAgent: A General Reasoning Agent with Scalable Toolsets

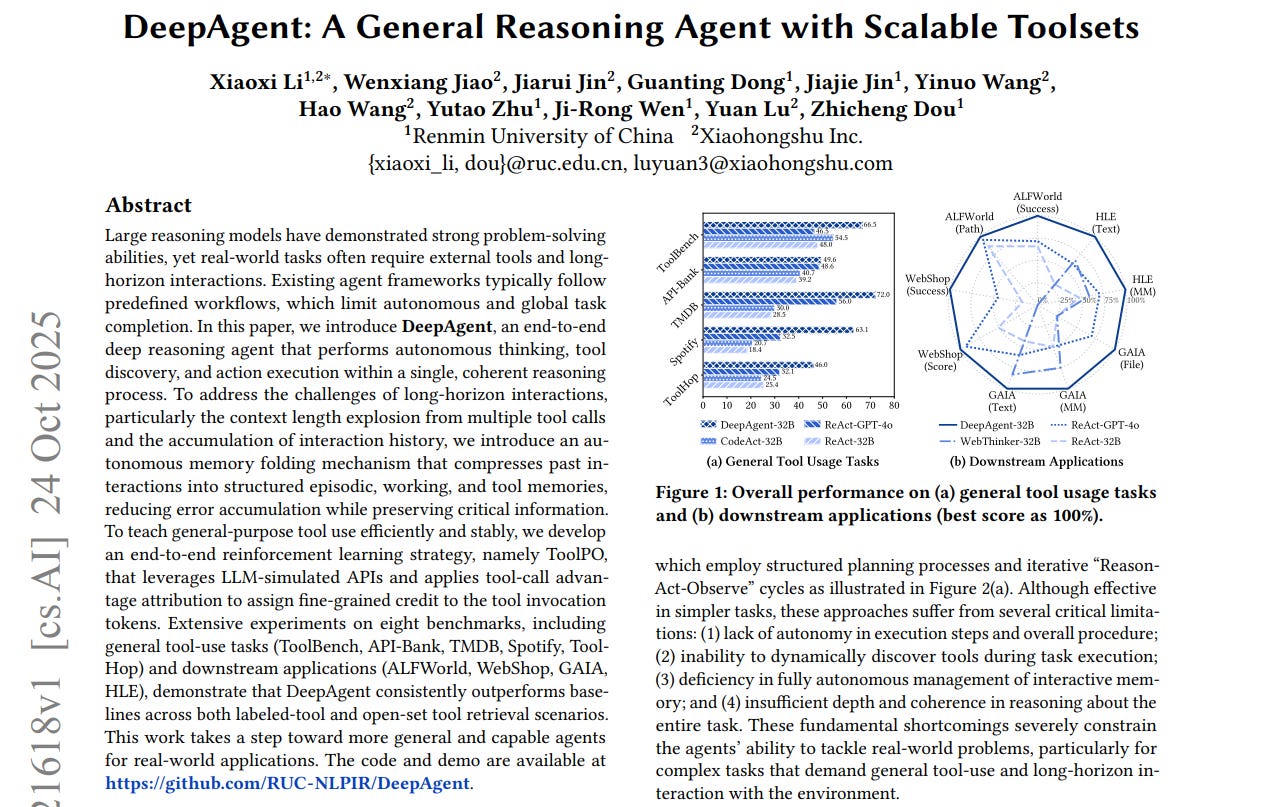

The paper introduces DeepAgent, lets a reasoning model find tools on demand, manage memory, and beat workflow agents on hard, long tasks.

It runs a single thinking loop that decides when to search for a tool. It looks up tools by meaning in a large index and tries promising ones. It is not stuck in fixed workflows or tiny tool menus.

Long tasks usually flood the context and carry mistakes forward. DeepAgent avoids this with memory folding that compresses history. The folded memory has 3 parts, episodic for milestones, working for the current subgoal, and tool for what worked.

This keeps key facts, cuts token use, and helps the agent recover. Training uses ToolPO, a reinforcement method tailored to tool use. A tool simulator stands in for real APIs so training stays stable and cheap.

Rewards score both the final answer and the correctness of each tool call. Credit is aimed at the exact tokens that form tool names and arguments, which sharpens calls.

Across many benchmarks and apps, DeepAgent beats workflow agents and handles open tool retrieval well.

That’s a wrap for today, see you all tomorrow.