Read time: 13 min

(I publish this newsletter daily. Noise-free, actionable, applied-AI developments only.)

⚡Top Papers of last week (18-Jan-2026):

🗞️ Challenges and Research Directions for LLM Inference Hardware

🗞️ GDPO: Group reward-Decoupled Normalization Policy Optimization for Multi-reward RL Optimization

🗞️ Learning Latent Action World Models In The Wild

🗞️ On the Limits of Self-Improving in LLMs and Why AGI, ASI and the Singularity Are Not Near Without Symbolic Model Synthesis

🗞️ Conditional Memory via Scalable Lookup: A New Axis of Sparsity for LLM

🗞️ The core concept of DeepSeek’s Engram is just so beautiful.

🗞️ HIGH PRECISION COMPLEX NUMBER BASED ROTARY POSITIONAL ENCODING CALCULATION ON 8-BIT COMPUTE HARDWARE

🗞️ Agent-as-a-Judge

🗞️ Ministral 3 Technical paper

🗞️ Challenges and Research Directions for LLM Inference Hardware

New Google paper says LLM inference is limited by memory and networking, and it proposes 4 hardware shifts. Inference is separated into Prefill, which processes input in parallel, and Decode, which outputs 1 token (a small text chunk) at a time while constantly reading a Key Value cache, a running memory of past text.

LLM serving speed and cost problems come from the Decode phase, where the system keeps fetching past context from memory and talking across chips, not from a lack of raw compute. The paper argues today’s data center accelerators were shaped by training needs, so they often look fast on paper but waste time during Decode because memory bandwidth, memory capacity, and interconnect latency become the real bottlenecks.
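A rough back-of-envelope sketch of why Decode ends up memory-bound. The model size, precision, bandwidth, and peak compute below are hypothetical round numbers picked for illustration, not figures from the paper. The sketch assumes batch size 1 and ignores Key Value cache reads, which would only add more memory traffic.

```python
def decode_time_bounds(params, bytes_per_param, mem_bandwidth, peak_flops):
    """Lower bounds on seconds per decoded token at batch size 1.

    Each token has to stream every weight from memory once and spends
    roughly 2 FLOPs (one multiply, one add) per weight.
    """
    memory_seconds = params * bytes_per_param / mem_bandwidth
    compute_seconds = 2 * params / peak_flops
    return memory_seconds, compute_seconds


# Hypothetical accelerator and model (assumed values, not from the paper):
# 70e9 weights at 2 bytes each, 3e12 bytes/s of memory bandwidth, 1e15 FLOP/s.
memory_s, compute_s = decode_time_bounds(70e9, 2, 3e12, 1e15)
print(f"memory-bound floor:  {memory_s * 1e3:.1f} ms per token")
print(f"compute-bound floor: {compute_s * 1e3:.2f} ms per token")
```

Under these assumed numbers the memory floor is hundreds of times larger than the compute floor, which is the mismatch the paper describes: adding more peak compute barely moves Decode latency.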

The authors review today’s hardware and show a mismatch: extra compute helps little when Decode is stuck on memory bandwidth, memory capacity, and network latency. The 4 proposed shifts are High Bandwidth Flash, processing near memory, 3D stacking, and low latency interconnects. High Bandwidth Flash stacks flash to aim for about 10X more capacity for model weights, the learned numbers that stay fixed during inference, or for slow changing context. Processing near memory and 3D stacking move compute closer to memory, so less energy and time are wasted moving data around. For fast user responses, the paper also pushes low latency interconnects, meaning fewer network hops and smarter switches, so the same LLM can run on a smaller cluster that costs less and fails less.

🗞️ GDPO: Group reward-Decoupled Normalization Policy Optimization for Multi-reward RL Optimization

This paper fixes multi reward reinforcement learning (RL) for LLMs by keeping each reward’s signal separate. It shows why mixing multiple reward scores breaks RL training, and fixes it by separating normalization.

When an LLM is trained with RL, each reply gets several reward scores, but summing them and using Group Relative Policy Optimization (GRPO) can make different score mixes look identical after normalization, a rescaling step, so the advantage, the training push, loses detail. Group reward Decoupled Normalization Policy Optimization (GDPO) fixes this by normalizing each reward inside the rollout group, a small set of sampled answers for 1 prompt, then adding them up and normalizing again over the batch, the training chunk, so the scale stays stable.
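Here is a minimal sketch of the difference, using made-up binary rewards. It assumes population standard deviation and a small epsilon for stability. The function names are illustrative and the paper’s exact formulation may differ in details.

```python
from statistics import fmean, pstdev

EPS = 1e-8  # assumed small constant to avoid dividing by zero


def zscore(values):
    """Subtract the mean and divide by the (population) standard deviation."""
    mean, std = fmean(values), pstdev(values)
    return [(v - mean) / (std + EPS) for v in values]


def grpo_advantages(group):
    """GRPO-style: sum the rewards of each rollout, then normalize in the group."""
    return zscore([sum(rewards) for rewards in group])


def gdpo_advantages(batch):
    """GDPO-style: normalize each reward in its group, sum, then normalize over the batch."""
    summed = []
    for group in batch:
        columns = [zscore(col) for col in zip(*group)]  # one column per reward
        summed.append([sum(vals) for vals in zip(*columns)])
    flat = zscore([a for group in summed for a in group])
    sizes = [len(group) for group in summed]
    starts = [sum(sizes[:i]) for i in range(len(sizes))]
    return [flat[s:s + n] for s, n in zip(starts, sizes)]


# Two prompts, 2 rollouts each, 2 binary rewards per rollout (made-up values).
group_a = [(0, 0), (1, 0)]  # best rollout satisfies 1 reward
group_b = [(0, 0), (1, 1)]  # best rollout satisfies both rewards

grpo = [grpo_advantages(group_a), grpo_advantages(group_b)]
gdpo = gdpo_advantages([group_a, group_b])
print("GRPO:", grpo)
print("GDPO:", gdpo)
```

With summed rewards, both groups collapse to roughly the same advantages, so the rollout that satisfied both rewards gets no stronger push than the one that satisfied 1. With decoupled normalization, the second group keeps a larger advantage.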

The authors tested GDPO against GRPO on 3 setups: tool calling with strict output structure, math reasoning with a length limit, and coding where solutions should pass tests and avoid bugs. On math, GDPO got up to 6.3% higher accuracy on AIME, a hard math benchmark, while keeping more replies under the length limit. This matters because real apps want several behaviors at once, and clearer reward signals make multi goal training less fragile.

🗞️ Learning Latent Action World Models In The Wild

This paper learns hidden action codes from messy internet videos, so world models can be trained without action labels. They teach a model to watch 2 frames and infer the action that caused the change.

A world model is a video predictor that tries to guess what happens next, but it normally needs action labels that most online clips do not have. The authors train an inverse dynamics model that looks at 2 nearby frames and outputs a latent action, meaning a small hidden code that explains the change, and they train the predictor to use that code to forecast the next frame.

They test 3 ways to keep the latent action from just copying the future frame, by making it sparse, adding noise, or forcing it into a small set of discrete codes. They find the constrained continuous versions, the sparse and noisy ones, capture harder events like a person entering a room and even transfer that motion across unrelated videos, while discrete code sets miss details and the learned actions become tied to where things move in the camera view. They also train a small controller, a translator from robot or navigation commands into these action codes, which lets the same model do short horizon planning, meaning picking a few actions to reach a goal, close to models trained with action labels.
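The 3 constraints can be pictured as 3 different bottlenecks on the latent action vector. The sketch below is only an illustration. Top-k sparsification, Gaussian noise, and nearest-codebook rounding are stand-ins chosen here for simplicity. The function names, `k`, `sigma`, and the codebook are hypothetical rather than the paper’s actual training setup.

```python
import random


def sparse_latent(z, k):
    """Keep the k largest-magnitude entries and zero out the rest."""
    keep = set(sorted(range(len(z)), key=lambda i: abs(z[i]), reverse=True)[:k])
    return [v if i in keep else 0.0 for i, v in enumerate(z)]


def noisy_latent(z, sigma, rng):
    """Add Gaussian noise so the latent cannot carry fine per-pixel detail."""
    return [v + rng.gauss(0.0, sigma) for v in z]


def discrete_latent(z, codebook):
    """Snap the latent to the nearest entry of a small codebook."""
    return min(codebook, key=lambda c: sum((a - b) ** 2 for a, b in zip(z, c)))


rng = random.Random(0)
z = [0.1, -2.0, 0.5, 3.0]  # made-up latent action
print(sparse_latent(z, k=2))
print(noisy_latent(z, sigma=0.1, rng=rng))
print(discrete_latent([0.9, 0.1], [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]))
```

The first 2 bottlenecks keep the latent continuous, while the third can only ever output 1 of a fixed handful of codes, which gives some intuition for why the discrete version misses details.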

🗞️ On the Limits of Self-Improving in LLMs and Why AGI, ASI and the Singularity Are Not Near Without Symbolic Model Synthesis

This paper proves LLM self training on mostly self generated data makes models lose diversity and drift from truth. LLMs cannot bootstrap forever on their own text, they need fresh reality checks or they collapse.

The problem is that many people expect an AI to learn from its own output and keep improving without limit. The authors describe training as mixing real data with model made data, and then letting the real part shrink toward 0.

They then prove what the loop does when each round is trained on only a finite batch of samples. They show 2 things happen: outputs get less varied over time, and small errors pile up until the model’s beliefs drift.
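A toy simulation of the diversity loss, not the paper’s proof. It assumes the extreme case where the real-data share is already 0 after the first round, and it stands in for “training” with simply refitting token frequencies to a finite batch of the model’s own samples. The function name and sizes are made up.

```python
import random
from collections import Counter


def support_over_rounds(vocab_size, batch_size, rounds, seed=0):
    """Track how many distinct tokens the 'model' can still produce each round."""
    rng = random.Random(seed)
    tokens = list(range(vocab_size))
    weights = [1] * vocab_size  # round 0: the "real" distribution, uniform here
    support = []
    for _ in range(rounds):
        # Draw a finite batch from the current model.
        batch = rng.choices(tokens, weights=weights, k=batch_size)
        counts = Counter(batch)
        # "Retrain" by refitting token frequencies to the model's own samples.
        weights = [counts[t] for t in tokens]
        support.append(sum(1 for w in weights if w > 0))
    return support


print(support_over_rounds(vocab_size=50, batch_size=30, rounds=40))
```

Once a token fails to show up in a batch, its weight is 0 and it can never come back, so the number of distinct outputs can only stay flat or shrink from round to round.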

They also say reward based training can fail if the checker is wrong, so they suggest adding small symbolic programs that can test rules. That is why they claim AGI, ASI, and the AI singularity are not close with today’s LLMs unless learning stays grounded in reality.

🗞️ Conditional Memory via Scalable Lookup: A New Axis of Sparsity for LLM - The famous paper by DeepSeek

DeepSeek’s new paper just uncovered a new U-shaped scaling law.

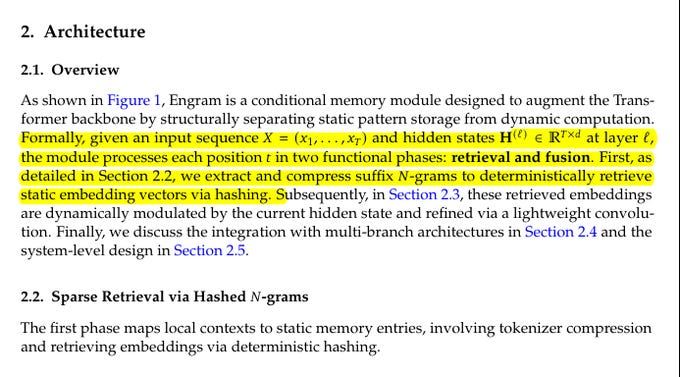

Shows that N-grams still matter. Instead of dropping them in favor of neural networks, they hybridize the 2. This clears up the dimensionality problem and removes a big source of inefficiency in modern LLMs.

Uncovers a U-shaped scaling law that optimizes the trade-off between neural computation (MoE) and static memory (Engram). Right now, even “smart” LLMs waste a bunch of their early layers re-building common phrases and names from scratch, because they do not have a simple built-in “lookup table” feature.

Mixture-of-Experts already saves compute by only running a few expert blocks per token, but it still forces the model to spend compute to recall static stuff like named entities and formula-style text. Engram is basically a giant memory table that gets queried using the last few tokens, so when the model sees a familiar short pattern it can fetch a stored vector quickly instead of rebuilding it through many layers.

They implement that query using hashed 2-gram and 3-gram patterns, which means the model always does the same small amount of lookup work per token even if the table is huge. The big benefit is that if early layers stop burning time on “static reconstruction,” the rest of the network has more depth left for real reasoning, and that is why reasoning scores go up even though this sounds like “just memory.”
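A minimal sketch of a hashed N-gram lookup, to show why the per-token work does not depend on table size. The class name, the polynomial hash, the random table contents, and the tiny sizes are all assumptions for illustration, and Engram’s real hashing and table layout may differ.

```python
import random


class HashedNgramMemory:
    """Toy memory table addressed by hashing the last 2 and 3 token IDs."""

    def __init__(self, num_slots, dim, orders=(2, 3), seed=0):
        rng = random.Random(seed)
        self.num_slots = num_slots
        self.orders = orders
        # Stand-in for learned vectors: one random row per slot.
        self.table = [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(num_slots)]

    def slot(self, ngram):
        """Deterministic polynomial hash of the token IDs into a slot index."""
        h = 0
        for tok in ngram:
            h = (h * 1000003 + tok + 1) % self.num_slots
        return h

    def lookup(self, token_ids, pos):
        """Fetch one vector per N-gram order for the suffix ending at pos."""
        vectors = []
        for n in self.orders:
            if pos + 1 >= n:  # enough history for this order
                ngram = tuple(token_ids[pos + 1 - n: pos + 1])
                vectors.append(self.table[self.slot(ngram)])
        return vectors


memory = HashedNgramMemory(num_slots=1024, dim=4)
tokens = [5, 7, 9, 5, 7, 9]  # made-up token IDs with a repeated phrase
print(memory.lookup(tokens, 2) == memory.lookup(tokens, 5))
```

Each position triggers at most 1 fetch per N-gram order, whether the table has 1,024 slots or billions, and the same short suffix always lands on the same rows.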

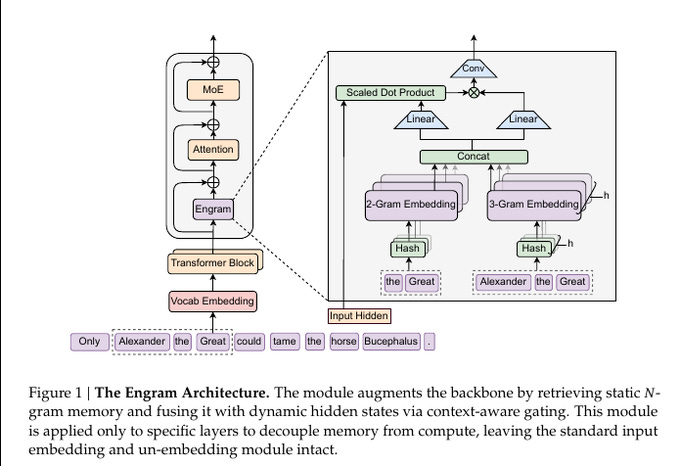

The long-context benefit is also solid, because offloading local phrase glue to memory frees attention to focus on far-away relationships, and Multi-Query Needle-in-a-Haystack goes from 84.2 to 97.0 in their matched comparison. The system-level big deal is cost and scaling, because they show you can offload a 100B memory table to CPU memory and the throughput drop stays under 3%, so you can add a lot more “stored stuff” without needing to fit it all on GPU memory.

🧩 The core problem

The paper splits language modeling into 2 jobs, deep reasoning that needs real computation, and local stereotyped patterns that are basically fast recall. Transformers do not have a native lookup block, so they burn early attention and feed-forward layers to rebuild static stuff like multi-token entities and formulaic phrases.

That rebuild is expensive mainly because it eats sequential depth, meaning the model spends layers on trivia-like reconstruction before it even starts the harder reasoning steps. Classical N-gram models already handle a lot of this local dependency work with cheap table access, so forcing a Transformer to relearn it through compute is a design mismatch. Engram is their way of turning “lookup” into a first-class primitive that lives next to MoE, instead of being faked by extra neural layers.

Engram adds a huge hashed N-gram memory table that gets queried with a fixed amount of work per token, so early layers stop wasting compute rebuilding names and stock phrases.

They show the best results when about 20% to 25% of the sparse budget moves from experts into this memory, while total compute stays matched. Engram hits 97.0 on Multi-Query Needle-in-a-Haystack, while the matched MoE baseline hits 84.2.

🧠 The idea, conditional memory next to MoE

Engram treats memory as a second sparsity knob, where MoE gives conditional computation and Engram gives conditional lookup. At each token position, the module forms a short suffix context from nearby tokens, fetches a small set of memory vectors, and mixes the result into the current hidden state.

The per-token lookup work stays fixed because the model always grabs the same small number of slots, even if the table grows to billions of parameters. They only insert Engram into selected Transformer blocks, which keeps the normal input embedding and output head unchanged and avoids adding latency everywhere. The goal is to let memory handle short-range “already seen this” patterns so the backbone can spend more of its depth on actual reasoning.

🗞️ The core concept of DeepSeek’s Engram is just so beautiful.

A typical AI model treats “knowing” like a workout. Even for basic facts, it flexes a bunch of compute, like someone who has to do a complicated mental trick just to spell their own name each time. The reason is that standard models do not have a native knowledge lookup primitive. So they spend expensive conditional computation to imitate memory by recalculating answers over and over.

💡Engram changes the deal by adding conditional memory, basically an instant-access cheat sheet. It uses N-gram embeddings, meaning digital representations of common phrases, which lets the model do an O(1) lookup. That is just 1-step retrieval, instead of rebuilding the fact through layers of neural logic.

This is also a re-balance of the whole system. It solves the Sparsity Allocation problem, which is just picking the right split between neurons that do thinking and storage that does remembering.

They found a U-shaped scaling law. When the model is no longer forced to waste energy on easy stuff, static reconstruction drops, meaning it stops doing the repetitive busywork of rebuilding simple facts.

Early layers get breathing room, effective depth goes up, and deeper layers can finally concentrate on hard reasoning. That is why general reasoning, code, and math domains improve so much, because the model is not jammed up with alphabet-level repetition.

For accelerating AI development, infrastructure-aware efficiency is the big win. Deterministic addressing means the system knows where information lives using the text alone, so it can do runtime prefetching.

That makes central processing unit (CPU) random access memory (RAM) usable as the cheap, abundant backing store, instead of consuming limited graphics processing unit (GPU) memory. Local dependencies are handled by lookup, leaving attention free for global context, the big picture.
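A small sketch of what deterministic addressing buys you. Because slot indices depend only on token IDs, they can be computed before the forward pass, and only the needed rows have to be copied from the big table. The hash, the dictionary standing in for CPU RAM, and the function names are hypothetical.

```python
def ngram_slots(token_ids, num_slots, orders=(2, 3)):
    """Compute every memory slot the sequence will touch, from token IDs alone."""
    slots = []
    for pos in range(len(token_ids)):
        for n in orders:
            if pos + 1 >= n:
                h = 0
                for tok in token_ids[pos + 1 - n: pos + 1]:
                    h = (h * 1000003 + tok + 1) % num_slots
                slots.append(h)
    return slots


def prefetch(cpu_table, token_ids, num_slots):
    """Copy only the rows this sequence needs into a small fast-memory cache."""
    needed = sorted(set(ngram_slots(token_ids, num_slots)))
    return {slot: cpu_table[slot] for slot in needed}


cpu_table = {i: [float(i)] for i in range(64)}  # stand-in for a table in CPU RAM
cache = prefetch(cpu_table, [1, 2, 3, 1, 2, 3], num_slots=64)
print(len(cache), "rows prefetched out of", len(cpu_table))
```

No hidden state is needed to know which rows to fetch, which is what makes it possible to overlap the transfer with the rest of the computation.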

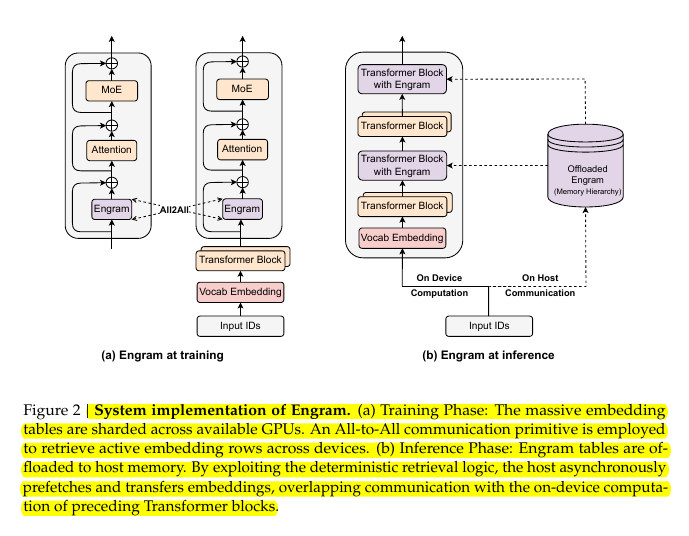

🗞️ HIGH PRECISION COMPLEX NUMBER BASED ROTARY POSITIONAL ENCODING CALCULATION ON 8-BIT COMPUTE HARDWARE

This is a major new patent filing from Tesla. It describes a way to get 32-bit-like accuracy for a crucial transformer math step while still using mostly 8-bit hardware.

Basically this method is “augmenting an effective number of bits for a hardware pipeline.” This can be huge for edge systems like autonomous vehicles and robots where heat and power budgets are tight.

Rotary Positional Embedding (RoPE) is a way transformers tag each token with its position by rotating numbers by an angle. An 8-bit chip normally does fast multiply-add math but struggles with angle math like sine, cosine, and exponentials without piling up rounding error.

RoPE depends on those angles, so if the angles drift, attention loses track of positions as context gets longer. The patent’s trick is mixed precision: it keeps the bulk pipeline in 8-bit where it is cheap and efficient, then hands only the sensitive parts to a smaller higher-precision block.

It also changes how numbers are carried through the cheap part, for example moving log(theta) instead of theta, because logs are easier to represent without losing important detail. When it is time to apply the rotation, a wider arithmetic logic unit (ALU) reconstructs theta accurately enough and computes the sine and cosine needed for the rotation.

Their method pushes easy multiplies through an 8-bit multiplier-accumulator (MAC) and reserves harder steps for a wider arithmetic logic unit (ALU). That reduces power and bandwidth cost while keeping positional rotations accurate enough for inference.

The filing is about a specific RoPE kernel, not a general trick that makes every 32-bit model run in int8. RoPE is a positional encoding for transformer models that rotates pairs of channels to represent position.

The rotation is equivalent to complex-number multiplication, so it depends on accurate angles and sine and cosine. On 8-bit hardware, rounding error in angle math can grow as sequences get longer and layers get deeper.
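To make the complex-number view concrete, here is a small sketch that rotates 1 channel pair 2 ways. The function names and the sample values are made up. This is plain full-precision Python, not the patent’s hardware pipeline.

```python
import cmath
import math


def rope_complex(x0, x1, position, theta):
    """Rotate the channel pair (x0, x1) by multiplying with e^(i * position * theta)."""
    z = complex(x0, x1) * cmath.exp(1j * position * theta)
    return z.real, z.imag


def rope_trig(x0, x1, position, theta):
    """The same rotation written with explicit sine and cosine."""
    angle = position * theta
    c, s = math.cos(angle), math.sin(angle)
    return x0 * c - x1 * s, x0 * s + x1 * c


a = rope_complex(1.0, 2.0, position=7, theta=0.01)
b = rope_trig(1.0, 2.0, position=7, theta=0.01)
print(a, b)
```

Both forms give the same pair up to floating-point error, and both need an accurate value of `position * theta`, which is exactly the quantity that is fragile at low bit-width.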

The patent transports angles as log(theta) through the low-precision path because log values vary less in size. The MAC multiplies input-tensor elements with stored pieces of log(theta) at the low bit-width.
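A quick numerical sketch of why logs travel better through 8 bits. It assumes the standard RoPE frequency schedule `theta_i = base^(-2i/dim)` with `base = 10000` and a plain uniform 8-bit quantizer. Both are assumptions for illustration, since the number format in the filing is not described here.

```python
import math


def quantize_uint8(value, lo, hi):
    """Map a value in [lo, hi] to an 8-bit code in 0..255."""
    return round((value - lo) / (hi - lo) * 255)


def dequantize_uint8(code, lo, hi):
    """Map an 8-bit code back to the nearest representable value in [lo, hi]."""
    return lo + code / 255 * (hi - lo)


def max_relative_errors(dim=128, base=10000.0):
    """Worst-case relative error when sending theta vs log(theta) through 8 bits."""
    thetas = [base ** (-2 * i / dim) for i in range(dim // 2)]
    logs = [math.log(t) for t in thetas]
    lo_t, hi_t = min(thetas), max(thetas)
    lo_l, hi_l = min(logs), max(logs)
    direct_err = log_err = 0.0
    for t, lg in zip(thetas, logs):
        t_direct = dequantize_uint8(quantize_uint8(t, lo_t, hi_t), lo_t, hi_t)
        # Reconstruct theta in higher precision from the 8-bit log code.
        t_log = math.exp(dequantize_uint8(quantize_uint8(lg, lo_l, hi_l), lo_l, hi_l))
        direct_err = max(direct_err, abs(t_direct - t) / t)
        log_err = max(log_err, abs(t_log - t) / t)
    return direct_err, log_err


direct_err, log_err = max_relative_errors()
print(f"theta sent directly: max relative error {direct_err:.3f}")
print(f"log(theta) sent:     max relative error {log_err:.3f}")
```

The thetas span about 4 orders of magnitude, so a uniform 8-bit grid crushes the small ones, while their logs are evenly spaced and fit the grid with only a small relative error after exponentiation.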

Those log(theta) pieces can come from storage with precomputed values, instead of recomputing logs on the fly. To feed the wider block efficiently, the MAC can left-shift an 8-bit value by a power of 2 and concatenate 2 values into a 16-bit word. This is not a magic switch that turns an 8-bit chip into a full 32-bit chip, but it is a practical way to spend precision only where it buys stability.
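The shift-and-concatenate step is simple enough to show directly. This is a software sketch of the bit manipulation only, with hypothetical function names, not a description of the actual circuit.

```python
def pack_u8_pair(high, low):
    """Left-shift one 8-bit value by 8 bits (multiply by 2**8) and append the other."""
    if not (0 <= high <= 0xFF and 0 <= low <= 0xFF):
        raise ValueError("both inputs must fit in 8 bits")
    return (high << 8) | low


def unpack_u16(word):
    """Split a 16-bit word back into its high and low bytes."""
    return (word >> 8) & 0xFF, word & 0xFF


word = pack_u8_pair(0x12, 0x34)
print(hex(word), unpack_u16(word))
```

The wider block then sees a single 16-bit operand, even though every value upstream moved through 8-bit lanes.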

🗞️ Agent-as-a-Judge

This survey explains why LLM-as-a-Judge fails on hard tasks, and how Agent-as-a-Judge makes judging reliable.

A plain LLM judge, meaning a text-generating AI model that scores answers in 1 pass, can be biased and easily fooled.

It also cannot check work against reality, so it may rate a wrong answer as right just because it sounds good.

Agent-as-a-Judge fixes this by turning judging into a small workflow where the judge plans steps, calls tools like search or runs code, and stores notes.

The authors group these ideas into 3 stages of autonomy and 5 building blocks, then map where each method fits and where it is used.

They show agent judges are used for math and code, fact-checking, conversation, images, and high-stakes fields like medicine, law, finance, and education, because step checks and multi-agent reviews give more grounded scores.

The paper also flags costs and risks, since multi-step judging takes more compute, adds delay, and may store sensitive data, but it offers a clean way to think about better evaluation.

🗞️ Ministral 3

The Ministral 3 technical paper has been released.

The main trick was Cascade Distillation: they start from a strong 24B model, prune it into a smaller shape, train the smaller model to copy the bigger model’s output probabilities, then repeat until they reach 14B, 8B, and 3B.
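“Copying the bigger model’s output probabilities” usually means minimizing a divergence between the 2 next-token distributions. The sketch below uses forward KL divergence on 1 token position as an assumed choice. The exact loss, temperature, and any mixing with a regular language-modeling loss in the paper may differ, and the logits are made up.

```python
import math


def softmax(logits):
    """Turn raw scores into probabilities, shifting by the max for stability."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits):
    """KL(teacher || student) over the vocabulary for one token position."""
    p = softmax(teacher_logits)
    q = softmax(student_logits)
    return sum(pi * (math.log(pi) - math.log(qi)) for pi, qi in zip(p, q) if pi > 0)


teacher = [2.0, 0.5, -1.0]  # made-up logits over a 3-token vocabulary
student = [1.0, 1.0, 0.0]
print(distillation_loss(teacher, student))
print(distillation_loss(teacher, teacher))
```

The loss is 0 only when the student matches the teacher’s full distribution, so the student learns from how the teacher ranks every token and not just from its top pick.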

To make long context work, they train in 2 stages, first short context, then extend to 262,144 tokens using YaRN plus position-dependent attention temperature scaling.

For the chat and reasoning versions, they stack post-training methods like supervised fine-tuning, Online Direct Preference Optimization, and for reasoning they add Group Relative Policy Optimization reinforcement learning on chain-of-thought style data.

That’s a wrap for today, see you all tomorrow.