Read time: 10 min


(I publish this newsletter daily. Noise-free, actionable, applied-AI developments only.)

⚡Top Papers of last week (Ending 26-Oct-2025):

🗞️ A Definition of AGI

🗞️ Train for Truth, Keep the Skills: Binary Retrieval-Augmented Reward Mitigates Hallucinations

🗞️ QeRL: Beyond Efficiency -- Quantization-enhanced Reinforcement Learning for LLMs

🗞️ Attention Is All You Need for KV Cache in Diffusion LLMs

🗞️ Pico-Banana-400K: A Large-Scale Dataset for Text-Guided Image Editing

🗞️ The Free Transformer

🗞️ Every Step Evolves: Scaling Reinforcement Learning for Trillion-Scale Thinking Model

🗞️ Scaling Laws Meet Model Architecture: Toward Inference-Efficient LLMs

🗞️ Text or Pixels? It Takes Half: On the Token Efficiency of Visual Text Inputs in Multimodal LLMs

🗞️ Fluidity Index: Next-Generation Super-intelligence Benchmarks

🗞️ A Definition of AGI

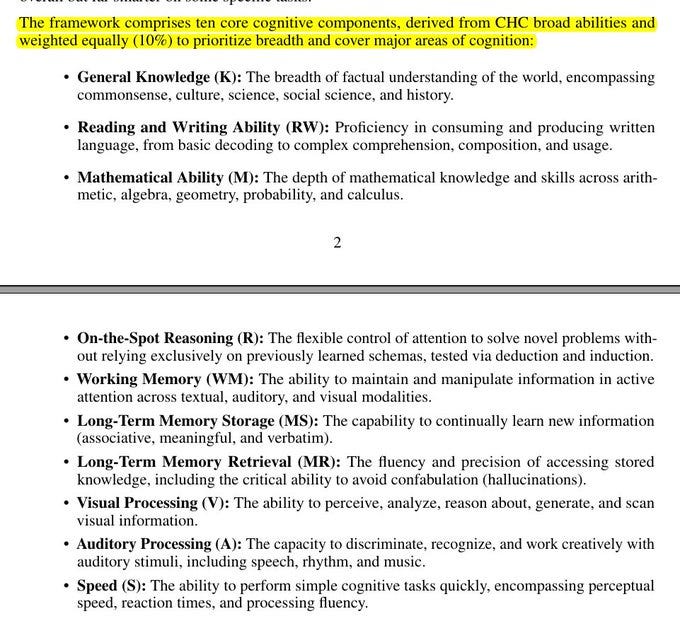

The paper proposes a concrete test for AGI by checking if an AI matches a well-educated adult across 10 abilities. Reading it, the obvious conclusion is that we are still far from AGI-level intelligence.

They report an “AGI Score” and show GPT-4 at 27% and GPT-5 at 58%, with long term memory at 0%. The goal is to stop the moving target problem and make progress measurable and auditable.

They ground the checklist in the Cattell Horn Carroll model, a long standing map of human cognitive abilities. The 10 areas cover knowledge, reading and writing, math, on the spot reasoning, working memory, long term memory storage, long term memory retrieval, visual processing, auditory processing, and speed.

Each area is tested with focused subtests. Instead of relying only on big end tasks, they adapt human-style subtests that isolate narrow skills such as deduction, induction, theory of mind, planning, visual rotation, phonetic coding, and reaction time.

Modern models look very strong on knowledge, language, and math, and weak on vision, audio, speed, and storing and recalling new facts without errors. They show that today’s systems often fake memory by stuffing huge context windows and fake precise recall by leaning on retrieval from external tools, which hides real gaps in storing new facts and recalling them without hallucinations.

They emphasize that both GPT-4 and GPT-5 fail to form lasting memories across sessions and still mix in wrong facts when retrieving, which limits dependable learning and personalization over days or weeks. The scope is cognitive ability, not motor control or economic output, so a high score does not guarantee business value.

They warn about training contamination and push for small distribution shifts and human checks when grading. In summary, progress will stall without durable long term memory and precise retrieval, because the weakest part limits the system.
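To make the "weakest part limits the system" point concrete, here is a minimal sketch of how ten area scores could roll up into a single percentage. It assumes every area carries equal weight, and the per-area values are made-up placeholders, not the paper's reported breakdown for any model.

```python
# The ten areas named in the summary above (identifier names are my own).
AREAS = [
    "knowledge", "reading_writing", "math", "reasoning", "working_memory",
    "memory_storage", "memory_retrieval", "visual", "auditory", "speed",
]

def agi_score(area_scores: dict[str, float]) -> float:
    """Average ten area scores in [0, 1] into a 0-100 total (equal weights assumed)."""
    missing = [a for a in AREAS if a not in area_scores]
    if missing:
        raise ValueError(f"missing areas: {missing}")
    return 100.0 * sum(area_scores[a] for a in AREAS) / len(AREAS)

def weakest_area(area_scores: dict[str, float]) -> str:
    """Return the area with the lowest score, i.e. the current bottleneck."""
    return min(AREAS, key=lambda a: area_scores[a])

# Hypothetical profile: middling everywhere, no long-term memory storage at all.
example = {a: 0.5 for a in AREAS}
example["memory_storage"] = 0.0

print(agi_score(example), weakest_area(example))
```

A profile like this shows why a single total can hide a hard zero in one area, which is why the per-area view matters as much as the headline number.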

The ten core cognitive components of our AGI definition.


🗞️ Train for Truth, Keep the Skills: Binary Retrieval-Augmented Reward Mitigates Hallucinations

The paper shows a simple reinforcement learning reward that cuts hallucinations without hurting core skills. It reports 39% fewer hallucinations in open-ended writing, plus fewer wrong answers in question answering.

Models often sound confident while being wrong. Past fixes raised truth but also made answers vague or less helpful.

This method checks each answer against retrieved evidence. If any claim conflicts, the reward is 0, otherwise it is 1.

No partial credit means shaky claims are not worth it. The model learns to keep supported facts and drop risky ones.

For short questions, it says "I do not know" when unsure. A small penalty keeps behavior close to the base model, so math, code, and following instructions stay fine.

Binary signals are harder to game than fuzzy scores. Outputs get shorter and clearer while keeping the right details.
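The all-or-nothing reward described above fits in a few lines. This is a minimal sketch, not the authors' implementation: it assumes a separate verifier has already compared each claim against retrieved evidence, and the verdict labels are hypothetical names chosen for illustration.

```python
from typing import Iterable

def binary_reward(claim_verdicts: Iterable[str]) -> int:
    """Return 0 if any claim conflicts with the evidence, otherwise 1.

    Each verdict is assumed to be one of "supported", "contradicted",
    or "unverifiable" (hypothetical labels from an upstream verifier).
    """
    return 0 if any(v == "contradicted" for v in claim_verdicts) else 1

# One conflicting claim zeroes the whole answer: no partial credit.
print(binary_reward(["supported", "supported", "contradicted"]))
# An answer with no conflicting claims, including an abstention, keeps the reward.
print(binary_reward([]))
```

Because an answer with no claims cannot conflict with anything, abstaining is never punished by this signal, which matches the "I do not know" behavior on short questions.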

🗞️ QeRL: Beyond Efficiency -- Quantization-enhanced Reinforcement Learning for LLMs

QeRL (Quantization-enhanced Reinforcement Learning) trains LLMs with RL using 4-bit weights and tuned noise, boosting speed and accuracy.

It reports up to 1.5x faster rollouts, and RL on a 32B model with a single H100 80GB GPU. Reasoning RL is slow because rollouts are long and memory must hold both the policy and the reference model.

LoRA cuts trained parameters, but rollout generation stays slow. QLoRA uses NF4 tables, which slow generation.

QeRL uses NVFP4 weights with Marlin, and keeps LoRA for gradients. It reuses one 4-bit policy for rollouts and logit scoring, avoiding duplicates.

Quantization noise raises token entropy, so the model explores more. Adaptive Quantization Noise adds small channel wise noise early, then decays it.

The noise merges into RMSNorm scaling, adding 0 parameters and leaving kernels intact. On math tasks, rewards rise faster and accuracy matches or beats 16-bit LoRA and QLoRA. Bottom line, faster RL, lower memory, and bigger models on single GPUs.
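To illustrate the "add noise early, then decay it" idea behind Adaptive Quantization Noise, here is a rough sketch. The exponential schedule, the start and end values, and the additive perturbation of the RMSNorm scale vector are all assumptions made for illustration; the summary above does not specify them.

```python
import random

def noise_sigma(step: int, total_steps: int,
                sigma_start: float = 1e-2, sigma_end: float = 5e-4) -> float:
    """Exponentially decay the noise std from sigma_start to sigma_end (assumed schedule)."""
    if total_steps <= 1:
        return sigma_end
    frac = min(max(step / (total_steps - 1), 0.0), 1.0)
    return sigma_start * (sigma_end / sigma_start) ** frac

def noisy_rmsnorm_scale(scale: list[float], sigma: float,
                        rng: random.Random) -> list[float]:
    """Perturb each channel of an existing RMSNorm scale vector with Gaussian noise.

    The noise is folded into a vector the model already has, so no new
    parameters are created.
    """
    return [w + rng.gauss(0.0, sigma) for w in scale]

rng = random.Random(0)
scale = [1.0] * 4  # hypothetical 4-channel RMSNorm scale
for step in (0, 50, 99):
    sigma = noise_sigma(step, total_steps=100)
    print(step, sigma, noisy_rmsnorm_scale(scale, sigma, rng))
```

Folding the perturbation into the normalization scale is what lets the quantized matmul kernels stay untouched.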

🗞️ Attention Is All You Need for KV Cache in Diffusion LLMs

This paper speeds up diffusion LLM decoding by updating the cached keys and values (the KV cache) only when and where needed. Reported gains reach up to 45.1x in the longest cases.

Most existing decoders recompute queries, keys, and values for every token and layer at every step, which wastes compute. Shallow layers settle quickly while deeper layers keep shifting, so full recomputation is unnecessary.

Elastic-Cache watches the most attended token and uses its attention drift as a simple test to decide if a refresh is needed. When drift is high, it recomputes starting at a deeper layer and leaves shallow layers reused.

It also uses a sliding window so only nearby masked tokens are processed, and it block caches faraway MASK tokens. These choices remove redundant work and cut latency while keeping answer quality stable.

Across LLaDA, LLaDA-1.5, and LLaDA-V, throughput rises on long sequences with accuracy roughly unchanged. Unlike fixed refresh schedules, it adapts per input, step, and layer, which explains the speedups.
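The drift test that Elastic-Cache uses to decide on a refresh can be sketched as follows. This is an illustrative reading, not the paper's code: it assumes drift is measured as cosine similarity between the most-attended token's attention row at the previous and current step, and the threshold value is a placeholder.

```python
import math
from typing import Optional

def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)

def first_layer_to_refresh(prev_attn: list[list[float]],
                           curr_attn: list[list[float]],
                           threshold: float = 0.9) -> Optional[int]:
    """Return the shallowest layer whose attention has drifted, or None.

    prev_attn[l] and curr_attn[l] are the attention rows of the
    most-attended token at layer l. Layers below the returned index keep
    their cached keys and values; layers from that index on are recomputed.
    """
    for layer, (prev, curr) in enumerate(zip(prev_attn, curr_attn)):
        if cosine_similarity(prev, curr) < threshold:
            return layer
    return None

prev = [[1.0, 0.0], [1.0, 0.0], [1.0, 0.0]]
curr = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0]]  # only the deepest layer moved
print(first_layer_to_refresh(prev, curr))
```

Returning a layer index rather than a yes/no flag is what makes the refresh partial: shallow layers that have settled are reused as-is.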

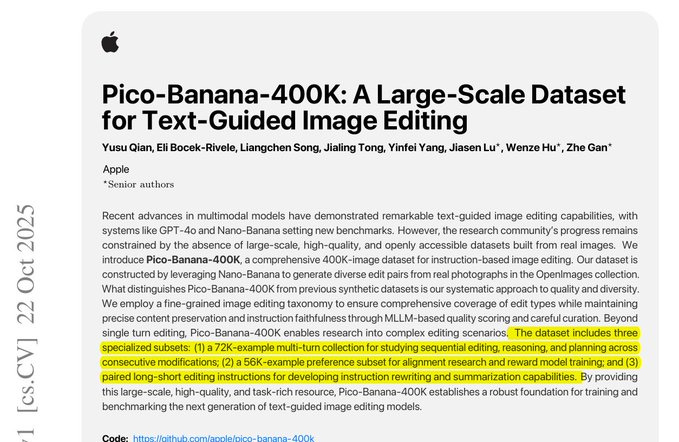

🗞️ Pico-Banana-400K: A Large-Scale Dataset for Text-Guided Image Editing

Apple released Pico-Banana-400K, a large (~400K examples) text-guided image editing dataset built from real OpenImages photos, with 35 edit types, automated Gemini-judged quality checks, and open sharing for research.

Each edit is produced by Nano-Banana, then scored by Gemini-2.5-Pro with weighted checks (40% instruction compliance, 25% seamlessness, 20% preservation balance, 15% technical quality), passing only if the score is about 0.7 or higher. The release includes 258K single-turn SFT pairs, 56K success-vs-failure preference pairs for methods like Direct Preference Optimization, and 72K multi-turn sessions, totaling 386K filtered examples with both long and short instructions.
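The weighted quality gate is simple arithmetic, sketched here. The weights and the 0.7 cutoff come from the description above; the dictionary keys and the assumption that each sub-score lies in [0, 1] are mine.

```python
# Weights as stated in the summary; key names are hypothetical.
WEIGHTS = {
    "instruction_compliance": 0.40,
    "seamlessness": 0.25,
    "preservation_balance": 0.20,
    "technical_quality": 0.15,
}
PASS_THRESHOLD = 0.7  # "about 0.7 or higher"

def edit_quality(scores: dict[str, float]) -> float:
    """Weighted sum of the four judged sub-scores (each assumed to be in [0, 1])."""
    return sum(weight * scores[name] for name, weight in WEIGHTS.items())

def passes(scores: dict[str, float]) -> bool:
    """True if the edit clears the quality gate and stays in the dataset."""
    return edit_quality(scores) >= PASS_THRESHOLD

good = {"instruction_compliance": 0.9, "seamlessness": 0.8,
        "preservation_balance": 0.6, "technical_quality": 0.5}
bad = {"instruction_compliance": 0.4, "seamlessness": 0.8,
       "preservation_balance": 0.8, "technical_quality": 0.8}
print(edit_quality(good), passes(good))
print(edit_quality(bad), passes(bad))
```

Because instruction compliance carries the largest weight, an edit that looks clean but ignores the prompt (the `bad` case) falls below the cutoff.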

Edits span 8 categories and 35 operations across pixel or color changes, object semantics, scene composition, styles, text or symbols, human edits, scale, and spatial layout, and low-quality operations were removed up front. Multi-turn chains are built by sampling 100K single-turn cases and adding 1 to 4 more operations to create 2 to 5 step sequences with context-aware follow-ups that refer to earlier edits.

Global and style edits succeed most, for example 0.9340 strong style transfer, 0.9068 film grain, 0.8875 modern↔historical restyle, while fine spatial control is hardest, for example 0.5923 relocate object, 0.5759 change font or style, 0.6634 outpainting. Images come from OpenImages, negative attempts are kept as counterexamples for preference learning, metadata is standardized, and the reported build cost is $100,000.

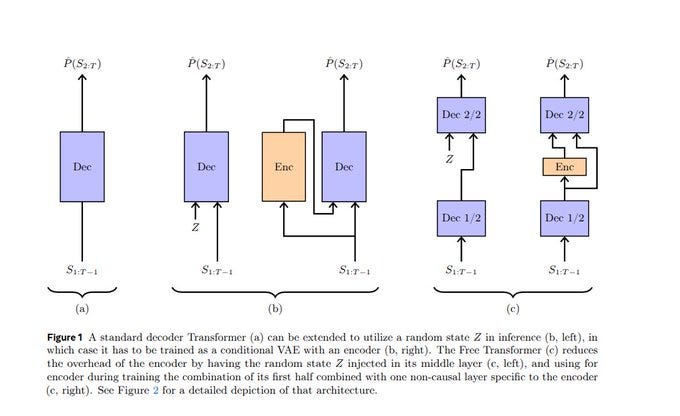

🗞️ The Free Transformer

It’s called “Free Transformer” because it frees the model from needing a heavy encoder during inference.

In a normal setup with a random hidden state, you must use both an encoder and a decoder every time you generate text. That doubles the cost. The Free Transformer avoids that. It learns a shared internal structure during training, then throws away the encoder afterward. At inference, it just samples the hidden state directly and runs the decoder alone.

That’s the big deal — it keeps the benefits of conditional variational autoencoders (which help models plan better) but removes the extra cost that usually makes them impractical. So you get a more stable, globally aware Transformer that costs almost the same as a regular one.

It does this with only about 3% extra compute during training. Normal decoders choose each next token using only the tokens so far, which makes them guess global choices late.

The Free Transformer samples a tiny random state first, then conditions every token on that state. Training pairs a decoder with an encoder using a conditional variational autoencoder so the model learns useful states.

A penalty called KL plus a method called free bits keeps the state from memorizing the whole sequence. The architecture injects the state halfway through the stack by adding a learned vector into keys and values, then decoding continues as usual.
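The free bits idea can be sketched as a KL penalty that only kicks in above a budget. This is one common formulation and an assumption on my part; the threshold name `kappa`, the per-token averaging, and the example values are illustrative rather than taken from the paper.

```python
def free_bits_penalty(kl_per_token: list[float], kappa: float) -> float:
    """Average KL penalty where each token gets `kappa` of KL for free.

    Tokens whose KL is at or below kappa contribute nothing, so the model
    can store a little information in the state without paying for it, but
    storing more (for example, the whole sequence) becomes costly.
    """
    if not kl_per_token:
        raise ValueError("kl_per_token must not be empty")
    return sum(max(0.0, kl - kappa) for kl in kl_per_token) / len(kl_per_token)

# Hypothetical per-token KL values: only the third exceeds the free budget.
print(free_bits_penalty([0.1, 0.5, 2.0], kappa=0.5))  # 0.5
```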

The state is one choice out of 65536 options per token, built from 16 independent bits. At inference the encoder is skipped, a uniform sampler picks the state, and generation runs normally.

This gives the model an early global decision, which reduces brittle behavior after small token mistakes. On 1.5B and 8B models the method improves coding, math word problems, and multiple-choice tasks. Overall a tiny encoder adds a helpful bias that makes reasoning and coding more reliable.
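The inference-time state sampling is easy to picture in code. The sketch below draws 16 fair bits per token and packs them into one of 65536 state indices; the function names and the bit ordering are assumptions for illustration, and the real model would turn this index into a vector injected mid-stack.

```python
import random

NUM_BITS = 16
NUM_STATES = 2 ** NUM_BITS  # 65536 possible states per token

def bits_to_index(bits: list[int]) -> int:
    """Pack 16 bits (most significant first) into a state index in [0, 65535]."""
    if len(bits) != NUM_BITS:
        raise ValueError(f"expected {NUM_BITS} bits, got {len(bits)}")
    index = 0
    for bit in bits:
        index = (index << 1) | (1 if bit else 0)
    return index

def sample_state(rng: random.Random) -> int:
    """Uniformly sample one state by drawing 16 independent fair bits."""
    return bits_to_index([rng.getrandbits(1) for _ in range(NUM_BITS)])

def sample_sequence_states(seq_len: int, seed: int = 0) -> list[int]:
    """Sample one state per token position; no encoder is involved."""
    rng = random.Random(seed)
    return [sample_state(rng) for _ in range(seq_len)]

states = sample_sequence_states(seq_len=8)
print(states)
```

Drawing independent fair bits is equivalent to sampling uniformly over all 65536 states, which is why the encoder can be dropped at inference.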

How different Transformer setups handle a hidden random state called Z.

In the diagram, the first one shows a normal Transformer that just predicts the next tokens from the previous ones. The second adds a random state Z, and an extra encoder network is used during training to infer what that hidden state should be for each example.

The third setup, called the Free Transformer, makes this process simpler. It injects the random state halfway through the model instead of using a separate full encoder.

During training, the encoder is still used once to help the model learn how to pick good hidden states, but it works only with part of the network. During inference, the encoder is skipped, and the random state Z is sampled directly. This design gives the model a global decision early on, helping it produce more consistent and stable output without much extra computation.

🗞️ Every Step Evolves: Scaling Reinforcement Learning for Trillion-Scale Thinking Model

The paper shows how to stably train a 1T-parameter open reasoning model.

They built Ring-1T, a 1 trillion parameter Mixture-of-Experts model. It activates 50B of its 1T parameters per token and reached an IMO 2025 silver level.

The core problems are that training and inference assign different token probabilities, long rollouts waste compute, and standard reinforcement learning stacks get stuck. The recipe starts from a 1T Mixture of Experts base, adds long chain supervised fine tuning, then applies reinforcement learning with verifiable rewards and a short pass of human feedback.

IcePop checks the gap between training and inference for each token and ignores updates on tokens where the gap is too large, which keeps gradients stable. C3PO++ sets a token budget, splits very long rollouts, buffers the unfinished parts, and resumes them next step so hardware stays busy.

ASystem ties it together with AMem for GPU memory, AState for fast peer to peer weight sync, and ASandbox for on demand verifiers. The result is long stable thinking traces and strong scores like 55.94 on ARC AGI 1 and 2088 on CodeForces. Bottom line, this is a practical and stable way to train long thinking at trillion scale.
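IcePop's per-token filtering can be sketched as a mask over the loss. This is a simplified illustration under assumptions: the ratio bounds are placeholder values, and the normalization over kept tokens is my choice, not something stated above.

```python
import math

def icepop_mask(train_logprobs: list[float], infer_logprobs: list[float],
                low: float = 0.5, high: float = 5.0) -> list[float]:
    """Keep (1.0) tokens whose train/inference probability ratio is in [low, high].

    Tokens where the training engine and the inference engine disagree too
    much get 0.0 and therefore contribute no gradient.
    """
    mask = []
    for lp_train, lp_infer in zip(train_logprobs, infer_logprobs):
        ratio = math.exp(lp_train - lp_infer)
        mask.append(1.0 if low <= ratio <= high else 0.0)
    return mask

def masked_token_loss(token_losses: list[float], mask: list[float]) -> float:
    """Average the per-token losses over kept tokens only (0.0 if none are kept)."""
    kept = sum(mask)
    if kept == 0:
        return 0.0
    return sum(loss * m for loss, m in zip(token_losses, mask)) / kept

# Token 0: both engines agree. Token 1: training says 0.9, inference says 0.1.
mask = icepop_mask([math.log(0.5), math.log(0.9)],
                   [math.log(0.5), math.log(0.1)])
print(mask, masked_token_loss([2.0, 100.0], mask))
```

Dropping the mismatched token keeps one outlier from dominating the update, which is the stability effect the summary describes.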

🗞️ Scaling Laws Meet Model Architecture: Toward Inference-Efficient LLMs

The paper shows how to pick LLM architectures that are fast at inference without losing accuracy. It shows a simple rule that links architecture choices to the loss predicted by standard scaling laws.

It then uses that rule to search for the fastest design that still meets a target loss. The study varies 3 knobs: hidden size, the split between feedforward and attention, and grouped query attention.

They keep layer count, total non embedding parameters, and training tokens fixed for fair tests. Larger hidden size and a bigger feedforward share cut attention work and shrink the KV cache, so throughput rises.

Accuracy is not monotonic: both hidden size and the feedforward split have a sweet spot, and too little or too much hurts. They encode these effects as a conditional scaling law that multiplies a baseline loss by 2 small correction factors.

That lets them predict the best settings at 1B and 3B, then train the winners. The “Panda” variants minimize loss, and the “Surefire” variants maximize speed under a loss limit. The result is up to 42% higher throughput with up to 2.1% accuracy gain at the same budget.
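The "baseline loss times 2 correction factors" structure can be written schematically as below. The notation is mine, not the paper's: $N$ is the non-embedding parameter count, $D$ the training tokens, $h$ the hidden size, and $r$ the feedforward-to-attention split. The exact functional forms of $f$ and $g$ are not given in this summary, so they are left abstract.

```latex
L(N, D, h, r) \;\approx\; L_{\mathrm{base}}(N, D) \cdot f(h) \cdot g(r)
```

Here $L_{\mathrm{base}}$ is the loss a standard scaling law predicts from $N$ and $D$ alone, while $f$ and $g$ stay close to 1 and each reach their minimum at an interior sweet spot, which is what makes accuracy non-monotonic in $h$ and $r$.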

🗞️ Text or Pixels? It Takes Half: On the Token Efficiency of Visual Text Inputs in Multimodal LLMs

LLMs spend most time and money in the decoder, so cutting decoder tokens cuts cost and latency. The paper shows a simple way to cut LLM tokens by turning long text into one image while keeping accuracy.

It reports about 50% token savings with similar accuracy, and sometimes 25% to 45% faster inference on large decoders. Long inputs are costly because attention work grows fast as the text gets longer.

They replace most of the text with an image of that text, then keep the short question as normal text. The model’s vision part reads the image and turns it into a small set of visual tokens.

The language part then reads those visual tokens plus the question, so the total token count drops a lot. They test on a retrieval task and a news summarization task.

Results match the normal text baseline while using far fewer decoder tokens. A simple rule works well: aim for roughly half the context as visual tokens. Large models can run faster because shorter decoder sequences outweigh the cost of image processing.
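The token accounting behind the "roughly half" rule is quick to check. The numbers below are hypothetical and only illustrate the arithmetic; real visual token counts depend on the vision encoder and image resolution.

```python
def decoder_tokens_as_text(context_tokens: int, question_tokens: int) -> int:
    """Decoder sequence length when the whole context is fed as text."""
    return context_tokens + question_tokens

def decoder_tokens_as_image(visual_tokens: int, question_tokens: int) -> int:
    """Decoder sequence length when the context is rendered as one image."""
    return visual_tokens + question_tokens

def token_savings(context_tokens: int, visual_tokens: int,
                  question_tokens: int) -> float:
    """Fraction of decoder tokens saved by swapping text context for an image."""
    before = decoder_tokens_as_text(context_tokens, question_tokens)
    after = decoder_tokens_as_image(visual_tokens, question_tokens)
    return 1.0 - after / before

# Hypothetical case: 2000 text tokens become 1000 visual tokens, 50-token question.
savings = token_savings(context_tokens=2000, visual_tokens=1000, question_tokens=50)
print(f"{savings:.1%}")
```

The question stays as text in both cases, so the overall saving lands slightly under the 50% reduction applied to the context alone.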

🗞️ Fluidity Index: Next-Generation Super-intelligence Benchmarks

This paper introduces a new benchmark called the Fluidity Index (FI). It argues that a truly superintelligent system must show second-order adaptability, meaning it can keep itself running by managing its own compute and adjusting as the world shifts.

Traditional benchmarks like MMLU test if a model can answer a fixed set of questions, but they do not check if it can adapt when conditions change. The Fluidity Index is different because it measures how well a model updates its answers when the environment or context shifts. It tracks how accurate the model remains as things change over time, making it a test of adaptability and real-time reasoning, not just static accuracy.

So the authors call FI a “superintelligence benchmark,” as it tests whether a model can behave like a system that sustains and corrects itself over time, not just one that gives right answers once. FI follows the initial state, the current state, and the next state, then checks how the model adjusts its prediction.

Each change gets an Accuracy Adaptation score that compares the model’s update to the size of the change. FI averages these scores across changes to get one number.
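The summary does not spell out the formula, so the sketch below is one plausible reading, offered as an assumption: score each change as 1 minus the relative gap between the actual change and the model's predicted change, then average those scores. The function names are hypothetical.

```python
def accuracy_adaptation(actual_change: float, predicted_change: float) -> float:
    """Score one environment change: 1.0 means the model's update matched it exactly.

    Assumed form: 1 - |actual - predicted| / |actual|.
    """
    if actual_change == 0:
        raise ValueError("actual_change must be non-zero")
    return 1.0 - abs(actual_change - predicted_change) / abs(actual_change)

def fluidity_index(changes: list[tuple[float, float]]) -> float:
    """Average the adaptation scores over (actual_change, predicted_change) pairs."""
    if not changes:
        raise ValueError("changes must not be empty")
    scores = [accuracy_adaptation(actual, predicted) for actual, predicted in changes]
    return sum(scores) / len(scores)

# Two hypothetical shifts of size 10: one tracked fully, one only half tracked.
print(fluidity_index([(10.0, 10.0), (10.0, 5.0)]))  # 0.75
```

Under this reading, a model that overshoots badly can score below zero on a single change, so the average penalizes overreaction as well as sluggishness.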

The setup is closed loop and open ended, so the system reacts to the model and gives live feedback. The paper defines 3 orders of fluidity.

1st order means the model improves an answer across steps using extra tokens. 2nd order means it allocates tokens based on outcomes so it can earn back spent compute. 3rd order means it plans and budgets compute so long tasks can continue without manual help.

In simulation, higher FI matched stronger context understanding and better accuracy after shifts. FI rewards models that stay right while the rules move, which is closer to real autonomy.

That’s a wrap for today, see you all tomorrow.

If we replicate human intelligence without understanding how it works, what have we accomplished?