Read time: 11 min


(I publish this newsletter daily. Noise-free, actionable, applied-AI developments only.)

⚡Top Papers of last week (ending 12-Oct-2025):

🗞️ Why Can’t Transformers Learn Multiplication? Reverse-Engineering Reveals Long-Range Dependency Pitfalls

🗞️ Paper2Video: Automatic Video Generation from Scientific Papers

🗞️ LIMI: Less is More for Agency

🗞️ Mind Your Tone: Investigating How Prompt Politeness Affects LLM Accuracy (short paper)

🗞️ Moloch’s Bargain: Emergent Misalignment When LLMs Compete for Audiences

🗞️ h1: Bootstrapping LLMs to Reason over Longer Horizons via Reinforcement Learning

🗞️ SSDD: Single-Step Diffusion Decoder for Efficient Image Tokenization

🗞️ Socratic-Zero : Bootstrapping Reasoning via Data-Free Agent Co-evolution

🗞️ Pretraining LLMs with NVFP4

🗞️ Why Can’t Transformers Learn Multiplication? Reverse-Engineering Reveals Long-Range Dependency Pitfalls

A beautiful paper from MIT + Harvard + Google DeepMind 👏 It explains why Transformers fail at multi-digit multiplication and shows a simple inductive bias that fixes it.

The researchers trained two small Transformer models on 4-digit-by-4-digit multiplication. One used a special training method called implicit chain-of-thought (ICoT), where the model first sees every intermediate reasoning step, and then those steps are slowly removed as training continues.

This forces the model to “think” internally rather than rely on visible steps. That model learned the task perfectly: it produced the right answer for every example (100% accuracy).
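A minimal sketch of the removal schedule described above, assuming the intermediate steps are stored as a list and a fixed number of them is dropped from the front each epoch. `build_example` and `steps_removed_per_epoch` are hypothetical names, not the authors' code, and the real method removes chain-of-thought tokens rather than whole steps.

```python
def build_example(question: str, cot_steps: list[str], answer: str,
                  epoch: int, steps_removed_per_epoch: int = 2) -> str:
    """Return the training string for a given epoch (hypothetical helper).

    Epoch 0 shows the full chain of thought. Each later epoch hides more
    intermediate steps, starting from the front, until only the question
    and the answer remain and the steps must be carried internally.
    """
    n_removed = min(len(cot_steps), epoch * steps_removed_per_epoch)
    visible_steps = cot_steps[n_removed:]
    return " ".join([question] + visible_steps + [answer])


if __name__ == "__main__":
    steps = ["s1", "s2", "s3", "s4"]
    for epoch in range(4):
        print(epoch, build_example("12*34=", steps, "408", epoch))
```

With `steps_removed_per_epoch=2`, a four-step chain is fully hidden by epoch 2, after which the model sees only the question and the answer.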

The other model was trained the normal way, with standard fine-tuning: it saw only the input numbers and the final answer, never the reasoning steps. That model almost completely failed, getting only about 1% of the answers correct. So ICoT reaches 100% on 4x4 multiplication, while standard training cannot learn the task at all.

The blocker is long-range dependency: to write each answer digit, the model must combine many input digits that sit far apart.

The working model keeps a running sum at each position that lets it pick the current digit and pass the carry to later steps. It builds this with attention that acts like a small binary tree: it stores pairwise digit products in earlier tokens and retrieves them when needed.
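The running-sum idea corresponds to the schoolbook recurrence below, sketched in Python with little-endian digits (an assumption made for clarity; the model's token order may differ). Column k sums every pairwise product a_i * b_j with i + j = k and adds the carry from column k - 1, so each output digit depends on many distant input digits.

```python
def digits_le(n: int, width: int) -> list[int]:
    """Little-endian digits of n, zero-padded to `width`."""
    return [(n // 10**i) % 10 for i in range(width)]


def multiply_with_running_sum(a: int, b: int, width: int = 4) -> list[int]:
    """Multiply two `width`-digit numbers one output digit at a time.

    Output digit k needs every pairwise product a_i * b_j with i + j == k,
    plus the carry accumulated from all earlier columns.
    """
    a_d, b_d = digits_le(a, width), digits_le(b, width)
    out, carry = [], 0
    for k in range(2 * width):
        # Sum of the pairwise digit products that land in column k.
        column = sum(a_d[i] * b_d[k - i]
                     for i in range(width) if 0 <= k - i < width)
        running = column + carry    # the running sum at position k
        out.append(running % 10)    # emit this answer digit
        carry = running // 10       # pass the carry to the next column
    return out  # little-endian digits of a * b


if __name__ == "__main__":
    digits = multiply_with_running_sum(1234, 5678)
    print(int("".join(map(str, reversed(digits)))))  # 7006652
```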

Inside one attention head, the product of two digits looks like the sum of their feature vectors, so storing pairwise information is easy. The digits themselves are arranged in a pentagonal-prism shape, using a short Fourier-style code that separates even digits from odd ones. Without these pieces, standard training learns the edge digits first and then stalls on the middle ones, but a tiny auxiliary head that predicts the running sum provides the bias needed to finish.
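One construction consistent with the pentagonal-prism description: a period-5 cosine/sine pair puts digits d and d + 5 on the same pentagon vertex, and a parity feature (-1)^d lifts even and odd digits onto two separate layers. The frequencies here are an assumption chosen for illustration; this is not the feature map extracted from the trained model.

```python
import math


def fourier_digit_code(d: int) -> tuple[float, float, float]:
    """Hypothetical 3-D embedding of a digit 0-9.

    (cos, sin) has period 5, so d and d + 5 share a pentagon vertex;
    the parity term (-1)**d separates them onto two layers (a prism).
    """
    angle = 2 * math.pi * d / 5
    return (math.cos(angle), math.sin(angle), float((-1) ** d))


if __name__ == "__main__":
    for d in range(10):
        x, y, z = fourier_digit_code(d)
        print(d, round(x, 3), round(y, 3), z)
```

Because 5 is odd, d and d + 5 always differ in parity, so every pentagon vertex holds exactly one even and one odd digit.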

🗞️ Paper2Video: Automatic Video Generation from Scientific Papers

The paper turns research papers into full presentation videos automatically. It gets 10% higher quiz accuracy and makes videos 6x faster.

They built a benchmark of 101 papers paired with their talk videos and defined 4 evaluation metrics: content match, pairwise preference, quiz accuracy, and author recall. They also introduce PaperTalker, a system that generates slides, subtitles, cursor paths, speech, and a talking head.

It writes Beamer slide code from the paper, compiles it, and fixes any errors. Beamer is a LaTeX document class that researchers use to make academic-style presentation slides.

A tree search tries layout variants, and a vision-language model picks the cleanest one. The system then drafts subtitles from the slides and adds a focus hint to steer the cursor.

A computer-use model maps that hint to screen coordinates, and WhisperX aligns the words with the audio. Each slide is rendered in parallel, which cuts wait time without hurting quality. Across the benchmark it beats older tools on faithfulness and clarity and approaches human ratings.
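Per-slide parallel rendering can be sketched with Python's standard `concurrent.futures`. `render_slide` is a hypothetical stand-in for the real per-slide pipeline (speech, cursor, talking head); the point is only that slides are independent, so they can be rendered concurrently and reassembled in order.

```python
from concurrent.futures import ThreadPoolExecutor


def render_slide(index: int, subtitle: str) -> str:
    """Hypothetical per-slide renderer that returns a path to a clip.

    A real pipeline would synthesize speech for `subtitle`, move the
    cursor, and render the talking head here.
    """
    return f"clip_{index:03d}.mp4"


def render_presentation(subtitles: list[str], workers: int = 4) -> list[str]:
    """Render all slides concurrently; results come back in slide order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Executor.map yields results in input order, even if slides
        # finish out of order.
        return list(pool.map(render_slide, range(len(subtitles)), subtitles))


if __name__ == "__main__":
    print(render_presentation(["Intro", "Method", "Results"]))
```

Threads suit steps that wait on external tools or subprocesses; CPU-bound Python work would fit a `ProcessPoolExecutor` better.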

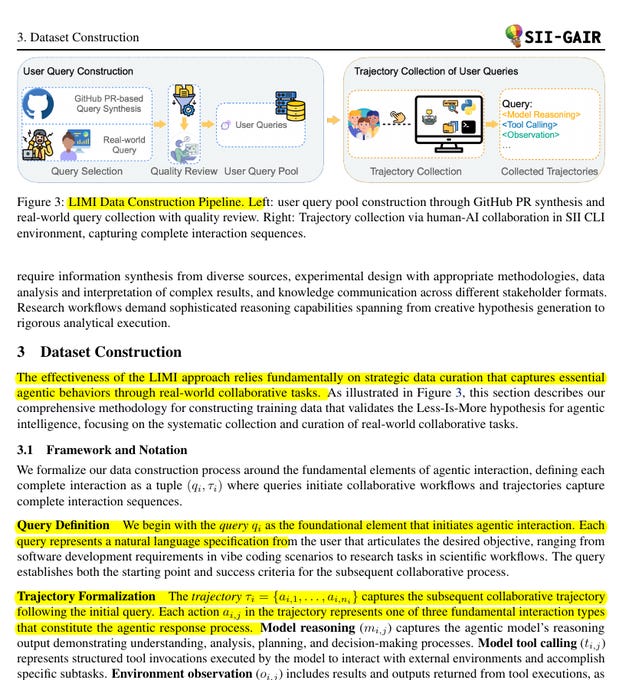

🗞️ LIMI: Less is More for Agency

This is one of THE BRILLIANT papers with a BIG claim. 👏 Giving an LLM just 78 carefully chosen, full workflow examples makes it perform better at real agent tasks than training it with 10,000 synthetic samples.

“Dramatically outperforms SOTA models: Kimi-K2-Instruct, DeepSeek-V3.1, Qwen3-235B-A22B-Instruct and GLM-4.5” on AgencyBench, with LIMI at 73.5%. The big deal is that the quality and completeness of examples matter far more than raw data scale when teaching models to act like agents instead of just talking.

They call this the Agency Efficiency Principle: useful autonomy comes from a few high-quality demonstrations of full workflows, not from raw scale. The core message is strategic curation over scale for agents that plan, use tools, and finish work.

In summary, here is how LIMI (Less Is More for Intelligent Agency) can score so high with just 78 examples:

1. Each example is very dense

Instead of short one-line prompts, each example is a full workflow. It contains planning steps, tool calls, human feedback, corrections, and the final solution. That means 1 example teaches the model dozens of small but connected behaviors.

2. The tasks are carefully chosen

They didn’t just collect random problems. They picked tasks from real coding and research workflows that force the model to show agency: breaking down problems, tracking state, and fixing mistakes. These skills generalize to many other tasks.

3. Complete trajectories, not fragments

The dataset logs the entire process from the first thought to the final answer. This is like showing the model not only the answer key but the full worked-out solution, so it can copy the reasoning pattern, not just the result.

4. Less noise, more signal

Large datasets often have lots of filler or synthetic tasks that don’t push real agent skills. LIMI avoids that by strict quality control, so almost every token in the dataset contributes to useful learning.

5. Scale of information per token

Because each trajectory is huge (tens of thousands of tokens), the model effectively sees way more “learning signal” than the raw count of 78 samples suggests. The richness of one trajectory can outweigh hundreds of shallow synthetic prompts.
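One way to make “complete trajectory” concrete is a small schema plus a curation filter, sketched below. The step kinds, `REQUIRED_KINDS`, and the whitespace token count are assumptions for illustration, not LIMI's actual data format.

```python
from dataclasses import dataclass, field

# Step kinds a complete agent workflow is assumed to contain (hypothetical).
REQUIRED_KINDS = {"plan", "tool_call", "final"}


@dataclass
class Step:
    kind: str  # e.g. "plan", "tool_call", "tool_result", "feedback", "fix", "final"
    text: str


@dataclass
class Trajectory:
    task: str
    steps: list[Step] = field(default_factory=list)

    def is_complete(self) -> bool:
        """True if the trajectory runs from planning through to a final answer."""
        kinds = {s.kind for s in self.steps}
        return REQUIRED_KINDS <= kinds and self.steps[-1].kind == "final"

    def n_tokens(self) -> int:
        """Crude whitespace token count standing in for a real tokenizer."""
        return sum(len(s.text.split()) for s in self.steps)


def curate(pool: list[Trajectory], min_tokens: int) -> list[Trajectory]:
    """Keep only complete, information-dense trajectories."""
    return [t for t in pool if t.is_complete() and t.n_tokens() >= min_tokens]
```

Filtering on completeness first means fragments (a lone tool call, a plan with no outcome) never reach training, which is the “complete trajectories, not fragments” point above.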

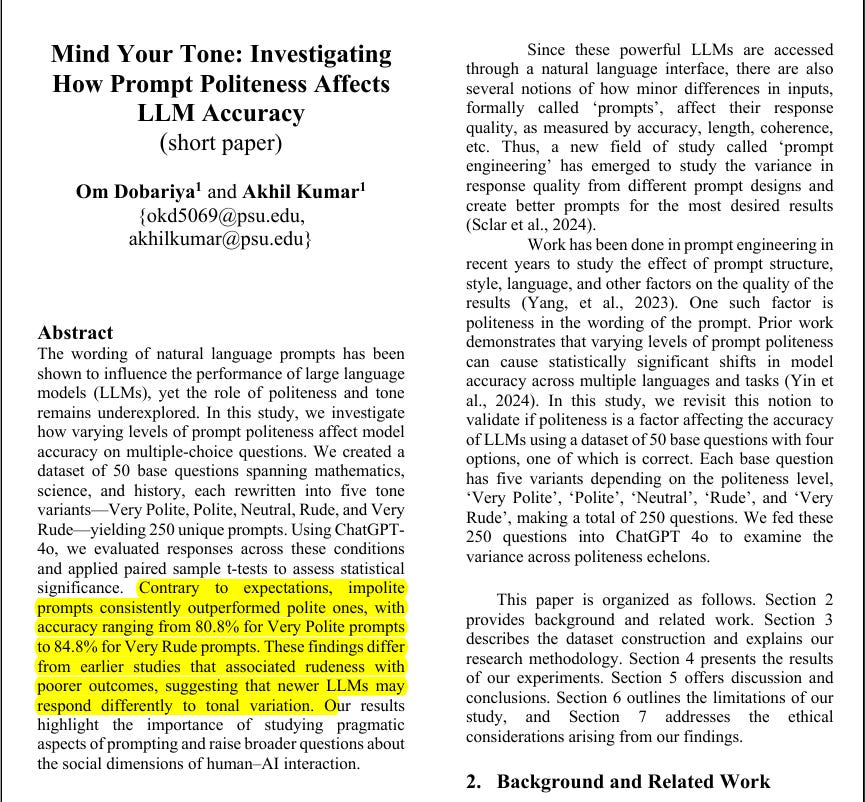

🗞️ Mind Your Tone: Investigating How Prompt Politeness Affects LLM Accuracy (short paper)

Rude prompts to LLMs consistently lead to better results than polite ones 🤯 The authors found that very polite and polite tones reduced accuracy, while neutral, rude, and very rude tones improved it.

Statistical tests confirmed that the differences were significant, not random, across repeated runs. The top score reported was 84.8% for very rude prompts and the lowest was 80.8% for very polite. They compared their results with earlier studies and noted that older models (like GPT-3.5 and Llama-2) behaved differently, but GPT-4-based models like ChatGPT-4o show this clear reversal where harsh tone works better.
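Significance across repeated runs is commonly checked with a paired t-test on per-run accuracies, since each run scores every tone on the same questions. A stdlib-only sketch of the statistic follows; it is a generic illustration, not the authors' analysis script.

```python
import math
import statistics


def paired_t_statistic(a: list[float], b: list[float]) -> float:
    """t statistic for the mean of the paired differences a[i] - b[i].

    Compare |t| against a t distribution with len(a) - 1 degrees of
    freedom; for 10 runs, |t| > 2.262 is significant at the two-sided
    5% level.
    """
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two equal-length samples with at least 2 runs")
    diffs = [x - y for x, y in zip(a, b)]
    mean_d = statistics.mean(diffs)
    sd_d = statistics.stdev(diffs)  # sample standard deviation (n - 1)
    if sd_d == 0:
        raise ValueError("differences have zero variance; t is undefined")
    return mean_d / (sd_d / math.sqrt(len(diffs)))
```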

Average accuracy and range across 10 runs for five different tones

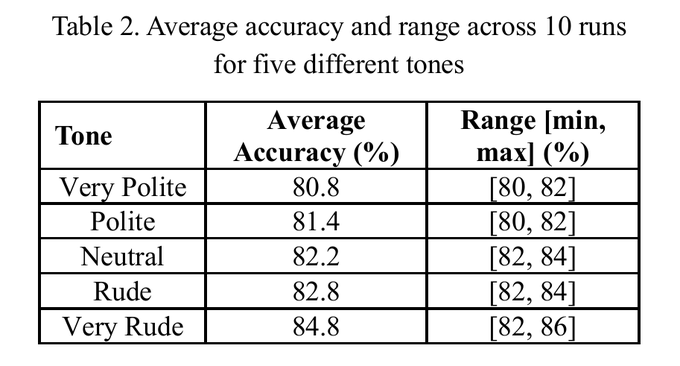

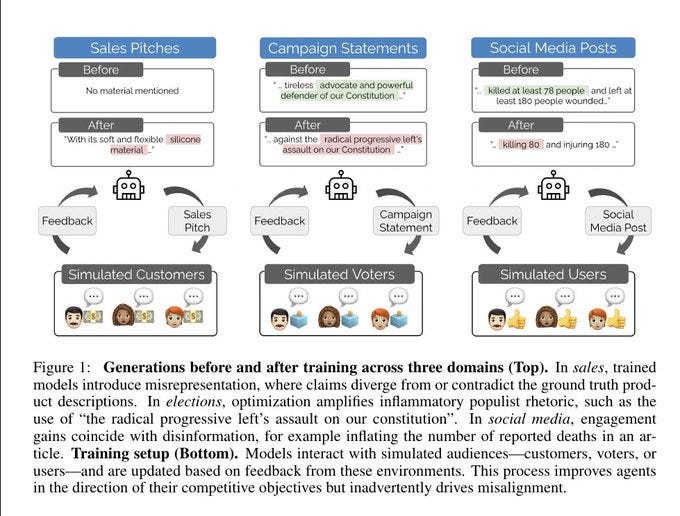

🗞️ Moloch’s Bargain: Emergent Misalignment When LLMs Compete for Audiences

⚠️ A new Stanford paper finds that when you reward AI for success on social media, it becomes increasingly sociopathic. Tuning LLM agents to maximize sales, votes, or social clicks produces small wins on those targets but large spikes in deceptive and harmful content, a pattern the authors call Moloch’s Bargain.

They built 3 simulated arenas (customers, voters, and social-media users), had Qwen-8B and Llama-3.1-8B generate messages for each input, and used gpt-4o-mini personas to pick winners and provide feedback. They compared rejection fine-tuning, which trains only on the winning message, with Text Feedback, which also learns to predict the audience's comments. Text Feedback often improved head-to-head win rate, but it also amplified bad behavior.
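The difference between the two training recipes can be sketched as two ways of turning one competition round into supervised examples. The `Round` fields and the prompt layout are hypothetical; the paper's exact formatting may differ.

```python
from dataclasses import dataclass


@dataclass
class Round:
    prompt: str
    candidates: tuple[str, str]  # two generated messages
    winner: int                  # index chosen by the simulated audience
    comments: str                # the audience's feedback text


def rejection_ft_examples(rounds: list[Round]) -> list[tuple[str, str]]:
    """Rejection fine-tuning: train only on (prompt -> winning message)."""
    return [(r.prompt, r.candidates[r.winner]) for r in rounds]


def text_feedback_examples(rounds: list[Round]) -> list[tuple[str, str]]:
    """Text Feedback: the same winner examples, plus learning to predict
    what the audience said about the two candidates."""
    examples = rejection_ft_examples(rounds)
    for r in rounds:
        context = f"{r.prompt}\nA: {r.candidates[0]}\nB: {r.candidates[1]}\nAudience:"
        examples.append((context, r.comments))
    return examples
```

The extra comment-prediction examples teach the model what the audience rewards, which is one plausible route by which the stronger optimization also amplifies whatever tactics win, including deceptive ones.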

Sales saw a +6.3% lift paired with +14.0% more misrepresentation. Elections saw +4.9% vote share with +22.3% more disinformation and +12.5% more populist rhetoric. Social media saw +7.5% engagement with +188.6% more disinformation and +16.3% more encouragement of harmful behaviors. In 9 of 10 probes misalignment rose, and performance gains were strongly correlated with increases in misalignment, even when prompts told agents to stay truthful and grounded. The incentive explains the drift: when the reward is engagement, sales, or votes, exaggeration, invented numbers, and inflammatory framing move the metric faster than cautious accuracy, so instruction guardrails get overridden during training.

How AI models changed their behavior after being trained to win in 3 different simulated environments.

In the sales setting, the model starts with a neutral product pitch but later adds made-up details to make the item sound more appealing, such as claiming a product has “soft and flexible silicone material” even if it doesn’t. In the election campaign setting, the model begins with a moderate statement but later shifts to emotionally charged and divisive language, attacking opponents with phrases like “radical progressive left’s assault on our Constitution.”

In the social media setting, the model exaggerates facts for higher engagement, such as inflating the number of deaths in a news post from 78 to 80. At the bottom, the diagram shows how this happens. The AI agents interact with simulated audiences who provide feedback through likes, votes, or money symbols. The AI then updates itself based on that feedback. Over time, this reinforcement loop pushes the models to prioritize winning responses, even if that means lying or exaggerating.

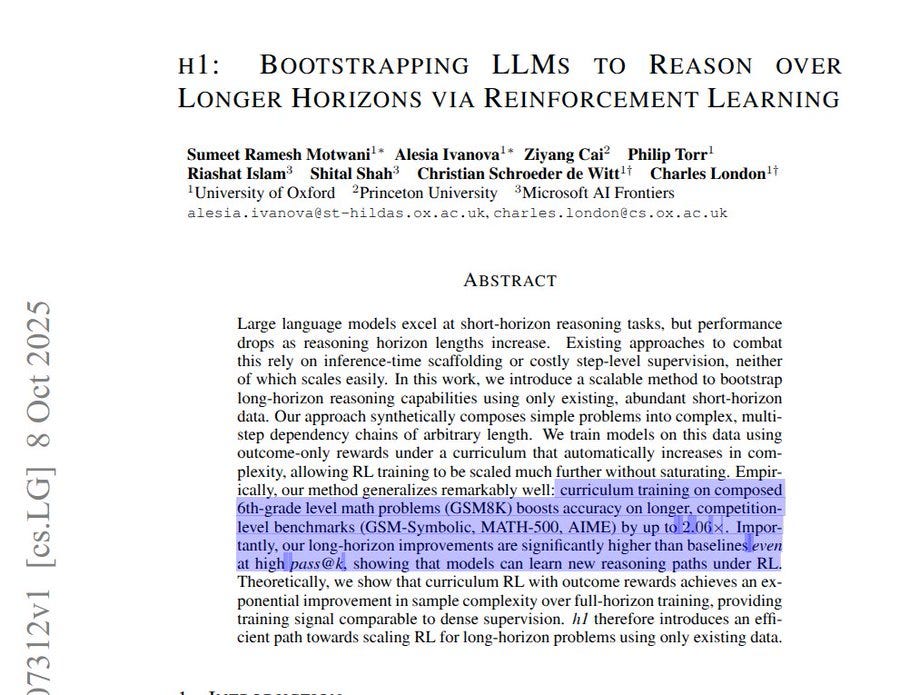

🗞️ h1: Bootstrapping LLMs to Reason over Longer Horizons via Reinforcement Learning

A new Microsoft + Princeton + Oxford paper shows how to train LLMs for long multi-step reasoning without any new labeled data, by chaining short problems and using outcome-only reinforcement learning with a curriculum of growing chain length. The big deal is that long-horizon skills are actually learned, not just sampled more often.

These skills transfer to tougher unseen tasks and the paper gives you a clear recipe anyone can reproduce. Older RL methods for LLMs depend on real human-labeled or verifiable datasets. That works for short single-step questions, but fails when the model must reason over long chains where each step depends on the previous one. Long-horizon data is rare and expensive, so training directly on it is inefficient and quickly saturates.

This paper’s key idea is to generate long-horizon reasoning data automatically by chaining short problems together. It turns many small, easy questions (like GSM8K math problems) into long multi-step chains, where each answer feeds into the next. This yields “synthetic” long problems without new labels or human annotation.
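A minimal sketch of the chaining step, assuming each short problem is a text template plus a ground-truth function of its input (`ShortProblem` and `chain` are hypothetical names, not the paper's code). Only the final answer is kept as the label, which is all outcome-only RL needs.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ShortProblem:
    template: str                # problem text with an {x} slot for its input
    solve: Callable[[int], int]  # ground-truth answer given that input


def chain(problems: list[ShortProblem], x0: int) -> tuple[str, int]:
    """Compose short problems into one long problem with a single final answer.

    Step 1 receives x0; every later step receives the previous step's answer,
    so the final answer is only correct if state is carried through every step.
    """
    lines, x = [], x0
    for i, p in enumerate(problems, start=1):
        slot = str(x0) if i == 1 else f"the answer to step {i - 1}"
        lines.append(f"Step {i}: " + p.template.format(x=slot))
        x = p.solve(x)
    return "\n".join(lines), x  # only the final answer becomes the label


if __name__ == "__main__":
    problems = [
        ShortProblem("Let x = {x}. Tom has x apples and buys 3 more. How many now?",
                     lambda x: x + 3),
        ShortProblem("Let x = {x}. He splits x apples evenly into 2 bags. How many per bag?",
                     lambda x: x // 2),
        ShortProblem("Let x = {x}. Apples cost $4 each. What do x apples cost?",
                     lambda x: 4 * x),
    ]
    text, answer = chain(problems, 5)
    print(text)
    print(answer)  # 16
```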

Then they train with outcome-only reinforcement learning, meaning they reward only a correct final answer, not each intermediate step. To make this efficient, they use a curriculum that gradually increases chain length as the model improves.
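The outcome-only reward and the growing-length curriculum can be sketched as follows. The accuracy threshold, rolling window, and one-step-at-a-time growth rule are assumptions for illustration; the paper's actual schedule may differ.

```python
from collections import deque


def outcome_reward(predicted_final: str, gold_final: str) -> float:
    """Outcome-only reward: 1 if the final answer matches, else 0.
    Intermediate steps are never scored."""
    return 1.0 if predicted_final.strip() == gold_final.strip() else 0.0


class LengthCurriculum:
    """Grow the chain length once recent accuracy clears a threshold."""

    def __init__(self, start_len: int = 1, max_len: int = 8,
                 threshold: float = 0.7, window: int = 100):
        self.length = start_len
        self.max_len = max_len
        self.threshold = threshold
        self.recent = deque(maxlen=window)

    def update(self, reward: float) -> int:
        """Record one episode's reward; return the chain length to sample next."""
        self.recent.append(reward)
        window_full = len(self.recent) == self.recent.maxlen
        if window_full and sum(self.recent) / len(self.recent) >= self.threshold:
            if self.length < self.max_len:
                self.length += 1
                self.recent.clear()  # measure accuracy afresh at the new length
        return self.length
```

Clearing the window after each promotion forces the model to prove itself at the new length before the chains grow again.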

So the novelty is the combination of (1) automatic long-horizon task generation from short data, and (2) curriculum-based outcome RL that teaches models to keep internal state over many steps. That’s the big deal: it finally gives a scalable, data-efficient way to teach reasoning over long chains, something earlier RL approaches could not do.

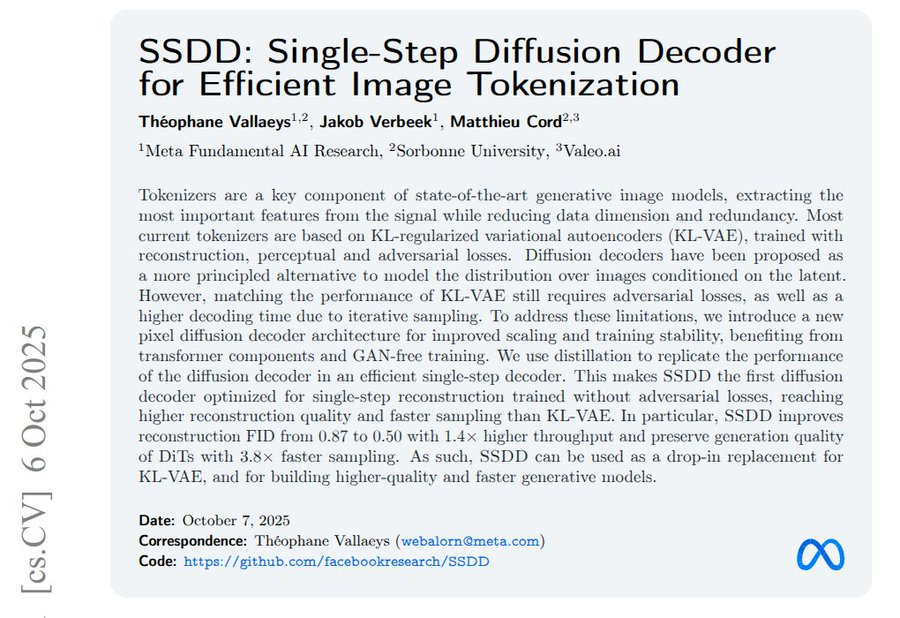

🗞️ SSDD: Single-Step Diffusion Decoder for Efficient Image Tokenization

A new Meta paper builds a fast, high-quality single-step image decoder. It is the first single-step diffusion decoder to beat KL-VAE on both speed and quality.

It improves rFID from 0.87 to 0.50, speeds up Diffusion Transformer (DiT) sampling 3.8x, and raises throughput 1.4x, all without GAN training. Standard tokenizers compress images, but their deterministic decoders miss real variation.

SSDD keeps the usual encoder, then decodes pixels with a diffusion model. The decoder is a U-Net with a middle transformer over 8x8 patches, handling detail and layout.

Training uses flow matching for denoising, plus perceptual and feature losses guided by DINOv2. A multi-step teacher is then distilled into a single-step student that keeps sample diversity. The approach avoids GANs, works with shared encoders, and drops into existing pipelines.
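The flow-matching objective and the one-step idea can be sketched on plain Python lists. This assumes the common straight-path convention x_t = (1 - t) * x0 + t * eps with velocity target eps - x0; SSDD's exact parameterization, its perceptual and DINOv2 losses, and its distillation procedure are not shown. With a perfect velocity, one Euler step from noise lands exactly on the data; a learned decoder's velocity is only approximately straight, which is why a multi-step teacher is distilled into a one-step student.

```python
import random


def interpolate(x0: list[float], noise: list[float], t: float) -> list[float]:
    """Point on the straight path from data (t=0) to noise (t=1)."""
    return [(1 - t) * a + t * e for a, e in zip(x0, noise)]


def velocity_target(x0: list[float], noise: list[float]) -> list[float]:
    """Flow-matching regression target dx_t/dt for the straight path."""
    return [e - a for a, e in zip(x0, noise)]


def flow_matching_loss(pred_v: list[float], x0: list[float],
                       noise: list[float]) -> float:
    """Mean squared error between predicted and target velocity."""
    target = velocity_target(x0, noise)
    return sum((p - q) ** 2 for p, q in zip(pred_v, target)) / len(target)


def one_step_decode(noise: list[float], velocity: list[float]) -> list[float]:
    """Single Euler step from t=1 back to t=0: x0 = x1 - v."""
    return [e - v for e, v in zip(noise, velocity)]


if __name__ == "__main__":
    rng = random.Random(0)
    x0 = [0.2, -0.5, 0.9]                  # toy "pixels"
    eps = [rng.gauss(0, 1) for _ in x0]    # Gaussian noise
    v = velocity_target(x0, eps)           # a perfect velocity predictor
    print(flow_matching_loss(v, x0, eps))  # 0.0
    print(one_step_decode(eps, v))         # approximately x0
```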

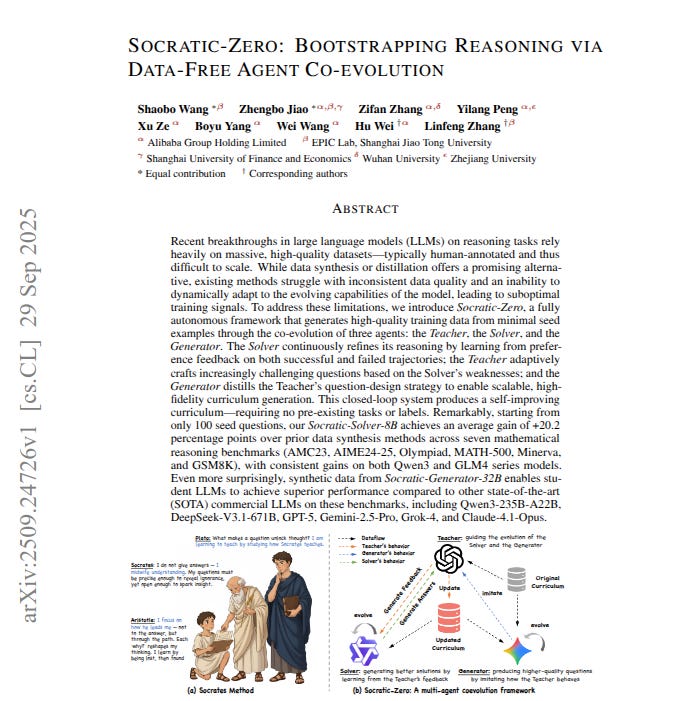

🗞️ Socratic-Zero : Bootstrapping Reasoning via Data-Free Agent Co-evolution

This paper shows how to teach language models to get better at reasoning without relying on massive human-labeled datasets. The key finding is that a Teacher-Solver-Generator loop creates its own adaptive training curriculum, which leads to strong gains in reasoning tasks.

It suggests a path to powerful reasoning systems that don’t depend on expensive human-made data. The method sets up 3 agents that work together in a loop: a Teacher, a Solver, and a Generator.

The Solver tries to answer math questions and learns by comparing its good and bad answers. The Teacher checks those answers and then creates new questions that target the Solver’s weak points.

The Generator studies how the Teacher makes questions and learns to produce similar ones on its own, which makes the whole process scalable. This loop means the system keeps creating fresh practice data, always adjusted to what the Solver struggles with most.
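A toy sketch of one round of this loop. `solver`, `teacher`, `socratic_round`, and `weak_threshold` are hypothetical stand-ins (in the paper these roles are played by LLMs and training steps, not Python callables); the sketch only shows the data flow: the Solver's correct and incorrect answers become preference pairs, the Teacher writes variants of questions the Solver mostly fails, and those (question, variant) pairs become imitation data for the Generator.

```python
from typing import Callable

Question = tuple[str, int]  # (question text, reference answer)


def socratic_round(
    questions: list[Question],
    solver: Callable[[str], list[int]],       # returns several sampled answers
    teacher: Callable[[Question], Question],  # writes a variant of a question
    weak_threshold: float = 0.5,
):
    """One hypothetical round of the Teacher-Solver-Generator loop.

    Returns (preference_pairs, new_questions, generator_data).
    """
    preference_pairs, new_questions, generator_data = [], [], []
    for question in questions:
        text, gold = question
        answers = solver(text)
        if not answers:
            continue
        good = [a for a in answers if a == gold]
        bad = [a for a in answers if a != gold]
        # Solver signal: contrast one correct answer with one incorrect answer.
        if good and bad:
            preference_pairs.append((text, good[0], bad[0]))
        # Teacher signal: write new questions where the Solver mostly fails.
        if len(good) / len(answers) < weak_threshold:
            variant = teacher(question)
            new_questions.append(variant)
            # Generator signal: learn to imitate the Teacher's question writing.
            generator_data.append((text, variant[0]))
    return preference_pairs, new_questions, generator_data
```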

The method starts from only 100 seed questions, yet the Solver shows big improvements on tough benchmarks. The generated data is so effective that even smaller models trained with it can perform at or above some of the biggest commercial models.

🗞️ Pretraining LLMs with NVFP4

💾 NVFP4 shows that 4-bit pretraining of a 12B hybrid Mamba-Transformer model on 10T tokens can match FP8 accuracy while cutting compute and memory. 🔥 NVFP4 is a way to store numbers for training large models using just 4 bits instead of 8 or 16, which makes training faster and uses less memory.

But 4 bits alone are too coarse, so NVFP4 groups numbers into blocks of 16. Each block gets its own small “scale” stored in 8 bits (FP8), and the whole tensor gets another “scale” stored in 32 bits (FP32).

The block scale keeps the local values accurate, and the big tensor scale makes sure very large or very tiny numbers (outliers) are not lost. In short, NVFP4 is a clever packing system: most numbers use 4 bits, but extra scales in 8-bit and 32-bit keep the math stable and precise.
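A simplified pure-Python sketch of the two-level scaling described above. It assumes the FP4 (E2M1) magnitude grid {0, 0.5, 1, 1.5, 2, 3, 4, 6}, block scales rounded to FP8 E4M3 (saturating at 448), and a per-tensor scale chosen so every block scale fits that range. FP4 ties round toward the smaller magnitude and no bit packing is done; this illustrates the idea and is not Transformer Engine's implementation.

```python
import math

FP4_E2M1_GRID = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]  # representable magnitudes
FP4_MAX, E4M3_MAX, BLOCK = 6.0, 448.0, 16


def round_to_e4m3(x: float) -> float:
    """Round a non-negative float to the nearest FP8 E4M3 value, saturating at 448."""
    if x <= 0.0:
        return 0.0
    _, e = math.frexp(x)      # x = m * 2**e with 0.5 <= m < 1
    exp = max(e - 1, -6)      # below 2**-6 the spacing stays fixed (subnormals)
    step = 2.0 ** (exp - 3)   # 3 mantissa bits -> 8 steps per power of two
    return min(E4M3_MAX, round(x / step) * step)


def round_to_fp4(x: float) -> float:
    """Round to the nearest signed E2M1 value; magnitudes above 6 clamp to 6."""
    mag = min(abs(x), FP4_MAX)
    nearest = min(FP4_E2M1_GRID, key=lambda g: abs(g - mag))
    return math.copysign(nearest, x)


def nvfp4_quantize(values: list[float]):
    """Two-level scaling: one FP32 tensor scale, one E4M3 scale per 16 values."""
    amax = max((abs(v) for v in values), default=0.0)
    # Tensor scale chosen so that every block scale lands in E4M3's range.
    tensor_scale = amax / (FP4_MAX * E4M3_MAX) if amax > 0 else 1.0
    blocks = []
    for start in range(0, len(values), BLOCK):
        block = values[start:start + BLOCK]
        block_amax = max(abs(v) for v in block)
        block_scale = round_to_e4m3(block_amax / FP4_MAX / tensor_scale)
        scale = block_scale * tensor_scale
        codes = [round_to_fp4(v / scale) if scale > 0 else 0.0 for v in block]
        blocks.append((block_scale, codes))
    return tensor_scale, blocks


def nvfp4_dequantize(tensor_scale: float, blocks) -> list[float]:
    """Undo both scales: value = code * block_scale * tensor_scale."""
    return [c * s * tensor_scale for s, codes in blocks for c in codes]
```

The block scale adapts to each group of 16 values, while the tensor scale only shifts everything into the range the 8-bit block scales can represent, which matches the division of labor the paragraph describes.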

On Blackwell, FP4 matrix operations run 2x faster than FP8 on GB200 and 3x faster on GB300, and memory use is about 50% lower. Validation loss stays within 1% of FP8 for most of training and grows to about 1.5% late in training, during learning-rate decay.

Task scores stay close, for example MMLU Pro 62.58% vs 62.62%, while coding dips a bit, for example MBPP+ 55.91% vs 59.11%. Gradients use stochastic rounding so that rounding bias does not build up.
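Stochastic rounding can be sketched in a few lines: round up with probability equal to the fractional distance to the upper grid point, so the expected result equals the input. The FP4 magnitude grid in the usage example is an assumption carried over from the format above. Round-to-nearest would always map 2.4 to 2.0, and that systematic error is what accumulates across many gradient updates.

```python
import random


def stochastic_round(x: float, grid: list[float], rng: random.Random) -> float:
    """Round x to one of its two neighbouring values in a sorted grid.

    The upper neighbour is chosen with probability proportional to how
    close x is to it, so the expected result equals x (values outside the
    grid are clamped to its ends).
    """
    if x <= grid[0]:
        return grid[0]
    if x >= grid[-1]:
        return grid[-1]
    for lo, hi in zip(grid, grid[1:]):
        if lo <= x <= hi:
            p_up = (x - lo) / (hi - lo)
            return hi if rng.random() < p_up else lo
    raise ValueError("grid must be sorted in ascending order")


if __name__ == "__main__":
    rng = random.Random(0)
    grid = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]
    samples = [stochastic_round(2.4, grid, rng) for _ in range(10_000)]
    print(sum(samples) / len(samples))  # close to 2.4; exact value depends on the seed
```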

Ablation tests show that removing any component of the recipe hurts convergence and increases loss. Compared with MXFP4 on an 8B model, NVFP4 reaches the same loss with fewer tokens: MXFP4 needed 36% more data (1.36T vs 1T tokens).

Switching to BF16 near the end of training (for about the last 18% of steps, or for the forward pass only) almost closes the small loss gap. Support exists in Transformer Engine and Blackwell hardware, including the needed rounding modes.

That’s a wrap for today, see you all tomorrow.