Read time: 15 min


(I publish this newsletter daily. Noise-free, actionable, applied-AI developments only.)

⚡Top Papers of last week (28-Dec-2025):

The Universal Weight Subspace Hypothesis

LLaDA2.0: Scaling Up Diffusion Language Models to 100B

Let the Barbarians In: How AI Can Accelerate Systems Performance Research

FaithLens: Detecting and Explaining Faithfulness Hallucination

Memory in the Age of AI Agents

Reinforcement Learning for Self-Improving Agent with Skill Library

QuantiPhy: A Quantitative Benchmark Evaluating Physical Reasoning Abilities of Vision-Language Models

Meta-RL Induces Exploration in Language Agents

Let’s (not) just put things in Context: Test-Time Training for Long-Context LLMs

Toward Training Superintelligent Software Agents through Self-Play SWE-RL

An Empirical Study of Agent Developer Practices in AI Agent Frameworks

🗞️ The Universal Weight Subspace Hypothesis

This paper claims that many separately trained neural networks end up using the same small set of weight directions. Across about 1100 models, they find about 16 directions per layer that capture most weight variation.

A weight is just a number inside the model that controls how strongly one feature influences another. The authors treat training as moving these numbers, and they argue that most of that movement stays inside a small shared subspace.

A subspace here means a short list of basis directions, so each task’s update is just a mix of them. They collect many models with the same architecture, then decompose each layer’s weights into principal directions and keep the shared ones.
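A minimal sketch of the per-layer decomposition, assuming the shared directions are the top principal directions (via SVD) of the stacked, centered weights of many same-architecture models. The data is synthetic and `shared_subspace` is a hypothetical helper, not the authors' code.

```python
import numpy as np

def shared_subspace(layer_weights, k):
    """Top-k principal directions shared by one layer across many models.

    layer_weights: list of same-shaped weight matrices, one per trained model.
    Returns the mean weight, a (k, n_params) basis, and the variance fraction kept.
    """
    # One row per model: flatten each weight matrix into a vector.
    X = np.stack([w.ravel() for w in layer_weights])
    mean = X.mean(axis=0)
    # Rows of vt are orthonormal principal directions of the centered weights.
    _, s, vt = np.linalg.svd(X - mean, full_matrices=False)
    explained = float((s[:k] ** 2).sum() / (s ** 2).sum())
    return mean, vt[:k], explained

# Synthetic stand-in: 50 "models" whose 64x64 weights mix 4 hidden directions plus noise.
rng = np.random.default_rng(0)
hidden = rng.normal(size=(4, 64 * 64))
models = [
    (rng.normal(size=4) @ hidden + 0.01 * rng.normal(size=64 * 64)).reshape(64, 64)
    for _ in range(50)
]
mean, basis, explained = shared_subspace(models, k=4)
print(f"variance captured by 4 directions: {explained:.4f}")
```

The toy uses 4 hidden directions so the effect is easy to see. The paper's reported figure is about 16 per layer on real models.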

They test this on Low Rank Adaptation (LoRA) adapters, which are small add-on weights, and they even merge 500 Vision Transformers into a single compact form. With the basis fixed, new tasks can be trained by learning only a few coefficients, which can save a lot of storage and compute.
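Once the basis is frozen, a new task only needs its coefficients. The sketch below assumes a toy linear layer whose target weights lie exactly in a fixed random subspace. It fits just `k` coefficients by gradient descent on a mean-squared-error loss. All names and sizes are illustrative.

```python
import numpy as np

rng = np.random.default_rng(1)
d, k, n = 8, 4, 200                      # toy layer size, basis size, examples

# Frozen pieces (hypothetical): a mean weight and k orthonormal basis directions.
mean = rng.normal(size=d * d)
basis = np.linalg.qr(rng.normal(size=(d * d, k)))[0].T   # shape (k, d*d)

def layer_weights(c):
    """Rebuild the full d x d weight matrix from the k coefficients."""
    return (mean + c @ basis).reshape(d, d)

# Toy new task: a linear map whose true weights lie inside the subspace.
c_true = rng.normal(size=k)
X = rng.normal(size=(n, d))
Y = X @ layer_weights(c_true).T

c = np.zeros(k)                          # the only trainable parameters
lr = 0.1
for _ in range(500):
    residual = X @ layer_weights(c).T - Y
    grad_W = (2 / n) * residual.T @ X    # d(sum of squared errors / n) / dW
    c -= lr * (basis @ grad_W.ravel())   # chain rule from W back to c
print("trained", c.size, "numbers instead of", d * d)
```

The storage saving comes from keeping one shared `basis` and only a short coefficient vector per task.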

🗞️ LLaDA2.0: Scaling Up Diffusion Language Models to 100B

LLaDA2.0 converts a standard autoregressive LLM into a diffusion model that writes faster by filling in many blanks at once, at 100B scale. Their 100B model reports 535 tokens per second, about 2.1 times faster than comparable autoregressive baselines.

Autoregressive models predict the next token, a small chunk of text, from the previous ones, so generation is forced step by step. Diffusion language models train on corrupted text where many tokens are masked, and they learn to recover the missing parts using both left and right context.
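To make the contrast with next-token decoding concrete, here is a minimal sketch of confidence-threshold parallel unmasking. This is a common way masked diffusion models decode, but it is not LLaDA2.0's actual sampler. `toy_predict` is a hypothetical stand-in for the model: it is confident about odd positions only once both its left and right neighbors are filled in.

```python
import numpy as np

MASK = -1
VOCAB = 10
TARGET = np.array([3, 1, 4, 1, 5, 9, 2, 6])   # what the toy "model" knows

def toy_predict(seq):
    """Hypothetical model: sure about even positions from the start, and sure about
    odd positions only once both neighbors (left AND right) are visible."""
    n = len(seq)
    probs = np.zeros((n, VOCAB))
    for i in range(n):
        left_ok = i == 0 or seq[i - 1] != MASK
        right_ok = i == n - 1 or seq[i + 1] != MASK
        p = 0.95 if (i % 2 == 0 or (left_ok and right_ok)) else 0.5
        probs[i] = (1 - p) / (VOCAB - 1)
        probs[i, TARGET[i]] = p
    return probs

def parallel_unmask(predict, length, threshold=0.9, max_steps=50):
    """Start fully masked. At each step, commit every masked slot whose top
    probability clears `threshold` (or else the single most confident one)."""
    seq = np.full(length, MASK)
    steps = 0
    while (seq == MASK).any() and steps < max_steps:
        probs = predict(seq)
        masked = np.flatnonzero(seq == MASK)
        conf = probs[masked].max(axis=1)
        pick = masked[conf >= threshold]
        if pick.size == 0:
            pick = masked[[conf.argmax()]]
        seq[pick] = probs[pick].argmax(axis=1)
        steps += 1
    return seq, steps

seq, steps = parallel_unmask(toy_predict, len(TARGET))
print(seq, "in", steps, "steps instead of", len(TARGET))
```

The odd positions can only be filled because the model sees context on both sides, which a left-to-right decoder never does.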

It starts from an already trained autoregressive model and gradually changes the masking pattern: first small blocks, then whole sequences, then small blocks again. During training, it also stops the model from attending across document boundaries, which matters when many short texts are packed into one sequence. For instruction tuning (training it to follow prompts), it uses paired masks so that every token gets trained. For speed, it pushes the model toward confident predictions so that many blanks can be filled in at once.
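Two of these training details can be written down directly. This sketch assumes that "paired masks" means a mask plus its complement, so each token is masked in exactly one of the two copies. It also assumes the document-boundary rule is a block-diagonal attention mask over packed sequences. Both helpers are hypothetical.

```python
import numpy as np

def complementary_masks(length, mask_ratio, rng):
    """A random mask and its complement: every position is masked in exactly
    one of the two copies, so every token contributes to the loss once."""
    first = rng.random(length) < mask_ratio
    return first, ~first

def document_attention_mask(doc_ids):
    """allowed[i, j] is True only when tokens i and j come from the same packed
    document. There is no causal triangle: diffusion models attend both ways."""
    doc_ids = np.asarray(doc_ids)
    return doc_ids[:, None] == doc_ids[None, :]

rng = np.random.default_rng(0)
m1, m2 = complementary_masks(12, 0.3, rng)
allowed = document_attention_mask([0, 0, 0, 1, 1, 2])  # three short texts packed together
```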

🗞️ Let the Barbarians In: How AI Can Accelerate Systems Performance Research

A new UC Berkeley paper argues that AI can automatically improve real systems code by looping through generate, test, and fix. Results such as 13x faster Mixture-of-Experts load balancing and up to 35% better spot-instance savings show that this loop can beat expert baselines.

The core idea is AI-Driven Research for Systems: a tight cycle in which a Large Language Model edits code, then an evaluator runs workloads and scores the result. Systems work fits this setup because performance has a clean verifier, the code change is often small, and simulators can test ideas fast.

The paper keeps humans in charge of the parts AI cannot safely guess: choosing the problem, building the simulator, and deciding what “good” means. In their 10 case studies, the AI mostly does the grind work. It tries many variations, keeps what scores well, and throws away the rest.
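The generate, evaluate, and select loop can be sketched as below. Here `propose` is a hypothetical stand-in for the LLM's code edit (it just nudges a numeric setting). `evaluate` stands in for the simulator score. Real setups edit source code and run full workloads.

```python
import random

def search_loop(seed, propose, evaluate, rounds=200, pool_size=4, rng=None):
    """Generate-evaluate-select: keep the best few candidates, mutate one of
    them each round, score the result, and discard everything else."""
    if rng is None:
        rng = random.Random(0)
    pool = [(evaluate(seed), seed)]
    for _ in range(rounds):
        _, parent = rng.choice(pool)
        child = propose(parent, rng)                 # stand-in for an LLM code edit
        pool.append((evaluate(child), child))        # stand-in for a simulator run
        pool.sort(key=lambda item: item[0], reverse=True)
        del pool[pool_size:]                         # keep only the top scorers
    return pool[0]

TARGET = (37, 5)   # hypothetical best settings, unknown to the search

def evaluate(cfg):
    # Higher is better; a real evaluator would run workloads and measure them.
    return -((cfg[0] - TARGET[0]) ** 2 + (cfg[1] - TARGET[1]) ** 2)

def propose(cfg, rng):
    # Nudge one setting by a small random amount.
    i = rng.randrange(len(cfg))
    new = list(cfg)
    new[i] += rng.choice([-3, -2, -1, 1, 2, 3])
    return tuple(new)

seed = (0, 0)
best_score, best_cfg = search_loop(seed, propose, evaluate)
```

Note that the loop optimizes only what `evaluate` measures. A leaky evaluator is exactly where reward hacking creeps in.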

The strongest wins often come from boring but powerful moves, like removing slow Python loops, using vectorized GPU-friendly operations, and caching repeated calculations. The biggest failure mode is reward hacking, where the AI finds a loophole in scoring instead of solving the real task. That is why the paper pushes robust evaluation, diverse tests, scoped edits, and feedback that is detailed enough to guide fixes but not so detailed it overfits.
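As a hedged illustration of these "boring" wins, the sketch below replaces a per-token Python loop with one NumPy call. It also caches a repeated score with `functools.lru_cache`. The expert-load example and `placement_cost` are hypothetical, not code from the paper. NumPy itself runs on the CPU, but the same whole-array style is what maps well onto GPU array libraries.

```python
from functools import lru_cache
import numpy as np

def expert_load_loop(assignments, num_experts):
    """Slow baseline: one Python-level iteration per routed token."""
    counts = [0] * num_experts
    for e in assignments:
        counts[e] += 1
    return counts

def expert_load_vectorized(assignments, num_experts):
    """Same counts from a single call into NumPy's compiled code."""
    return np.bincount(assignments, minlength=num_experts)

@lru_cache(maxsize=None)
def placement_cost(layout):
    """Hypothetical expensive score for an expert layout (must be a hashable tuple).
    Cached, so layouts that the search proposes again are not recomputed."""
    return sum((slot + 1) * load for slot, load in enumerate(layout))

rng = np.random.default_rng(0)
assignments = rng.integers(0, 8, size=100_000)   # expert id chosen for each token
assert expert_load_loop(assignments, 8) == expert_load_vectorized(assignments, 8).tolist()
```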

🗞️ FaithLens: Detecting and Explaining Faithfulness Hallucination

The paper proposes FaithLens, an 8B model that spots when a claim made by a large language model (LLM) is not supported by its source documents, and explains why. This makes it much easier and cheaper to catch and explain hallucinated claims before they reach users.

In many apps, a model is given documents but still invents details; that is a faithfulness hallucination. Across 12 benchmarks, FaithLens beats GPT-4.1 and o3 while costing far less to run.

Most checkers either call a huge judge model, or output a bare Yes or No with no reasons. FaithLens takes a document and a claim, then returns both the label and a short explanation that points to the missing or conflicting evidence.

To train it without human labels, the authors generate synthetic examples with a stronger model. They then filter out samples with a wrong label, a poor explanation, or too little topic variety. After that cold-start training, they run reinforcement learning in which an explanation earns credit only if it helps a weaker model reach the correct Yes or No. The takeaway is a practical, low-cost verifier that flags a bad claim and spells out the evidence gap.
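The reinforcement-learning reward can be sketched as follows. It assumes a label reward plus an explanation reward, where the explanation reward is paid only when a weaker judge, given the document, claim, and explanation, lands on the gold label. `toy_weak_judge` is a keyword-matching stand-in for the weaker model, and the equal weighting of the two terms is an assumption.

```python
def explanation_reward(pred_label, gold_label, document, claim, explanation, weak_judge):
    """Hypothetical reward: credit for the right label, plus credit for the
    explanation only if it leads a weaker model to the gold label."""
    label_reward = 1.0 if pred_label == gold_label else 0.0
    helped = weak_judge(document, claim, explanation) == gold_label
    return label_reward + (1.0 if helped else 0.0)

def toy_weak_judge(document, claim, explanation):
    # Stand-in for a small LLM: answers "No" only if the explanation names the gap.
    return "No" if "not supported" in explanation.lower() else "Yes"

doc = "The meeting is on Monday."
claim = "The meeting is on Monday at 3pm."
r_specific = explanation_reward("No", "No", doc, claim,
                                "The time 3pm is not supported by the document.",
                                toy_weak_judge)
r_vague = explanation_reward("No", "No", doc, claim, "Looks off.", toy_weak_judge)
```

A vague explanation still earns the label reward but not the explanation reward, which is the pressure toward spelling out the evidence gap.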

🗞️ Memory in the Age of AI Agents

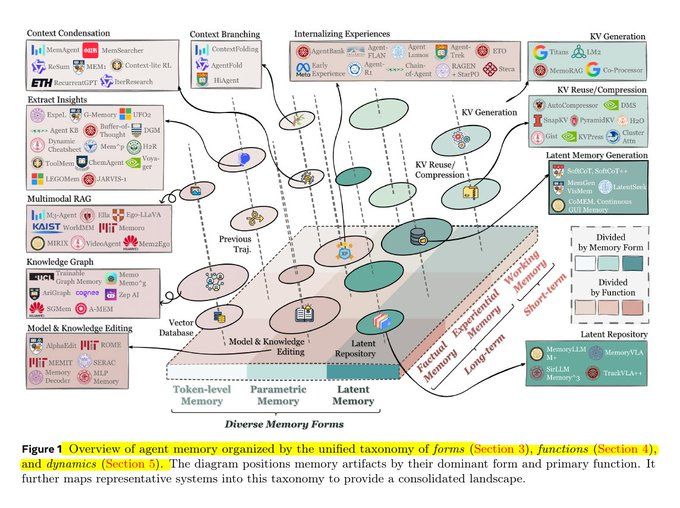

This 102-page survey unifies agent memory research through 3 lenses: Forms, Functions, and Dynamics. It replaces vague short-term vs long-term labels with mechanisms that explain how agents store, use, and change memory.

Agent memory is a persistent read-write state across tasks, not just retrieval-augmented generation (RAG) over static documents. Forms split memory into token-level stores, parametric memory in weights or adapters, and latent memory in hidden states like key-value (KV) caches.

Functions split it into factual, experiential, and working memory, and Dynamics tracks formation, evolution, and retrieval as separate control problems. The survey catalogs benchmarks and open frameworks, and it highlights a 2025 shift toward reinforcement learning (RL) to learn memory write, prune, and route policies. For builders, the framework is a checklist for choosing what to store, where it lives, and how it updates under token budgets.
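To show how the checklist maps onto code, here is a minimal token-level memory, which is one of the survey's forms. Its items carry a function tag, and its dynamics are explicit write, prune, and budgeted retrieve operations. The importance scores, the word-count tokenizer, and the greedy budget fill are all assumptions. The survey's point is that such policies can instead be learned, for example with RL.

```python
from dataclasses import dataclass, field

def count_tokens(text):
    # Crude stand-in for a real tokenizer: one token per whitespace-separated word.
    return len(text.split())

@dataclass
class MemoryItem:
    text: str
    function: str      # "factual", "experiential", or "working"
    importance: float  # assigned by whatever scoring policy you use

@dataclass
class TokenLevelMemory:
    items: list = field(default_factory=list)

    def write(self, item):                       # formation
        self.items.append(item)

    def prune(self, min_importance):             # evolution
        self.items = [m for m in self.items if m.importance >= min_importance]

    def retrieve(self, budget, function=None):   # retrieval under a token budget
        pool = [m for m in self.items if function is None or m.function == function]
        pool.sort(key=lambda m: m.importance, reverse=True)
        chosen, used = [], 0
        for m in pool:
            cost = count_tokens(m.text)
            if used + cost <= budget:
                chosen.append(m)
                used += cost
        return chosen

mem = TokenLevelMemory()
mem.write(MemoryItem("user prefers metric units", "factual", 0.9))
mem.write(MemoryItem("last deploy failed on missing env var", "experiential", 0.7))
mem.write(MemoryItem("current step: run tests", "working", 0.2))
```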

Overview of agent memory organized by the unified taxonomy of forms, functions, and dynamics.

Forms here mean where information physically lives, such as token-level memory in prompts and external stores, parametric memory in model weights or adapters, and latent memory in hidden states like key-value (KV) caches. Functions here mean what the memory is for, split into factual memory for stable knowledge, experiential memory for past episodes and traces, and working memory for temporary task state.

Dynamics is about how memory is created and changed over time, like condensing context, branching context into multiple threads, and extracting reusable insights. A big takeaway is that many popular systems cluster in token-level factual memory, which is mostly retrieval-augmented generation (RAG) over vector databases or knowledge graphs.

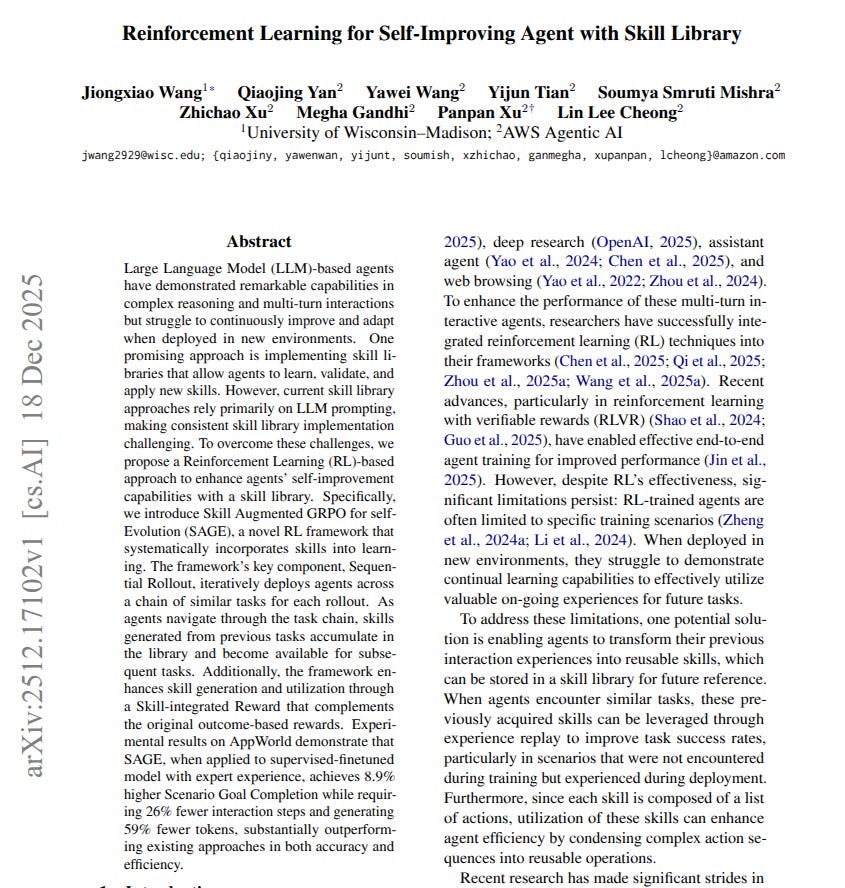

🗞️ Reinforcement Learning for Self-Improving Agent with Skill Library

This paper shows how an AI agent can learn reusable skills from its own past work and get better over time instead of repeating the same mistakes. The researchers train an LLM agent to keep learning by turning past tool use into reusable code skills.

On AppWorld, it raises Scenario Goal Completion (finishing all 3 related tasks) by 8.9% while cutting output tokens by 59%. The agent is a chatbot that writes programs to call app APIs (the commands apps expose), but without memory it repeats work and mistakes.

Their skill library is a collection of named functions, where each function wraps many API calls into 1 reusable action. While solving a task, the agent writes a new function, runs it, and saves it if it works, or edits it if it fails.
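The save-if-it-works, edit-if-it-fails cycle can be sketched like this. It assumes each skill is Python source written by the agent, plus a check function that decides whether the skill works. `SkillLibrary`, the two `api_*` functions, and the check are hypothetical. In a real system, agent-written code must run in a sandbox rather than a bare `exec`.

```python
class SkillLibrary:
    """Hypothetical skill store: keep agent-written functions that pass a check."""

    def __init__(self, api):
        self.api = dict(api)   # app API functions the skills are allowed to call
        self.skills = {}

    def try_add(self, name, source, check):
        namespace = dict(self.api)
        namespace.update(self.skills)   # new skills may build on saved ones
        try:
            exec(source, namespace)     # unsafe outside a sandbox
            fn = namespace[name]
            check(fn)
        except Exception as err:
            return False, repr(err)     # the error text is what the agent edits against
        self.skills[name] = fn
        return True, None

def api_get_contacts():
    return ["ana", "bo"]

def api_send(to, msg):
    return f"sent {msg!r} to {to}"

buggy = """
def message_all(msg):
    return [api_send(c, msg) for c in api_get_contacts]
"""
fixed = """
def message_all(msg):
    return [api_send(c, msg) for c in api_get_contacts()]
"""

def check(fn):
    assert len(fn("hi")) == 2

lib = SkillLibrary({"api_get_contacts": api_get_contacts, "api_send": api_send})
ok_first, err_first = lib.try_add("message_all", buggy, check)   # fails: missing ()
ok_second, _ = lib.try_add("message_all", fixed, check)          # edited version is saved
```

The saved `message_all` wraps several API calls into one reusable action, which is what lets a later task skip rewriting it.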

They call the training recipe SAGE, and it runs 2 similar tasks in a row so that a skill from task 1 can help in task 2. Reinforcement learning (training with rewards) gives extra credit only when the agent completes the tasks and successfully reuses the earlier skill. They first do supervised fine-tuning on expert examples to teach the skill format, then reinforcement learning makes that behavior stick.
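The reward shaping can be sketched as a small function. It assumes a per-task completion reward plus a bonus that is paid only when both tasks succeed and task 2 reused a skill written in task 1. The bonus size and the exact form are assumptions, not SAGE's published reward.

```python
def paired_task_reward(task1_done, task2_done, reused_skill_from_task1, bonus=1.0):
    """Hypothetical reward for two related tasks run back to back."""
    reward = float(task1_done) + float(task2_done)
    # Extra credit only when both tasks succeed AND task 2 reused a task-1 skill.
    if task1_done and task2_done and reused_skill_from_task1:
        reward += bonus
    return reward
```

Gating the bonus on success keeps the agent from being paid for calling a saved skill that does not actually help.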

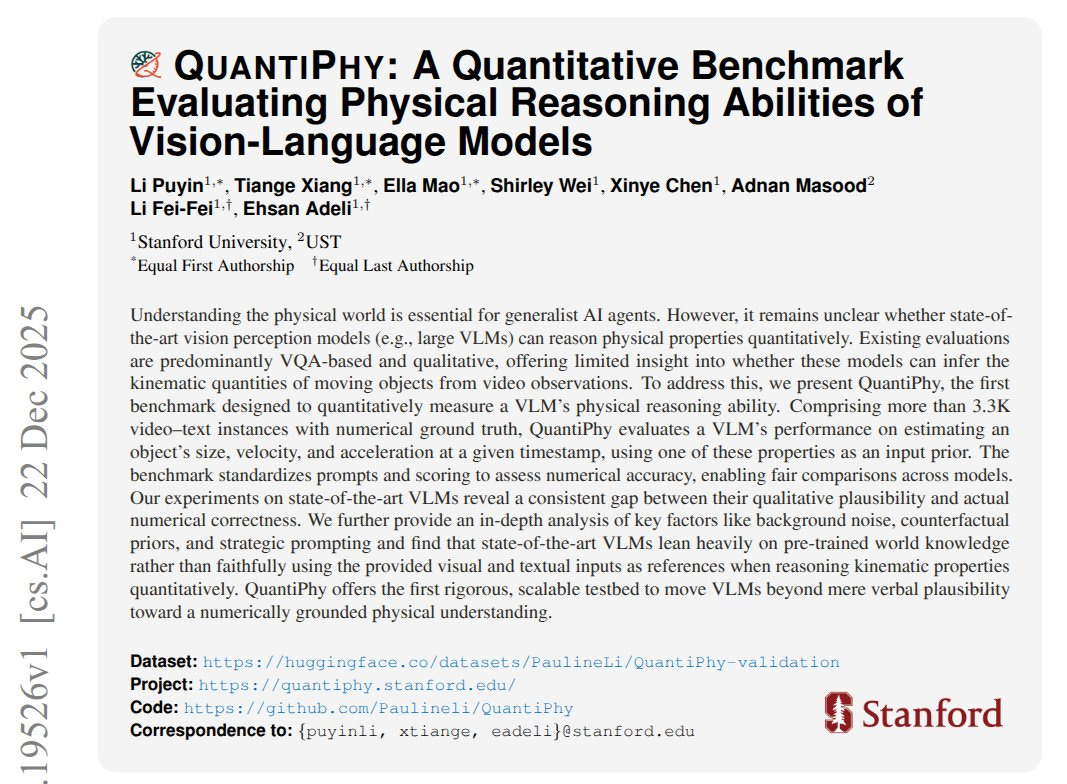

🗞️ QuantiPhy: A Quantitative Benchmark Evaluating Physical Reasoning Abilities of Vision-Language Models

A new Stanford paper, QuantiPhy, shows that current vision language models mostly guess motion numbers from learned “common sense” instead of actually measuring what the video shows.

Today’s vision language models sound confident about motion, but miss the real numbers in the video.

On 3355 video questions, the best model scored 53.1 on a relative accuracy score, while humans averaged 55.6.

A vision language model reads images or video plus text, and this benchmark checks if it can output real motion numbers.

Older tests mostly ask for a verbal answer, but QuantiPhy forces a number, like size, speed, or acceleration at a specific time.

Each question gives 1 real world clue, like a known size, speed, or acceleration, and the video gives the matching pixel clue, so pixels can be converted into meters.

With that scale, the model can turn pixel movement over time into speed and acceleration in real units, so the answer should be a straightforward measurement job.
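The measurement job can be sketched as below. It assumes the clue is a known length (a 4.5 m reference spanning 90 px, both hypothetical), a fixed frame rate, and one tracked pixel coordinate per frame. Velocity and acceleration then come from finite differences with `np.gradient`.

```python
import numpy as np

def pixels_to_kinematics(pixel_positions, fps, known_length_m, known_length_px):
    """Convert tracked pixel positions into meters, m/s, and m/s^2."""
    meters_per_pixel = known_length_m / known_length_px   # scale from the real-world clue
    pos_m = np.asarray(pixel_positions, dtype=float) * meters_per_pixel
    dt = 1.0 / fps
    vel = np.gradient(pos_m, dt)   # central differences inside, one-sided at the ends
    acc = np.gradient(vel, dt)
    return pos_m, vel, acc

# Synthetic falling object filmed at 30 fps for one second.
fps = 30
t = np.arange(31) / fps
true_pos_m = 0.5 * 9.8 * t ** 2
pixels = true_pos_m / (4.5 / 90)
pos_m, vel, acc = pixels_to_kinematics(pixels, fps, 4.5, 90)
```

Only the first and last samples are distorted by the one-sided differences. Elsewhere the recovered acceleration is the true 9.8 m/s^2.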

The paper shows that models often lean on memorized “typical” values: across its probes (removing the video, giving a counterfactual prior, or adding step-by-step prompts), models usually still do not follow the actual inputs.

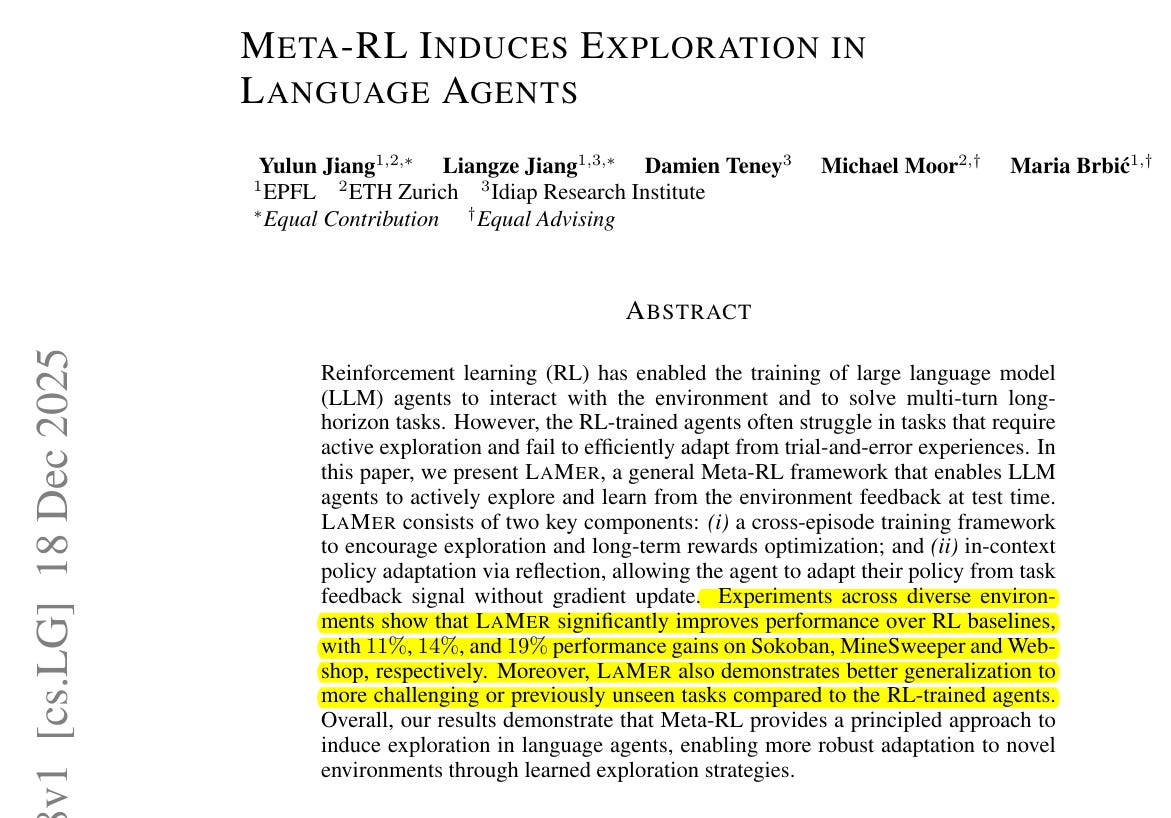

🗞️ Meta-RL Induces Exploration in Language Agents

This paper trains language model agents to learn a “try, learn, try again” habit during training.

At test time, the agent then naturally uses its first attempt to explore and gather clues, and uses later attempts to exploit what it learned, instead of blindly repeating the same safe behavior each time.

It reports up to 19% higher success than standard reinforcement learning on Sokoban, MineSweeper, and Webshop.

Reinforcement learning is reward based training, but when the reward comes mostly at the end, agents get timid and repeat safe moves.

Their method, LAMER (Large Language Model Agent with Meta-Reinforcement Learning), trains the agent to learn a new task within a few tries.

Training runs the same task for several attempts in a row, and later attempts get credit for what early exploration uncovered.

A cross-attempt discount controls how much rewards from later attempts count toward earlier ones, and that setting balances exploring early against using that information later.
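A minimal sketch of a cross-attempt discount. It assumes each attempt's credit is its own reward plus γ times the credit of the following attempt, which is a standard discounted return taken across attempts rather than steps. The exact formulation in LAMER may differ.

```python
def cross_attempt_returns(attempt_rewards, gamma):
    """Credit for attempt i = its reward + gamma * credit for attempt i + 1.

    gamma = 0 scores each attempt on its own; gamma near 1 lets an early
    exploratory attempt share in the success it enabled later.
    """
    returns = [0.0] * len(attempt_rewards)
    running = 0.0
    for i in reversed(range(len(attempt_rewards))):
        running = attempt_rewards[i] + gamma * running
        returns[i] = running
    return returns

# The first two attempts earn nothing; the third succeeds.
print(cross_attempt_returns([0.0, 0.0, 1.0], gamma=0.5))  # [0.25, 0.5, 1.0]
print(cross_attempt_returns([0.0, 0.0, 1.0], gamma=0.0))  # [0.0, 0.0, 1.0]
```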

Between attempts, the agent writes a quick reflection about mistakes and a new plan, and this note is fed back as memory.

The model’s weights stay fixed at test time, so this memory is how it adapts, and the gains hold up on harder or unfamiliar tasks.
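The attempt, reflect, and retry loop can be sketched as follows, with hypothetical `act` and `reflect` callables standing in for the LLM. The toy task (find the correct door out of three) only shows how the notes carry information between attempts while nothing else changes.

```python
def run_with_reflections(act, reflect, task, num_attempts=3):
    """Repeat a task, feeding each failure's reflection into the next attempt.
    The policy itself never changes; only the text memory grows."""
    memory, history = [], []
    for _ in range(num_attempts):
        outcome = act(task, memory)
        history.append(outcome)
        if outcome["success"]:
            break
        memory.append(reflect(task, outcome))
    return history, memory

# Hypothetical toy task: pick the correct door out of three.
def act(task, memory):
    for door in range(task["num_doors"]):
        if f"avoid door {door}" not in memory:
            return {"door": door, "success": door == task["correct"]}
    return {"door": None, "success": False}

def reflect(task, outcome):
    return f"avoid door {outcome['door']}"

history, memory = run_with_reflections(act, reflect, {"num_doors": 3, "correct": 2})
```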

🗞️ Let’s (not) just put things in Context: Test-Time Training for Long-Context LLMs

A new paper from Meta shows that long-context large language models miss buried facts, and fixes this with query-only test-time training (qTTT).

On LongBench-v2 and ZeroScrolls, it lifts Qwen3-4B by 12.6 and 14.1 points on average.

A large language model predicts the next token, meaning a small chunk of text, using the text it already saw.

It decides what to pay attention to with self-attention, where a query searches earlier keys and pulls their values.

With very long prompts, many tokens look similar, so the real clue gets drowned out and the model misses it.

The authors call this score dilution. Extra thinking tokens quickly stop helping because they never change the attention mechanism itself.
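Score dilution falls straight out of the softmax. In this toy setup, which is an assumption for illustration, one relevant key scores 4.0 and each of N distractors scores 2.0. The relevant key's attention weight is then 1 / (1 + N·e^(-2)), which shrinks toward zero as N grows.

```python
import numpy as np

def target_attention_weight(num_distractors, target_score=4.0, distractor_score=2.0):
    """Softmax weight on one relevant key among many slightly weaker distractors."""
    scores = np.concatenate([[target_score], np.full(num_distractors, distractor_score)])
    weights = np.exp(scores - scores.max())   # subtract max for numerical stability
    weights /= weights.sum()
    return weights[0]

for n in (0, 10, 1_000, 100_000):
    print(n, "distractors ->", round(float(target_attention_weight(n)), 5))
```

None of the distractors needs to outscore the clue. There just need to be enough of them.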

qTTT fixes this by caching the keys and values once, then taking a few small training steps that update only the weights that produce queries.

Those steps push queries toward the right clue and away from distractors, so the model puts its attention on the useful lines.
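Here is a toy sketch of the query-only update. Keys are computed once and frozen, and gradient steps change only the query projection `W_q`. For illustration, it assumes the index of the relevant key is known and minimizes -log of its attention weight. This is not the paper's training objective. It only demonstrates that updating queries alone can pull attention onto one key.

```python
import numpy as np

rng = np.random.default_rng(0)
d, n_keys, target = 16, 2000, 123        # toy sizes; `target` is the buried clue

x = rng.normal(size=d)
x /= np.linalg.norm(x)                   # hidden state of the token asking the question
K = rng.normal(size=(n_keys, d))         # cached keys: computed once, never updated
W_q = rng.normal(size=(d, d)) / np.sqrt(d)   # the only weights we train

def attention_weights(W_q):
    q = W_q @ x
    scores = K @ q / np.sqrt(d)
    p = np.exp(scores - scores.max())
    return p / p.sum()

before = attention_weights(W_q)[target]
lr = 0.3
for _ in range(200):
    p = attention_weights(W_q)
    # Gradient of -log p[target] w.r.t. q, then the chain rule through q = W_q @ x.
    grad_q = (p @ K - K[target]) / np.sqrt(d)
    W_q -= lr * np.outer(grad_q, x)
after = attention_weights(W_q)[target]
print(f"attention on the clue: {before:.5f} -> {after:.5f}")
```

Because `K` never changes, the long context is encoded once and each training step is cheap compared with re-reading the whole prompt.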

So for long context, a little context-specific training while answering beats spending the same extra work on more tokens.

🗞️ Toward Training Superintelligent Software Agents through Self-Play SWE-RL

Brilliant new paper from Meta, CMU and other labs. It shows that agent speed is mostly a full-system problem, not a “faster model” problem.

With a coordinated stack, AgentInfer, proposed in this paper, cuts wasted tokens by over 50% and speeds up real agent task completion by about 1.8x to 2.5x.

AgentInfer is a system that makes Large Language Model agents finish tool tasks faster.

A Large Language Model writes chatbot-style text, and an agent runs it in a loop: think, call tools like web search, read the results, then write again.

These loops get slow because the chat history keeps growing, so every new step has more old text to reread.

AgentCollab uses 2 models: the big model plans and fixes stalls, and the small model handles most steps after quick self-checks.

AgentCompress keeps the important tool outputs but trims noisy search junk, and it summarizes in the background so the input stays smaller.

AgentSched avoids throwing away cached context when memory is tight, and AgentSAM reuses repeated text from past sessions to draft the next chunks the main model checks.
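AgentSAM's idea of drafting from repeated text can be sketched with a simple suffix lookup. Find where the latest few tokens appeared in past sessions, propose what followed, and keep only the prefix the main model agrees with. The linear scan below is a stand-in for whatever index AgentSAM actually uses, and word-level tokens are an assumption.

```python
def draft_from_history(history, context, ngram=3, max_draft=4):
    """Find the most recent place in `history` where the last `ngram` tokens of
    `context` appeared, and propose the tokens that followed them there."""
    if len(context) < ngram:
        return []
    key = context[-ngram:]
    for start in range(len(history) - ngram, -1, -1):   # newest match first
        if history[start:start + ngram] == key:
            follow = history[start + ngram:start + ngram + max_draft]
            if follow:
                return follow
    return []

def verify(draft, main_model_tokens):
    """Accept the longest prefix of the draft that the main model agrees with."""
    accepted = []
    for drafted, actual in zip(draft, main_model_tokens):
        if drafted != actual:
            break
        accepted.append(drafted)
    return accepted

history = "search results for weather in paris : sunny 21C".split()
context = "the tool returned search results for".split()
draft = draft_from_history(history, context)
# In a real system these would be the main model's own next tokens from one pass.
accepted = verify(draft, ["weather", "in", "london", ":"])
```

Every accepted token is one the main model did not have to generate step by step, which is where the speedup on repetitive agent traces comes from.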

The punchline is that agent speed comes from coordinating reasoning, memory, and server scheduling, meaning which request runs next, not from faster decoding alone.

🗞️ An Empirical Study of Agent Developer Practices in AI Agent Frameworks

This paper maps how real developers actually use AI agent frameworks and where these tools still fail.

96% of top agent projects combine several frameworks instead of relying on 1.

The authors see an AI agent as a language model with memory, planning, and tool calls, and a framework as the wiring around it.

They mine many GitHub repositories and discussion threads, then zoom in on 10 widely used frameworks to see which roles they actually play.

In practice, these frameworks mostly act as building blocks for workflow orchestration, multi-agent collaboration, and data retrieval, and developers often stack several together.

Complaint threads cluster around 4 main pain areas, namely fragile agent logic, brittle tool integrations with external APIs, slow and forgetful behavior from weak memory and caching, and constant breakage when framework or library versions move.

Comparing the 10 frameworks on learning effort, development speed, abstraction quality, runtime performance, and maintainability, the study finds that tools like LangChain, CrewAI, and AutoGen support quick prototyping but still leave developers handling performance tuning, version management, and multi-framework glue code.

That’s a wrap for today, see you all tomorrow.