Read time: 10 min


(I publish this newsletter daily. Noise-free, actionable, applied-AI developments only.)

⚡Top Papers of last week (ending 15-Sept-2025):

🗞️ The Illusion of Diminishing Returns: Measuring Long Horizon Execution in LLMs

📢 An AI system to help scientists write expert-level empirical software

🗞️ SimpleQA Verified: A Reliable Factuality Benchmark to Measure Parametric Knowledge

🗞️ Reverse-Engineered Reasoning for Open-Ended Generation

🗞️ "Why language models hallucinate"

🗞️ Breaking Android with AI: A Deep Dive into LLM-Powered Exploitation

🗞️ Emergent Hierarchical Reasoning in LLMs through Reinforcement Learning

🗞️ REFRAG: Rethinking RAG based Decoding

🗞️ Parallel-R1: Towards Parallel Thinking via Reinforcement Learning

🗞️ The Illusion of Diminishing Returns: Measuring Long Horizon Execution in LLMs

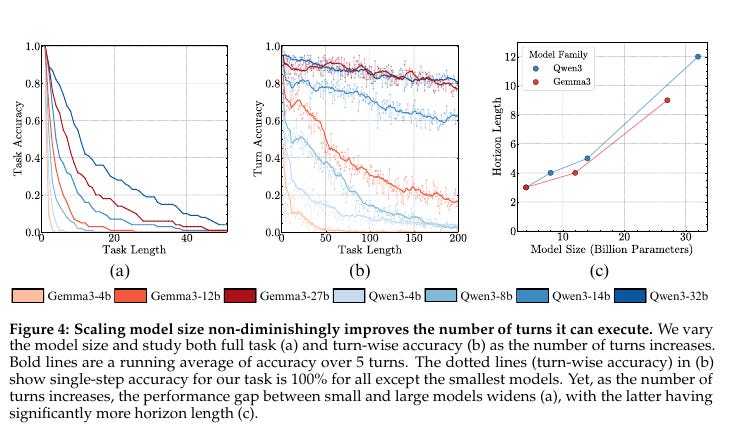

The paper shows that small models can usually do one step perfectly, but when asked to keep going for many steps, they fall apart quickly. Even if they never miss on the first step, their accuracy drops fast as the task gets longer.

Large models, on the other hand, stay reliable across many more steps, even though the basic task itself doesn’t require extra knowledge or reasoning. The paper says this is not because big models "know more," but because they are better at executing consistently without drifting into errors.

The paper names a failure mode called self-conditioning, where seeing earlier mistakes causes more mistakes, and shows that with thinking enabled, GPT-5 can execute 1000+ steps in a single turn while other models manage far fewer.

🧠 The idea

The work separates planning from execution, then shows that even when the plan and the needed knowledge are handed to the model, reliability drops as the task gets longer, which makes small accuracy gains suddenly matter a lot.

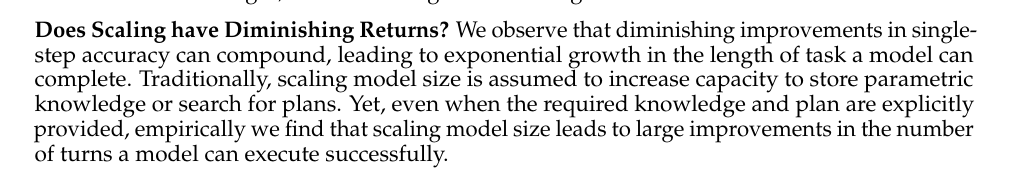

Even a tiny accuracy boost at the single-step level leads to exponential growth in how long a model can reliably execute a full task. This is why scaling up models is still worth it, even if short benchmarks suggest progress is stalling.

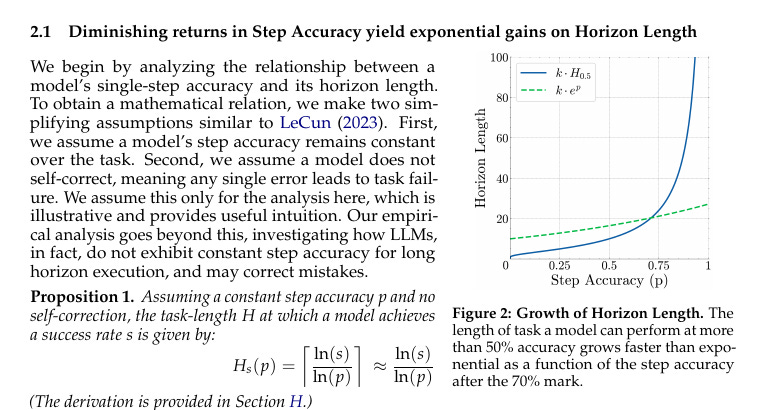

On the left, you see that step accuracy (how often the model gets each small move right) is almost flat, barely improving with newer models. That looks like diminishing returns, because each release is only slightly better at a single step.

But on the right, when you extend that tiny step improvement across many steps in a row, the gains explode. Task length (how long a model can keep going without failing) jumps from almost nothing to thousands of steps.

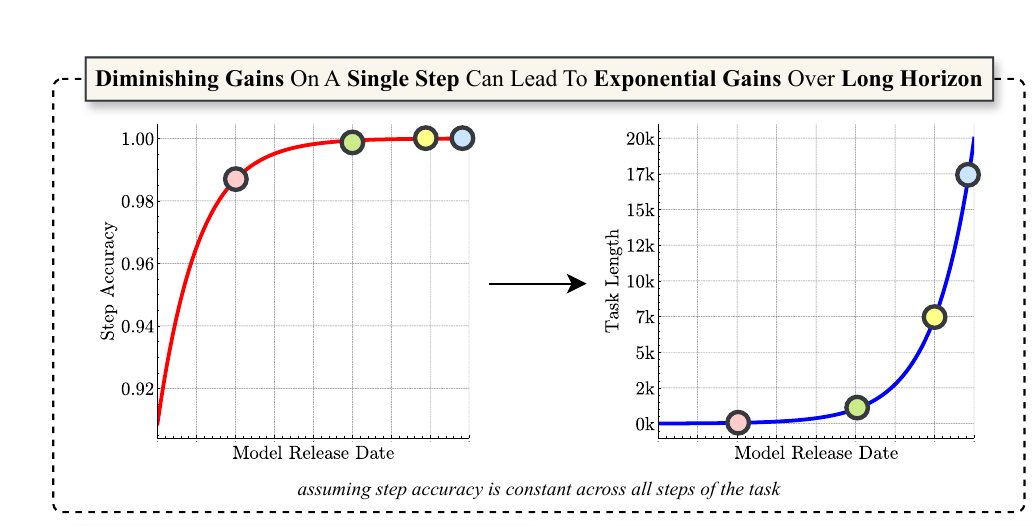

This chart shows why models get worse the longer they run. The green line is what you’d expect if a model made only small, independent random errors: per-step accuracy would stay flat over time.

But the red line is what actually happens: accuracy keeps dropping as the task gets longer.

The reason is called self-conditioning. Once the model makes a mistake, that mistake is fed back into its own history. Next time it looks at its past answers, it sees the wrong one, and that makes it even more likely to mess up again.

The examples at the bottom show this: if the history is clean, the model keeps answering correctly. If the history already has errors, it spirals into worse mistakes. So the point is: LLMs don’t just fail because of random errors; they fail because their own mistakes poison the context and cause more mistakes later.
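To make the gap between the green and red lines concrete, here is a toy mean-field model that is not taken from the paper: each turn's error rate is a base rate plus a `conditioning` term times the average error rate of earlier turns. Both `base_error` and `conditioning` are illustrative assumptions. With `conditioning=0` errors are independent and the curve stays flat (the green line); any positive value makes the error rate drift upward with length (the red line).

```python
from typing import List


def error_curve(turns: int, base_error: float, conditioning: float) -> List[float]:
    """Toy mean-field model (assumption, not the paper's):
    error rate at turn t = base_error + conditioning * (mean error rate of turns before t)."""
    errors: List[float] = []
    for _ in range(turns):
        history = sum(errors) / len(errors) if errors else 0.0
        errors.append(min(1.0, base_error + conditioning * history))
    return errors


flat = error_curve(100, base_error=0.02, conditioning=0.0)   # independent errors
drift = error_curve(100, base_error=0.02, conditioning=0.8)  # errors feed on past errors
print("per-turn accuracy at turn 100:", 1 - flat[-1], 1 - drift[-1])
```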

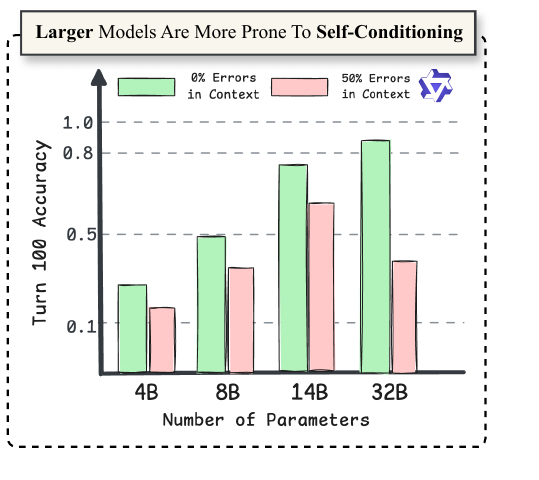

This chart shows how bigger models behave when earlier errors are present in their history. The green bars are when the context is clean, with 0% errors. In that case, larger models (like 14B and 32B) maintain much higher accuracy at step 100 than smaller ones. So scaling clearly helps when everything is going smoothly.

The pink bars are when half of the history already has errors. Here, accuracy drops sharply, and the bigger the model, the worse the collapse. The 32B model goes from the best in the clean case to much lower when errors are present.

The message is: larger models are more powerful at executing long tasks when history is clean, but they are also more vulnerable to self-conditioning, meaning once they see their own earlier mistakes, they spiral down harder.

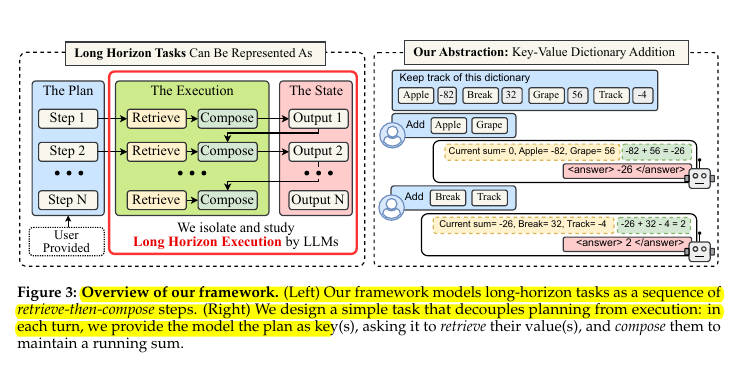

This figure shows how the authors tested execution in a tightly controlled way. They turn long tasks into a simple loop where each turn says which keys to read from a dictionary and asks the model to update a running sum, so any failure is about execution, not missing knowledge or planning.

On the left, they explain that a long task can be broken into repeated steps: first retrieve the right piece of information, then compose it into the running result, and finally store the updated state. The planning part (which steps to do) is already given to the model, so the test only measures whether the model can keep executing correctly across many steps.

On the right, they show the toy task they used. It’s basically a dictionary where each word has a number attached. The model is told which keys to pick (like “Apple” and “Grape”), it retrieves their numbers, and then adds them into the running total. This setup ensures the task doesn’t depend on external knowledge or creative planning, only on correct execution turn after turn.
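The toy task is easy to reproduce in spirit. The sketch below is an assumption-laden reconstruction, not the authors' code: the generated key names, the value range of -99 to 99, and the two keys per turn are illustrative choices. It builds the dictionary, computes the ground-truth running sum turn by turn (retrieve, compose, store), and counts how many turns a model's answers stay correct before the first error.

```python
import random
from typing import Dict, List, Tuple


def make_task(num_turns: int, keys_per_turn: int = 2, vocab_size: int = 50,
              seed: int = 0) -> Tuple[Dict[str, int], List[List[str]]]:
    """Build a key -> value table and the keys the model must add on each turn."""
    rng = random.Random(seed)
    words = [f"word{i}" for i in range(vocab_size)]   # stand-ins for keys like "Apple"
    table = {w: rng.randint(-99, 99) for w in words}  # value range is an assumption
    turns = [rng.sample(words, keys_per_turn) for _ in range(num_turns)]
    return table, turns


def ground_truth_sums(table: Dict[str, int], turns: List[List[str]]) -> List[int]:
    """Correct running sum after each turn: retrieve values, compose, store the new state."""
    total, sums = 0, []
    for keys in turns:
        total += sum(table[k] for k in keys)
        sums.append(total)
    return sums


def turns_before_failure(model_sums: List[int], true_sums: List[int]) -> int:
    """Number of consecutive correct turns before the model's first wrong (or missing) sum."""
    for i, want in enumerate(true_sums):
        if i >= len(model_sums) or model_sums[i] != want:
            return i
    return len(true_sums)


table = {"Apple": 3, "Grape": -2, "Kiwi": 5}
turns = [["Apple", "Grape"], ["Kiwi"]]
print(ground_truth_sums(table, turns))       # [1, 6]
print(turns_before_failure([1, 7], [1, 6]))  # 1
```

In a real run, `model_sums` would be parsed from the model's reply on each turn.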

📈 Why small gains explode the horizon

Under a simple constant‑accuracy model without self‑correction, once single‑step accuracy passes about 70%, a tiny bump yields a faster‑than‑exponential jump in the task length that stays above a 50% success target, so diminishing returns on short tasks hide massive real‑world gains on long ones.
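The constant-accuracy arithmetic is easy to check. If every step succeeds independently with probability p, a task of H steps succeeds with probability p^H, so the longest task that still succeeds at rate s is H = ln(s) / ln(p). A minimal sketch, assuming exactly that independence (no self-correction, no self-conditioning):

```python
import math


def horizon_length(step_accuracy: float, success_rate: float = 0.5) -> float:
    """Longest task length H with step_accuracy ** H >= success_rate,
    assuming every step succeeds independently with the same probability."""
    return math.log(success_rate) / math.log(step_accuracy)


for p in (0.7, 0.9, 0.99, 0.999):
    print(p, round(horizon_length(p), 1))
```

The horizons come out at roughly 2, 7, 69, and 693 steps: going from 99% to 99.9% per-step accuracy is a 0.9-point gain but stretches the 50%-success horizon about tenfold.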

🧮 What scaling buys even without new knowledge

Larger models keep the running sum correct across many more turns even though small models already have 100% single‑step accuracy, which says the benefit of scale here is more reliable execution over time, not better facts.

🔁 The self‑conditioning effect

As soon as the context shows earlier mistakes, the model becomes more likely to err again, so per‑turn accuracy keeps drifting down with length, and this is separate from long‑context limits and is not fixed by just using a bigger model.

💡 Thinking fixes the drift

When models are set to think with sequential test‑time compute, accuracy at a fixed late turn stays stable even if the history is full of wrong answers, which shows that deliberate reasoning steps break the negative feedback loop.

🚀 Single‑turn capacity, numbers that matter

Without chain‑of‑thought, even very large instruction‑tuned models struggle to chain 2 steps in one turn, but with thinking, GPT‑5 executes 1000+ steps, Claude 4 Sonnet is about 432, Grok‑4 is 384, and Gemini 2.5 Pro and DeepSeek R1 hover near 120.

🧱 Parallel sampling does not replace thinking

Running many parallel samples and voting gives only small gains compared to sequential reasoning, so for long‑horizon execution the key is sequential test‑time compute, not more parallel guesses.

🧽 Practical mitigation by trimming history

A sliding window that drops old turns improves reliability by hiding accumulated errors from the model, which reduces self‑conditioning in simple Markovian setups like this task.
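A sliding window takes only a few lines. This sketch assumes chat-style messages stored as dicts with `role` and `content` keys (a common convention, not something the paper specifies) and a hypothetical `max_turns` budget. It keeps the system message and drops older user/assistant exchanges, so earlier mistakes leave the context; it also assumes the most recent answer carries the running state, as it does in this Markovian task.

```python
from typing import Dict, List

Message = Dict[str, str]


def sliding_window(messages: List[Message], max_turns: int) -> List[Message]:
    """Keep system messages plus only the last `max_turns` user/assistant exchanges."""
    system = [m for m in messages if m["role"] == "system"]
    dialog = [m for m in messages if m["role"] != "system"]
    if max_turns <= 0:
        return system
    return system + dialog[-2 * max_turns:]  # one exchange = one user + one assistant message


history = [{"role": "system", "content": "Keep a running sum."}]
for turn in range(5):
    history.append({"role": "user", "content": f"Turn {turn}: add the listed keys."})
    history.append({"role": "assistant", "content": f"sum after turn {turn}"})

trimmed = sliding_window(history, max_turns=2)
print([m["content"] for m in trimmed])
```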

🧾 Where the errors actually come from

Lookup and addition alone stay near perfect for a long time, but combining them with reliable state tracking makes errors grow, so the weak spot is the ongoing management of state while composing small operations.

🛠️ What to do as an agent builder

Measure horizon length directly, use thinking for multi‑step execution, prefer sequential compute over pure parallel sampling, and manage context to avoid feeding the model its own earlier mistakes.
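Measuring horizon length directly can be as simple as running the same task many times and recording how many turns each run completes before its first error. A sketch under that assumption, with a 50% success target as the default (the function name is illustrative):

```python
from typing import List


def empirical_horizon(completed_turns: List[int], success_rate: float = 0.5) -> int:
    """Largest H such that at least `success_rate` of runs finished H turns without an error."""
    runs = len(completed_turns)
    horizon = 0
    for h in range(1, max(completed_turns, default=0) + 1):
        if sum(1 for c in completed_turns if c >= h) / runs >= success_rate:
            horizon = h
    return horizon


print(empirical_horizon([0, 3, 5, 10]))  # 5
```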

📢 An AI system to help scientists write expert-level empirical software

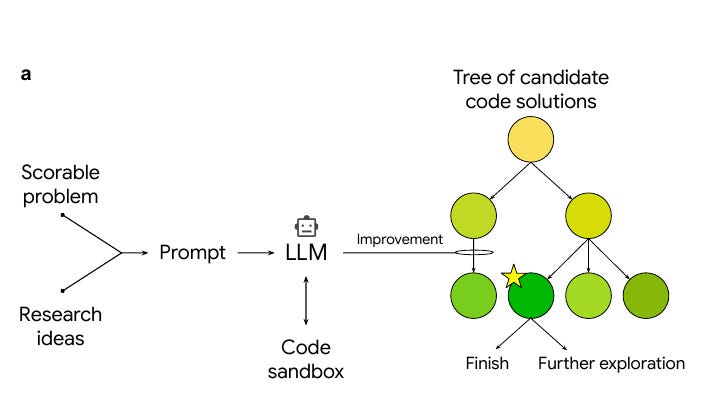

An LLM plus tree search turns scientific coding into a score-driven search engine. This work builds an LLM + Tree Search loop that writes and improves scientific code by chasing a single measurable score for each task. The key idea is to treat coding for scientific tasks as a scorable search problem.

That means every candidate program can be judged by a simple numeric score, such as how well it predicts, forecasts, or integrates data. Once you have a clear score, you can let an LLM rewrite code again and again, run the code in a sandbox, and use tree search to keep the best branches while discarding weaker ones.

With compact research ideas injected into the prompt, the system reaches expert level and beats strong baselines across biology, epidemiology, geospatial, neuroscience, time series, and numerical methods.

Training speed: less than 2 hours on 1 T4 vs 36 hours on 16 A100s.

In bioinformatics, it came up with 40 new approaches for single-cell data analysis that beat the best human-designed methods on a public benchmark. In epidemiology, it built 14 models that set state-of-the-art results for predicting COVID-19 hospitalizations.

⚙️ The Core Concepts

Empirical software is code built to maximize a quality score on observed data, and any task that fits this framing becomes a scorable task. This view turns software creation into a measurable search problem, because every candidate program is judged by the same numeric target. This framing also explains why the method can travel across domains, since only the scoring function changes.

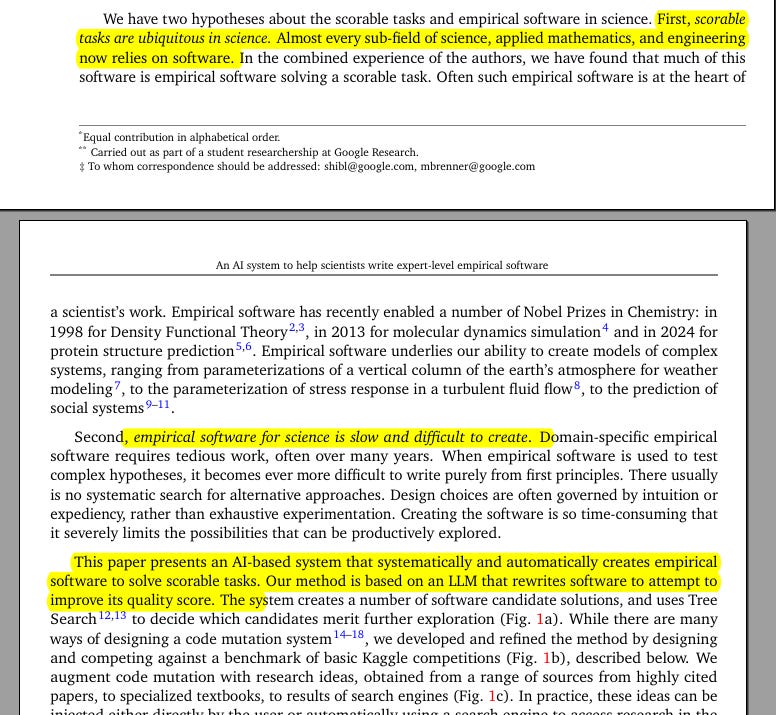

This figure breaks down how the system works.

The top-left part shows the workflow. A scorable problem and some research ideas are given to an LLM, which then generates code. That code is run in a sandbox to get a quality score. Tree search is used to decide which code branches to keep improving, balancing exploration of new ideas with exploitation of ones that already look promising.

On the right, different ways of feeding research ideas into the system are shown. Ideas can come from experts writing direct instructions, from scientific papers that are summarized, from recombining prior methods, or from LLM-powered deep research. These sources make the search more informed and help the model produce stronger, more competitive solutions. So overall, the loop of tree search plus targeted research ideas turns an LLM from a one-shot code generator into a system that steadily climbs toward expert-level performance.

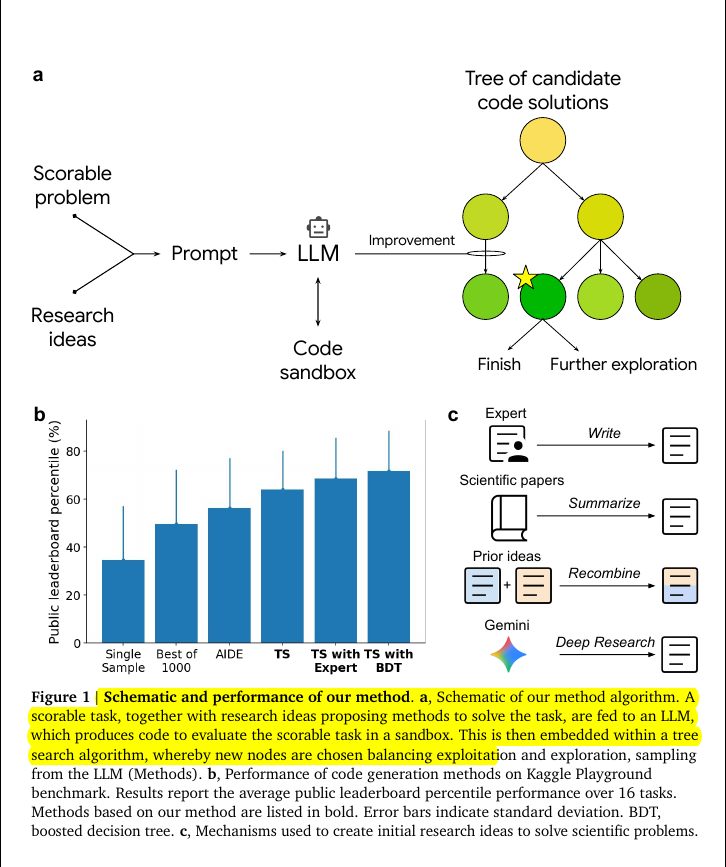

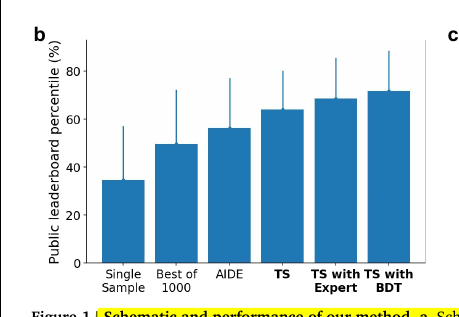

This chart shows how different code generation approaches perform on the Kaggle Playground benchmark. It measures the public leaderboard percentile, which is a way to rank how well the generated solutions score compared to human submissions.

The simple methods, like generating a single sample or even picking the best of 1000 runs, stay below the 50th percentile. That means they rarely reach strong leaderboard positions.

When the system adds tree search (TS), performance jumps significantly. The average rank moves closer to the top half of the leaderboard, meaning the AI is finding higher-quality code solutions.

Performance climbs even higher when tree search is combined with expert guidance or with a boosted decision tree idea. These additions steer the search toward strategies that humans have found effective, letting the system consistently reach the 60th to 70th percentile range and beyond. So this graph shows that iterative search guided by research ideas or expert hints is much stronger than one-shot or random attempts at code generation.

🧱 How the system searches

The system starts from a working template, asks an LLM to rewrite the code, runs it in a sandbox, and records the score. Tree Search chooses which branches to extend based on the gains seen so far, so exploration favors promising code paths. The loop repeats until the tree contains a high scoring solution that generalizes on the task’s validation scheme.
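The loop can be sketched in a few lines. This is a toy, not the system's implementation: a "program" is reduced to a single number, `score` is a hypothetical scorable task, `propose_rewrite` stands in for the LLM rewrite step with a random edit, and node selection uses a UCB-style rule (score plus an exploration bonus) as an assumed stand-in for the authors' actual selection rule.

```python
import math
import random
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    program: float                  # stand-in for a candidate program (hypothetical)
    score: float                    # score recorded after running it in the "sandbox"
    parent: Optional["Node"] = None
    visits: int = 1                 # times this node has been expanded, plus one


def score(program: float) -> float:
    """Hypothetical scorable task: higher is better, best possible score is 0 at 3.0."""
    return -(program - 3.0) ** 2


def propose_rewrite(program: float, rng: random.Random) -> float:
    """Stand-in for the LLM rewrite step: a random local edit (assumption)."""
    return program + rng.gauss(0.0, 0.5)


def tree_search(root_program: float, budget: int = 200, c: float = 1.0, seed: int = 0) -> Node:
    rng = random.Random(seed)
    nodes = [Node(root_program, score(root_program))]
    for step in range(1, budget + 1):
        # UCB-style choice: exploit high-scoring nodes, but keep exploring rarely expanded ones
        parent = max(nodes, key=lambda n: n.score + c * math.sqrt(math.log(step + 1) / n.visits))
        parent.visits += 1
        child_program = propose_rewrite(parent.program, rng)
        # "Run in the sandbox", record the score, and add the result as a new branch
        nodes.append(Node(child_program, score(child_program), parent=parent))
    return max(nodes, key=lambda n: n.score)


best = tree_search(root_program=0.0)
print(round(best.program, 2), round(best.score, 3))
```

The `c` parameter trades exploration against exploitation; in the real system the score would come from running generated code against the task's validation scheme.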

🧪 How research ideas guide the code

The prompt is augmented with research ideas distilled from papers, textbooks, or LLM-powered literature search, and the LLM then implements those ideas as code. These ideas are injected as short instructions, often auto-summarized from manuscripts, so the search explores concrete methods rather than vague hunches. The system can also recombine parent methods into hybrids, and many of those hybrids score higher than both parents.

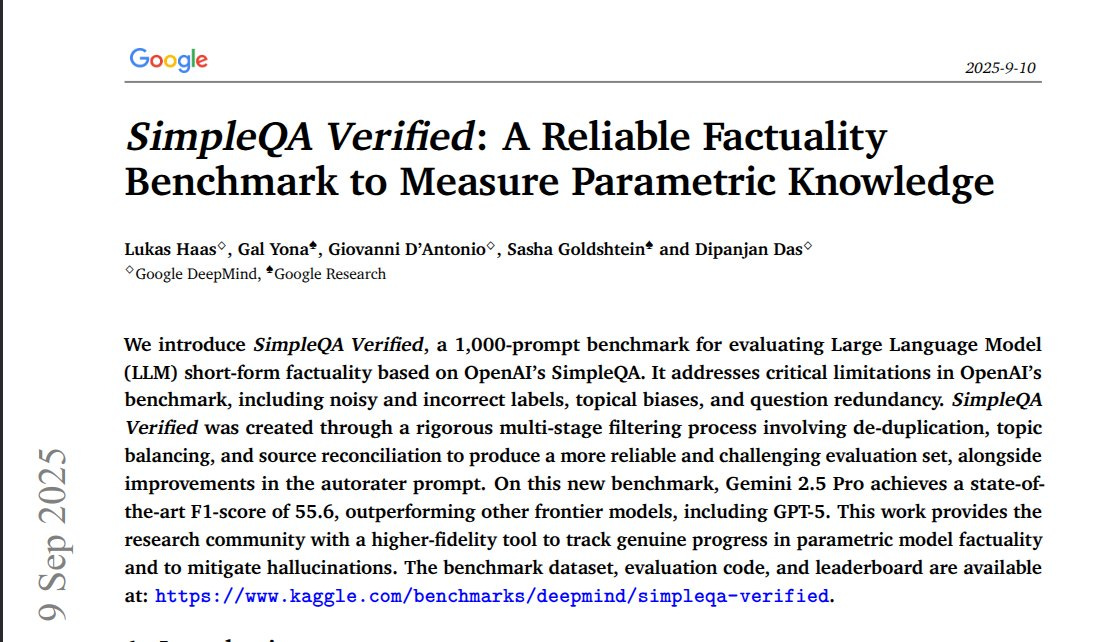

🗞️ SimpleQA Verified: A Reliable Factuality Benchmark to Measure Parametric Knowledge

This Google DeepMind paper introduces SimpleQA Verified, a cleaner benchmark that measures what LLMs know from memory. It fixes bad labels and duplicates, balances topics, and resolves clashes between sources.

Numbers are graded with allowed ranges, and the grader ignores extra chatter, demands 1 clear answer, and marks hedged answers as not attempted. The set has 1,000 tough, varied questions with no tools allowed, so only memory is tested. On this test, Gemini 2.5 Pro reaches an F1 of 55.6, so even strong models are far from saturating it, leaving room to tell them apart.
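For reference, the original SimpleQA reports an F-score that is the harmonic mean of overall accuracy and accuracy on attempted questions; the sketch below assumes SimpleQA Verified's F1 follows the same definition. The `within_allowed_range` check is a hypothetical illustration of grading numbers against an allowed range, not the benchmark's actual grader.

```python
from typing import List


def simpleqa_f1(grades: List[str]) -> float:
    """grades: 'correct', 'incorrect', or 'not_attempted' per question.
    F1 = harmonic mean of overall accuracy and accuracy on attempted questions."""
    n = len(grades)
    correct = grades.count("correct")
    attempted = n - grades.count("not_attempted")
    overall = correct / n
    given_attempted = correct / attempted if attempted else 0.0
    if overall + given_attempted == 0:
        return 0.0
    return 2 * overall * given_attempted / (overall + given_attempted)


def within_allowed_range(answer: float, target: float, rel_tol: float) -> bool:
    """Hypothetical numeric check: accept answers within rel_tol of the target."""
    return abs(answer - target) <= rel_tol * abs(target)


grades = ["correct"] * 50 + ["incorrect"] * 30 + ["not_attempted"] * 20
print(round(simpleqa_f1(grades), 4))  # 0.5556
```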

🗞️ Reverse-Engineered Reasoning for Open-Ended Generation

The paper shows how to teach models deep reasoning for open-ended writing by working backward from high-quality outputs. The resulting model outscores GPT-4o and Claude 3.5 on LongBench-Write.

Creative tasks have no single right answer, so reinforcement learning lacks a clean reward, and instruction distillation is pricey and capped by the teacher's ability. REverse-Engineered Reasoning (REER) instead works backward to recover a plausible step-by-step plan that could have produced a known good answer.

It scores each candidate plan by the model's surprise (perplexity) on the reference answer: lower surprise means a better plan. A local search refines 1 segment at a time, tries rewrites, scores them against the reference, and keeps the version with the lowest perplexity.

This yields DeepWriting-20K, with 20,000 traces across 25 categories; a Qwen3-8B base model is then fine-tuned to plan before writing. The resulting DeepWriter-8B beats strong open-source baselines, stays coherent on very long pieces, and lands near top proprietary systems on creative tasks. The net result is a third path that avoids both reinforcement learning and distillation, yet teaches smaller models to plan deeply and write with control.
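A minimal sketch of the segment-wise search, under loud assumptions: `toy_token_logprobs` is a hypothetical stand-in for a real language model's log-probabilities of the reference given the plan, and the candidate rewrites are supplied by hand rather than generated. Perplexity is exp of the negative mean log-probability, so lower means less surprise.

```python
import math
from typing import Callable, List, Sequence

LogProbFn = Callable[[List[str], str], List[float]]


def toy_token_logprobs(plan: List[str], reference: str) -> List[float]:
    """Hypothetical stand-in for an LM: reference words the plan mentions are
    'less surprising' (log 0.5) than unmentioned ones (log 0.01)."""
    plan_words = {w.strip(".,!?") for w in " ".join(plan).lower().split()}
    return [math.log(0.5) if tok in plan_words else math.log(0.01)
            for tok in reference.lower().split()]


def perplexity(plan: List[str], reference: str, logprob_fn: LogProbFn = toy_token_logprobs) -> float:
    logprobs = logprob_fn(plan, reference)
    return math.exp(-sum(logprobs) / len(logprobs))


def refine_plan(plan: Sequence[str], candidates: Sequence[Sequence[str]], reference: str,
                logprob_fn: LogProbFn = toy_token_logprobs) -> List[str]:
    """Local search: for each segment i, try each candidate rewrite and keep whichever
    version of the whole plan gives the reference answer the lowest perplexity."""
    plan = list(plan)
    best = perplexity(plan, reference, logprob_fn)
    for i, options in enumerate(candidates):
        for option in options:
            trial = plan[:i] + [option] + plan[i + 1:]
            ppl = perplexity(trial, reference, logprob_fn)
            if ppl < best:
                plan, best = trial, ppl
    return plan


reference = "the lighthouse keeper watched the storm pass at dawn"
draft = ["Write a story.", "End it."]
options = [["Open on a lighthouse keeper at night."],
           ["Close as the storm passes at dawn."]]
refined = refine_plan(draft, options, reference)
print(refined, perplexity(draft, reference), perplexity(refined, reference))
```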

🗞️ "Why language models hallucinate"

"Why language models hallucinate" from OpenAI: I expect it to become an all-time classic paper.

Simple answer: LLMs hallucinate because training and evaluation reward guessing instead of admitting uncertainty. The paper puts this on a statistical footing, showing how simple, test-like incentives reward confident wrong answers over honest “I don’t know” responses.

The fix is to grade differently: give credit for appropriate uncertainty and penalize confident errors more than abstentions, so models stop being optimized for blind guessing. OpenAI reports that a model abstaining 52% of the time gives substantially fewer wrong answers than one abstaining 1% of the time, evidence that letting a model admit uncertainty reduces hallucinations even if accuracy looks lower.

Abstention means the model refuses to answer when it is unsure and simply says something like “I don’t know” instead of making up a guess. Hallucinations drop because most wrong answers come from bad guesses. If the model abstains instead of guessing, it produces fewer false answers.

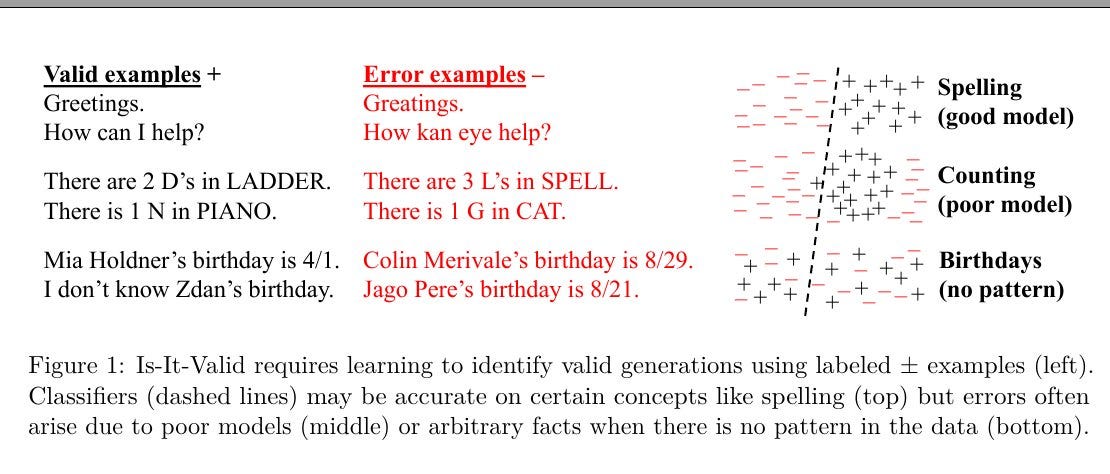

This figure illustrates the Is-It-Valid (IIV) idea: treating generation errors through the simpler question of whether a given output is valid.

On the left side, you see examples. Some are valid outputs (in black), and others are errors (in red). Valid examples are simple and correct statements like “There are 2 D’s in LADDER” or “I don’t know Zdan’s birthday.” Error examples are things that look fluent but are wrong, like “There are 3 L’s in SPELL” or giving random birthdays.

The diagrams on the right show why errors happen differently depending on the task. For spelling, the model can learn clear rules, so valid and invalid answers separate cleanly. For counting, the model is weaker, so valid and invalid answers mix more. For birthdays, there is no real pattern in the data at all, so the model cannot separate correct from incorrect; this is why hallucinations occur on such facts.

So the figure shows: when there is a clear pattern (like spelling), the model learns it well. When the task has a weak pattern or none at all (like birthdays), the model produces confident but wrong answers, which are hallucinations.

⚙️ The Core Concepts

The paper’s core claim is that standard training and leaderboard scoring reward guessing over acknowledging uncertainty, which statistically produces confident false statements even in very capable models. Models get graded like students on a binary scale, 1 point for exactly right and 0 for everything else, so admitting uncertainty is dominated by rolling the dice on a guess that sometimes lands right. The blog explains this in plain terms and spells out the 3 outcomes that matter on single-answer questions: accurate answers, errors, and abstentions, with abstentions being better than errors for trustworthy behavior.

🎯 Why accuracy-only scoring pushes models to bluff

The paper says models live in permanent test-taking mode, because binary pass rates and accuracy make guessing the higher-expected-score move compared to saying “I don’t know” when unsure. That incentive explains confident specific wrong answers, like naming a birthday, since a sharp guess can win points sometimes, while cautious uncertainty always gets 0 under current grading. OpenAI’s Model Spec also instructs assistants to express uncertainty rather than bluff, which current accuracy leaderboards undercut unless their scoring changes.

🧩 Where hallucinations start during training

Pretraining learns the overall distribution of fluent text from positive examples only, so the model gets great at patterns like spelling and parentheses but struggles on low-frequency arbitrary facts that have no learnable pattern. The paper frames this with a reduction to a simple binary task, “is this output valid”, showing that even a strong base model will make some errors when facts are effectively random at training time. This explains why spelling mistakes vanish with scale yet crisp factual claims can still be wrong, since some facts do not follow repeatable patterns the model can internalize from data.

🎚️ The simple fix that actually helps

Add explicit confidence targets to existing benchmarks: tell the model “answer only if you are more than t confident,” and deduct t/(1 − t) points for a wrong answer (a correct answer earns 1 point, an abstention 0), so the best strategy is to speak only when confident. This keeps the grading objective and practical, because each task states its threshold up front and models can train to meet it rather than guess the grader’s hidden preferences. It also gives a clean auditing idea called behavioral calibration: compare accuracy and error rates across thresholds and check that the model abstains exactly where it should.
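The scoring rule can be checked directly. With +1 for a correct answer, 0 for abstaining, and -t/(1-t) for a wrong answer, answering has a positive expected score exactly when the model's probability of being right exceeds t, since p - (1 - p)·t/(1 - t) > 0 simplifies to p > t. A minimal sketch (function names are illustrative):

```python
def wrong_answer_penalty(t: float) -> float:
    """Points deducted for a wrong answer at confidence threshold t (0 <= t < 1)."""
    return t / (1 - t)


def expected_score(p_correct: float, t: float) -> float:
    """Expected points for answering: +1 if right, -t/(1-t) if wrong (abstaining scores 0)."""
    return p_correct * 1.0 - (1 - p_correct) * wrong_answer_penalty(t)


def should_answer(p_correct: float, t: float) -> bool:
    """Answer only if that beats the 0 points an 'I don't know' earns."""
    return expected_score(p_correct, t) > 0


print(should_answer(0.8, t=0.75), should_answer(0.7, t=0.75))  # True False
```

Setting t = 0 recovers binary grading: the penalty vanishes, so guessing never scores worse than abstaining, which is exactly the incentive the paper criticizes.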

📝 Why post‑training does not kill it

Most leaderboards grade with strict right-or-wrong scoring and give 0 credit for “I don’t know” (IDK), so the optimal test‑taking policy is to guess when unsure, which bakes in confident bluffing. The paper formalizes this with a short observation: under binary grading, abstaining is never optimal for expected score, so training toward those scores suppresses uncertainty language. A scan of major benchmarks shows that almost all of them use binary accuracy and offer no IDK credit, so a model that avoids hallucinations by saying IDK will look worse on the scoreboard.

🧭 Other drivers the theory covers

Computational hardness creates prompts where no efficient method can beat chance, so a calibrated generator will sometimes output a wrong decryption or similar, and the lower bound simply reflects that. Distribution shift also hurts: when a prompt sits off the training manifold, the Is-It-Valid (IIV) classifier stumbles and the generation lower bound follows. Garbage in, garbage out (GIGO) is simple but real: if the corpus holds falsehoods, base models pick them up, and post-training can tamp down some of them, such as conspiracy theories, but not all.

🧱 Poor models still bite

Some errors are not about data scarcity but about the model class, for example a trigram model that only looks at 2 previous words will confuse “her mind” and “his mind” in mirror prompts and must make about 50% errors on that toy task. Modern examples look different but rhyme, letter counting fails when tokenization breaks words into chunks, while a reasoning-style model that iterates over characters can fix it, so representational fit matters. The bound captures this too, because if no thresholded version of the model can separate valid from invalid well, generation must keep making mistakes.
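The trigram example can be reproduced on a two-sentence toy corpus (the sentences are illustrative, not copied from the paper). Because both prompts end in "out of", a trigram model sees the same 2-word context for both and must give the same completion, so it gets one of the two wrong:

```python
from collections import Counter, defaultdict
from typing import Dict, List, Optional, Tuple


def train_trigram(sentences: List[str]) -> Dict[Tuple[str, str], Counter]:
    """Count which word follows each pair of previous words."""
    counts: Dict[Tuple[str, str], Counter] = defaultdict(Counter)
    for s in sentences:
        w = s.split()
        for a, b, c in zip(w, w[1:], w[2:]):
            counts[(a, b)][c] += 1
    return counts


def predict_next(counts: Dict[Tuple[str, str], Counter], prompt: str) -> Optional[str]:
    """Most frequent continuation given only the last 2 words of the prompt."""
    w = prompt.split()
    seen = counts.get((w[-2], w[-1]))
    return seen.most_common(1)[0][0] if seen else None


corpus = ["she lost it and was completely out of her mind",
          "he lost it and was completely out of his mind"]
model = train_trigram(corpus)
prompts = {"she lost it and was completely out of": "her",
           "he lost it and was completely out of": "his"}
preds = {p: predict_next(model, p) for p in prompts}
error_rate = sum(preds[p] != gold for p, gold in prompts.items()) / len(prompts)
print(preds, error_rate)  # the same word for both prompts, error rate 0.5
```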

🗞️ Breaking Android with AI: A Deep Dive into LLM-Powered Exploitation

This paper shows how LLMs can be used to automate Android penetration testing, which is usually a slow and manual job. The researchers used PentestGPT, a system built on LLMs, to generate attack strategies for Android devices.

These strategies include steps like rooting, privilege escalation, and bootloader exploits. They then built a small web app that converts PentestGPT’s text instructions into scripts in Bash, Python, or Android Debug Bridge.

These scripts are tested inside Genymotion, an Android emulator, so nothing runs on real devices. When a script fails, the errors are sent back to PentestGPT, which suggests refined methods. This loop continues until the scripts work more reliably. Human oversight is kept in place to block unsafe or harmful code.

The tests showed strong results for tasks like using Android Debug Bridge, enabling WiFi debugging, intercepting networks, or checking for component hijacking. But kernel exploits, bootloader unlocking, and recovery operations could not be validated since the emulator does not support those hardware-level features. The main takeaway is that LLMs can automate big parts of Android penetration testing, saving time and effort, but they still need human review and careful limits to avoid misuse.

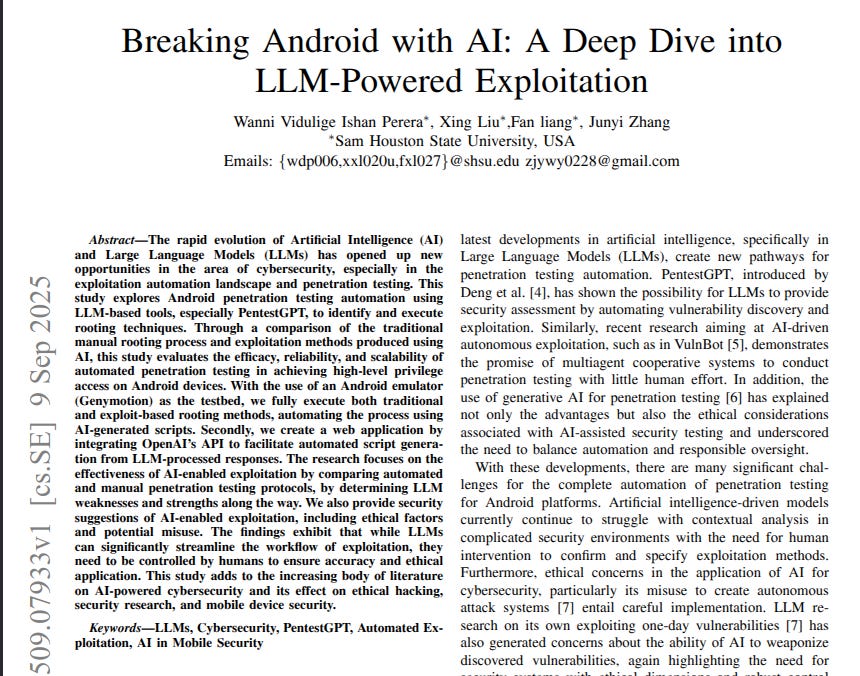

🗞️ Emergent Hierarchical Reasoning in LLMs through Reinforcement Learning

We all know reinforcement learning (RL) boosts reasoning in language models, but nobody had really explained why. This paper shows that reasoning improves with RL because learning happens in two distinct phases.

First, the model locks down small execution steps, then it shifts to learning planning. That “hierarchical” view is their first new insight.

At the start, the model focuses on execution, like doing arithmetic or formatting correctly. Errors in these small steps push it to become more reliable quickly. Once that foundation is stable, the main challenge shifts to planning, meaning choosing the right strategy and organizing the solution.

The researchers demonstrate this shift by separating two kinds of tokens. Execution tokens are the small procedural steps, and planning tokens are phrases like “let’s try another approach.”

They find that after the model masters execution tokens, the diversity of planning tokens grows, which links directly to better reasoning and longer solution chains.

Based on this, they introduce a new method called HIerarchy-Aware Credit Assignment (HICRA).

Instead of spreading learning across all tokens, HICRA gives extra weight to planning tokens. This speeds up how the model explores and strengthens strategies.

In experiments, HICRA consistently beats the common GRPO approach. It works best when the model already has a solid base of execution skills. If the base is too weak, HICRA struggles.

The key message is that real gains in reasoning come from improving planning, not just polishing execution.

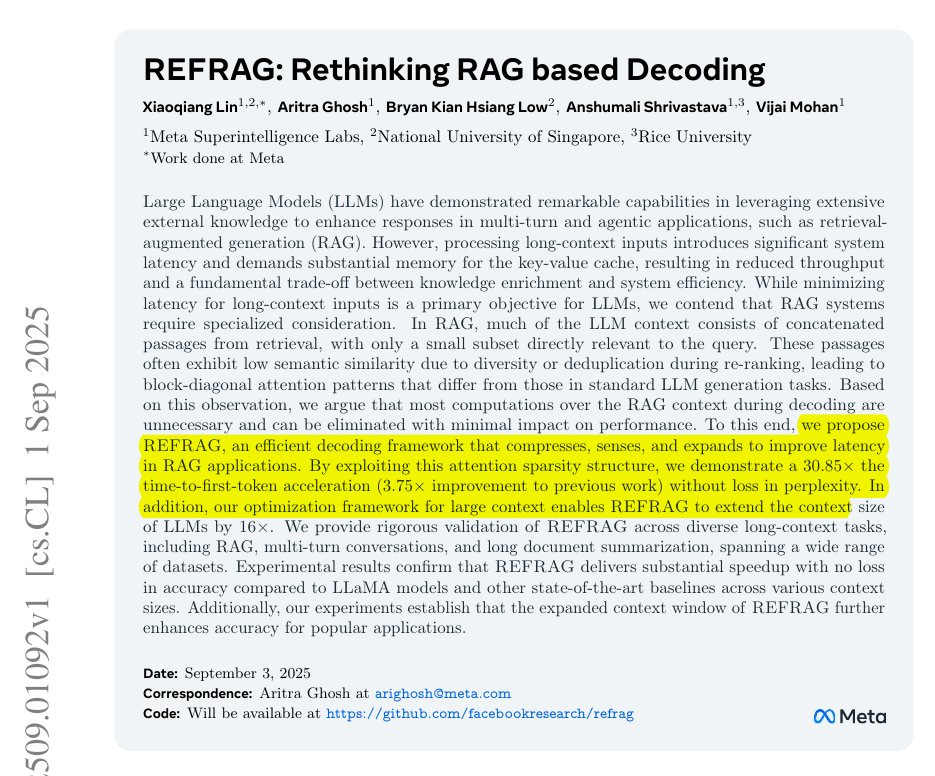

🗞️ REFRAG: Rethinking RAG based Decoding

This paper speeds up RAG by compressing context into chunk embeddings while keeping answer quality.

Up to 30.85x faster time-to-first-token and up to 16x longer effective context without an accuracy drop. RAG prompts paste in many retrieved passages, most of which barely relate to each other, so attention mostly stays inside each passage and much of the compute is wasted.

REFRAG replaces those passage tokens with cached chunk embeddings from an encoder, projects them to the decoder embedding size, then feeds them alongside the question tokens. This shortens the sequence the decoder sees, makes attention scale with chunks not tokens, and reduces the key value cache it must store.
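The sequence-length savings are simple arithmetic. A sketch, assuming one embedding per chunk of `chunk_size` tokens (16 here is an illustrative choice) and ignoring the encoder's own cost:

```python
import math


def decoder_positions(context_tokens: int, question_tokens: int, chunk_size: int,
                      expanded_chunks: int = 0) -> int:
    """Positions the decoder attends over when each chunk of `chunk_size` context tokens
    becomes one embedding, except `expanded_chunks` chunks kept as raw tokens."""
    n_chunks = math.ceil(context_tokens / chunk_size)
    compressed = n_chunks - expanded_chunks
    return compressed + expanded_chunks * chunk_size + question_tokens


full = decoder_positions(4096, 64, chunk_size=1)     # no compression
packed = decoder_positions(4096, 64, chunk_size=16)  # one embedding per 16 tokens
print(full, packed, full / packed, (full / packed) ** 2)
```

With 4,096 retrieved tokens and a 64-token question, the decoder sees 320 positions instead of 4,160, about 13x fewer, so the KV cache shrinks by the same factor and prefill attention scores, which grow with the square of length, shrink by roughly 169x.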

Most chunks stay compressed by default, and a tiny policy decides which few to expand back to raw tokens when exact wording matters. Training uses a two-step recipe: first, reconstruct tokens from chunk embeddings so the decoder learns to read them; then, continue pretraining on next-paragraph prediction with a curriculum that grows the chunk size.

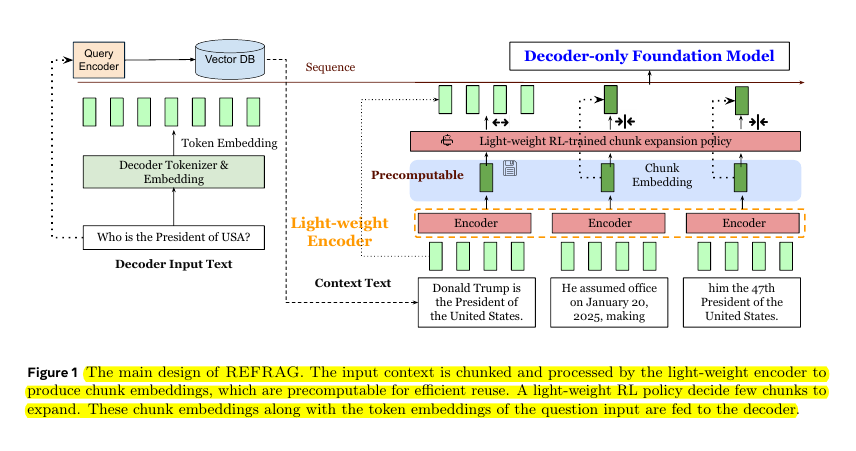

The policy is trained with reinforcement learning, using the model's next-word loss as the signal, so it expands only the chunks that change the prediction.

The figure above shows the REFRAG workflow and how it makes RAG faster and lighter.

Instead of sending all the raw retrieved text into the model, the system first chops the context into chunks. Each chunk is run through a small encoder that produces a compact embedding, which can be precomputed and reused.

These embeddings are then passed to the main large language model together with the question tokens. This greatly reduces how many tokens the decoder needs to handle, cutting down memory and time.

Because some chunks might hold details that matter word-for-word, a reinforcement learning policy decides when to expand a chunk back into its full token sequence. That way, the model still has access to exact wording when it is important. Overall, REFRAG balances compression for speed with selective expansion for accuracy, letting the model process much larger contexts without a proportional slowdown.

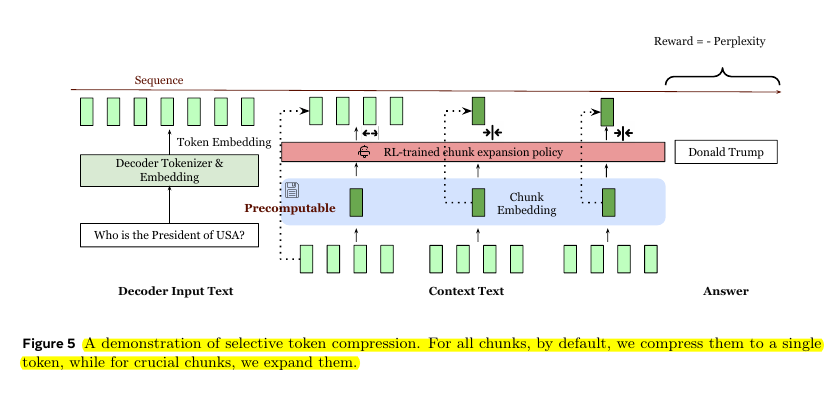

This figure shows how REFRAG decides which parts of the retrieved context should stay compressed and which should be expanded back into full tokens. By default, every chunk of retrieved text is compressed into a single embedding. This makes the model much faster, since it avoids processing long sequences word by word.

But not all chunks are equally important. Some may contain crucial details that the model needs exactly, not just in compressed form. To handle this, REFRAG uses a reinforcement learning policy that picks which chunks to expand.

The training signal for this policy comes from perplexity, which measures how uncertain the model is about predicting the next words. If expanding a chunk lowers perplexity, the policy learns to expand it in the future. So the system balances speed and accuracy by keeping most chunks compressed but expanding the ones that really matter for the final answer.
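The reward signal can be illustrated with a greedy stand-in. This is not the RL policy itself: it assumes you already measured the perplexity of the target text with everything compressed and with each chunk expanded on its own (hypothetical inputs), then expands the chunks whose expansion lowers perplexity the most, up to a budget.

```python
from typing import List


def choose_chunks_to_expand(ppl_compressed: float, ppl_if_expanded: List[float],
                            budget: int) -> List[int]:
    """Greedy stand-in for the learned policy: expand up to `budget` chunks whose
    expansion lowers perplexity the most, skipping chunks that do not help at all."""
    gains = sorted(((ppl_compressed - p, i) for i, p in enumerate(ppl_if_expanded)), reverse=True)
    return sorted(i for gain, i in gains[:budget] if gain > 0)


print(choose_chunks_to_expand(12.0, [11.9, 8.0, 12.5, 10.0], budget=2))  # [1, 3]
```

A learned policy avoids having to compute every per-chunk perplexity at inference time, which is why the paper trains one rather than searching.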

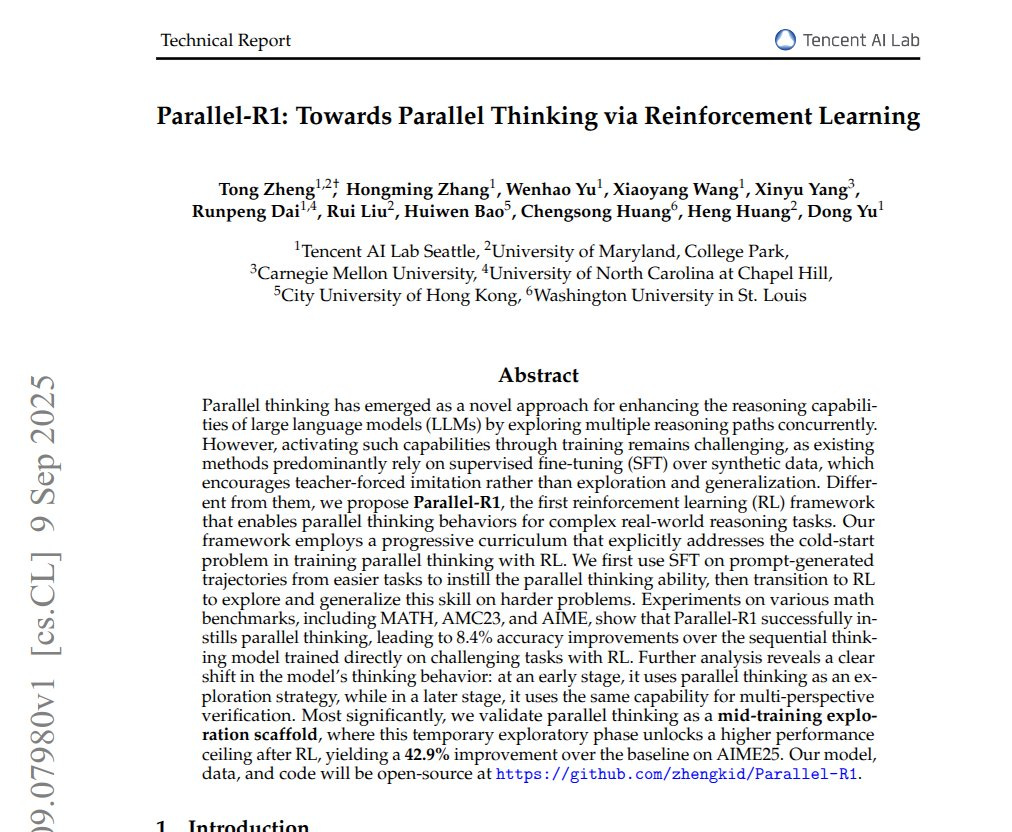

🗞️ Parallel-R1: Towards Parallel Thinking via Reinforcement Learning

The paper uses reinforcement learning to teach language models to explore multiple solution paths in parallel and to self-check. That is, instead of sticking to one reasoning path, the model opens up several possible paths, explores them, and then combines the insights.

The model pauses mid-solution, opens <Parallel>, writes 2 or more independent <Path> threads, then a concise <Summary>, and continues. Pretraining never covered this behavior, so the model needs help to even trigger it.

They start on easy math, make clean examples with simple prompts, and run a small supervised pass that only teaches the format. Then they run RL on easy math to stabilize when to trigger parallel mode, rewarding outputs that both answer correctly and include a parallel unit.

Next they switch to harder problems and use accuracy-only rewards, letting the model decide where parallelism truly helps. Across training the role shifts, early the model explores with extra paths, later it mostly uses them to verify a proposed answer. Treating parallel thinking as a temporary exploration scaffold lifts the final ceiling even when later solutions look mostly sequential.
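The two training stages differ mainly in the reward. A sketch, assuming closing tags `</Parallel>`, `</Path>`, `</Summary>` and a hypothetical "Answer: ..." convention for the final answer; the exact reward values and format used in the paper may differ:

```python
import re
from typing import Optional

# A parallel unit: <Parallel>, 2 or more <Path> threads, then a <Summary> (closing tags assumed).
PARALLEL_UNIT = re.compile(
    r"<Parallel>\s*(?:<Path>.*?</Path>\s*){2,}<Summary>.*?</Summary>\s*</Parallel>",
    re.DOTALL,
)


def has_parallel_unit(text: str) -> bool:
    return PARALLEL_UNIT.search(text) is not None


def extract_answer(text: str) -> Optional[str]:
    """Hypothetical answer convention: the last 'Answer: ...' line."""
    matches = re.findall(r"Answer:\s*(.+)", text)
    return matches[-1].strip() if matches else None


def reward(text: str, gold: str, stage: int) -> float:
    correct = extract_answer(text) == gold
    if stage == 1:  # easy math: require a correct answer AND a parallel unit
        return 1.0 if correct and has_parallel_unit(text) else 0.0
    return 1.0 if correct else 0.0  # harder problems: accuracy-only reward


sample = ("Let me check two ways.\n<Parallel>\n<Path>2+2 by counting: 4</Path>\n"
          "<Path>2*2 = 4</Path>\n<Summary>Both give 4.</Summary>\n</Parallel>\nAnswer: 4")
plain = "2+2 = 4\nAnswer: 4"
print(reward(sample, "4", stage=1), reward(plain, "4", stage=1), reward(plain, "4", stage=2))
```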