Read time: 13 min

📚 Browse past editions here.

( I publish this newletter daily. Noise-free, actionable, applied-AI developments only).

⚡Top Papers of last week (30-Nov-2025):

🗞️ DeepSeek-Math-V2 : Towards Self-Verifiable Mathematical Reasoning

🗞️ Foundations of AI Frameworks: Notion and Limits of AGI

🗞️ What does it mean to understand language?

🗞️ Chain-of-Visual-Thought: Teaching VLMs to See and Think Better with Continuous Visual Tokens

🗞️ Improving Language Agents through BREW

🗞️ Evolution Strategies at the Hyperscale

🗞️ NVIDIA Nemotron Parse 1.1

🗞️ CLaRa: Bridging Retrieval and Generation with Continuous Latent Reasoning

🗞️ Qwen3-VL Technical Report

🗞️ Latent Collaboration in Multi-Agent Systems

🗞️ On the Limits of Innate Planning in LLMs

🗞️ Structured Prompting Enables More Robust, Holistic Evaluation of Language Models

🗞️ DeepSeek-Math-V2 : Towards Self-Verifiable Mathematical Reasoning

🐋 DeepSeek is so back with its new IMO gold-medalist model, DeepSeekMath-V2. The first open source model to reach gold on IMO. Now, we can literally freely download the mind of one of the greatest mathematicians —to study, adjust, speed up, and run however you want. No limits, no censorship, no authority reclaiming it.

On the DeepSeek V2 Paper

They use 1 model to write full proofs and another model to judge whether the reasoning really holds together. The verifier ignores just checking the final answer and instead reads the whole solution, giving 1 for rigorous, 0.5 for basically correct but messy, or 0 for fatally flawed, so training can still learn from solutions that contain the right idea even when some steps are shaky.

With this approach, DeepSeekMath V2 hits 118/120 on Putnam 2024 and gold level performance on IMO 2025 problems. Standard math training often rewards a model only when the final number matches, so it can get credit with broken or missing reasoning.

To change that, the authors train a verifier model that reads a problem and solution, lists concrete issues, and scores the proof as 0, 0.5, or 1. They then add a meta verifier model that judges these reviews, rewarding only cases where the cited issues appear and the score fits the grading rules.

Using these 2 reviewers as reward, the model is trained as a proof generator that solves the problem and writes a self review matching the verifier. After many such loops, the final system can search over long competition solutions, refine them in a few rounds, and judge which of its answers deserve trust. Overall, the system is trained from human graded contest solutions and then many rounds of model written proofs, automatic reviews, and a meta verifier that watches for bad reviewing, all running on a huge mixture of experts language model inside an agent style loop that keeps proposing, checking, and refining proofs, which the authors argue is a general template for turning chat oriented language models into careful reasoners on structured tasks beyond math.

🗞️ Foundations of AI Frameworks: Notion and Limits of AGI

The paper argues that today’s neural networks, even the largest language models, are built in the wrong way to ever become real general intelligence. It explains from scratch what artificial and intelligence and artificial general intelligence mean, and shows that most current definitions just rate outward behaviour instead of internal structure.

Neural networks are described as static pattern machines, trained once by an outside algorithm to fit input output pairs, then frozen as giant tables of numbers. Because the learning process lives outside the model, the system cannot decide what to care about, cannot rewrite its own circuitry, and cannot build richer representations over time.

It also argues that popular math stories like scaling laws and universal approximation theorems only say that functions can be fitted, not that deep flexible reasoning will appear. As an alternative sketch, the author suggests separating raw hardware from higher level organization, designing more structured neuron types and learning rules, so that systems can change both behaviour and wiring from within.

🗞️ What does it mean to understand language?

New Harvard+MIT+Georgia Tech paper argues that truly understanding language means linking words to rich nonverbal brain systems that model reality. First, it explains that the brain’s language regions mostly track patterns in words and grammar, similar to phone typing suggestions.

They can piece together who did what to whom in a sentence, but only from stored language regularities. Deep understanding, the authors argue, means building a mental scene with people, objects, feelings, places, and causes.

To get that kind of scene, the language network must export its output to many other specialized brain systems, a process they call exportation. These include areas for understanding others’ thoughts, tracking physical events, mapping spaces, recognizing sights, moving the body, and recalling memories.

Brain imaging studies show that when people read about minds, motion, places, or emotions, the matching nonlanguage regions light up. Understanding language turns out to be a team effort between classic language regions and many other content specific brain networks.

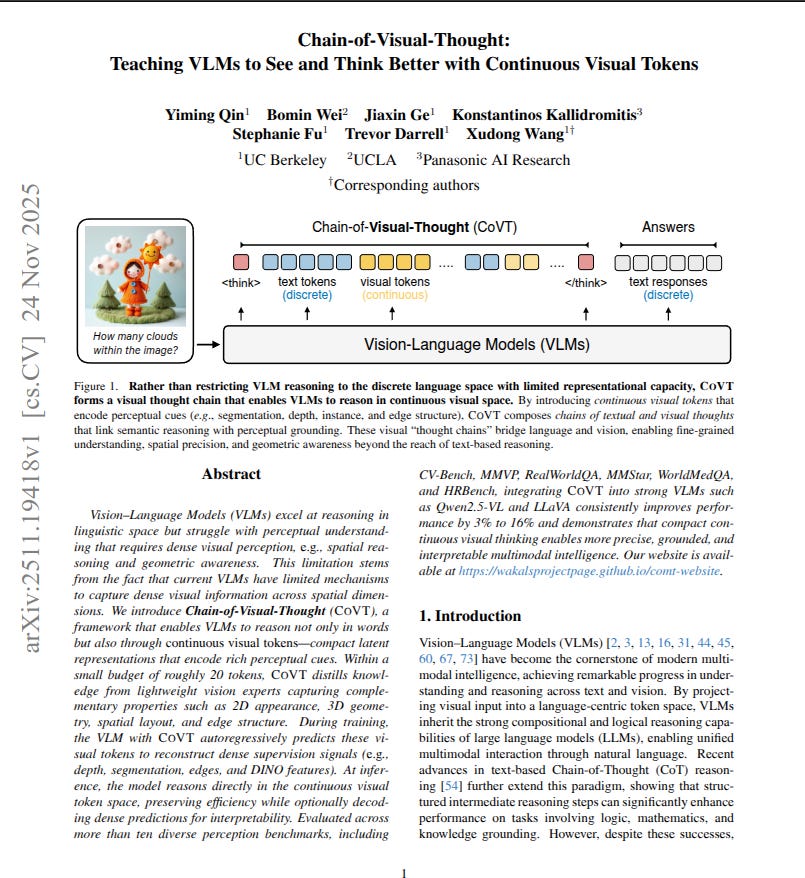

🗞️ Chain-of-Visual-Thought: Teaching VLMs to See and Think Better with Continuous Visual Tokens

The paper shows how to let vision language models think with compact visual thoughts inside the model, not just with words. Current models turn an image into a few text features, so they lose exact object boundaries, distances, and geometry, which makes them weak at tasks like counting, depth comparison, and spatial questions.

Chain of Visual Thought adds continuous visual tokens into the reasoning, where small sets of tokens summarize segmentation masks that mark objects, depth maps that show distance, edge maps that show boundaries, and patch features that store higher level meaning, all learned from lightweight teacher networks. During training, a 4 stage curriculum teaches the model to output visual tokens, use them inside a think block before the answer, and still work when some token types are randomly dropped. At test time it predicts a short chain of visual tokens plus text, can optionally decode the tokens into human viewable masks or depth maps, and in models like Qwen2.5 VL this gives around 3% to 16% better scores on many vision heavy benchmarks without hurting general language ability.

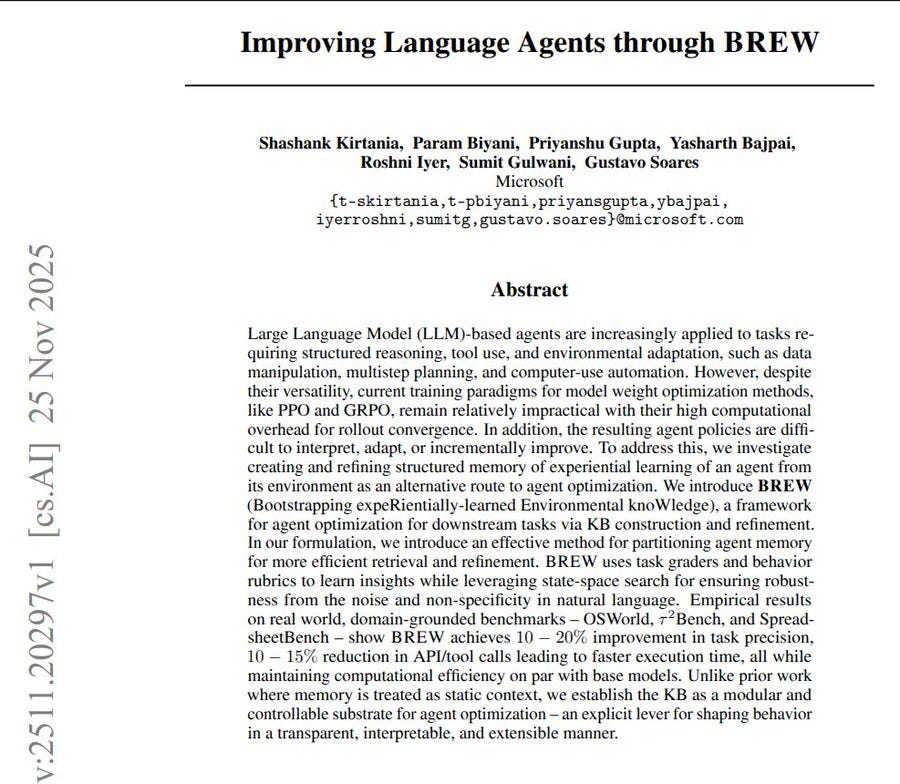

🗞️ Improving Language Agents through BREW

This paper shows how a simple memory system called BREW makes language based agents smarter and more efficient. Standard language agents forget what they learned, so every task feels new and they waste many steps repeating trial and error.

BREW fixes this by turning past task runs into a written knowledge base, a set of notes the agent can later read and reuse. These notes are grouped by concepts like searching files or exporting a document, and a reflector agent writes short lessons from each trajectory using human behavior rubrics so the content is high quality and understandable.

An integrator agent then merges lessons per concept and uses a tree search strategy to try different document versions, run sample tasks, score how much they help and how easily they are retrieved, and keep the best ones. When the main agent later gets a new query, it pulls a few matching concept documents from this knowledge base and follows their recipes, so it usually needs fewer steps, fewer tool calls, and makes more precise decisions. Experiments show clear gains overall.

🗞️ Evolution Strategies at the Hyperscale

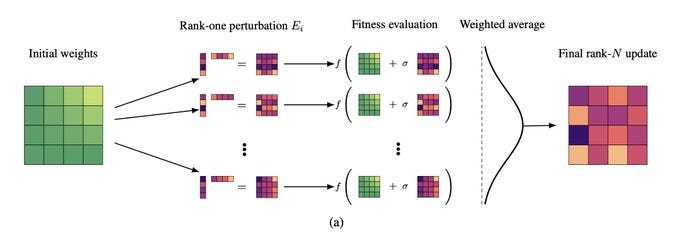

I’m reading NVIDIA’s new paper and its wild. Everyone keeps talking about scaling transformers with bigger clusters and smarter optimizers… meanwhile NVIDIA and Oxford just showed you can train billion-parameter models using evolution strategies a method most people wrote off as ancient.

The trick is a new system called EGGROLL, and it flips the entire cost model of ES. Normally, ES dies at scale because you have to generate full-rank perturbation matrices for every population member. For billion-parameter models, that means insane memory movement and ridiculous compute.

These guys solved it by generating low-rank perturbations using two skinny matrices A and B and letting ABᵀ act as the update. The population average then behaves like a full-rank update without paying the full-rank price.

The result?

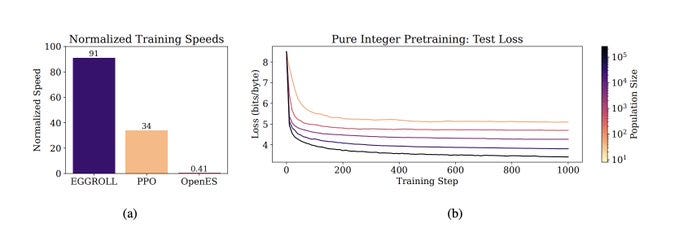

They run evolution strategies with population sizes in the hundreds of thousands a number earlier work couldn’t touch because everything melted under memory pressure. Now, throughput is basically as fast as batched inference.

That’s unheard of for any gradient-free method. The math checks out too.

The low-rank approximation converges to the true ES gradient at a 1/r rate, so pushing the rank recreates full ES behavior without the computational explosion. But the experiments are where it gets crazy.

→ They pretrain recurrent LMs from scratch using only integer datatypes. No gradients. No backprop. Fully stable even at hyperscale.

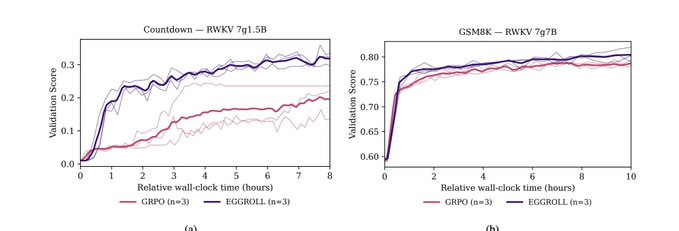

→ They match GRPO-tier methods on LLM reasoning benchmarks. That means ES can compete with modern RL-for-reasoning approaches on real tasks.

→ ES suddenly becomes viable for massive, discrete, hybrid, and non-differentiable systems the exact places where backprop is painful or impossible.

This paper quietly rewrites a boundary:

we didn’t struggle to scale ES because the algorithm was bad we struggled because we were doing it in the most expensive possible way. NVIDIA and Oxford removed the bottleneck. And now evolution strategies aren’t an old idea… they’re a frontier-scale training method.

The wild part is what EGGROLL actually does under the hood.

It replaces giant full-rank noise matrices with two skinny ones A and B and multiplies them into a low-rank perturbation. But when you average across a massive population, the update behaves like a full-rank gradient.

The scalability jump is insane.

Previous ES work capped out around ~1,400 workers. This paper scales to 262,144 workers without blowing up memory or compute because the perturbations can be reconstructed deterministically with a counter-based RNG.

The low-rank approximation isn’t just a hack. They prove the update converges to the full ES update at an O(1/r) rate. That means you can dial rank up or down depending on how much compute you want to spend.

The most surprising demo?

They train integer-only recurrent language models from scratch. No gradients. No backprop. Pure ES. And it stays stable, even with the enormous population sizes.

If you want to see how NVIDIA and Oxford pulled this off, read the full paper it’s honestly one of the most important optimization papers of the year:

AI makes content creation faster than ever, but it also makes guessing riskier than ever. If you want to know what your audience will react to before you post, TestFeed gives you instant feedback from AI personas that think like your real users. It’s the missing step between ideas and impact. Join the waitlist and stop publishing blind.

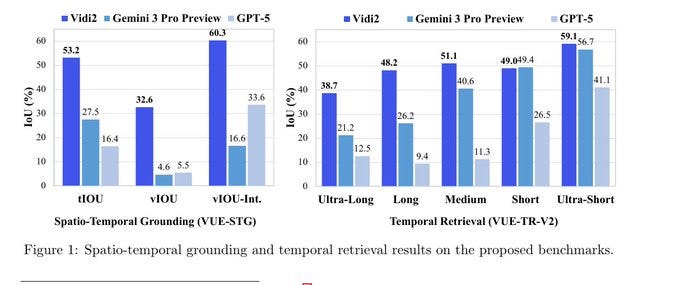

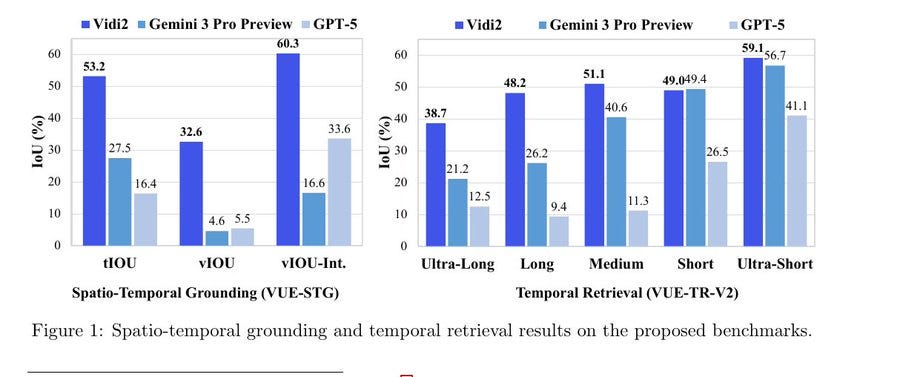

🇨🇳 China’s Bytedance rolled out an AI video editor that handles video comprehension better than Gemini 3 Pro.

Vidi2, a video model that can accurately find actions and objects in long videos from text. Clearly outperforming strong commercial models on new retrieval and grounding benchmarks, and also doing well on video question answering.

Most existing tools cannot truly read a full video, so they miss where events begin, end, and who is involved. Vidi2 adds spatio temporal grounding, meaning that for a text query it predicts both the right time span and a bounding box tube for the target object across frames.

Under the hood it uses a multimodal encoder plus a language model backbone, processes text, visual frames, and audio together, and is trained on a mix of synthetic clips and large amounts of real video. To cope with very short and very long clips, it compresses visual tokens adaptively so that memory stays bounded without discarding key context. They also build 2 long video benchmarks, for spatio temporal tubes and for temporal retrieval, and score models by overlap between predicted and true times and boxes.

🗞️ NVIDIA Nemotron Parse 1.1

Paper behind NVIDIA Nemotron Parse 1.1, a super-lightweight document parsing and OCR model. Its compressed version runs about 20% faster than the full one while keeping almost the same quality.

The problem is that normal OCR tools dump plain characters and lose layout, tables, pictures, and reading order that many systems need. Nemotron Parse 1.1 tackles this by using an image encoder to read the page and a compact language decoder to write the text.

For each block of content it outputs markdown style text plus a bounding box and a semantic class in human reading order. The bounding box is the rectangle showing where that piece sits on the page, and the semantic class is a label like title, list item, paragraph, table, picture, or caption.

A token compression variant squeezes the visual sequence so the decoder sees far fewer tokens but keeps almost all layout information, which makes inference noticeably faster. Small control prompts let users choose plain text, rich markdown, bounding boxes, and classes in different combinations. Using NVpdftex supervision plus public and synthetic datasets with multi token prediction, the model gets multilingual OCR accuracy while staying lightweight.

🗞️ CLaRa: Bridging Retrieval and Generation with Continuous Latent Reasoning

New Apple paper makes RAG shorter and smarter by training retrieval and generation together in one shared continuous space. Standard RAG picks documents using separate embeddings and then feeds long raw text to the model, so retrieval and answer quality cannot influence each other.

CLaRa introduces a compressor that reads each document once and turns it into a small set of dense vectors, compact lists of numbers that keep the key facts and relations. To teach this compressor what matters, the authors generate simple questions, harder questions that require combining facts from different parts of the document, plus paraphrases of each document, so the compressed vectors must still support answering and rewriting.

These compressed vectors are frozen and reused, a query reasoner maps questions into the same space, and a top k trick picks relevant ones while letting training send feedback to the scores. The system is trained with the standard next token loss, so the retriever learns to favor documents that help predict answers, and experiments show this compressed setup can rival strong RAG baselines while using much shorter inputs.

🗞️ Qwen3-VL Technical Report

“Qwen3-VL Technical Report”

Can handle very long mixes of text, images, and video without its thinking falling apart. First, they train it from the start to work with a native context of 256K tokens, and they gradually scale sequence length and data so the model actually learns to keep track of information scattered across many pages or minutes of video instead of forgetting early parts. Second, they change how positions in space and time are encoded with interleaved MRoPE, which basically means every visual token carries a well balanced tag of “where in the frame” and “when in the video”, so the model does not get confused about ordering when clips are long.

Older schemes grouped time and space into separate blocks of dimensions, which made the frequency patterns skewed and hurt long video understanding, while this interleaving keeps spatial and temporal cues sharp for both close and far ranges. Third, they bring in DeepStack, where low level edges, mid level shapes, and high level object features from different layers of the vision encoder are injected into matching layers of the language model instead of squashing everything into a single shallow projection.

That multi level fusion lets the language model reason using fine details like tiny text or small objects while still seeing the big layout, which typical single layer adapters tend to blur away. Fourth, for video timing they stop hiding time in abstract numeric position IDs and instead insert explicit text timestamps like “3 seconds” before frame groups, which the language model naturally understands and uses for questions about when something happens. Finally, they change the training loss so text only data and multimodal data contribute in a balanced way and then add strong supervised, distillation, and reinforcement learning stages, so the same architecture ends up competitive with top text models while dominating many visual and video benchmarks.

🗞️ Latent Collaboration in Multi-Agent Systems

New Stanford + Princeton + Illinois Univ paper shows language model agents can collaborate through hidden vectors instead of text, giving better answers with less compute. In benchmarks they reach around 4x faster inference with roughly 70% to 80% fewer output tokens than strong text systems.

Normal multi agent setups force every model to write long explanations and read others, which wastes time and discards internal signals. Here each agent stays in hidden vectors, number lists inside the network that already carry the meaning of earlier text.

The agent reasons by feeding its last hidden vector back as the next input, using a small linear map so it acts like a valid embedding. For communication they skip text and copy the full key value cache, the attention memory that tells how all positions relate, from one agent into the next.

This shared memory lets the next agent start from the same internal context, giving richer information than short text messages while also saving compute by skipping vocabulary sampling. Experiments on math, science, commonsense, and code show higher accuracy than single or text agents and lower cost.

🗞️ On the Limits of Innate Planning in LLMs

This paper checks how well LLMs can actually plan and keep track of state. Even with strong prompts and feedback, the best model solves only about 68% of these puzzles.

They use the 8 puzzle, a sliding tile game, so they can watch every move the model makes. To succeed, a model must remember the board, choose only legal moves, and move steadily toward the target layout.

They try different prompting styles, from plain instructions to stepwise reasoning and search like instructions, but most attempts still fail. Typical failures come from the model imagining illegal moves, losing track of the board, or stopping early while claiming success.

Feedback like you made an invalid move or you repeated a state helps a bit but still wastes time. In a final test, an external checker lists the legal moves each turn, yet no model solves any puzzle. The main takeaway is that these models do not have strong built in planning abilities and need extra tools for reliable structured tasks.

🗞️ Structured Prompting Enables More Robust, Holistic Evaluation of Language Models

🇨🇳 China’s Bytedance rolled out an AI video editor that handles video comprehension better than Gemini 3 Pro. Vidi2, a video model that can accurately find actions and objects in long videos from text.

Clearly outperforming strong commercial models on new retrieval and grounding benchmarks, and also doing well on video question answering. Most existing tools cannot truly read a full video, so they miss where events begin, end, and who is involved.

Vidi2 adds spatio temporal grounding, meaning that for a text query it predicts both the right time span and a bounding box tube for the target object across frames. Under the hood it uses a multimodal encoder plus a language model backbone, processes text, visual frames, and audio together, and is trained on a mix of synthetic clips and large amounts of real video.

To cope with very short and very long clips, it compresses visual tokens adaptively so that memory stays bounded without discarding key context. They also build 2 long video benchmarks, for spatio temporal tubes and for temporal retrieval, and score models by overlap between predicted and true times and boxes.

That’s a wrap for today, see you all tomorrow.