Read time: 15 min

(I publish this newsletter daily. Noise-free, actionable, applied-AI developments only.)

⚡Top Papers of last week (5-Oct-2025):

🗞️ Metacognitive Reuse: Turning Recurring LLM Reasoning Into Concise Behaviors

🗞️ SimpleFold: Folding Proteins is Simpler than You Think

🗞️ TruthRL: Incentivizing Truthful LLMs via Reinforcement Learning

🗞️ The Dragon Hatchling: The Missing Link between the Transformer and Models of the Brain

🗞️ Aristotle: IMO-level Automated Theorem Proving

🗞️ Rethinking Thinking Tokens: LLMs as Improvement Operators

🗞️ Pretraining LLMs with NVFP4

🗞️ Radiology’s Last Exam (RadLE): Benchmarking Frontier Multimodal AI Against Human Experts and a Taxonomy of Visual Reasoning Errors in Radiology

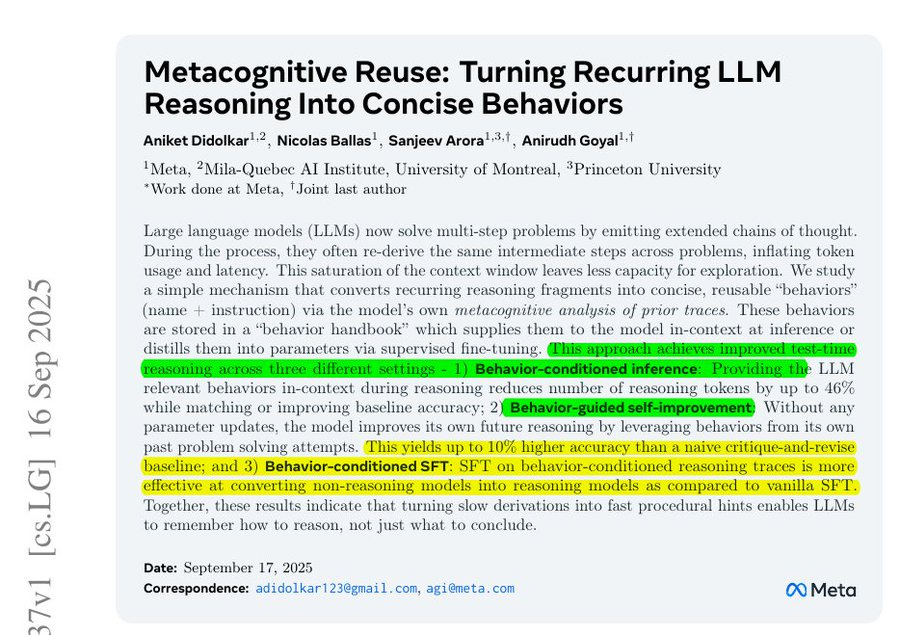

🗞️ Metacognitive Reuse: Turning Recurring LLM Reasoning Into Concise Behaviors

🔥 Meta reveals a massive inefficiency in AI’s reasoning process and gives a solution. Large language models keep redoing the same work inside long chains of thought.

For example, when adding fractions with different denominators, the model often re-explains finding a common denominator step by step instead of just invoking a common-denominator behavior. In quadratic equations, it re-explains the discriminant logic or completes the square again instead of calling a solve-quadratic behavior.

In unit conversion, it spells out inches to centimeters again instead of applying a unit-conversion behavior. 🛑 The problem with this approach is that when the model re-explains a routine, it spends many tokens on boilerplate steps that are identical across problems, which wastes budget.

So this paper teaches the model to compress those recurring steps into small named behaviors that it can recall later or even learn into its weights. A behavior compresses that routine into a short name plus instruction like a tiny macro that the model can reference.

At inference, a small list of relevant behaviors is either given to the model or already internalized through training, so the model can name the behavior it is using and skip the long re-derivation. Because it points to a named behavior, the output needs fewer tokens, and the saved tokens go to the new parts of the question.

Behavior-conditioned fine-tuning teaches the weights to trigger those routines from the question alone, so even without retrieval the model tends to use the right shortcut. Compute shifts from many output tokens to a few input hints and weight activations, which is cheaper in most serving stacks and usually faster too. Accuracy can improve because the model follows a tested routine instead of improvising a fresh multi-step derivation that may drift.

⚙️ The Core Concepts

A behavior is a short name plus instruction for a reusable move, like inclusion-exclusion or turning words into equations. The behavior handbook is a store of how-to steps, which makes it procedural memory, unlike RAG, which stores facts. The authors frame the goal as remembering how to reason, not just what to conclude, which matches the engineer’s point that remembering how to think beats thinking longer.

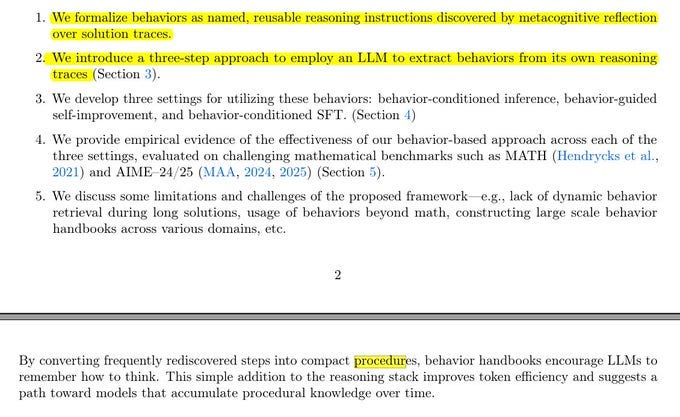

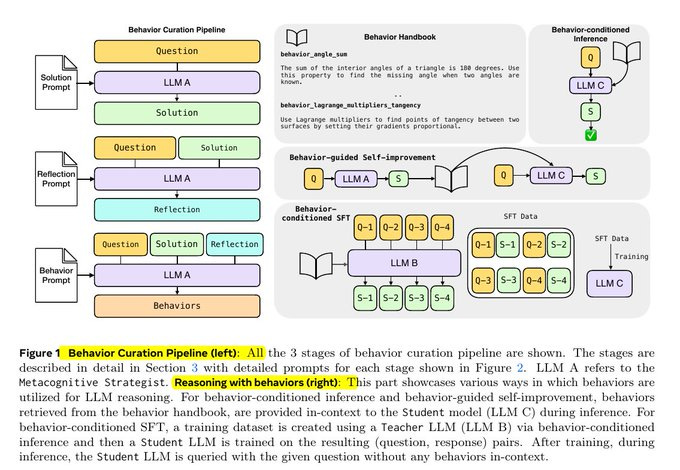

Behavior Curation Pipeline

Step 1: Solving and reflecting

The process starts with a model (labeled LLM A) that gets a math question. It solves it normally, producing a full reasoning trace.

Then the same model reflects on that solution, asking: “What steps here are general tricks I could reuse later?”

From that reflection, it extracts behaviors. Each behavior has a short name plus an instruction, like triangle angle sum or inclusion-exclusion.

Step 2: Building a handbook

These extracted behaviors are added to a behavior handbook. Think of it as a growing library of reasoning shortcuts. The handbook is procedural memory, so instead of facts like “Paris is in France,” it stores “how to” rules, like “angles in a triangle add to 180.”

Step 3: Using the handbook at test time

Now another model (LLM C, called the Student) faces new problems. During inference, it is given the problem plus a small selection of relevant behaviors from the handbook. It solves the problem while explicitly referencing these behaviors, so the reasoning trace is shorter and less repetitive.

Step 4: Self-improvement loop

The same idea can also be used for self-improvement. A model solves a problem once, extracts behaviors from that first attempt, then retries the problem using those behaviors as hints. This second try is usually more accurate than just a critique-and-revise method.

Step 5: Training with behaviors

There’s also a training path called behavior-conditioned supervised fine-tuning (SFT). Here, a Teacher model (LLM B) generates reasoning traces that explicitly include behaviors.

The Student is then trained on these behavior-annotated traces. After training, the Student doesn’t need behavior prompts anymore because it has internalized the patterns.
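
To make Steps 2 and 3 concrete, here is a minimal sketch of a handbook that stores behaviors and hands a few relevant ones to the model in the prompt. Everything in it is an illustrative assumption, not the paper's code: the `Behavior` and `BehaviorHandbook` names, the prompt wording, and especially the word-overlap retrieval, which is a toy stand-in for whatever retrieval the authors actually use.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Behavior:
    name: str         # short handle the model can cite
    instruction: str  # one-line how-to rule


class BehaviorHandbook:
    """Procedural memory: stores how-to rules, not facts (hypothetical helper)."""

    def __init__(self) -> None:
        self._behaviors: dict[str, Behavior] = {}

    def add(self, behavior: Behavior) -> None:
        # Re-adding a name overwrites the entry, so names stay unique.
        self._behaviors[behavior.name] = behavior

    def retrieve(self, question: str, k: int = 2) -> list[Behavior]:
        # Toy relevance score (an assumption): words shared by question and instruction.
        q_words = set(question.lower().split())

        def overlap(b: Behavior) -> int:
            return len(q_words & set(b.instruction.lower().split()))

        ranked = sorted(self._behaviors.values(), key=overlap, reverse=True)
        return [b for b in ranked[:k] if overlap(b) > 0]


def build_prompt(question: str, behaviors: list[Behavior]) -> str:
    # The model sees a short hint list instead of re-deriving each routine.
    hints = "\n".join(f"- {b.name}: {b.instruction}" for b in behaviors)
    return f"Useful behaviors:\n{hints}\n\nQuestion: {question}\nCite the behavior you use."


handbook = BehaviorHandbook()
handbook.add(Behavior("triangle_angle_sum", "angles in a triangle add to 180 degrees"))
handbook.add(Behavior("inclusion_exclusion", "count a union by adding sets and subtracting overlaps"))

question = "Two angles in a triangle are 50 and 60 degrees. Find the third."
print(build_prompt(question, handbook.retrieve(question, k=1)))
```

The design point is that the hint list costs a few input tokens, while the long derivation it replaces would have cost many output tokens.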

🗞️ SimpleFold: Folding Proteins is Simpler than You Think

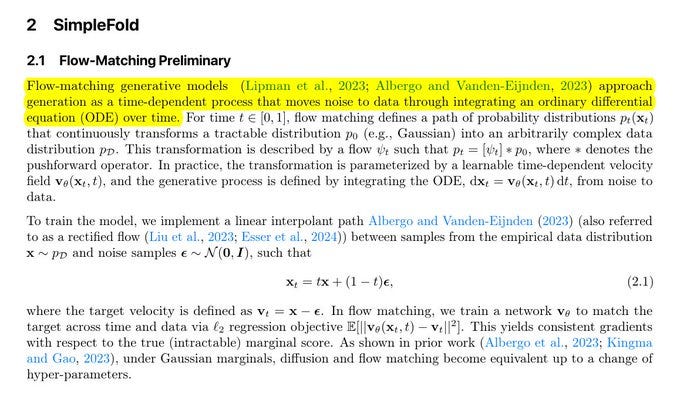

The crucial technique they used to get such great results is “flow matching,” which teaches the model how to move a noisy 3D protein toward its true shape using many tiny steps. During training they take a real protein, scramble the atom positions with noise, and ask the transformer to predict the small motion each atom should take at a given time to get closer to the real structure.

Because the model learns these short, local nudges instead of the whole fold at once, learning is stable, sample quality is high, and the same procedure can generate many valid shapes for flexible regions. They also add a simple local distance loss so nearby atoms keep realistic spacing, which sharpens side chains and fixes local geometry.

The network itself stays plain, 1 token per residue with a protein language model embedding and time conditioning, and it learns rotation symmetry by training on randomly rotated structures rather than using special geometry layers. All together, flow matching is the engine, and the simple design lets that engine run fast and scale.
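
The flow matching idea can be sketched in a few lines. This is a toy illustration under stated assumptions, not SimpleFold's implementation: it assumes a straight-line path between noise and the true structure, works on three made-up atoms, and uses an `oracle` velocity function in place of the trained transformer. All function names are hypothetical.

```python
import random

Point = tuple[float, float, float]


def interpolate(noise: list[Point], target: list[Point], t: float) -> list[Point]:
    """Point on the straight path from noise (t=0) to the true structure (t=1)."""
    return [tuple((1 - t) * n + t * x for n, x in zip(pn, px)) for pn, px in zip(noise, target)]


def target_velocity(noise: list[Point], target: list[Point]) -> list[Point]:
    """Training target on a straight path: the constant direction target - noise."""
    return [tuple(x - n for n, x in zip(pn, px)) for pn, px in zip(noise, target)]


def euler_sample(velocity_fn, start: list[Point], steps: int) -> list[Point]:
    """Integrate dx/dt = v(x, t) from t=0 to t=1 with many small steps."""
    x, dt = start, 1.0 / steps
    for k in range(steps):
        v = velocity_fn(x, k * dt)
        x = [tuple(c + dt * vc for c, vc in zip(p, pv)) for p, pv in zip(x, v)]
    return x


# Toy data: three made-up atom positions and a noisy starting cloud.
rng = random.Random(0)
true_atoms = [(0.0, 0.0, 0.0), (1.5, 0.0, 0.0), (1.5, 1.5, 0.0)]
noisy_atoms = [tuple(rng.gauss(0, 1) for _ in range(3)) for _ in true_atoms]


def oracle(x, t):
    # Stand-in for the trained network: the exact velocity on the straight path,
    # which points from the current position toward the true structure.
    return [tuple((a - c) / (1 - t) for a, c in zip(pa, pc)) for pa, pc in zip(true_atoms, x)]


folded = euler_sample(oracle, noisy_atoms, steps=50)
```

In training, the network would be fed `interpolate(noise, target, t)` for a random `t` and regressed onto `target_velocity(noise, target)`. At sampling time, a different noisy start gives a different trajectory, which is how one model can produce an ensemble of shapes.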

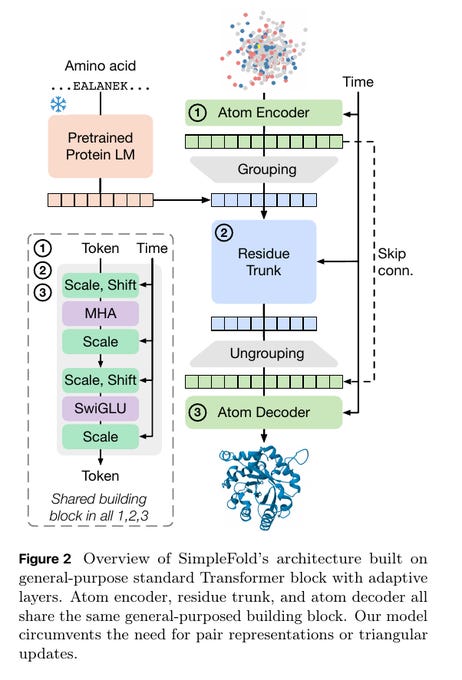

This diagram shows how SimpleFold turns a protein sequence into a 3D shape using plain transformers. It first feeds the amino acid sequence into a frozen protein language model to get features for each residue.

At each time step it also takes a noisy 3D cloud of atoms and encodes them in an Atom Encoder. It groups atoms into 1 token per residue and runs them through a Residue Trunk made of the same simple transformer block.

The block is standard self attention plus feedforward, with tiny scale and shift tweaks driven by the time signal. It then ungroups back to atoms and the Atom Decoder predicts small moves that nudge atoms toward the right structure.

Skip connections carry information across stages to keep details stable. Training uses flow matching, so the model learns how to move from noise to the final fold step by step. The key point is it needs no pair features or triangle updates, just this repeated transformer block with time conditioning.

This figure shows that SimpleFold makes very accurate protein structures while staying fast and scalable. The examples on the left compare predictions to known structures and the matches are near perfect, with TM (Template Modeling score) 0.99 and GDT 0.99 in one case and TM 0.98 and GDT 0.92 in another.

“TM” is a number between 0 and 1 that tells how close a predicted protein structure is to the real, experimentally known structure. A TM score closer to 1 means the prediction is almost identical to the real protein fold.
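
For readers who want the arithmetic behind that number, here is a small sketch of the standard TM-score formula. It assumes the two structures are already superimposed and that you have the distance between each matched residue pair. The real metric also searches for the best superposition, which is skipped here. The 0.5 floor on the scale `d0` for short chains is a common implementation convention assumed for this sketch.

```python
def tm_score(distances: list[float], target_length: int) -> float:
    """TM-score from per-residue distances (angstroms) of an existing superposition."""
    if target_length <= 15:
        d0 = 0.5  # assumed floor for very short chains
    else:
        # Length-dependent scale from the standard TM-score definition.
        d0 = max(1.24 * (target_length - 15) ** (1 / 3) - 1.8, 0.5)
    # Each matched residue contributes between 0 and 1; unmatched residues contribute 0.
    return sum(1 / (1 + (d / d0) ** 2) for d in distances) / target_length


print(tm_score([0.0] * 100, 100))  # perfect overlap
print(tm_score([2.0] * 100, 100))  # every residue off by 2 angstroms
```

Because the sum is divided by the target length, a prediction that only covers part of the protein cannot reach 1 even if the covered part is perfect.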

The overlay in the middle shows an ensemble of shapes for the same protein, which means the model can capture natural flexibility instead of giving only one rigid answer. The bubble chart shows a clear scaling trend: larger models use more compute and reach higher accuracy on the CASP14 benchmark.

🗞️ TruthRL: Incentivizing Truthful LLMs via Reinforcement Learning

A new AI at Meta paper trains LLMs to be more truthful by rewarding correct answers and encouraging the model to say “I do not know” when unsure. Reports 28.9% fewer hallucinations and 21.1% higher truthfulness compared with standard training.

The big deal is a simple reward rule that separates I do not know from wrong, so the model stops guessing. The problem is that most training only rewards being right, so models guess even when they lack facts.

TruthRL gives a 3 level reward, correct is positive, I do not know is neutral, wrong is negative. This reward teaches the model to answer when confident and to abstain when not.

Training uses online reinforcement learning that samples several answers per question and compares rewards. The simple 3 level reward beats a binary reward and outperforms extra knowledge or reasoning bonuses.
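
A quick sketch shows why the 3 level reward changes behavior. The numeric values (+1, 0, -1), the binary scheme that treats abstaining like a wrong answer, and the group normalization are illustrative assumptions. The summary above only says positive, neutral, negative, and that several sampled answers per question are compared.

```python
import statistics

# Assumed reward values for illustration.
TERNARY = {"correct": 1.0, "abstain": 0.0, "wrong": -1.0}
BINARY = {"correct": 1.0, "abstain": -1.0, "wrong": -1.0}  # abstaining scored like a miss


def expected_guess_reward(p_correct: float, scheme: dict[str, float]) -> float:
    """Average reward for answering when the answer is right with probability p_correct."""
    return p_correct * scheme["correct"] + (1 - p_correct) * scheme["wrong"]


def group_advantages(outcomes: list[str], scheme: dict[str, float]) -> list[float]:
    """Score each sampled answer relative to the other samples for the same question."""
    rewards = [scheme[o] for o in outcomes]
    mean, std = statistics.fmean(rewards), statistics.pstdev(rewards)
    return [(r - mean) / (std + 1e-6) for r in rewards]


# A model that is right only 30% of the time on some question:
print(expected_guess_reward(0.3, TERNARY), "vs abstain", TERNARY["abstain"])
print(expected_guess_reward(0.3, BINARY), "vs abstain", BINARY["abstain"])

# Four sampled answers to one question, compared within the group:
print(group_advantages(["correct", "abstain", "wrong", "wrong"], TERNARY))
```

Under the ternary values, guessing at 30% confidence has a negative expected reward, so abstaining wins. Under the binary values, guessing is never worse than abstaining, which is the bluffing incentive the paper is trying to remove.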

Across tasks with and without external documents, the method cuts hallucinations and keeps accuracy strong. On tricky or baiting questions, the model chooses I do not know far more often instead of making things up.

Using an LLM judge to grade answers gives stable signals, while string matching pushes the model to abstain too much. Overall, TruthRL reduces bluffing and improves honest behavior without making the model timid.

🗞️ The Dragon Hatchling: The Missing Link between the Transformer and Models of the Brain

The paper presents Dragon Hatchling, a brain-inspired language model that matches Transformers using local neuron rules for reasoning and memory. It links brain-like local rules to Transformer-level performance at the 10M to 1B parameter scale.

It makes internals easier to inspect because memory sits on specific neuron pairs and activations are sparse and often monosemantic. You get reliable long reasoning and clearer debugging, because the model exposes which links carry which concepts in context.

The problem it tackles is long reasoning: models often fail when the task runs longer than what they saw in training. The model is a graph of many simple neurons with excitatory and inhibitory circuits and simple thresholds.

It stores short term memory in the strengths of connections using Hebbian learning, so links that fire together get stronger. Those local rules behave like attention but at the level of single neurons and their links.
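
Here is a minimal sketch of that Hebbian idea, written as a generic outer-product fast-weight memory rather than the paper's actual equations. The class name, the sizes, and the ReLU on the readout are assumptions for illustration.

```python
def relu(vec: list[float]) -> list[float]:
    # Keeps activations positive, so silent neurons stay at exactly zero.
    return [max(0.0, v) for v in vec]


class HebbianMemory:
    """Short-term memory held in connection strengths between two neuron groups."""

    def __init__(self, n_pre: int, n_post: int, decay: float = 1.0) -> None:
        self.decay = decay
        self.weights = [[0.0] * n_pre for _ in range(n_post)]

    def write(self, pre: list[float], post: list[float]) -> None:
        # Hebbian rule: a link grows by (post activity * pre activity),
        # so only pairs that fire together get stronger.
        for i, y in enumerate(post):
            for j, x in enumerate(pre):
                self.weights[i][j] = self.decay * self.weights[i][j] + y * x

    def read(self, pre: list[float]) -> list[float]:
        # Recall: presynaptic activity flows through the strengthened links.
        return relu([sum(w * x for w, x in zip(row, pre)) for row in self.weights])


memory = HebbianMemory(n_pre=4, n_post=3)
memory.write(pre=[1.0, 0.0, 0.0, 0.0], post=[0.0, 1.0, 0.0])
print(memory.read([1.0, 0.0, 0.0, 0.0]))  # same cue: the stored pattern comes back
print(memory.read([0.0, 1.0, 0.0, 0.0]))  # unrelated cue: nothing fires
```

The link to attention is that the weight matrix is a running sum of value-times-key products, so reading with a query returns each stored value weighted by how well its key matches the query.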

They also present BDH-GPU, a GPU friendly version that implements the same behavior as an attention model with a running state. This design produces positive sparse activations, so only a small fraction of neurons fire at a time.

Many units become monosemantic, meaning one unit often maps to one clear concept even in models under 100M. The theory ties the approach to distributed computing and explains how longer reasoning can scale with model size and time.

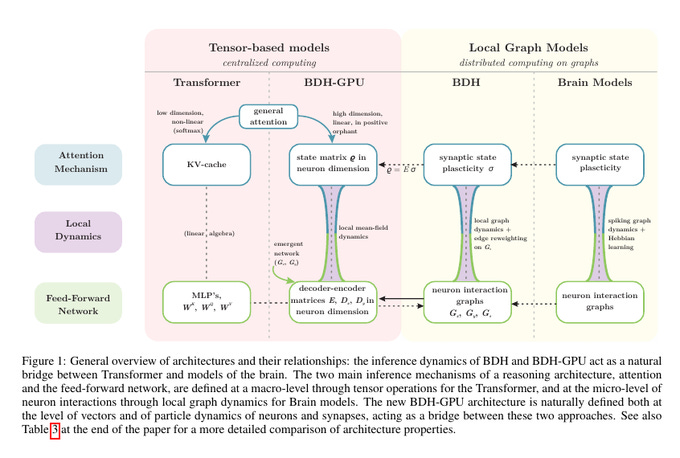

This figure shows how ‘Dragon Hatchling’ (BDH) and its GPU version connect Transformers to brain-style graph models. It highlights 2 key mechanisms, attention and a feed forward network, and shows their tensor version on the left and their neuron graph version on the right.

Transformers do centralized math with general attention, a KV cache for context, and dense feed forward layers. Brain models do distributed computing on neuron graphs where synapses change during inference through local plasticity.

BDH lives on the graph side, using neuron interaction graphs and synaptic plasticity to drive updates step by step. BDH-GPU rewrites those same local rules as high dimensional matrices and a running state so standard GPUs can execute them efficiently. The takeaway is that attention and feed forward can be implemented either as global tensor ops or as local graph dynamics, and BDH provides the bridge.

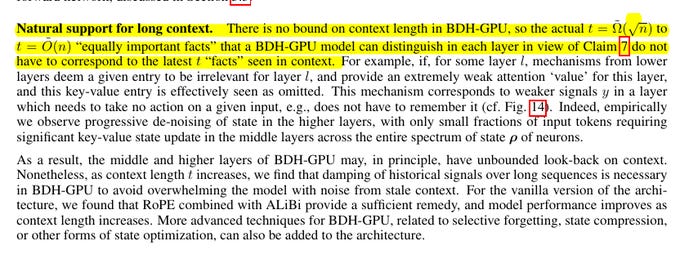

🤯 There is no bound on context length in BDH-GPU, the GPU version of Dragon Hatchling, because of how it manages memory and updates signals layer by layer.

In a Transformer, the length of context is bounded by the size of the attention mechanism, since every new token must compare against all past tokens stored in the cache. That makes it scale poorly as context grows.

BDH-GPU instead uses local neuron updates. Each layer decides whether a past signal is still relevant. If the layer deems it irrelevant, it just weakens or skips that signal. This means not every token needs to be carried forward, only the ones that continue to matter.

As inputs pass through higher layers, the model naturally filters and denoises. Old signals that add noise fade out, while strong ones get reinforced. Because of this filtering, the model can keep track of important details across very long spans without memory overload.

Another key difference is that memory is stored in synaptic connections rather than in a giant cache of past tokens. That allows information to be compressed into the state of the network itself, not stored explicitly for every past token.

So the reason BDH-GPU avoids a strict limit is that it treats context as an evolving state rather than as a giant history buffer. That makes it possible to scale context length upward as long as noise is controlled.
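
The difference between a history buffer and an evolving state is easy to see in a toy comparison. This sketch is not BDH-GPU itself. The uniform `decay` factor is an assumed stand-in for the model's learned, per-layer filtering, and both class names are hypothetical.

```python
class KVCache:
    """Transformer-style context: one stored entry per past token."""

    def __init__(self) -> None:
        self.entries: list[tuple[list[float], list[float]]] = []

    def update(self, key: list[float], value: list[float]) -> None:
        self.entries.append((key, value))

    def num_floats(self) -> int:
        return sum(len(k) + len(v) for k, v in self.entries)


class RunningState:
    """State-style context: one fixed-size matrix, updated in place."""

    def __init__(self, dim: int, decay: float) -> None:
        self.decay = decay
        self.matrix = [[0.0] * dim for _ in range(dim)]

    def update(self, key: list[float], value: list[float]) -> None:
        # Old content fades by `decay`; the new token is folded in as an outer product.
        for i, v in enumerate(value):
            for j, k in enumerate(key):
                self.matrix[i][j] = self.decay * self.matrix[i][j] + v * k

    def num_floats(self) -> int:
        return sum(len(row) for row in self.matrix)


old_signal = [1.0, 0.0, 0.0, 0.0]
recent_signal = [0.0, 1.0, 0.0, 0.0]
cache, state = KVCache(), RunningState(dim=4, decay=0.5)
for token in [old_signal] + [recent_signal] * 10:
    cache.update(token, token)
    state.update(token, token)

print(cache.num_floats(), state.num_floats())    # cache grows per token, state does not
print(state.matrix[0][0], state.matrix[1][1])    # old signal faded, repeated one reinforced
```

The trade-off is visible too: the state never grows, but anything that fades out of it is gone, which is why the source adds the caveat about keeping noise under control.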

🗞️ Aristotle: IMO-level Automated Theorem Proving

The paper presents Aristotle, a system that blends natural language planning with Lean proofs to solve Olympiad math. It solved 5 of 6 IMO 2025 problems with fully verified Lean solutions.

The core is a search that builds proofs step by step with a model proposing tactics and scoring progress. It handles choices where all branches must work or only one, then focuses compute on the hardest subgoal.
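
The "all branches must work or only one" structure is an AND/OR tree. The sketch below is a generic illustration of that idea, not Aristotle's search. The `Goal` class, the independence assumption in `estimate`, and the rule of picking the most promising branch at OR nodes are assumptions. Only "focus on the hardest subgoal" comes from the summary above.

```python
import math
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Goal:
    name: str
    kind: str = "leaf"      # "leaf", "and" (all children needed), "or" (any child suffices)
    score: float = 0.0      # model's estimate that a leaf can be proved, in [0, 1]
    proved: bool = False
    children: list["Goal"] = field(default_factory=list)


def is_proved(goal: Goal) -> bool:
    if goal.kind == "and":
        return all(is_proved(c) for c in goal.children)
    if goal.kind == "or":
        return any(is_proved(c) for c in goal.children)
    return goal.proved


def estimate(goal: Goal) -> float:
    """Chance of closing the goal, treating subgoals as independent (an assumption)."""
    if goal.kind == "leaf":
        return 1.0 if goal.proved else goal.score
    child = [estimate(c) for c in goal.children]
    if goal.kind == "and":
        return math.prod(child)
    return 1.0 - math.prod(1.0 - p for p in child)


def next_leaf(goal: Goal) -> Optional[Goal]:
    """Pick the open leaf to spend compute on next, or None if everything is proved."""
    if is_proved(goal):
        return None
    if goal.kind == "leaf":
        return goal
    open_children = [c for c in goal.children if not is_proved(c)]
    if goal.kind == "and":
        pick = min(open_children, key=estimate)  # hardest remaining subgoal
    else:
        pick = max(open_children, key=estimate)  # most promising alternative
    return next_leaf(pick)


casework = Goal("casework", score=0.6)
induction = Goal("induction", score=0.3)
lemma2 = Goal("lemma2", kind="or", children=[induction, casework])
root = Goal("root", kind="and",
            children=[Goal("lemma1", proved=True), lemma2, Goal("lemma3", score=0.2)])
print(next_leaf(root).name)
```

In this toy tree the search goes to `lemma3` first: the root needs every lemma, and `lemma3` is the least likely to close, so it decides whether the whole plan is viable.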

A lemma pipeline turns an informal sketch into many tiny subgoals, formalizes each statement, checks Lean errors, then revises and repeats. Yuclid, a fast geometry module, uses rule based and algebraic reasoning so geometry proofs run quickly.

Training uses reinforcement learning on search traces and test time training that learns from fresh attempts. The result is a solver that plans clearly, proves rigorously, and generalizes beyond contests.

🗞️ Rethinking Thinking Tokens: LLMs as Improvement Operators

Another huge AI at Meta paper. It shows that language models work better when they think in short rounds using tiny summaries, not long step by step traces.

It breaks the tradeoff between thinking longer and paying for longer prompts. Gives higher accuracy at the same or lower latency by keeping only the useful bits in a tiny workspace.

It scales by adding parallel drafts rather than dragging out a single long chain, which is cheaper and faster in practice. Results show +11% on AIME 2024 and +9% on AIME 2025 with about 2.57x fewer sequential tokens for similar accuracy.

Long chains make the context grow, so cost and wait time rise, and the model can forget, get stuck on bad ideas, and repeat itself. They treat the model as an improvement operator that reads a small workspace of text, writes a better draft, then compresses it back into the workspace.

A loop called Parallel Distill Refine makes several drafts in parallel, collects what they agree and disagree on into a bounded workspace, then refines again. This keeps each prompt short while evidence builds across rounds.

Latency depends on the sequential budget, which means the tokens on the chosen path, and parallel sampling only increases total compute, not prompt length. Feeding only wrong drafts into the workspace reduces accuracy, so they stress checks and diversity of drafts.
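
The loop and the two budgets can be sketched with stub functions in place of model calls. This is a rough illustration, not the paper's code: the function names are hypothetical, tokens are approximated by whitespace-separated words, and the sequential budget is approximated as the longest draft per round plus the distilled summary.

```python
from collections import Counter
from typing import Callable


def parallel_distill_refine(
    problem: str,
    draft_fn: Callable[[str, str, int], str],     # (problem, workspace, draft index) -> draft
    distill_fn: Callable[[list[str], int], str],  # (drafts, word limit) -> new workspace
    rounds: int = 2,
    width: int = 4,
    workspace_limit: int = 30,
) -> tuple[str, int, int]:
    """Returns (final workspace, sequential word count, total word count)."""
    workspace, sequential, total = "", 0, 0
    for _ in range(rounds):
        # Every draft sees only the problem plus the bounded workspace, never full history.
        drafts = [draft_fn(problem, workspace, i) for i in range(width)]
        lengths = [len(d.split()) for d in drafts]
        workspace = distill_fn(drafts, workspace_limit)
        summary_len = len(workspace.split())
        sequential += max(lengths) + summary_len  # drafts run side by side
        total += sum(lengths) + summary_len       # but all of them cost compute
    return workspace, sequential, total


def toy_draft(problem: str, workspace: str, i: int) -> str:
    # Stand-in for a model call: draft i "thinks" for 5 + i filler words, then answers.
    return "step " * (5 + i) + "answer 42"


def toy_distill(drafts: list[str], limit: int) -> str:
    # Stand-in for the distill call: keep only the most common final answer.
    answer, _ = Counter(d.split()[-1] for d in drafts).most_common(1)[0]
    return " ".join(f"agreed answer {answer}".split()[:limit])


summary, seq_words, total_words = parallel_distill_refine("toy problem", toy_draft, toy_distill)
print(summary, seq_words, total_words)
```

Raising `width` increases the total count but barely moves the sequential one, which is the knob the paper turns to buy accuracy without buying latency.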

They also train an 8B model with operator consistent reinforcement learning to perform one round of this interface and it raises results at the same latency. The result is that compact summaries enable many steps without long contexts, so accuracy rises without extra latency.

🗞️ Pretraining LLMs with NVFP4

💾 NVFP4 shows 4-bit pretraining of a 12B hybrid Mamba-Transformer on 10T tokens can match FP8 accuracy while cutting compute and memory. 🔥 NVFP4 is a way to store numbers for training large models using just 4 bits instead of 8 or 16. This makes training faster and reduces memory use.

But 4 bits alone are too small, so NVFP4 groups numbers into blocks of 16. Each block gets its own small “scale” stored in 8 bits, and the whole tensor gets another “scale” stored in 32 bits.

The block scale keeps the local values accurate, and the big tensor scale makes sure very large or very tiny numbers (outliers) are not lost. In short, NVFP4 is a clever packing system: most numbers use 4 bits, but extra scales in 8-bit and 32-bit keep the math stable and precise.
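
To see the packing idea in code, here is a simplified sketch of block quantization onto a 4-bit grid. It assumes the E2M1 layout for the 4-bit values, whose representable magnitudes are 0, 0.5, 1, 1.5, 2, 3, 4 and 6. It keeps the block scale as an ordinary Python float instead of encoding it in 8 bits, and it leaves out the tensor-level 32-bit scale entirely. Function names are hypothetical.

```python
E2M1_VALUES = (0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0)  # magnitudes on the 4-bit grid
BLOCK_SIZE = 16


def quantize_block(block: list[float]) -> tuple[list[float], float]:
    """Map one block onto the 4-bit grid; returns (grid values, block scale)."""
    amax = max(abs(v) for v in block)
    # Choose the scale so the largest magnitude in the block lands on 6.0.
    scale = amax / E2M1_VALUES[-1] if amax > 0 else 1.0
    codes = []
    for v in block:
        nearest = min(E2M1_VALUES, key=lambda g: abs(abs(v) / scale - g))
        codes.append(nearest if v >= 0 else -nearest)
    return codes, scale


def dequantize_block(codes: list[float], scale: float) -> list[float]:
    return [c * scale for c in codes]


def bits_per_value(block_size: int = BLOCK_SIZE, value_bits: int = 4, scale_bits: int = 8) -> float:
    # The per-block scale is shared by the whole block, so its cost is amortized.
    return value_bits + scale_bits / block_size


block = [0.1 * i - 0.8 for i in range(BLOCK_SIZE)]
codes, scale = quantize_block(block)
restored = dequantize_block(codes, scale)
print(max(abs(a - b) for a, b in zip(block, restored)))  # worst-case error in this block
print(bits_per_value())                                  # 4.5
```

With blocks of 16, the 8-bit scale adds half a bit per value, so the effective cost is 4.5 bits. The single 32-bit scale per tensor is negligible on top of that.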

On Blackwell, FP4 matrix ops run 2x faster on GB200 and 3x faster on GB300 than FP8, and memory use is about 50% lower. The validation loss stays within 1% of FP8 for most of training and grows to about 1.5% late during learning rate decay.

Task scores stay close, for example MMLU Pro 62.58% vs 62.62% (NVFP4 vs FP8), while coding dips a bit, with MBPP+ at 55.91% vs 59.11%. Gradients use stochastic rounding so rounding bias does not build up.
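
Stochastic rounding is simple to demonstrate. The sketch below uses a uniform grid with spacing `step` for clarity, which is a simplification because the real 4-bit grid is not evenly spaced. The function names are hypothetical.

```python
import math
import random


def stochastic_round(value: float, step: float, rng: random.Random) -> float:
    """Round to a multiple of `step`, rounding up with probability equal to the remainder."""
    lower = math.floor(value / step) * step
    frac = (value - lower) / step  # how far `value` sits above `lower`, as a fraction of step
    return lower + step if rng.random() < frac else lower


def nearest_round(value: float, step: float) -> float:
    return round(value / step) * step


rng = random.Random(0)
small_gradient, step = 0.1, 0.5
samples = [stochastic_round(small_gradient, step, rng) for _ in range(20000)]

print(nearest_round(small_gradient, step))  # always 0.0, so the gradient vanishes
print(sum(samples) / len(samples))          # averages out close to 0.1
```

Round-to-nearest pushes every small gradient the same way, so the error accumulates over many steps. Stochastic rounding is right on average, so the errors tend to cancel.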

Ablation tests show that removing any step hurts convergence and increases loss. Against MXFP4, NVFP4 reaches the same loss with fewer tokens because MXFP4 needed 36% more data, 1.36T vs 1T, on an 8B model.

Switching to BF16 near the end of training, either for about the last 18% of steps or only in the forward pass, almost removes the small loss gap. Support exists in Transformer Engine and Blackwell hardware, including the needed rounding modes.

🗞️ Radiology’s Last Exam (RadLE): Benchmarking Frontier Multimodal AI Against Human Experts and a Taxonomy of Visual Reasoning Errors in Radiology

This paper brings some bad news for AI-based radiology. It checks if chatbots can diagnose hard radiology images like experts.

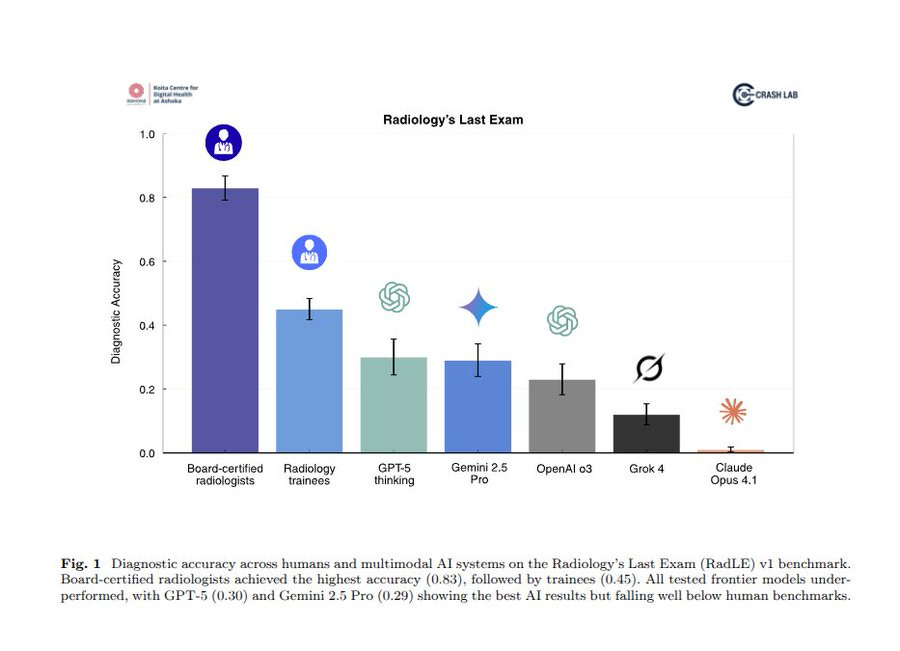

Finds that board-certified radiologists scored 83%, trainees 45%, but the best performing AI from frontier labs, GPT-5, managed only 30%. 😨 Claims “doctor-level” AI in medicine is still far away.

The team built 50 expert level cases across computed tomography (CT), magnetic resonance imaging (MRI), and X-ray. Each case had one clear diagnosis and no extra clinical history.

They tested GPT-5, OpenAI o3, Gemini 2.5 Pro, Grok-4, and Claude Opus 4.1 in reasoning modes. Blinded radiologists graded answers as exact, partial, or wrong, then averaged scores.
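
The grading scheme reduces to a small piece of arithmetic. The point values below (1 for exact, 0.5 for partial, 0 for wrong) are an assumption for illustration, and the four grades in the usage line are made up, not data from the paper.

```python
# Assumed point values for the three grade levels.
GRADE_POINTS = {"exact": 1.0, "partial": 0.5, "wrong": 0.0}


def mean_score(grades: list[str]) -> float:
    """Average score across cases, given one grade per case."""
    return sum(GRADE_POINTS[g] for g in grades) / len(grades)


# Hypothetical grades for four cases, only to show the calculation.
print(mean_score(["exact", "partial", "wrong", "wrong"]))  # 0.375
```

Partial credit matters when reading the headline numbers: a score like 30% does not have to mean 30% of cases were fully correct.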

Experts beat trainees, and trainees beat every model by a wide margin. More reasoning barely helped accuracy, but it made replies about 6x slower.

Models did best on MRI and struggled more on CT and X-ray. To explain mistakes, the authors built an error map covering missed or false findings, wrong location or meaning, early closure, and contradictions with the final answer.

They also saw thinking traps like anchoring, availability bias, and skipping relevant regions. General purpose models are not ready to read hard cases without expert oversight.

That’s a wrap for today, see you all tomorrow.