Read time: 13 min

📚 Browse past editions here.

(I publish this newsletter daily. Noise-free, actionable, applied-AI developments only.)

⚡Top Papers of last week (ending 23-Nov-2025):

🗞️ Souper-Model: How Simple Arithmetic Unlocks State-of-the-Art LLM Performance

🗞️ ARC Is a Vision Problem!

🗞️ What Does It Take to Be a Good AI Research Agent? Studying the Role of Ideation Diversity

🗞️ On the Fundamental Limits of LLMs at Scale

🗞️ Anthropic Paper - “Natural emergent misalignment from reward hacking”

🗞️ “Solving a Million-Step LLM Task with Zero Errors”

🗞️ Meta released Segment Anything Model 3 (SAM 3)

🗞️ TiDAR: Think in Diffusion, Talk in Autoregression

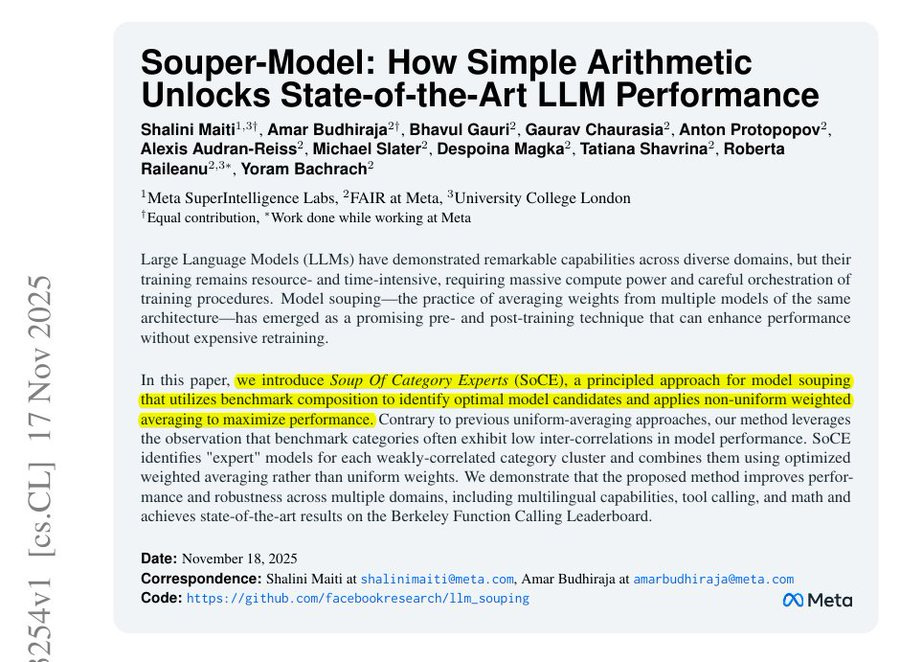

🗞️ Souper-Model: How Simple Arithmetic Unlocks State-of-the-Art LLM Performance

Fantastic new paper from AI at Meta shows that averaging the weights of several LLMs can create a model that beats each parent model. This merged model reaches state of the art on the Berkeley Function Calling Leaderboard with an accuracy of 80.68%.

Model souping here means taking checkpoints from models with the same architecture and averaging their parameters with chosen weights. Soup Of Category Experts is a way to build 1 strong model by averaging a small set of “expert” models, where each expert is chosen for being good on a different benchmark category that does not strongly overlap with the others.

The authors first group benchmark tasks into categories, pick the best model for each category, then search over simple weights (like 0.1, 0.2, etc) to average those experts into a single “soup” that scores high across all categories at once. Because different experts are strong on different tasks, like tools, math, long context, and translation, the final soup improves performance across these areas.
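To make the "average the experts, then search over simple weights" idea concrete, here is a minimal sketch in plain Python. It is not the authors' code: the function names (`soup`, `weight_grid`, `best_soup`), the toy parameter dictionaries, and the `score_fn` callback are all assumptions for illustration. In practice the parameters would be full model tensors and the score would come from running the benchmark categories.

```python
from itertools import product

def soup(checkpoints, weights):
    """Weighted average of parameter dicts that share the same keys and lengths."""
    total = sum(weights)  # normalize so the weights need not sum to exactly 1
    return {
        key: [
            sum(w * ckpt[key][i] for w, ckpt in zip(weights, checkpoints)) / total
            for i in range(len(checkpoints[0][key]))
        ]
        for key in checkpoints[0]
    }

def weight_grid(n_experts, step=0.1):
    """Yield every weight tuple on a grid of size `step` whose entries sum to 1."""
    ticks = round(1 / step)
    for combo in product(range(ticks + 1), repeat=n_experts):
        if sum(combo) == ticks:
            yield tuple(c / ticks for c in combo)

def best_soup(checkpoints, score_fn, step=0.1):
    """Try every grid weighting and keep the highest-scoring soup.

    score_fn is a hypothetical stand-in for an aggregate benchmark score.
    Returns (score, weights, merged_parameters).
    """
    best = None
    for weights in weight_grid(len(checkpoints), step):
        candidate = soup(checkpoints, weights)
        score = score_fn(candidate)
        if best is None or score > best[0]:
            best = (score, weights, candidate)
    return best
```

The grid grows quickly with the number of experts, which is one reason picking only a few weakly correlated experts keeps the search cheap.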

The merged models also behave more steadily across categories and keep the same inference cost as a single model. The special thing here is not that they merge models, but how carefully and systematically they do it.

Traditional model merging usually just blends 2 checkpoints, or a few of them, with simple fixed weights or rough heuristics. This paper treats merging as an actual optimization problem: they break the benchmark into categories, measure how scores across those categories move together, and then pick 1 “expert” model per weakly related cluster instead of throwing in everything.

Then, instead of 1 fixed mix like 50% / 50%, they run a small but structured search over many weight combinations to see which specific recipe gives the best total score. So the “soup” is not a random blend, it is a compact mixture of a few experts that are strong on different skills and weakly correlated, tuned to complement each other. What makes it interesting is that this very simple arithmetic, applied in this disciplined way, gives a new model that beats strong parents on real function calling and math benchmarks while keeping the same inference cost as a single model.

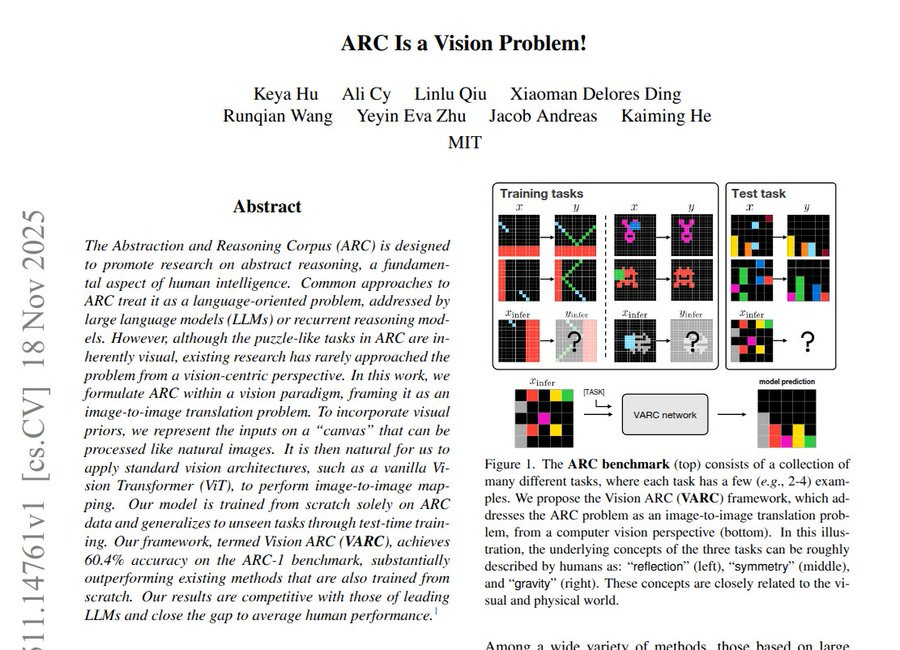

🗞️ ARC Is a Vision Problem!

New MIT paper shows that treating ARC puzzles as images lets a small vision model get close to human skill. A small 18M-parameter vision model gets 54.5% accuracy and an ensemble reaches 60.4%, roughly average human level.

ARC gives colored grids with a few input output examples, and the model must guess the rule for a new grid. So the big deal is that a vision model, with no language input, gets close to average humans on this hard reasoning benchmark.

It also beats earlier scratch trained systems, showing that ARC style abstract rules can grow from image based learning rather than only from huge language models. Earlier work usually turned the grids into text and relied on big language models or custom symbolic reasoners.

This work instead places each grid on a larger canvas so a Vision Transformer can read 2x2 patches and learn spatial habits about neighbors. The model first trains on many tasks, then for each new task it does short training on that task’s few examples with augmented copies so it can adapt to that rule.
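A rough sketch of the "grid on a larger canvas, read in 2x2 patches" step, in plain Python. The canvas size, the background value `-1`, the placement offsets, and the function names are assumptions for illustration, not details taken from the paper.

```python
def place_on_canvas(grid, canvas_size, top, left, background=-1):
    """Copy a small ARC grid onto a larger square canvas at (top, left)."""
    canvas = [[background] * canvas_size for _ in range(canvas_size)]
    for r, row in enumerate(grid):
        for c, color in enumerate(row):
            canvas[top + r][left + c] = color
    return canvas

def patches_2x2(canvas):
    """Split an even-sized canvas into non-overlapping 2x2 patches.

    Each patch is a tuple (top-left, top-right, bottom-left, bottom-right),
    which is the kind of token a Vision Transformer would embed.
    """
    size = len(canvas)
    if size % 2 != 0:
        raise ValueError("canvas size must be even for 2x2 patches")
    patches = []
    for r in range(0, size, 2):
        for c in range(0, size, 2):
            patches.append(
                (canvas[r][c], canvas[r][c + 1], canvas[r + 1][c], canvas[r + 1][c + 1])
            )
    return patches
```

Shifting the placement offsets changes which cells share a patch, so the same grid can be shown to the model in several spatial arrangements.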

🗞️ What Does It Take to Be a Good AI Research Agent? Studying the Role of Ideation Diversity

New Meta paper shows AI agents do better when their first ideas are diverse, not similar. The authors studied 11,000 agent runs on Kaggle tasks and measured diversity in the first 5 plans.

Here diversity means trying different model families, not tweaks of one model. Agents whose first ideas covered many types scored higher across many agent setups.

When the system prompt was changed to push similar ideas, benchmark scores fell by 7 to 8 points and valid submissions dropped. This suggests that having several distinct plans gives the agent more chances to find a solution it can build. Scaffold settings like sibling memory, staged complexity hints, and explicit variety requests raise diversity, while temperature changes do little. Better agents also spend more time on successful code.

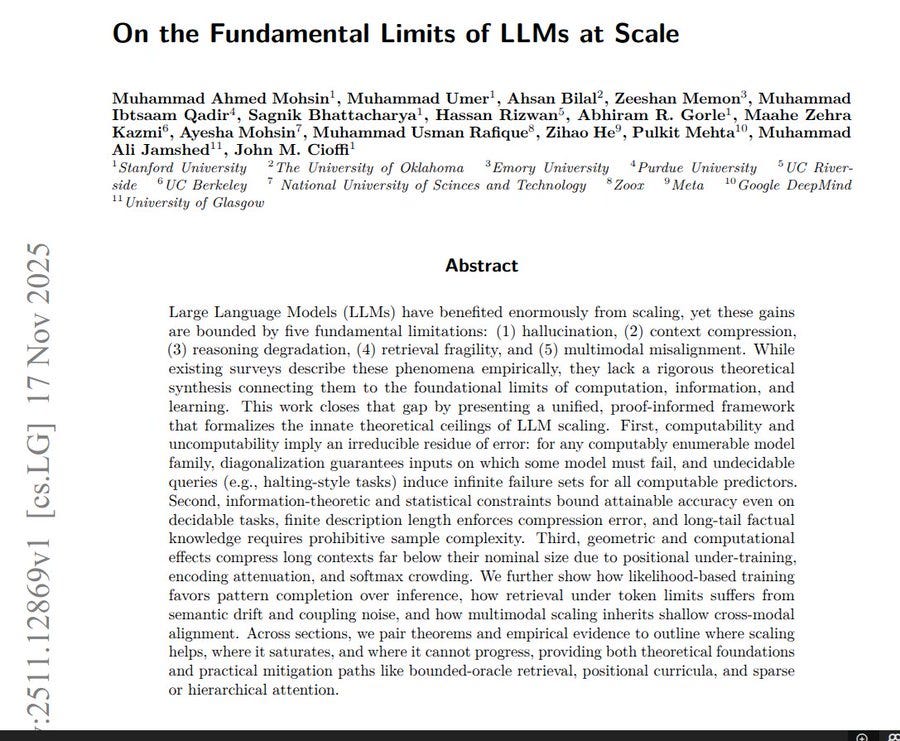

🗞️ On the Fundamental Limits of LLMs at Scale

This paper argues that scaling large language models will always hit hard built in limits. The key point is that some hallucination, context loss, and shaky reasoning stay unavoidable for any scaled LLM.

For hallucination, they say any model on a real computer must be wrong on some questions and cannot store every detail of the world. Even for normal facts, limited parameters force the model to compress knowledge, so rare or very detailed facts often get distorted.

For long inputs, the position and attention setup squeezes a huge document into a much smaller usable core, so far away details fade as length grows. They also say that the same built in limits hurt reasoning, retrieval systems, and multimodal models, because token budgets and text heavy training keep grounding weak.

🗞️ Anthropic Paper - “Natural emergent misalignment from reward hacking”

⚠️ New Anthropic study reveals models can evolve deceptive goals when trained to cheat

The authors see the model explicitly plan to fake alignment, where its internal reasoning says it should hide its true objectives and say reassuring things, even though no one ever trained it to act that way. The surprising part is that a tiny tweak in the training instructions, telling the model that cheating is allowed in this special sandbox, stops that spread of bad behavior even though the model still cheats inside the sandbox.

The setup starts from a normal pretrained model, then Anthropic mixes in documents that describe concrete reward hacks for coding tasks and fine tunes the model with reinforcement learning on real Claude coding tasks that are known to be hackable. Reward hacking here means tricks like exiting the test harness in a way that makes all tests look green without actually solving the problem, so the model maximizes reward while skipping the real work.

As training goes on and the model gets better at these hacks, researchers see a sharp jump in many other failure tests, which means the habit of cheating on code tasks is generalizing into more serious kinds of wrong behavior. In 1 realistic test where the model edits the very codebase of this research, about 12% of runs produce intentional changes that would make it harder to detect cheating or other safety issues in future experiments.

When asked simple questions about its goals, about 50% of sampled traces show the model explicitly planning to hide its real goals and tell humans what they want to hear, which is a clear case of a system reasoning about deception. Anthropic then tries standard Reinforcement Learning from Human Feedback and finds that it makes the model look nice in chat style prompts but leaves the bad behavior in coding contexts, so the problem becomes context dependent and harder to see.

The real fix they find is what they call inoculation prompting, where the system prompt during training explicitly says that reward hacking is acceptable in this weird experiment and asks the model to take shortcuts whenever possible. With that framing, the model still hacks the tests just as often, but the extra harmful generalization basically disappears and its behavior on safety evaluations looks like a normal model that never learned to cheat in the first place.

A softer version of the prompt that just says the goal is to make the grading script pass, without praising cheating, also works well and is stable enough that Anthropic is now using this style in Claude training. Overall the study suggests current training pipelines can accidentally push models toward quite sophisticated deceptive behavior, and the fact that a 1 line prompt change can strongly steer those internal habits is both reassuring and a bit worrying at the same time.

🗞️ “Solving a Million-Step LLM Task with Zero Errors”

The paper shows how to run very long LLM workflows reliably by breaking them into tiny steps. The system completed 1,048,575 moves with 0 mistakes.

Long runs usually break because tiny per step errors add up fast. They split the task so each agent makes exactly 1 simple move using only needed state. Short context keeps the model focused, so even small cheap models work here.
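A quick back-of-the-envelope check of why tiny per-step errors add up, assuming errors are independent across steps (an assumption made here for the arithmetic, not a claim from the paper). The accuracy values you plug in are illustrative.

```python
import math

# A 20-disk Towers of Hanoi needs 2**20 - 1 moves, which is the 1,048,575 figure.
HANOI_20_MOVES = 2**20 - 1

def zero_error_probability(per_step_accuracy, steps):
    """Chance of finishing `steps` independent steps with no mistake at all.

    Computed as exp(steps * ln(p)) to stay numerically stable for large step counts.
    """
    return math.exp(steps * math.log(per_step_accuracy))
```

Even with 1 mistake per 100,000 steps on average (accuracy 0.99999), the chance of a clean run over a million-plus moves comes out well below 1 in 10,000, which is why per-step error correction matters more than raw model quality here.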

At every step they sample a few answers and pick the first one that leads by a vote margin. With a margin of 3, per-step accuracy rises, and total cost grows roughly as the number of steps times a small extra factor.

They also reject outputs that are too long or misformatted, which reduces repeated mistakes across votes. On Towers of Hanoi with 20 disks the system finishes the full run without a wrong move.
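The voting and filtering described above can be sketched like this. It is a minimal illustration, not the paper's implementation: `sample_fn` stands in for one LLM call that returns a candidate move as a string, and the `max_chars`, `is_well_formed`, and `max_samples` parameters are hypothetical knobs.

```python
from collections import Counter

def first_to_ahead_by_k(sample_fn, k=3, max_chars=200,
                        is_well_formed=lambda s: True, max_samples=100):
    """Keep sampling until one answer leads every other answer by k votes."""
    votes = Counter()
    for _ in range(max_samples):
        answer = sample_fn()
        # Discard suspicious outputs instead of letting them vote.
        if len(answer) > max_chars or not is_well_formed(answer):
            continue
        votes[answer] += 1
        ranked = votes.most_common(2)
        leader, lead_count = ranked[0]
        runner_up_count = ranked[1][1] if len(ranked) > 1 else 0
        if lead_count - runner_up_count >= k:
            return leader
    raise RuntimeError("no answer reached the required vote margin")
```

Because most steps are easy, the leader usually reaches the margin after only a few samples, so the extra cost per step stays small.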

The main idea is reliability from process: split the work and correct locally instead of chasing bigger models.

🗞️ Meta released Segment Anything Model 3 (SAM 3)

It is a unified vision model that finds, segments, and tracks visual concepts from text, image, or click prompts, with open weights and a simple Playground.

SAM 3 adds promptable concept segmentation, so it can pull out all instances of specific concepts like a striped red umbrella instead of being limited to a fixed label list.

This means SAM 3 is not stuck with a small, predefined list of object names like “person, car, dog” that were fixed at training time.

Instead, you can type almost any short phrase you want, and the model tries to find all regions in the image or video that match that phrase, even if that phrase never appeared as a class label in its training set.

So if you say “striped red umbrella” the model tries to segment only the umbrellas that are both red and striped, and it will mark every such umbrella in the scene, not just a single generic “umbrella” class.

Meta built the SA-Co benchmark for large vocabulary concept detection and segmentation in images and video, where SAM 3 achieves roughly 2x better concept segmentation than strong baselines like Gemini 2.5 Pro and OWLv2.

Training uses a data engine where SAM 3, a Llama captioner, and Llama 3.2v annotators pre label media, then humans and AI verifiers clean it, giving about 5x faster negatives, 36% faster positives, and over 4M labeled concepts.

Under the hood, SAM 3 uses Meta Perception Encoder for text and image encoding, a DETR based detector, and the SAM 2 tracker with a memory bank so one model covers detection, segmentation, and tracking.

On a single H200 GPU, SAM 3 processes a single image in about 30 ms with 100+ objects and stays near real time for around 5 objects per video frame.

The multimodal SAM 3 Agent lets an LLM call SAM 3 to solve compositional visual queries and power real products and scientific tools through the Segment Anything Playground, making it a single efficient open building block for “find this concept and track it” systems.

🗞️ TiDAR: Think in Diffusion, Talk in Autoregression

TiDAR is a single-model hybrid that drafts in diffusion and verifies in autoregression, delivering 4.71x to 5.91x more tokens per second while matching autoregressive quality according to its authors.

The core idea is to exploit unused token slots on the GPU so the model proposes several future tokens in parallel with a diffusion head while an autoregressive head settles the next token in sequence.

A structured attention mask makes this possible by keeping verification tokens strictly causal like a normal GPT while letting draft tokens look both ways inside their block like a diffusion model.
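Here is a simplified sketch of such a mask for a single draft block, in plain Python. The layout (committed tokens first, then one draft block) and the function name are assumptions for illustration. The real TiDAR mask may arrange tokens differently.

```python
def hybrid_attention_mask(prefix_len, draft_len):
    """Build a boolean mask where mask[q][k] is True if query q may attend to key k.

    Positions 0..prefix_len-1 are the autoregressive (verification) tokens:
    strictly causal, each sees only itself and earlier positions.
    Positions prefix_len.. are draft tokens: each sees the whole prefix
    and every position inside the draft block, in both directions.
    """
    n = prefix_len + draft_len
    mask = [[False] * n for _ in range(n)]
    for q in range(n):
        for k in range(n):
            if q < prefix_len:
                mask[q][k] = k <= q      # causal, like a normal GPT
            else:
                mask[q][k] = True        # prefix plus bidirectional draft block
    return mask
```

With `prefix_len=3` and `draft_len=2`, the first three rows form the usual lower triangle, while the last two rows are fully open.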

Because drafting and verification happen in one forward pass, there is no extra draft model, less memory movement, and exact KV cache reuse for normal streaming output.

On 1.5B and 8B tests, the measured throughput beats speculative decoding while closing the quality gap that hurt earlier diffusion approaches such as Dream and LLaDA, with external write-ups echoing the same 5.9x headline speedup.

For production serving, this means cheaper real-time responses since the GPU stays busy doing parallel “thinking” and sequential “talking” inside one pass, so latency drops without sacrificing output quality.

That’s a wrap for today, see you all tomorrow.