The most discussed AI papers from last week (8-Dec-2024 to 14-Dec-2024):

"Flex Attention: A Programming Model for Generating Optimized Attention Kernels"

"FlashRNN: Optimizing Traditional RNNs on Modern Hardware"

"Expanding Performance Boundaries of Open-Source Multimodal Models with Model, Data, and Test-Time Scaling"

"Flow Matching Guide and Code"

"Meshtron: High-Fidelity, Artist-Like 3D Mesh Generation at Scale"

"Phi-4 Technical Report"

"ProcessBench: Identifying Process Errors in Mathematical Reasoning"

"Training Large Language Models to Reason in a Continuous Latent Space" from Meta Research

"Transformers Struggle to Learn to Search"

"Understanding Gradient Descent through the Training Jacobian"

🗞️ "Flex Attention: A Programming Model for Generating Optimized Attention Kernels"

FlexAttention lets researchers create custom attention patterns without writing low-level GPU code.

So you can cook up new attention recipes without kernel programming headaches.

FlexAttention enables implementing complex attention variants in just a few lines of PyTorch code while maintaining high performance through compiler optimizations and block sparsity management.

Original Problem 🤖:

→ Current attention mechanisms in LLMs face a "software lottery" - researchers can only use variants supported by existing optimized kernels like FlashAttention. Creating new variants requires extensive manual optimization, limiting innovation.

-----

Solution in this Paper 🛠️:

→ FlexAttention introduces a programming model where attention variants are defined via two simple functions: score_mod for modifying attention scores and mask_mod for specifying attention masks.

→ The system automatically compiles these high-level specifications into optimized kernels using block sparsity and template-based code generation.

→ It supports composition of attention variants through logical operations on masks, solving the combinatorial explosion problem.
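To make the two hooks concrete, here is a minimal pure-Python reference of the semantics. It is not the compiled kernel. The callback shapes, `score_mod(score, b, h, q_idx, kv_idx)` and `mask_mod(b, h, q_idx, kv_idx)`, mirror the ones PyTorch exposes in `torch.nn.attention.flex_attention`. The helpers `reference_attention`, `sliding_window`, `relative_bias` and this local `and_masks` are hypothetical stand-ins written for illustration (PyTorch ships a similarly named mask-composition helper).

```python
import math
from typing import Callable, List, Optional

Vec = List[float]
ScoreMod = Callable[[float, int, int, int, int], float]  # (score, b, h, q_idx, kv_idx)
MaskMod = Callable[[int, int, int, int], bool]           # (b, h, q_idx, kv_idx)


def causal(b: int, h: int, q_idx: int, kv_idx: int) -> bool:
    return q_idx >= kv_idx


def sliding_window(window: int) -> MaskMod:
    def mask(b: int, h: int, q_idx: int, kv_idx: int) -> bool:
        return q_idx - kv_idx < window
    return mask


def and_masks(*masks: MaskMod) -> MaskMod:
    # Composition: a position survives only if every mask keeps it.
    return lambda b, h, q_idx, kv_idx: all(m(b, h, q_idx, kv_idx) for m in masks)


def relative_bias(score: float, b: int, h: int, q_idx: int, kv_idx: int) -> float:
    # Example score_mod: subtract a penalty that grows with query-key distance.
    return score - 0.5 * abs(q_idx - kv_idx)


def reference_attention(q: List[Vec], k: List[Vec], v: List[Vec],
                        score_mod: Optional[ScoreMod] = None,
                        mask_mod: Optional[MaskMod] = None,
                        b: int = 0, h: int = 0) -> List[Vec]:
    d = len(q[0])
    out = []
    for i, qi in enumerate(q):
        scores = []
        for j, kj in enumerate(k):
            if mask_mod is not None and not mask_mod(b, h, i, j):
                scores.append(-math.inf)  # masked out: gets zero weight
                continue
            s = sum(a * c for a, c in zip(qi, kj)) / math.sqrt(d)
            if score_mod is not None:
                s = score_mod(s, b, h, i, j)
            scores.append(s)
        m = max(scores)  # assumes each row keeps at least one key
        weights = [math.exp(s - m) for s in scores]
        total = sum(weights)
        out.append([sum(w * vj[c] for w, vj in zip(weights, v)) / total
                    for c in range(len(v[0]))])
    return out


q = k = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
v = [[1.0], [2.0], [3.0]]
combined = and_masks(causal, sliding_window(2))
out = reference_attention(q, k, v, score_mod=relative_bias, mask_mod=combined)
```

Combining a causal mask with a sliding window here is a single `and_masks` call rather than a new kernel, which is the point of the composition design.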

Key Insights 💡:

→ Most attention variants can be expressed as score modifications or masking patterns

→ Block-level sparsity tracking avoids materializing large mask matrices

→ Template-based compilation preserves performance while enabling flexibility
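Block-level sparsity can be sketched by summarizing a `mask_mod` per tile instead of keeping a dense boolean matrix. This minimal version, with a hypothetical `summarize_blocks` helper and an assumed tile size of 4, labels each (query block, key block) tile as empty (skippable), full (no masking needed) or partial (mask applied element-wise). It illustrates the idea and is not FlexAttention's actual data structure.

```python
from typing import Callable, Dict, Tuple

MaskMod = Callable[[int, int, int, int], bool]  # (b, h, q_idx, kv_idx)


def summarize_blocks(mask_mod: MaskMod, q_len: int, kv_len: int,
                     block: int = 4, b: int = 0, h: int = 0) -> Dict[Tuple[int, int], str]:
    tiles = {}
    for qb in range(0, q_len, block):
        for kb in range(0, kv_len, block):
            kept = [mask_mod(b, h, qi, ki)
                    for qi in range(qb, min(qb + block, q_len))
                    for ki in range(kb, min(kb + block, kv_len))]
            if not any(kept):
                kind = "empty"    # the kernel can skip this tile entirely
            elif all(kept):
                kind = "full"     # compute without evaluating the mask
            else:
                kind = "partial"  # apply the mask element by element
            tiles[(qb // block, kb // block)] = kind
    return tiles


def causal(b: int, h: int, q_idx: int, kv_idx: int) -> bool:
    return q_idx >= kv_idx


tiles = summarize_blocks(causal, q_len=8, kv_len=8, block=4)
# Only the per-tile labels need to be kept, not the 8 x 8 boolean mask.
```

For a causal mask, the share of empty tiles approaches one half as the sequence grows, which is where the skipped work comes from.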

Results 📊:

→ Runs at 0.68x-1.43x the speed of FlashAttention on the variants FlashAttention supports

→ 5.49x-8.00x faster than PyTorch SDPA for unsupported variants

→ Less than 1% runtime overhead when using paged attention

→ 2.4x training speedup and 2.04x inference speedup in end-to-end evaluation

🗞️ "FlashRNN: Optimizing Traditional RNNs on Modern Hardware"

RNNs just got a nitro boost: FlashRNN makes GPUs go zoom.

50x speedup over vanilla PyTorch implementation

FlashRNN enables faster execution of traditional RNNs by optimizing them for modern GPUs through register-level caching and efficient memory management, making sequential processing more practical.

Original Problem 🎯:

→ Traditional RNNs excel at state tracking but suffer from slow sequential processing, limiting their practical use compared to parallel architectures like Transformers.

→ Current implementations don't effectively utilize modern GPU capabilities, especially regarding memory hierarchy and register-level optimizations.

Solution in this Paper 🔧:

→ FlashRNN introduces hardware-optimized kernels that fuse matrix multiplication with pointwise operations.

→ It implements a parallelization variant that processes multiple smaller RNNs simultaneously, similar to the head-wise processing in Transformers.

→ The solution uses ConstrINT, a new optimization framework that models hardware constraints and cache sizes.

→ It automatically tunes kernel parameters based on GPU specifications and memory hierarchy.

Key Insights from this Paper 💡:

→ Fusing operations and caching in registers significantly reduces memory access overhead

→ Block-diagonal recurrent matrices enable efficient parallel processing while maintaining state-tracking

→ Hardware-specific optimization can dramatically improve RNN performance
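The head-wise, block-diagonal structure can be illustrated in pure Python. This sketch (all names hypothetical, a tanh cell assumed) runs several small RNN heads, each with its own recurrent matrix, and compares them against one large RNN whose recurrent matrix is block-diagonal. It shows the math only; FlashRNN's speed comes from fused GPU kernels and register-level caching, which plain Python cannot demonstrate.

```python
import math
import random
from typing import List, Tuple

Matrix = List[List[float]]
Head = Tuple[Matrix, Matrix, List[float]]  # (W input weights, R recurrent weights, bias)


def matvec(M: Matrix, x: List[float]) -> List[float]:
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]


def make_heads(num_heads: int, head_dim: int, input_dim: int, seed: int = 0) -> List[Head]:
    rng = random.Random(seed)

    def rand(rows: int, cols: int) -> Matrix:
        return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

    return [(rand(head_dim, input_dim), rand(head_dim, head_dim), [0.0] * head_dim)
            for _ in range(num_heads)]


def headwise_run(heads: List[Head], xs: List[List[float]]) -> List[float]:
    hs = [[0.0] * len(b) for _, _, b in heads]
    for x in xs:  # the time loop stays sequential
        # Each head only reads its own previous state, i.e. R is block-diagonal.
        # Matmuls and tanh happen in one pass, as a fused kernel would do them.
        hs = [[math.tanh(a + r + c) for a, r, c in zip(matvec(W, x), matvec(R, h), b)]
              for (W, R, b), h in zip(heads, hs)]
    return [v for h in hs for v in h]


def dense_run(heads: List[Head], xs: List[List[float]]) -> List[float]:
    # The same model written as one big RNN with a block-diagonal recurrent matrix.
    d = len(heads[0][2])
    n = d * len(heads)
    W_full = [row for W, _, _ in heads for row in W]
    b_full = [c for _, _, b in heads for c in b]
    R_full = [[0.0] * n for _ in range(n)]
    for k, (_, R, _) in enumerate(heads):
        for i in range(d):
            for j in range(d):
                R_full[k * d + i][k * d + j] = R[i][j]
    h = [0.0] * n
    for x in xs:
        h = [math.tanh(a + r + c)
             for a, r, c in zip(matvec(W_full, x), matvec(R_full, h), b_full)]
    return h


heads = make_heads(num_heads=2, head_dim=3, input_dim=4)
xs = [[0.1 * t, -0.2, 0.3, 0.05 * t] for t in range(5)]
headwise = headwise_run(heads, xs)
dense = dense_run(heads, xs)
```

The two runs agree, but the head-wise form only ever touches `head_dim x head_dim` recurrent blocks, which is what makes it small enough to keep close to the compute units.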

Results 📊:

→ 50x speedup over vanilla PyTorch implementation

→ Supports 40x larger hidden sizes than the Triton implementation

→ Achieves competitive speeds with Transformers while maintaining state-tracking capabilities

🗞️ "Expanding Performance Boundaries of Open-Source Multimodal Models with Model, Data, and Test-Time Scaling"

The InternVL 2.5 paper: an open-source model that competes with commercial models while using less than a tenth of the training tokens of competing models (120B vs. 1.4T)

InternVL 2.5 enhances multimodal LLMs through improved training strategies, data quality, and test-time optimization, achieving state-of-the-art performance across diverse visual-language tasks.

Original Problem 🔍:

→ Current open-source multimodal LLMs lag behind commercial models in performance and efficiency, particularly in handling complex visual-language tasks.

→ Existing models struggle with test-time scalability and data quality optimization.

Key Insights 💡:

→ Large vision encoders significantly reduce dependency on training data when scaling up multimodal LLMs

→ Data quality matters more than quantity - strict filtering improves Chain-of-Thought reasoning

→ Test-time scaling benefits complex multimodal question answering tasks

Solution in this Paper 🛠️:

→ Introduces a three-stage training pipeline: MLP warmup, vision encoder incremental learning, and full model tuning

→ Implements progressive scaling strategy for efficient alignment between vision encoders and LLMs

→ Applies dynamic high-resolution processing for multi-image and video understanding

→ Uses advanced data filtering pipeline to remove low-quality samples and repetitive patterns
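Dynamic high-resolution processing is commonly implemented by picking a tile grid whose aspect ratio best matches the image, then cutting the resized image into fixed-size tiles for the vision encoder. The sketch below assumes 448-pixel tiles and at most 12 tiles per image, and its function names are hypothetical; it illustrates the idea rather than reproducing InternVL's preprocessing code.

```python
from typing import List, Tuple

Grid = Tuple[int, int]  # (columns, rows)


def candidate_grids(min_tiles: int = 1, max_tiles: int = 12) -> List[Grid]:
    grids = [(c, r) for c in range(1, max_tiles + 1) for r in range(1, max_tiles + 1)
             if min_tiles <= c * r <= max_tiles]
    return sorted(grids, key=lambda g: (g[0] * g[1], g[0], g[1]))  # fewest tiles first


def choose_grid(width: int, height: int, tile: int = 448,
                min_tiles: int = 1, max_tiles: int = 12) -> Grid:
    aspect = width / height
    best, best_diff = (1, 1), float("inf")
    for cols, rows in candidate_grids(min_tiles, max_tiles):
        diff = abs(aspect - cols / rows)
        if diff < best_diff:
            best, best_diff = (cols, rows), diff
        elif diff == best_diff and width * height > 0.5 * tile * tile * cols * rows:
            best = (cols, rows)  # same shape: use more tiles only if the image is big enough
    return best


def tile_boxes(grid: Grid, tile: int = 448) -> List[Tuple[int, int, int, int]]:
    # (left, top, right, bottom) boxes on the image resized to (cols*tile, rows*tile).
    cols, rows = grid
    return [(c * tile, r * tile, (c + 1) * tile, (r + 1) * tile)
            for r in range(rows) for c in range(cols)]
```

A 896x448 image gets a 2x1 grid, while a 1792x896 image with the same shape gets 4x2, so larger inputs keep more detail without distorting the aspect ratio.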

Results 📊:

→ First open-source multimodal LLM to surpass 70% on the MMMU benchmark

→ Achieves a 3.7-point improvement on MMMU through Chain-of-Thought reasoning

→ Matches commercial models like GPT-4o on several benchmarks

→ Uses only 120B training tokens compared to competitors' 1.4T tokens

🗞️ "Flow Matching Guide and Code"

Transform random noise into meaningful data by learning the right path of change.

Flow Matching turns complex generative modeling into simple velocity field regression, with no differential equations to simulate during training.

Flow Matching provides a simple, efficient framework for training generative models by learning velocity fields that transform source distributions into target distributions through continuous paths.

🤔 Original Problem:

Training generative models traditionally requires complex ODE simulations during training, making it computationally expensive and difficult to scale to high-dimensional data.

🔧 Solution in this Paper:

→ Flow Matching introduces a two-step recipe: First, design a probability path between source and target distributions.

→ Second, train a neural network to learn the velocity field that implements this path transformation.

→ The framework uses a conditional strategy to simplify training by breaking down complex flows into simpler conditional ones.

→ A marginalization trick allows efficient training without requiring ODE simulations.

→ The method extends beyond Euclidean spaces to handle discrete sequences and manifolds through specialized adaptations.
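The two-step recipe can be sketched in one dimension. The probability path here is the linear conditional path `x_t = (1 - t) * x0 + t * x1`, whose conditional velocity target is `x1 - x0` (one standard choice among the paths the guide covers). As a simplifying assumption, a per-time linear least-squares fit replaces the neural network, which happens to be exact for this Gaussian-to-Gaussian toy; the function names are hypothetical. Training is pure regression, and an ODE is integrated (with Euler steps) only when sampling.

```python
import random
from typing import List, Tuple


def fit_velocity(t: float, mu: float, sigma: float, n: int,
                 rng: random.Random) -> Tuple[float, float]:
    """Regress the conditional target x1 - x0 on x_t with a model u_t(x) = a + b*x."""
    xs, ys = [], []
    for _ in range(n):
        x0 = rng.gauss(0.0, 1.0)           # source: standard normal
        x1 = rng.gauss(mu, sigma)          # target: N(mu, sigma^2)
        xs.append((1 - t) * x0 + t * x1)   # point on the conditional path
        ys.append(x1 - x0)                 # conditional velocity target
    mx, my = sum(xs) / n, sum(ys) / n
    b = (sum((x - mx) * (y - my) for x, y in zip(xs, ys))
         / sum((x - mx) ** 2 for x in xs))
    return my - b * mx, b


def train(mu: float, sigma: float, steps: int = 50, n: int = 4000,
          seed: int = 0) -> List[Tuple[float, float]]:
    rng = random.Random(seed)
    # One independent regression per time grid point; no ODE is solved here.
    return [fit_velocity(k / steps, mu, sigma, n, rng) for k in range(steps)]


def sample(params: List[Tuple[float, float]], n: int = 4000, seed: int = 1) -> List[float]:
    rng = random.Random(seed)
    dt = 1.0 / len(params)
    out = []
    for _ in range(n):
        x = rng.gauss(0.0, 1.0)
        for a, b in params:                # Euler integration from t = 0 to t = 1
            x += dt * (a + b * x)
        out.append(x)
    return out


samples = sample(train(mu=3.0, sigma=0.5))
mean = sum(samples) / len(samples)
std = (sum((s - mean) ** 2 for s in samples) / len(samples)) ** 0.5
```

The generated samples land close to the target N(3, 0.5²) even though training never integrated the flow.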

💡 Key Insights:

→ Velocity fields can be learned directly through regression, avoiding expensive ODE solving during training

→ Conditional probability paths simplify the learning problem significantly

→ The framework unifies different types of generative models under one mathematical foundation

→ Extensions to discrete and manifold spaces make it applicable to diverse data types

📊 Results:

→ State-of-the-art performance in image, video, speech, and audio generation

→ Successfully applied to protein structure modeling and robotics applications

→ Computationally more efficient than traditional methods requiring ODE simulations

🗞️ "Meshtron: High-Fidelity, Artist-Like 3D Mesh Generation at Scale"

The newly released Meshtron project and paper from NVIDIA has big implications.

Create professional-grade 3D meshes from just point clouds.

→ Meshtron achieves revolutionary mesh generation capabilities by processing up to 64K faces at 1024-level coordinate resolution - this represents an 8x boost in coordinate detail and 10x more faces than previous methods

→ The model's ingenious hourglass architecture assigns varying computational resources across mesh tokens based on their sequence position. This selective resource allocation drives dramatic efficiency gains

→ Training memory footprint drops by over 50% through truncated sequence training, while sliding window inference increases generation throughput by 2.5x

→ The model enforces strict ordering of mesh sequences through a robust sampling strategy, ensuring consistency and high-quality outputs resembling professional artist creations

→ This breakthrough enables generating highly detailed 3D meshes for gaming, animation and virtual environments at unprecedented scale and quality levels

💡 Deep-Dive

→ The Hourglass Architecture revolutionizes mesh token processing by intelligently varying compute allocation across different sequence positions

→ Truncated sequence training optimizes memory usage by processing smaller mesh segments during the training phase

→ A sliding window attention mechanism leverages mesh sequence locality and periodicity patterns to boost inference speed

→ The sampling strategy maintains strict mesh sequence ordering, critical for generating coherent and high-quality 3D structures
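One way to see the sequence structure these components rely on is to serialize a triangle mesh the way autoregressive mesh generators typically do: quantize each coordinate to one of 1024 levels, order the faces, and emit 9 tokens per triangle (3 vertices × 3 coordinates). The sorting rule, the [-1, 1] coordinate range and the function names are assumptions for illustration, not Meshtron's published tokenizer. The fixed 9-token period is the kind of regularity the hourglass and sliding-window components exploit, and `truncated_segments` mimics training on face-aligned chunks instead of whole sequences.

```python
from typing import List, Tuple

Vertex = Tuple[float, float, float]
Face = Tuple[Vertex, Vertex, Vertex]
TOKENS_PER_FACE = 9  # 3 vertices x 3 coordinates


def quantize(v: Vertex, levels: int = 1024) -> Tuple[int, ...]:
    # Map each coordinate from [-1, 1] to an integer bin in 0..levels-1.
    return tuple(min(levels - 1, int((c + 1.0) / 2.0 * levels)) for c in v)


def tokenize(faces: List[Face], levels: int = 1024) -> List[int]:
    # Assumed ordering: sort vertices inside each face, then sort the faces.
    qfaces = sorted(tuple(sorted(quantize(v, levels) for v in face)) for face in faces)
    return [coord for face in qfaces for vertex in face for coord in vertex]


def truncated_segments(tokens: List[int], faces_per_segment: int) -> List[List[int]]:
    # Split a long sequence into chunks that start and end on face boundaries.
    size = TOKENS_PER_FACE * faces_per_segment
    return [tokens[i:i + size] for i in range(0, len(tokens), size)]


faces = [((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)),
         ((0.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, -1.0))]
tokens = tokenize(faces)
```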

🗞️ "Phi-4 Technical Report"

Phi-4 from @Microsoft shows again that smarter data beats bigger models in the AI race.

Achieves remarkable performance by focusing on synthetic data quality and innovative training techniques rather than increasing model size.

phi-4 is a 14-billion-parameter model trained with a strong focus on data quality, using high-quality synthetic data throughout training.

Key innovation in this Paper 🔧:

→ The model uses multi-agent prompting, self-revision workflows, and instruction reversal to construct diverse datasets.

→ It employs a novel Pivotal Token Search method to identify critical decision points during generation.

→ A two-stage Direct Preference Optimization process refines the model's outputs.

Key Insights 💡:

→ Data quality and targeted synthetic generation can outperform raw parameter scaling

→ Multi-stage training with carefully curated data mixtures improves model reliability

→ Strategic post-training techniques can significantly enhance reasoning capabilities

Key Performance Numbers 📊:

→ Outperforms its teacher model, GPT-4o, on the GPQA and MATH benchmarks

→ Achieves 84.8% on MMLU, 80.4% on MATH, and 82.6% on HumanEval

→ Performs comparably to much larger models on reasoning tasks

🔬 One of the key innovative methods used in @Microsoft Phi-4 is Pivotal Token Search

It is a post-training technique for generating preference data: instead of comparing whole responses, it pinpoints the individual tokens that decide whether a response ends up correct.

→ For each prefix of a model response, the method considers the probability that continuing from that prefix leads to a correct answer

→ Tokens that sharply raise or lower this probability are called "pivotal tokens"; the report observes that overall correctness often hinges on a small number of them

🧮 How It Works

→ The search recursively splits a response into segments, estimating the success probability at segment boundaries by sampling completions, and only descends into segments where that probability changes by more than a threshold

→ Correctness is checked automatically, so the approach targets tasks with verifiable answers such as math problems

→ Each pivotal token becomes a Direct Preference Optimization (DPO) pair: the same prefix followed by a token that raises the success probability versus one that lowers it

⚡ Why It Matters

→ Full-response DPO pairs contain many tokens that barely affect the outcome, which dilutes the training signal

→ Single-token pairs concentrate the preference signal on the decisions that actually determine correctness

🔄 Where It Fits

→ PTS changes the training data, not the model architecture or the inference procedure

→ phi-4 uses PTS-generated pairs in the first of its two DPO stages
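Pivotal Token Search, as the phi-4 report describes it, looks for tokens where the estimated probability of eventually producing a correct answer jumps or drops, narrowing in on them by recursively splitting the response. In this minimal sketch, `p_success` is a hypothetical callable standing in for the sampling-based estimate, the 0.2 threshold is an arbitrary assumption, and only the search is shown (no DPO pairs are built). Like any pruning of this kind, it skips a segment whose internal rise and fall cancel out.

```python
from typing import Callable, List, Sequence, Tuple

SuccessProb = Callable[[Sequence[str]], float]  # prefix -> P(correct final answer)


def pivotal_tokens(tokens: Sequence[str], p_success: SuccessProb,
                   threshold: float = 0.2) -> List[Tuple[int, float]]:
    """Return (index, change in success probability) for each pivotal token."""
    found: List[Tuple[int, float]] = []

    def subdivide(lo: int, hi: int) -> None:
        delta = p_success(tokens[:hi]) - p_success(tokens[:lo])
        if abs(delta) < threshold:
            return                      # segment barely moves the outcome: skip it
        if hi - lo == 1:
            found.append((lo, delta))   # a single token causes the jump
            return
        mid = (lo + hi) // 2
        subdivide(lo, mid)
        subdivide(mid, hi)

    subdivide(0, len(tokens))
    return found


# Toy oracle: success probability indexed by prefix length (made-up numbers).
probs = [0.5, 0.5, 0.5, 0.5, 0.9, 0.9, 0.9, 0.1]
tokens = ["Let", "x", "=", "3", ",", "so", "7"]
result = pivotal_tokens(tokens, lambda prefix: probs[len(prefix)])
```

Here token 3 raises the success probability and token 6 collapses it; only those two positions would need preference pairs, and whole segments with no change are never refined.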

🗞️ "ProcessBench: Identifying Process Errors in Mathematical Reasoning"

ProcessBench is a benchmark for measuring how well models identify errors in LLM mathematical reasoning, with 3,400 test cases whose error locations are annotated by experts, focusing on competition- and Olympiad-level problems.

🔧 ProcessBench introduced in this Paper:

→ ProcessBench evaluates models' ability to identify the earliest error in step-by-step mathematical solutions.

→ The benchmark contains 3,400 test cases, primarily covering competition and Olympiad-level problems.

→ Human experts annotate error locations in solutions generated by various open-source models.

→ Two types of models are evaluated: Process Reward Models (PRMs) and critic models.
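ProcessBench reports an F1 score. A minimal sketch, assuming that score is the harmonic mean of two accuracies: on erroneous solutions (the predicted earliest-error step must match the annotation exactly) and on error-free solutions (the model must flag no step). Encoding "no error" as -1 and the function name are illustrative choices.

```python
from typing import List


def processbench_f1(labels: List[int], preds: List[int]) -> float:
    """labels/preds: index of the earliest erroneous step, or -1 if there is none."""
    erroneous = [(l, p) for l, p in zip(labels, preds) if l != -1]
    correct = [(l, p) for l, p in zip(labels, preds) if l == -1]
    acc_err = sum(l == p for l, p in erroneous) / len(erroneous)
    acc_ok = sum(l == p for l, p in correct) / len(correct)
    if acc_err + acc_ok == 0:
        return 0.0
    return 2 * acc_err * acc_ok / (acc_err + acc_ok)


# Two flawed solutions (errors at steps 2 and 0) and two correct ones.
score = processbench_f1(labels=[2, 0, -1, -1], preds=[2, 1, -1, 3])
```

The harmonic mean punishes a model that simply flags an error everywhere (or nowhere), since one of the two accuracies would then drop to zero.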

🎯 Key Insights:

→ Current process reward models (PRMs) struggle to generalize beyond simple math problems

→ General LLMs show better error identification capabilities than specialized PRMs

→ QwQ-32B-Preview matches GPT-4o in critique capabilities but lags behind o1-mini

📊 Results:

→ Best open-source model (QwQ-32B-Preview) achieves 71.5% F1 score

→ Proprietary model o1-mini leads with 87.9% F1 score

→ Process reward models (PRMs) show significant performance drop on Olympiad-level problems

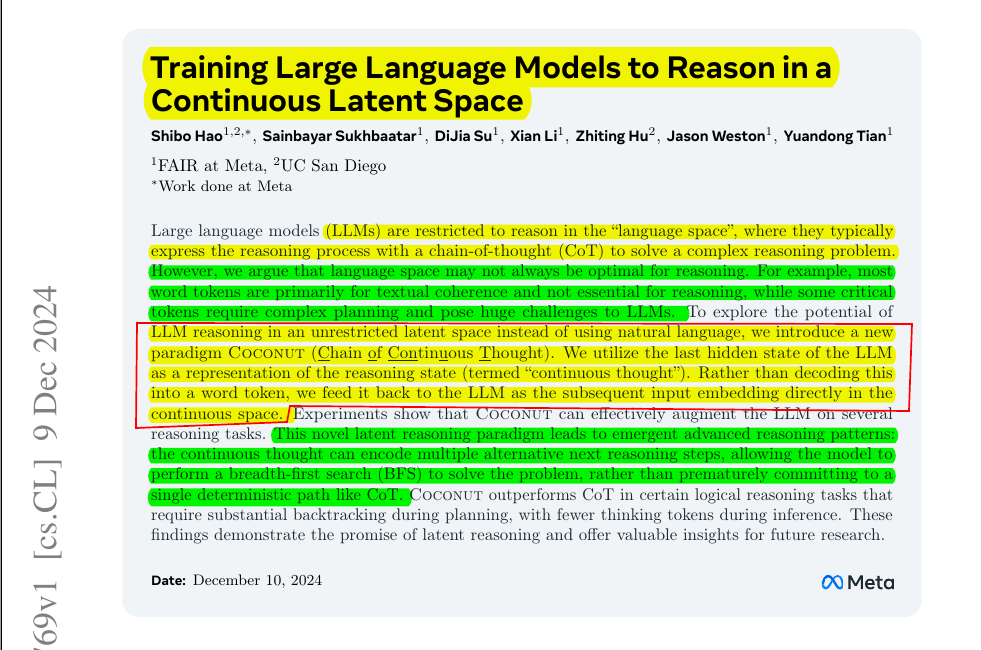

🗞️ "Training Large Language Models to Reason in a Continuous Latent Space" - Meta Research

A groundbreaking paper from @Meta that could significantly boost LLMs' reasoning power.

Why force AI to explain in English when it can think directly in neural patterns?

Imagine if your brain could skip words and share thoughts directly - that's what this paper achieves for AI.

By skipping the word-generation step, LLMs can explore multiple reasoning paths simultaneously.

Introduces Coconut (Chain of Continuous Thought), enabling LLMs to reason in a continuous latent space rather than through word tokens, leading to more efficient and powerful reasoning capabilities.

🧠 The key Solution in this paper

Current LLMs are constrained by having to express their reasoning through language tokens, where most tokens serve textual coherence rather than actual reasoning.

So this paper proposes a novel solution: instead of decoding the hidden state into a word token, the model feeds it directly back as the next input embedding, staying in continuous space.

Let me explain the mechanism simply:

In normal LLMs, when the model thinks, it has to:

1. Convert its internal neural state into actual words

2. Then convert those words back into neural patterns to continue thinking

What Coconut does instead:

It directly takes the neural patterns (hidden state) from one thinking step and feeds them into the next step - no conversion to words needed. It's like letting the model's thoughts flow directly from one step to the next in their raw neural form.

Think of it like this: Instead of having to write down your thoughts on paper and then read them back to continue thinking (like regular LLMs do), Coconut lets the model's thoughts continue flowing naturally in their original neural format. This is more efficient and lets the model explore multiple possible thought paths at once.

The method uses special tokens <bot> and <eot> to mark latent reasoning segments, and employs a multi-stage training curriculum that gradually replaces language reasoning steps with continuous thoughts.
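The difference between the two modes fits in a short toy. `toy_forward` is a hypothetical stand-in for an LLM forward pass that returns the last hidden state, and the embedding table, `<bot>`/`<eot>` vectors and dimensions are made up; only the control flow reflects the mechanism described above, and the multi-stage training curriculum is not modeled. In chain-of-thought mode each step is decoded to a token and re-embedded, while in Coconut mode the hidden state itself becomes the next input between the `<bot>` and `<eot>` markers.

```python
import math
from typing import List

Vec = List[float]


def toy_forward(inputs: List[Vec]) -> Vec:
    # Hypothetical "model": last hidden state = squashed mean of the inputs.
    dim = len(inputs[0])
    mean = [sum(v[i] for v in inputs) / len(inputs) for i in range(dim)]
    return [math.tanh(2.0 * m + 0.1 * len(inputs)) for m in mean]


def chain_of_thought(prompt: List[Vec], embed: List[Vec], steps: int) -> List[Vec]:
    seq = list(prompt)
    for _ in range(steps):
        h = toy_forward(seq)
        # Decode: pick the vocabulary entry whose embedding best matches h ...
        scores = [sum(e_i * h_i for e_i, h_i in zip(e, h)) for e in embed]
        token = max(range(len(embed)), key=lambda t: scores[t])
        # ... then re-embed that discrete token as the next input.
        seq.append(embed[token])
    return seq


def coconut(prompt: List[Vec], bot: Vec, eot: Vec, num_thoughts: int) -> List[Vec]:
    seq = list(prompt) + [bot]            # <bot>: start of latent reasoning
    for _ in range(num_thoughts):
        seq.append(toy_forward(seq))      # continuous thought: hidden state fed back as-is
    seq.append(eot)                       # <eot>: switch back to language mode
    return seq


prompt = [[0.2, -0.1], [0.4, 0.3]]
embed = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
latent = coconut(prompt, bot=[0.0, 0.5], eot=[0.5, 0.0], num_thoughts=3)
cot = chain_of_thought(prompt, embed, steps=3)
```

Every chain-of-thought input is forced back onto one of the three vocabulary vectors, while the continuous thoughts keep whatever information the hidden state carried.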

Key insights of the paper:

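→ Coconut achieves 34.1% accuracy on GSM8k math problems, outperforming the implicit Chain-of-Thought baseline iCoT (30.0%), though standard Chain-of-Thought still scores higher on GSM8k while generating more tokens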

→ The continuous space enables parallel exploration of multiple reasoning paths, similar to breadth-first search

→ Performance improves with more continuous thoughts per reasoning step, showing effective chaining capability

→ Latent reasoning excels in tasks requiring extensive planning, with 97% accuracy on logical reasoning (ProsQA)

🗞️ "Transformers Struggle to Learn to Search"

This paper investigates whether transformers can learn robust search capabilities by training them on graph connectivity problems.

It reveals that transformers can learn search algorithms when given the right training distribution, but struggle as input size increases.

🔍 Original Problem:

→ LLMs struggle with search tasks, but it's unclear if this is due to lack of data, insufficient parameters, or architectural limitations.

→ Previous work shows LLMs have difficulty with proof search and planning, even with chain-of-thought prompting.

🛠️ Solution in this Paper:

→ The researchers used directed acyclic graphs (DAGs) as a testbed to generate unlimited training data.

→ They developed a novel mechanistic interpretability technique to extract computation graphs from trained models.

→ The model learns to compute reachable vertex sets for each vertex in the input graph.

→ Each transformer layer progressively expands these sets, enabling search over a number of vertices exponential in the layer count.
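The layer-by-layer expansion can be mimicked with plain sets. In this simplified analogue (not the trained transformer, and with a hypothetical function name), each "layer" replaces a vertex's reachable set with the union of the sets of the vertices it already reaches, so the longest path covered doubles per layer: L layers cover paths of length up to 2^L.

```python
from typing import Dict, Set


def layerwise_reachability(edges: Dict[int, Set[int]], layers: int) -> Dict[int, Set[int]]:
    """edges must list every vertex as a key (sinks map to an empty set)."""
    # Before any layer: each vertex knows itself and its direct successors.
    reach = {v: {v} | set(succ) for v, succ in edges.items()}
    for _ in range(layers):
        # Merge the sets of everything already reachable: path length doubles.
        reach = {v: set().union(*(reach[u] for u in reach[v])) for v in reach}
    return reach


# A 9-vertex chain 0 -> 1 -> ... -> 8 needs log2(8) = 3 layers.
chain = {i: {i + 1} for i in range(8)}
chain[8] = set()
```

With a fixed number of layers this only covers graphs up to a fixed depth, which is one way to read why larger input graphs are harder for a fixed-size model.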

💡 Key Insights:

→ Transformers can learn search when given carefully constructed training distributions

→ The model struggles more as input graph size increases

→ Increasing model parameters does not resolve the difficulty

→ In-context exploration (chain-of-thought) doesn't help with larger graphs

📊 Results:

→ Trained on a carefully chosen distribution, the model reaches 100% accuracy on that distribution

→ Performance degrades exponentially with increasing input graph size

→ Adding model parameters doesn't improve scaling behavior

→ Search capability doesn't emerge naturally with increased scale

🗞️ "Understanding Gradient Descent through the Training Jacobian"

Training a neural network actually changes parameters along only a small subspace of directions, leaving most directions in parameter space virtually unchanged.

The paper introduces a novel way to understand neural network training by analyzing how final parameters relate to their initial values through the training Jacobian matrix.

🤔 Original Problem:

How neural networks learn during training remains largely a black box, particularly which parameter changes matter most and how training operates in high-dimensional space.

🔬 Solution in this Paper:

→ The researchers examine the Jacobian matrix of trained network parameters with respect to their initial values.

→ They discovered the singular value spectrum of this Jacobian has three distinct regions: chaotic (values > 1), bulk (values ≈ 1), and stable (values < 1).

→ Perturbations along the bulk directions, which span about two-thirds of parameter space, pass through training virtually unchanged.

→ These bulk directions don't affect in-distribution predictions but significantly impact out-of-distribution behavior.
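The training Jacobian can be computed numerically for a tiny model. This sketch (hypothetical function names) trains a 3-parameter linear model by full-batch gradient descent on a single input that only touches the first parameter, and estimates d(final parameters)/d(initial parameters) by finite differences. The two directions the data never touches keep singular value 1, a toy version of the bulk, and because the loss is quadratic the Jacobian does not depend on the labels at all. This toy has no chaotic direction; it only illustrates the stable and bulk cases.

```python
from typing import List

Matrix = List[List[float]]


def train_linear(theta0: List[float], xs: Matrix, ys: List[float],
                 lr: float = 0.1, steps: int = 200) -> List[float]:
    """Full-batch gradient descent on mean squared error for a linear model."""
    theta = list(theta0)
    n = len(xs)
    for _ in range(steps):
        grad = [0.0] * len(theta)
        for x, y in zip(xs, ys):
            err = sum(t * xi for t, xi in zip(theta, x)) - y
            for j, xj in enumerate(x):
                grad[j] += 2.0 * err * xj / n
        theta = [t - lr * g for t, g in zip(theta, grad)]
    return theta


def training_jacobian(theta0: List[float], xs: Matrix, ys: List[float],
                      eps: float = 1e-6, **kw) -> Matrix:
    """Finite-difference estimate of J[i][j] = d theta_final[i] / d theta_initial[j]."""
    base = train_linear(theta0, xs, ys, **kw)
    cols = []
    for j in range(len(theta0)):
        bumped = list(theta0)
        bumped[j] += eps
        out = train_linear(bumped, xs, ys, **kw)
        cols.append([(o - b) / eps for o, b in zip(out, base)])
    return [list(row) for row in zip(*cols)]  # transpose columns into rows


xs = [[1.0, 0.0, 0.0]]
theta0 = [0.5, 0.3, -0.2]
J = training_jacobian(theta0, xs, [2.0])
# J is approximately [[0, 0, 0], [0, 1, 0], [0, 0, 1]]
```

Adding a second input that touches another parameter would move one more direction out of the bulk, matching the observation that the bulk depends on the input data.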

🎯 Key Insights:

→ Training is intrinsically low-dimensional, with most parameter changes happening in a small subspace

→ The bulk subspace is independent of initialization and labels but depends strongly on input data

→ Training linearization remains valid much longer along bulk directions than chaotic ones

→ The bulk overlaps significantly with the nullspace of the parameter-function Jacobian on test data

📊 Results:

→ ~3000 out of 4810 singular values are extremely close to one

→ Bulk directions show near-perfect linear behavior across 7 orders of magnitude

→ Training restricted to the bulk complement performs similarly to unconstrained training

→ Bulk subspaces from different random seeds show high similarity (much higher than random chance)