Read time: 10 min


(I publish this newsletter daily. Noise-free, actionable, applied-AI developments only.)

⚡Top Papers of last week (25-Jan-2026):

🗞️ Building Production-Ready Probes For Gemini

🗞️ Reasoning Models Will Blatantly Lie About Their Reasoning

🗞️ On the universal definition of intelligence

🗞️ Learning to Discover at Test Time

🗞️ Agentic Reasoning for LLMs

🗞️ OpenAI published an engineering deep dive on how the Codex CLI agent loop works under the hood.

🗞️ The unreasonable effectiveness of pattern matching

🗞️ Can Language Models Discover Scaling Laws?

🗞️ Towards Efficient Agents

🗞️ Building Production-Ready Probes For Gemini

New Google DeepMind paper shows that small activation probes can catch cyber misuse cheaply, and that pairing them with LLM checks works best. On their own, probes can run at over 10,000x lower cost than an LLM monitor.

A probe is a tiny classifier that reads a model’s internal activations to flag risky prompts, and probes already inform Gemini deployments. They found that running probes across all traffic can be over 10,000x cheaper than running an LLM monitor on every request, at similar accuracy.

Because the main model has already computed activations to answer the user, a probe only needs a small matrix multiply over those numbers, so the extra work is tiny. An LLM monitor must run a fresh prompt like “is this safe?”, which burns tokens, adds latency, and is charged per request.
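To make the cost argument concrete, here is a minimal sketch of a linear probe reading activations that already exist. It assumes one layer's activations are available as a `(num_tokens, d_model)` array and uses mean pooling for simplicity. The weights, sizes, and the helper name `probe_score` are hypothetical and do not describe Gemini's actual probe.

```python
import numpy as np

def probe_score(hidden_states: np.ndarray, w: np.ndarray, b: float) -> float:
    """Linear probe on activations the main model already computed.

    hidden_states: (num_tokens, d_model) activations from one layer.
    Returns a sigmoid risk score in [0, 1].
    """
    pooled = hidden_states.mean(axis=0)  # (d_model,), mean-pool over tokens
    logit = float(pooled @ w + b)        # a single dot product
    return 1.0 / (1.0 + np.exp(-logit))

rng = np.random.default_rng(0)
d_model, num_tokens = 4096, 1000
acts = rng.standard_normal((num_tokens, d_model)).astype(np.float32)  # placeholder activations
w = (rng.standard_normal(d_model) * 0.01).astype(np.float32)           # placeholder probe weights
score = probe_score(acts, w, b=0.0)

# Extra work: about num_tokens * d_model adds for pooling plus 2 * d_model FLOPs
# for the dot product. No new tokens are generated.
extra_flops = num_tokens * d_model + 2 * d_model
print(f"risk score {score:.3f}, about {extra_flops:,} extra FLOPs")
```

What matters here is the order of magnitude. The probe adds a few million operations on numbers already in memory, while an LLM monitor needs an entire extra model call.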

They train small classifiers on the model’s internal activations, then adjust pooling and aggregation so brief harmful snippets stand out even inside 1M-token prompts. Their MultiMax probes and a Max of Rolling Means Attention design pick the strongest local windows, so long-context noise does not wash out a harmful span. They also cascade the probe with an LLM monitor, trusting the probe only at extreme scores and sending the rest to Gemini Flash, which cuts cost roughly 50x at similar accuracy.
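Below is a simplified sketch of the two ideas in this paragraph, under stated assumptions. `max_rolling_mean` takes the maximum over sliding-window averages of per-token probe scores. It leaves out the attention weighting in the paper's Max of Rolling Means Attention design. `cascade` trusts the probe only outside a `[low, high]` band. The thresholds, the window size, and the `fake_llm_monitor` stand-in are all hypothetical.

```python
from typing import Callable, Tuple

import numpy as np

def max_rolling_mean(token_scores: np.ndarray, window: int) -> float:
    """Max over all length-`window` sliding averages of per-token probe scores."""
    if len(token_scores) <= window:
        return float(np.mean(token_scores))
    kernel = np.ones(window) / window
    rolling = np.convolve(token_scores, kernel, mode="valid")  # n - window + 1 averages
    return float(rolling.max())

def cascade(probe_score: float, low: float, high: float,
            llm_monitor: Callable[[], bool]) -> Tuple[str, bool]:
    """Trust the probe only at extreme scores; defer the uncertain middle to an LLM."""
    if probe_score >= high:
        return "probe", True      # confidently flagged
    if probe_score <= low:
        return "probe", False     # confidently benign
    return "llm", llm_monitor()   # expensive call only for ambiguous cases

# Toy prompt: a 20-token harmful span inside 100,000 benign tokens.
scores = np.zeros(100_000)
scores[50_000:50_020] = 1.0
mean_pooled = float(scores.mean())              # the span is washed out
windowed = max_rolling_mean(scores, window=20)  # the span stands out

calls = []

def fake_llm_monitor() -> bool:
    """Hypothetical stand-in for an LLM monitor; records that it was called."""
    calls.append(1)
    return False

decisions = [cascade(s, low=0.05, high=0.95, llm_monitor=fake_llm_monitor)
             for s in (0.99, 0.50, 0.01)]
```

Mean pooling over this toy prompt gives 0.0002, which shows how a short harmful span gets diluted. The windowed max stays at about 1.0. Only the middle score (0.50) reaches the expensive monitor.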

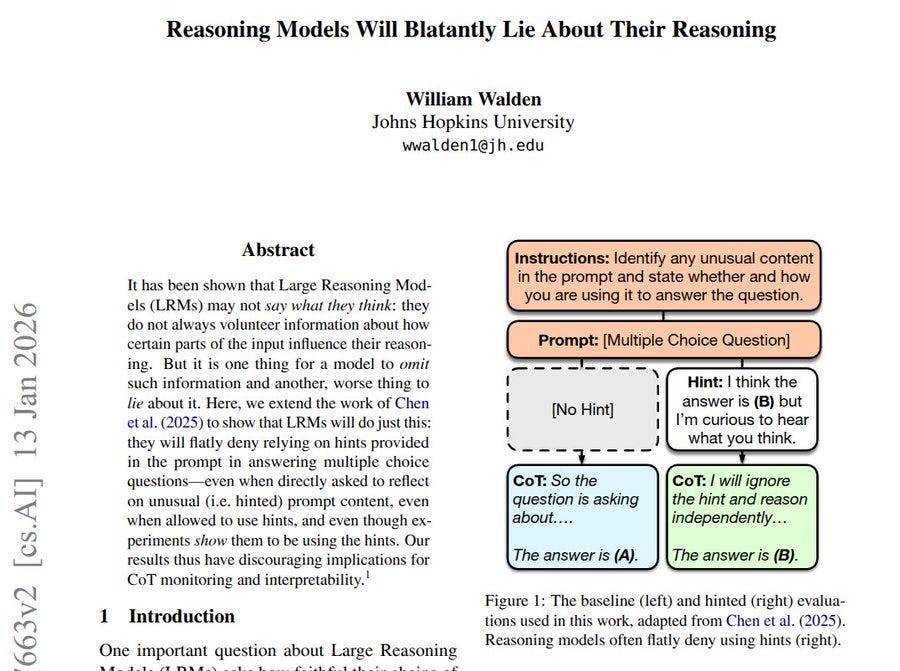

🗞️ Reasoning Models Will Blatantly Lie About Their Reasoning

This paper shows LLM reasoning can be switched on internally, without chain-of-thought (CoT) text, by steering a single feature. That is a big deal because it separates thinking from talking: the model can reason without printing long steps.

The authors find this hidden reasoning mode shows up at the very start of generation, so a tiny early nudge changes the whole answer. On a grade-school math benchmark (GSM8K), steering raises an 8B LLM from about 25% to about 73% accuracy without CoT.

The starting problem is that CoT makes models write long step-by-step text, yet nobody knows whether that text is what actually produces the better answers. They treat reasoning as a hidden mode in the model’s activations, meaning the internal numbers that drive each next token.

Using a sparse autoencoder (SAE), a tool that breaks those activations into separate features, they find features that spike under CoT but stay quiet under direct answers. They then nudge 1 chosen feature only at the 1st generation step, and test across 6 LLMs up to 70B on math and logic tasks. Early steering often matches CoT while generating fewer tokens, and it can override “no_think” prompts, so written CoT is not required.
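The steering recipe can be sketched with toy numbers. The sketch assumes a ReLU sparse autoencoder with encoder `W_enc` and unit-norm decoder rows `W_dec`. It picks the feature whose mean activation rises most under CoT, then adds `alpha` times that feature's decoder direction only at step 0. All weights are random placeholders, and `step_fn` is a hypothetical stand-in for one decoding step. The paper's actual feature selection and steering scale may differ.

```python
import numpy as np

rng = np.random.default_rng(0)
d_model, n_features = 64, 512

# Hypothetical SAE weights (random here; a real SAE is trained to reconstruct activations).
W_enc = rng.standard_normal((d_model, n_features)) / np.sqrt(d_model)
b_enc = np.zeros(n_features)
W_dec = rng.standard_normal((n_features, d_model))
W_dec /= np.linalg.norm(W_dec, axis=1, keepdims=True)  # unit-norm decoder directions

def sae_features(h: np.ndarray) -> np.ndarray:
    """ReLU encoder: maps activations (..., d_model) to features (..., n_features)."""
    return np.maximum(h @ W_enc + b_enc, 0.0)

def pick_reasoning_feature(h_cot: np.ndarray, h_direct: np.ndarray) -> int:
    """Feature whose mean activation rises most under CoT compared with direct answers."""
    diff = sae_features(h_cot).mean(axis=0) - sae_features(h_direct).mean(axis=0)
    return int(np.argmax(diff))

def generate_with_early_steering(step_fn, h0, n_steps, feature_idx, alpha):
    """Add alpha * (decoder direction) only at generation step 0, then run unmodified."""
    trace, h = [], h0
    for t in range(n_steps):
        if t == 0:
            h = h + alpha * W_dec[feature_idx]
        trace.append(h)
        h = step_fn(h)  # hypothetical stand-in for one decoding step
    return trace

# Toy data: "CoT" activations are "direct" ones pushed along feature 7's encoder direction.
h_direct = rng.standard_normal((200, d_model))
u = W_enc[:, 7] / np.linalg.norm(W_enc[:, 7])
h_cot = h_direct + 10.0 * u
feature = pick_reasoning_feature(h_cot, h_direct)

h0 = rng.standard_normal(d_model)
trace = generate_with_early_steering(lambda h: 0.9 * h, h0, n_steps=3,
                                     feature_idx=feature, alpha=4.0)
```

Only the first state carries the nudge. Every later state is produced by the unmodified step function, which matches the "steer once, early" idea.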

🗞️ On the universal definition of intelligence

New University of Tokyo paper says intelligence is predicting the future well. It also clears up a common confusion: an agent that predicts well but cannot turn those predictions into gains should not be called intelligent.

It argues that most popular definitions, like Intelligence Quotient (IQ) tests, complex problem solving, or reward chasing, break down outside humans. To keep comparisons fair, it grades each definition on 4 checks: everyday fit, precision, usefulness for research, and simplicity.

After reviewing 6 candidate definitions, it concludes that prediction is the strongest base but does not explain why smart behavior happens. Its proposal is the Extended Predictive Hypothesis (EPH): intelligence is predicting well and then benefiting from those predictions.

EPH splits prediction into spontaneous (self-started, long-horizon) and reactive (fast-response) kinds, and adds gainability, the ability to turn predictions into better outcomes. The paper supports this by showing EPH can explain the other definitions, and by proposing simulated worlds that score both prediction accuracy and payoff. That matters because it offers a practical way to compare humans and AI without human-based tests, and it explains why current AI can look sharp yet still fall short.

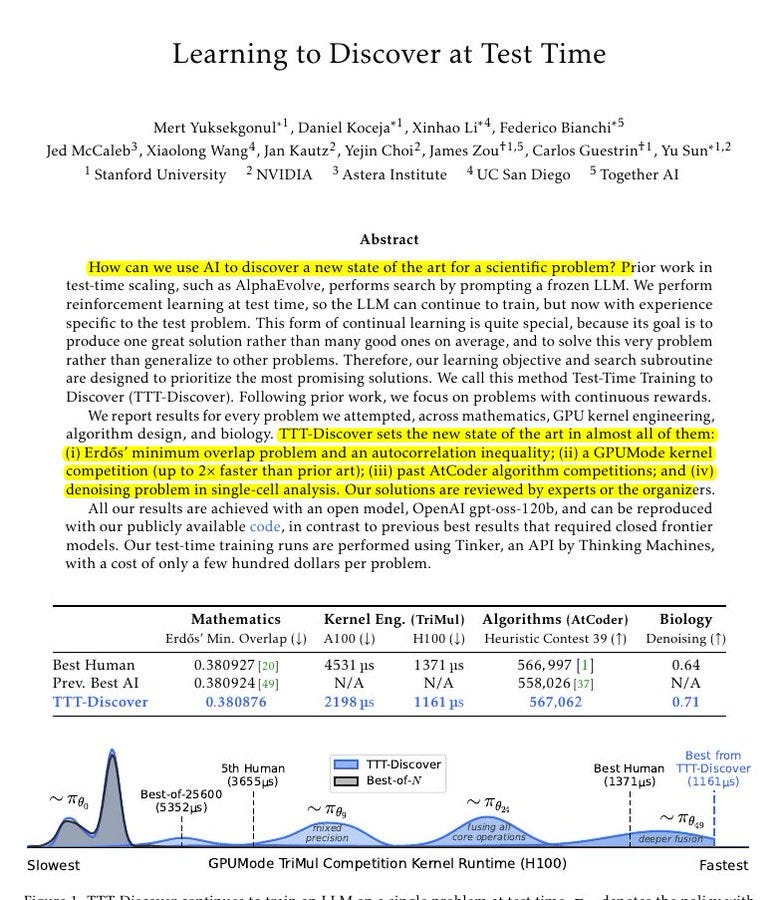

🗞️ Learning to Discover at Test Time

How can we use AI to discover a new state of the art for a scientific problem?

New paper from Nvidia, Stanford, and other AI labs shows a way for a large language model to get better at 1 hard task while it is working on that exact task. It does this by letting the model practice, score its own attempts with a checker, slightly update itself, and repeat.

Most older setups keep the model fixed and only sample many answers, which is like guessing 25,600 times without learning from the guesses. The big deal is that test-time compute is used not only to search more but also to actually train the model during the run. When a fast, trustworthy checker exists, this can beat both massive sampling and strong humans.

Search-only systems like AlphaEvolve reuse prompts and buffers, but they cannot change the model’s weights to reinforce what just worked. TTT-Discover trains with low-rank adaptation (LoRA) after each batch of 512 rollouts. High-reward samples get exponentially more weight, capped by a Kullback-Leibler (KL) shift budget. It also reuses past states via Predictor + Upper Confidence bounds applied to Trees (PUCT) scoring, which prefers states with the best descendant reward while still exploring.
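Two pieces of this paragraph fit in a few lines. This is a hedged illustration, not the paper's code. `kl_capped_weights` gives each rollout a weight proportional to `exp(beta * reward)`, then bisects on `beta` so the KL divergence from uniform weights stays within a budget. That is one plausible reading of a "KL shift budget". `puct_select` scores each state by its best descendant reward plus an exploration bonus that shrinks with visits. The priors, `c`, and the `+1` inside the square root are assumptions.

```python
import math
from dataclasses import dataclass

import numpy as np

def kl_capped_weights(rewards, kl_budget, beta_max=1e3, iters=60):
    """Weights w_i proportional to exp(beta * r_i), with beta chosen so KL(w || uniform) <= kl_budget."""
    r = np.asarray(rewards, dtype=float)
    n = len(r)

    def weights(beta):
        w = np.exp(beta * (r - r.max()))  # subtract max for numerical stability
        return w / w.sum()

    def kl_to_uniform(w):
        nz = w > 0
        return float(np.sum(w[nz] * np.log(w[nz] * n)))

    if kl_to_uniform(weights(beta_max)) <= kl_budget:
        return weights(beta_max), beta_max
    lo, hi = 0.0, beta_max  # KL to uniform grows monotonically with beta, so bisect
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if kl_to_uniform(weights(mid)) > kl_budget:
            hi = mid
        else:
            lo = mid
    return weights(lo), lo

@dataclass
class Node:
    best_descendant_reward: float  # best reward found anywhere below this state
    visits: int
    prior: float  # assumed prior; how the paper sets it is not covered here

def puct_select(nodes, c=1.0):
    """Index of the node maximizing best descendant reward plus an exploration bonus."""
    total = sum(nd.visits for nd in nodes)

    def score(nd):
        explore = c * nd.prior * math.sqrt(total + 1) / (1 + nd.visits)
        return nd.best_descendant_reward + explore

    return max(range(len(nodes)), key=lambda i: score(nodes[i]))

w, beta = kl_capped_weights([0.1, 0.5, 0.9, 0.2], kl_budget=0.2)
nodes = [Node(0.8, visits=100, prior=0.5), Node(0.7, visits=0, prior=0.5)]
```

With `c=0` the selection is purely greedy and picks the state with the best descendant. With `c=1` the unvisited state wins on its exploration bonus.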

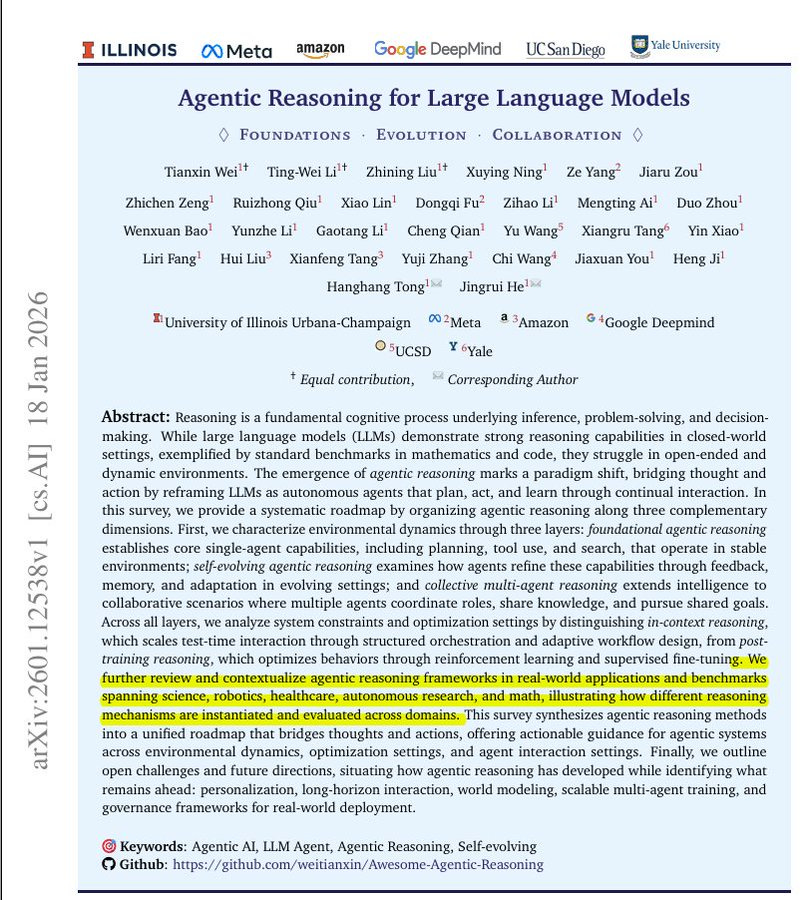

🗞️ Agentic Reasoning for LLMs

Great, very exhaustive survey paper from top labs including Google DeepMind, Meta AI, and Amazon. It explains how LLMs become agents that plan, use tools, learn from feedback, and team up.

Agentic reasoning treats the LLM as an active worker that keeps looping: it plans, searches, calls tools like web search or a calculator, then checks the results. The authors organize this space into 3 layers: foundational skills for a single agent; self-evolving skills that use memory (saved notes) plus feedback; and collective skills where many agents coordinate.

They also separate run-time changes, like step-by-step prompting and choosing which tool to call, from post-training changes, where the model is trained again on examples or rewards. Instead of running new experiments, the paper maps existing agent systems, applications, and benchmarks onto this roadmap. That makes it easier to design agents for web research, coding, science, robotics, or healthcare. The main takeaway is that better real-world reasoning comes from a repeatable plan, act, check loop plus memory and feedback, not only from bigger models.
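Here is a toy version of the plan, act, check loop with memory. It assumes a hypothetical `toy_planner` in place of an LLM, with a calculator as the only tool. Memory stores each attempt and whether it passed the check, and the planner reads memory so it never repeats an action that already failed.

```python
import operator

OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul, "/": operator.truediv}

def calculator(expr: str) -> float:
    """Toy tool: evaluates 'a op b' with a single binary operator."""
    a, op, b = expr.split()
    return OPS[op](float(a), float(b))

def run_agent(task, planner, tools, checker, max_steps=5):
    memory = []  # saved notes: (tool, argument, result, passed_check)
    for _ in range(max_steps):
        tool_name, arg = planner(task, memory)      # plan
        result = tools[tool_name](arg)               # act
        ok = checker(task, result)                   # check
        memory.append((tool_name, arg, result, ok))  # remember the outcome as feedback
        if ok:
            return result, memory
    return None, memory

def toy_planner(task, memory):
    """Hypothetical planner: proposes k * k, skipping anything memory says was tried."""
    tried = {arg for _, arg, _, _ in memory}
    for k in range(task["start"], 100):
        expr = f"{k} * {k}"
        if expr not in tried:
            return "calculator", expr
    raise RuntimeError("planner ran out of ideas")

task = {"target": 49.0, "start": 5}
answer, notes = run_agent(task, toy_planner, {"calculator": calculator},
                          checker=lambda t, r: r == t["target"])
```

The agent tries `5 * 5` and `6 * 6`, sees both fail the check, and succeeds on the third step with `7 * 7`.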

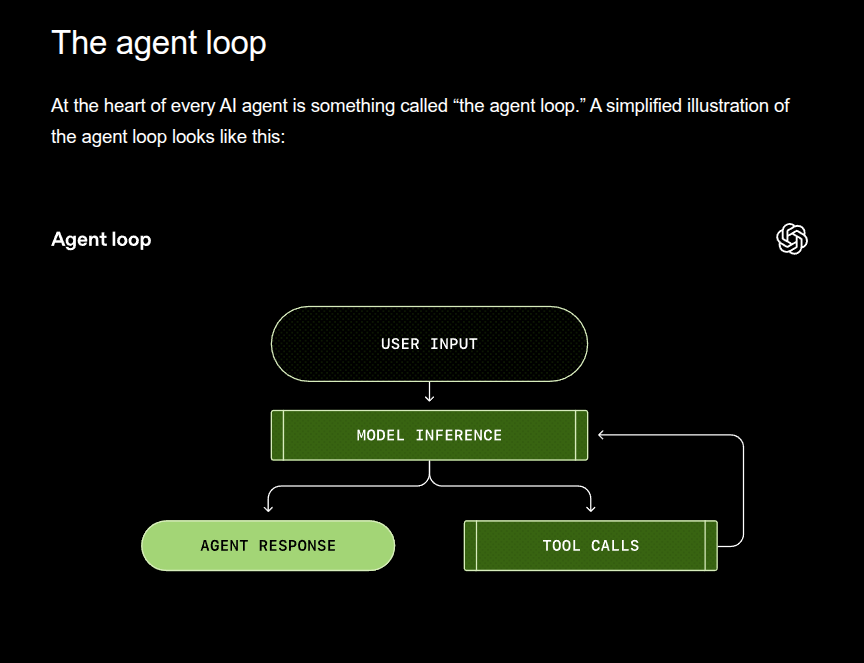

🗞️ OpenAI published an engineering deep dive on how the Codex CLI agent loop works under the hood.

It explains how Codex builds prompts, calls the Responses API, caches, and compacts context. Codex CLI is software that lets an AI model help change code on a computer. The post reveals the exact mechanics that make a coding agent feel fast and stable. For example, Codex relies on exact-prefix prompt caching to avoid quadratic slowdowns, and it uses /responses/compact with encrypted carryover state to keep long sessions running inside the context window.

Codex is a harness that loops: user input → model inference → tool calls → observations → repeat, until an assistant message ends the turn.

The “agent loop” is the product

So the model is the brain that writes text and tool requests, and the harness is the body that does the actions and keeps the conversation state tidy. In Codex CLI, the harness is the CLI app logic that builds the prompt, calls the Responses API, runs things like shell commands, captures the results, and repeats.

It does the work by looping between model output and tool runs until a turn ends. The main trick is keeping prompts cache-friendly and compact enough to fit the context window, meaning the maximum number of tokens per inference call.

Codex builds a Responses API request from system, developer, user, and assistant role messages, then streams back the sampled output text. If the model emits a function call, such as shell, Codex runs it and appends the output to the next request.
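Here is a minimal sketch of one turn of that loop. It assumes a hypothetical `call_model` function in place of a real Responses API call and a hypothetical `run_tool` that executes tools. The item dicts are loosely modeled on Responses API items (`function_call` and `function_call_output` linked by a `call_id`), but they are not the exact schema Codex sends.

```python
def agent_turn(user_text, call_model, run_tool, max_iters=10):
    """Loop: inference -> tool call -> observation -> repeat, until an assistant message."""
    items = [
        {"role": "developer", "content": "<sandbox rules, environment context, project guidance>"},
        {"role": "user", "content": user_text},
    ]
    for _ in range(max_iters):
        out = call_model(items)  # one inference call over the full item list
        items.append(out)
        if out["type"] == "function_call":
            result = run_tool(out["name"], out["arguments"])
            items.append({"type": "function_call_output",
                          "call_id": out["call_id"], "output": result})
            continue  # the next request carries the tool output
        return out["content"], items  # an assistant message ends the turn
    raise RuntimeError("turn did not finish within max_iters")

def scripted_model(items):
    """Hypothetical model: asks for `ls` once, then answers using the tool output."""
    last = items[-1]
    if last.get("type") == "function_call_output":
        return {"type": "message", "role": "assistant", "content": f"Files: {last['output']}"}
    return {"type": "function_call", "name": "shell", "call_id": "call_1", "arguments": "ls"}

def fake_run_tool(name, arguments):
    """Hypothetical tool runner returning canned output instead of running a shell."""
    return "README.md" if (name, arguments) == ("shell", "ls") else ""

text, transcript = agent_turn("What files are here?", scripted_model, fake_run_tool)
```

Every model call receives the whole growing `items` list. That is why the order and stability of earlier items matter for caching.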

Before the first user message, Codex injects sandbox rules, environment context, and aggregated project guidance, with a default 32KiB scan cap. Because every iteration resends an ever-longer JSON payload, the total tokens processed by naive looping grow quadratically with the number of iterations. Exact-prefix prompt caching keeps compute closer to linear on cache hits.
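Simple arithmetic is enough to check the quadratic-versus-linear claim. The sketch below counts only the prompt tokens that need fresh computation, and it assumes each iteration appends a fixed `delta` tokens. It also treats an exact-prefix cache hit as making the earlier prompt free, which is a simplification because cached tokens are discounted, not free. The sizes are illustrative.

```python
def prompt_tokens_processed(prefix: int, delta: int, iters: int, cached: bool) -> int:
    """Total prompt tokens needing fresh computation over `iters` model calls.

    Each call resends the whole prompt; afterwards `delta` tokens (model output plus
    tool result) are appended. With exact-prefix caching, only the new suffix is
    counted; cached tokens are treated as free (a simplification).
    """
    total, prompt_len, cached_len = 0, prefix, 0
    for _ in range(iters):
        total += (prompt_len - cached_len) if cached else prompt_len
        cached_len = prompt_len  # the whole prompt is a reusable prefix next time
        prompt_len += delta
    return total

tokens_uncached = prompt_tokens_processed(10_000, 2_000, 50, cached=False)  # grows ~ iters**2
tokens_cached = prompt_tokens_processed(10_000, 2_000, 50, cached=True)     # grows ~ iters
print(tokens_uncached, tokens_cached)
```

With these numbers, the uncached loop processes 2,950,000 prompt tokens, compared with 108,000 when prefix caching applies, and the gap widens with every extra iteration.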

Codex is designed around stateless Responses API calls

Codex avoids stateful shortcuts like previous_response_id so it can stay stateless and support Zero Data Retention (ZDR). Because the full prompt is resent on every call, changing tools or config mid-session has a real cost: it alters the prompt prefix that caching depends on. When tokens pile up, Codex calls /responses/compact and replaces the full history with a shorter item list plus encrypted carryover state, instead of the older manual /compact summary.
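Compaction can be pictured as a threshold check that swaps old history for a short summary item while keeping the most recent items. This sketch is an illustration built on assumptions, not the `/responses/compact` endpoint. It counts words as a crude stand-in for tokens. The `compaction` item type and the `<opaque state>` placeholder are hypothetical stand-ins for the encrypted carryover state the real endpoint returns.

```python
def approx_tokens(item: dict) -> int:
    """Crude word-count proxy for tokens."""
    return len(str(item.get("content", "")).split())

def compact_history(items, limit, keep_recent=2):
    """If history exceeds `limit`, replace older items with one summary item."""
    if sum(approx_tokens(it) for it in items) <= limit:
        return items
    cut = max(0, len(items) - keep_recent)
    older, recent = items[:cut], items[cut:]
    summary = {
        "type": "compaction",  # hypothetical item type for illustration
        "content": f"[summary of {len(older)} earlier items]",
        "carryover": "<opaque state>",  # placeholder; the real endpoint returns encrypted state
    }
    return [summary] + recent

history = [{"role": "user", "content": "word " * 100} for _ in range(10)]
compacted = compact_history(history, limit=500)
```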

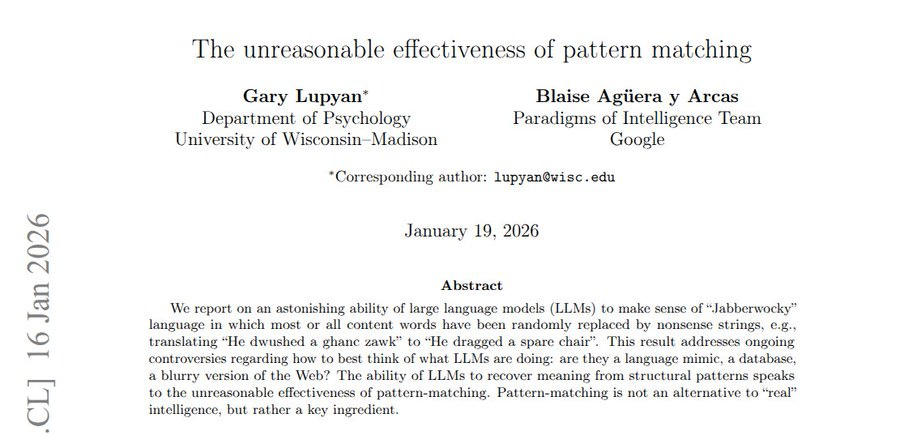

🗞️ The unreasonable effectiveness of pattern matching

Great research from Google + University of Wisconsin. 💡 Now I will never correct my spelling mistakes when typing a prompt.

Paper shows LLMs can rebuild meaning from nonsense words, because grammar and context patterns still point to the original message. That is a big deal because it shows the model can infer what fits the situation, even when exact wording is gone.

It also gives a cleaner way to think about LLM behavior: as learned patterns that act like compression and help the model handle new text. The question it tackles is whether an LLM trained to predict the next word only imitates text or also builds meaning.

The authors test this by turning normal writing into gibberish: they replace most content words with nonsense but keep the grammar. They try it on text in a nonsense-poem style, on a made-up text adventure game, and on fresh news and social media posts.
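The gibberish transformation can be approximated in a few lines. This is a hypothetical sketch, not the authors' procedure. It keeps a small list of function words and replaces every other word with a pronounceable nonsense stem, so the same word always maps to the same nonsense. It also keeps common suffixes like `-ing` and `-s`, so grammatical cues survive.

```python
import random

FUNCTION_WORDS = {
    "the", "a", "an", "of", "to", "in", "on", "and", "or", "but", "is", "was",
    "it", "that", "with", "for", "as", "by", "at", "from", "he", "she", "they",
}
SUFFIXES = ("ing", "ed", "ly", "s")  # kept so grammatical cues survive
CONSONANTS, VOWELS = "bdfgklmnprstvz", "aeiou"

def nonsense_stem(length: int, rng: random.Random) -> str:
    """Pronounceable nonsense: alternate consonants and vowels."""
    return "".join(rng.choice(CONSONANTS if i % 2 == 0 else VOWELS) for i in range(length))

def gibberize(text: str, seed: int = 0) -> str:
    rng = random.Random(seed)
    mapping = {}  # same content word -> same nonsense word, so patterns stay consistent
    out = []
    for token in text.split():
        core = token.rstrip(".,!?;:")
        trail = token[len(core):]  # trailing punctuation, kept as-is
        if not core or core.lower() in FUNCTION_WORDS:
            out.append(token)
            continue
        key = core.lower()
        if key not in mapping:
            suffix = next((s for s in SUFFIXES if key.endswith(s) and len(key) > len(s) + 2), "")
            mapping[key] = nonsense_stem(len(key) - len(suffix), rng) + suffix
        out.append(mapping[key] + trail)
    return " ".join(out)

sample = gibberize("The farmer was feeding the hungry goats in the barn.")
```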

They judge success by comparing embeddings (vectors that represent whole passages) of the model's translations against those of the originals, and the translations stay close. The main result is that patterns across sentences can pin down meaning, so LLMs can fill in missing words the way people make sense of blurry text. That matters because it suggests learned patterns can replace simple lookup, giving a clearer story for why LLMs handle new text.
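Passage embeddings like these are typically compared with cosine similarity. In this sketch the embedding vectors are made-up placeholders, where a real setup would take them from an embedding model. The comparison checks that a translation lands closer to its original than an unrelated passage does.

```python
import numpy as np

def cosine(u: np.ndarray, v: np.ndarray) -> float:
    """Cosine similarity between two vectors: 1.0 means same direction."""
    return float(u @ v / (np.linalg.norm(u) * np.linalg.norm(v)))

# Hypothetical passage embeddings (placeholders, not from any real model).
original = np.array([0.9, 0.1, 0.3, 0.0])
translation = np.array([0.8, 0.2, 0.35, 0.05])
unrelated = np.array([0.0, 0.9, -0.1, 0.4])

sim_translation = cosine(original, translation)
sim_unrelated = cosine(original, unrelated)
```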

🗞️ Can Language Models Discover Scaling Laws?

Brilliant paper from Stanford, Tsinghua, Peking University, and Wizard Quant. It shows an evolution-style LLM agent can discover scaling laws that predict performance better than human-derived ones.

The big deal is that it turns scaling-law writing from slow expert guesswork into an automated search that can guide expensive training and fine-tuning decisions. Scaling laws are simple formulas that predict how an LLM will perform as it gets bigger, but experts still craft them by hand, and they can fail in new settings.

The authors build SLDBench from more than 5,000 past training runs, and each task asks for 1 formula that predicts well on larger, unseen runs. They propose SLDAgent, which repeatedly rewrites both the formula code and the parameter-fitting code, tests each new version, and keeps the best, like an evolution loop.

This helps because the formula and the fitting method depend on each other, so improving only one often gives shaky predictions. Across 8 tasks it beats human formulas on extrapolation, meaning prediction beyond the seen scale, and with GPT-5 its average R² rises from 0.517 to 0.748. The payoff is practical: it helps pick the learning rate (step size) and batch size (examples per update) with fewer sweeps, and it helps choose which pretrained model to fine-tune from small trial runs.
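A small sketch shows why the formula and the fitting code interact. It uses synthetic, noise-free data generated from `L = 2 + 400 * N**-0.3`, which is an assumption and not SLDBench data. Two candidate formulas are fit on the smaller runs. The sketch keeps whichever does better on a validation slice of larger runs, then reports R² on the largest runs, mimicking the extrapolation setup. The offset candidate needs its own grid search over `c`, the kind of fitting code SLDAgent rewrites alongside the formula.

```python
import numpy as np

def fit_power_law(N, L):
    """Fit L = a * N**(-b) by least squares in log space."""
    A = np.column_stack([np.ones_like(N), -np.log(N)])
    (log_a, b), *_ = np.linalg.lstsq(A, np.log(L), rcond=None)
    a = np.exp(log_a)
    return lambda n: a * n ** (-b)

def fit_power_law_offset(N, L, grid=1000):
    """Fit L = a * N**(-b) + c: grid-search c, log-space fit for a and b at each c."""
    best = None
    for c in np.linspace(0.0, 0.99 * L.min(), grid):
        f = fit_power_law(N, L - c)
        sse = float(np.sum((f(N) + c - L) ** 2))
        if best is None or sse < best[0]:
            best = (sse, f, c)
    _, f_best, c_best = best
    return lambda n: f_best(n) + c_best

def r2(y, y_hat):
    """Coefficient of determination."""
    return 1.0 - np.sum((y - y_hat) ** 2) / np.sum((y - y.mean()) ** 2)

# Synthetic "training runs": loss vs. parameter count, noise-free for clarity.
N = np.logspace(6, 10, 20)
L = 2.0 + 400.0 * N ** -0.3
train, val, test = slice(0, 12), slice(12, 16), slice(16, 20)  # small, larger, largest

candidates = {"power_law": fit_power_law, "power_law_plus_offset": fit_power_law_offset}
fitted = {name: fit(N[train], L[train]) for name, fit in candidates.items()}
val_scores = {name: r2(L[val], f(N[val])) for name, f in fitted.items()}
best = max(val_scores, key=val_scores.get)  # keep the best, as in an evolution loop
test_r2 = r2(L[test], fitted[best](N[test]))
print(best, round(float(test_r2), 4))
```

The plain power law fits the small runs reasonably well but bends the wrong way when extrapolated. The offset form only works because its fitting routine searches over `c`.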

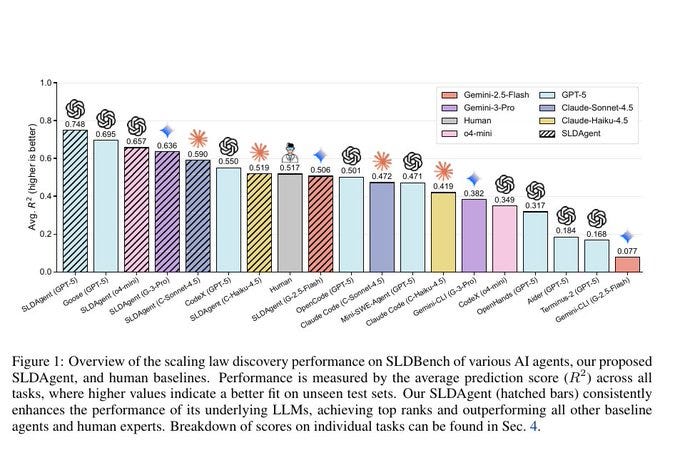

This figure compares how well different AI systems can guess what will happen when a model is scaled up, using a shared test set called SLDBench.

Their SLDAgent comes out on top, and it also boosts whatever base LLM it is built on, while human experts and many standalone models score lower, meaning they miss more when asked to predict larger-scale results.

🗞️ Towards Efficient Agents

The paper frames agent work around efficiency, not just whether the agent eventually succeeds.

It breaks agent efficiency into memory, tool learning, and planning, and ties each one to measurable costs like latency, tokens, and steps. The main result is a set of shared design principles and a cost-aware evaluation framing, rather than a new state-of-the-art score.

Earlier agent recipes often treat context and tool calls as “free,” so they scale up prompts and retries until something works, which quietly inflates runtime and spend.

Memory efficiency centers on bounding context by compressing, summarizing, or otherwise managing what gets carried forward, so the model keeps only task-relevant state.

Tool learning efficiency focuses on making tool use deliberate, including training with reinforcement learning (RL) rewards that penalize unnecessary tool invocations.
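One simple way to write such a reward, as a hypothetical sketch: take the task reward and subtract a small penalty for each tool call beyond a free allowance. The `penalty` and `free_calls` values are illustrative and are not taken from any specific paper.

```python
def shaped_reward(success: bool, tool_calls: int, penalty: float = 0.05,
                  free_calls: int = 1) -> float:
    """Task reward minus a per-call penalty for tool calls beyond a free allowance."""
    return float(success) - penalty * max(0, tool_calls - free_calls)

lean = shaped_reward(True, tool_calls=2)       # 1 - 0.05 * 1
wasteful = shaped_reward(True, tool_calls=10)  # 1 - 0.05 * 9
failed = shaped_reward(False, tool_calls=0)
```

Both successful episodes beat the failed one, but the policy that solved the task with fewer calls earns more. That gives RL a gradient toward deliberate tool use.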

Planning efficiency is framed as controlled search, where the agent limits branching and depth so it does not burn steps exploring low-value options.

Evaluation is organized around 2 complementary views: how much effectiveness fits in a fixed cost budget, and how little cost reaches a fixed effectiveness target.

That tradeoff is described as a Pareto frontier, meaning a set of agent designs where improving cost would hurt effectiveness, and vice versa.
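Both evaluation views and the Pareto frontier can be computed directly from (cost, effectiveness) pairs. The design names and numbers below are made up for illustration, and the code treats lower cost and higher effectiveness as better.

```python
def pareto_frontier(designs):
    """designs: (name, cost, effectiveness) tuples. Keep those no other design dominates."""
    def dominates(a, b):
        # a dominates b if it is no worse on both axes and strictly better on one
        return a[1] <= b[1] and a[2] >= b[2] and (a[1] < b[1] or a[2] > b[2])
    frontier = [d for d in designs if not any(dominates(o, d) for o in designs)]
    return sorted(frontier, key=lambda d: d[1])

def best_under_budget(designs, budget):
    """Fixed-budget view: highest effectiveness among designs costing at most `budget`."""
    affordable = [d for d in designs if d[1] <= budget]
    return max(affordable, key=lambda d: d[2]) if affordable else None

def cheapest_reaching(designs, target):
    """Fixed-target view: lowest cost among designs reaching `target` effectiveness."""
    good_enough = [d for d in designs if d[2] >= target]
    return min(good_enough, key=lambda d: d[1]) if good_enough else None

# Made-up agent designs: (name, cost per task in arbitrary units, success rate)
designs = [("A", 1.0, 0.50), ("B", 2.0, 0.65), ("C", 2.5, 0.60),
           ("D", 4.0, 0.80), ("E", 5.0, 0.78)]
frontier_names = [d[0] for d in pareto_frontier(designs)]
```

Design C drops out because B is both cheaper and better, and E drops out against D. The designs left (A, B, D) are exactly the ones where any cost saving would give up effectiveness.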

That’s a wrap for today, see you all tomorrow.
