Read time: 13 min


(I publish this newsletter daily. Noise-free, actionable, applied-AI developments only.)

⚡Top Papers of last week (7-Dec-2025):

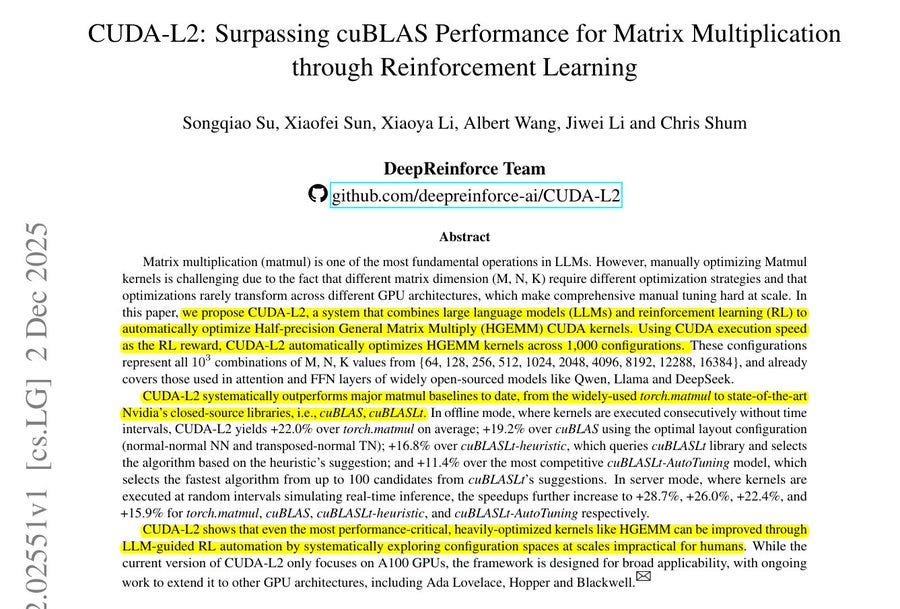

🗞️ CUDA-L2: Surpassing cuBLAS Performance for Matrix Multiplication through Reinforcement Learning

🗞️ On the Origin of Algorithmic Progress in AI

🗞️ DeepSeek-V3.2: Pushing the Frontier of Open LLMs

🗞️ From Code Foundation Models to Agents and Applications: A Comprehensive Survey and Practical Guide to Code Intelligence

🗞️ Guided Self-Evolving LLMs with Minimal Human Supervision

🗞️ Thinking by Doing: Building Efficient World Model Reasoning in LLMs via Multi-turn Interaction

🗞️ Rectifying LLM Thought from Lens of Optimization

🗞️ SEAL: Self-Evolving Agentic Learning for Conversational Question Answering over Knowledge Graphs

🗞️ CUDA-L2: Surpassing cuBLAS Performance for Matrix Multiplication through Reinforcement Learning

Wow, MASSIVE claim in this paper. 🔥 Their proposed CUDA-L2 fully automatically writes GPU code for matrix multiplication that runs about 10% to 30% faster than NVIDIA’s already-optimized cuBLAS/cuBLASLt libraries.

“CUDA-L2 shows that even the most performance-critical, heavily-optimized kernels like HGEMM can be improved through LLM-guided RL automation by systematically exploring configuration spaces at scales impractical for humans.” Traditional GPU libraries rely on a few kernel templates written by human experts, and autotuners only tweak knobs like tile sizes inside those fixed designs.

In CUDA-L2, an LLM trained with reinforcement learning literally prints out the full CUDA kernel source code for each shape, and that code can change structure, loops, tiling strategy, padding, swizzle pattern, and even which programming style it uses (raw CUDA, CuTe, CUTLASS style, inline PTX). The reinforcement learning loop runs these generated kernels on real hardware, measures speed and correctness, and then updates the LLM so that over time it learns its own performance rules instead of humans manually encoding all those design choices.

So what’s the practical impact?

For LLM pretraining and fine-tuning, most of the GPU time is spent in these HGEMM (half-precision general matrix multiply) kernels, so if they run about 10% to 30% faster, the whole training or tuning job can get noticeably cheaper and quicker, though by less than the kernel speedup itself, since not every second of a training step is a matmul. Because CUDA-L2 handles around 1000 real matrix sizes instead of a few hand-tuned ones, the speedups apply to many different model widths, heads, and batch sizes, even when people change architectures or quantization schemes.
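To see why faster kernels don't translate one-for-one into a faster job, here is a quick Amdahl's-law sketch. The 60% HGEMM share of step time is a made-up number for illustration, and "X% faster" is read as a runtime ratio of 1 + X/100.

```python
def end_to_end_speedup(gemm_fraction: float, kernel_speedup: float) -> float:
    """Amdahl's law: overall speedup when only the HGEMM share of step time gets faster.

    gemm_fraction: share of total step time spent in HGEMM (an assumed number).
    kernel_speedup: old kernel time / new kernel time (1.2 means "20% faster").
    """
    if not 0.0 <= gemm_fraction <= 1.0:
        raise ValueError("gemm_fraction must be in [0, 1]")
    if kernel_speedup <= 0:
        raise ValueError("kernel_speedup must be positive")
    new_step_time = (1.0 - gemm_fraction) + gemm_fraction / kernel_speedup
    return 1.0 / new_step_time


# Hypothetical workload: 60% of each training step is spent in HGEMM.
for s in (1.1, 1.2, 1.3):
    print(f"kernels {s:.1f}x faster -> whole job {end_to_end_speedup(0.6, s):.3f}x faster")
```

With 60% of the step in HGEMM, 20% faster kernels give 1 / (0.4 + 0.6 / 1.2) = 1 / 0.9, so roughly a 1.11x faster job. The bigger the matmul share of your workload, the closer you get to the raw kernel gain.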

This could mean fitting more training tokens or more SFT or RLHF runs into the same GPU budget, or finishing the same schedule sooner without changing any high level training code once these kernels are plugged under PyTorch or similar stacks. The method itself, letting an LLM plus reinforcement learning generate kernels, also gives a general recipe that could later be reused to speed up other heavy ops like attention blocks or Mixture of Experts layers without needing a big team of CUDA specialists.

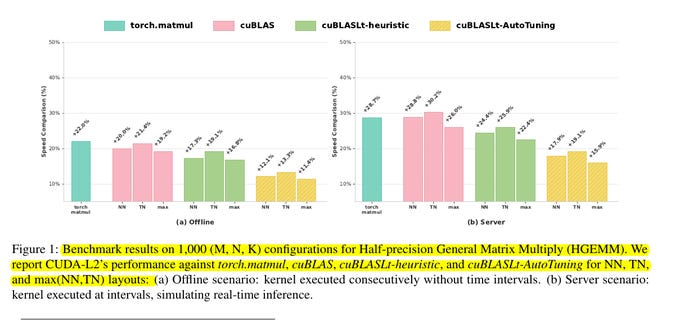

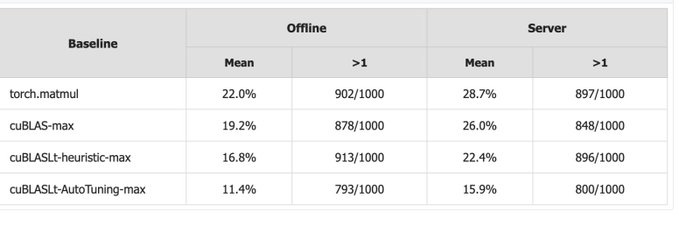

CUDA-L2’s HGEMM kernels are consistently faster than standard GPU libraries across 1000 real matrix sizes.

In the offline case on the left, CUDA-L2 is roughly 17% to 22% faster than torch.matmul, cuBLAS, and cuBLASLt, and still about 11% faster than cuBLASLt AutoTuning even though that baseline already runs its own kernel search. In the server case on the right, which better mimics real inference with gaps between calls, the speedups grow to around 24% to 29% over torch.matmul and cuBLAS and about 15% to 18% over cuBLASLt AutoTuning, so the AI-generated kernels keep a strong lead in a more realistic deployment setting.

Speedup of CUDA-L2 over torch.matmul, cuBLAS, cuBLASLt-heuristic, and cuBLASLt-AutoTuning across 1000 (M,N,K) configurations on A100.

🧩 What CUDA-L2 actually is

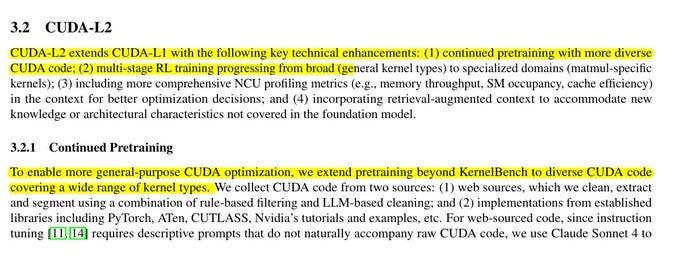

CUDA-L2 starts from a strong base model, DeepSeek 671B, and continues pretraining it on a large collection of CUDA kernels drawn from the web plus high quality library code from PyTorch, ATen, CUTLASS, and NVIDIA examples, so the model sees many real patterns for tiling, memory movement, and tensor core usage.

For each code snippet, the authors use another model to generate a natural-language instruction describing what the kernel is supposed to do, then retrieve relevant documentation or examples and attach them as extra context. Each training example thus becomes an instruction, plus supporting notes, plus the final kernel implementation.

On top of that continued pretraining, they run a first reinforcement learning stage on about 1000 general CUDA kernels that all have a trusted reference implementation, so the model learns to propose kernels, see their speed relative to a baseline, and adjust its coding style to run faster across many different operation types. This RL stage uses a contrastive setup: the model compares several of its own kernels and their measured speeds, reasons about why some are faster, and then updates its parameters with Group Relative Policy Optimization (GRPO) to favor the coding decisions that correlate with speed.
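The "group relative" part of GRPO is simple enough to sketch. Below is a minimal Python version of just the advantage computation, assuming each group holds rewards (here, hypothetical measured speedups) for several kernels written for the same task. The policy-gradient update and the contrastive prompting are not shown.

```python
import statistics


def group_relative_advantages(rewards: list, eps: float = 1e-6) -> list:
    """GRPO-style advantages: score each sample against the rest of its group.

    All rewards in one call belong to kernels the model wrote for the same task.
    Each advantage is (reward - group mean) / (group std + eps), so the update
    favors kernels that beat their siblings rather than an absolute target.
    """
    if not rewards:
        return []
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]


# Hypothetical group: measured speedups of 4 candidate kernels for one task.
print(group_relative_advantages([0.9, 1.1, 1.3, 1.1]))
```

Because the baseline is the group's own mean, there is no separate value network to train, and a kernel only gets pushed up if it is faster than the other attempts at the same problem.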

🎯 How the reinforcement learning loop works here

For each HGEMM shape (M, N, K), the LLM writes a full CUDA kernel file in plain CUDA, CuTe, CUTLASS style templates, or inline PTX, and the system compiles and runs it on the GPU. A candidate only counts if it compiles, finishes within a time limit, and closely matches both a high precision CPU matmul and trusted cuBLAS or cuBLASLt outputs.
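A rough sketch of that acceptance check in plain Python: compute the reference product in double precision and accept a candidate only if every element is within tolerance. The tolerances and the fp16-rounded "candidate" are assumptions for illustration, not the paper's thresholds, and the second comparison against cuBLAS/cuBLASLt output would follow the same pattern.

```python
import math
import random
import struct


def matmul_fp64(a, b):
    """High-precision reference product using Python floats (IEEE double) and fsum."""
    inner, cols = len(b), len(b[0])
    return [[math.fsum(row[k] * b[k][j] for k in range(inner)) for j in range(cols)] for row in a]


def to_fp16(x):
    """Round a float to the nearest IEEE half-precision value (struct format 'e')."""
    return struct.unpack("e", struct.pack("e", x))[0]


def passes_correctness(candidate, reference, rtol=1e-2, atol=1e-3):
    """Accept only if shapes match and every element is close to the reference.

    rtol/atol are hypothetical; half-precision kernels need fairly loose bounds.
    """
    if len(candidate) != len(reference):
        return False
    if any(len(c) != len(r) for c, r in zip(candidate, reference)):
        return False
    return all(
        math.isclose(c, r, rel_tol=rtol, abs_tol=atol)
        for c_row, r_row in zip(candidate, reference)
        for c, r in zip(c_row, r_row)
    )


random.seed(0)
M, N, K = 4, 3, 8
a = [[to_fp16(random.uniform(-1, 1)) for _ in range(K)] for _ in range(M)]
b = [[to_fp16(random.uniform(-1, 1)) for _ in range(N)] for _ in range(K)]
ref = matmul_fp64(a, b)
good = [[to_fp16(x) for x in row] for row in ref]  # stands in for a correct fp16 kernel
bad = [row[:] for row in good]
bad[0][0] += 0.5  # a kernel with one wrong element
print(passes_correctness(good, ref), passes_correctness(bad, ref))
```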

The reward goes up when the kernel runs faster, stays numerically accurate, and keeps the code short, so the model is pushed toward fast, accurate, compact kernels. Profiling stats from NVIDIA Nsight Compute, like memory bandwidth, occupancy, and cache hit rate, are fed back into the next prompt so the model can fix real bottlenecks instead of relying on lucky timings. The loop also blocks reward hacking by only allowing plain .cu files, timing with CUDA events plus explicit device syncs, and banning extra CUDA streams or other scheduling tricks.
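One way to picture that reward, as a hedged sketch: speedup over a baseline, minus penalties for numerical error and code length, with invalid kernels earning nothing. The `KernelResult` fields, the weights, and the exact functional form are all hypothetical, not the paper's formula.

```python
from dataclasses import dataclass


@dataclass
class KernelResult:
    compiled: bool
    correct: bool          # passed the reference comparison
    runtime_ms: float      # measured runtime of the generated kernel
    baseline_ms: float     # e.g. cuBLAS runtime for the same (M, N, K)
    max_rel_error: float   # worst elementwise relative error vs the reference
    code_lines: int        # length of the generated .cu file


def kernel_reward(r: KernelResult, err_weight: float = 10.0, length_weight: float = 1e-4) -> float:
    """Hypothetical reward: speedup over baseline, minus error and code-length penalties.

    Kernels that fail to compile or fail the correctness check earn nothing.
    """
    if not (r.compiled and r.correct):
        return 0.0
    speedup = r.baseline_ms / r.runtime_ms
    return speedup - err_weight * r.max_rel_error - length_weight * r.code_lines


fast = KernelResult(True, True, runtime_ms=0.80, baseline_ms=1.00, max_rel_error=1e-3, code_lines=220)
print(round(kernel_reward(fast), 3))
```

The length term is deliberately tiny: it should only break ties between similarly fast kernels, not outweigh real speed.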

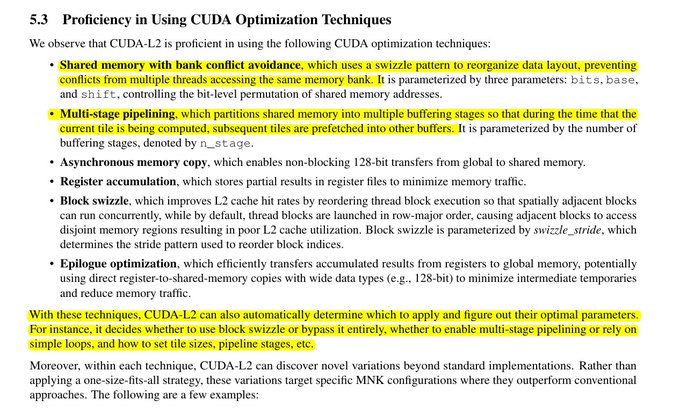

🛠️ Optimization tricks CUDA-L2 rediscovers and extends

For small matrices, CUDA-L2 prefers very lean WMMA kernels with simple memory access and minimal syncs, so there is almost no template or control overhead. For large matrices, it switches to CuTe-based multi-stage tensor core pipelines, which pack complex tiling and scheduling into compact code that RL can explore more easily.

A key move is padding matrices so tiles line up better. For example, with M=8192, N=512, K=2048 it picks BM=160 (the tile size along M), pads M to 8320 (about 1.6% extra work), and runs about 15.2% faster than cuBLASLt AutoTuning, while nearby tile sizes give almost no gain or are much slower.

On top of that, the model learns and tweaks classic GPU ideas: bank-conflict-free shared memory, multi-stage pipelining, async global-to-shared copies, register accumulation, double-buffered register fragments, aggressive multi-step prefetch, direct register-to-shared writes with wide moves, and staggered A/B prefetch around tensor core instructions to keep both compute and memory busy.
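The padding arithmetic from that example is easy to check. A tiny helper (the name `pad_to_tile` is hypothetical) that rounds a dimension up to the next multiple of the tile size:

```python
def pad_to_tile(dim: int, tile: int) -> tuple:
    """Round a matrix dimension up to a multiple of the tile size.

    Returns (padded_dim, extra_work_fraction) along that dimension.
    """
    if dim <= 0 or tile <= 0:
        raise ValueError("dim and tile must be positive")
    padded = -(-dim // tile) * tile  # integer ceiling division
    return padded, (padded - dim) / dim


for bm in (128, 160, 192):
    padded_m, extra = pad_to_tile(8192, bm)
    print(f"BM={bm}: M padded to {padded_m}, extra work {extra:.2%}")
```

For M = 8192 and BM = 160 this gives 8320 rows and 128 / 8192 = 1.5625% extra work along M, matching the roughly 1.6% quoted in the example. This only accounts for the wasted work; which tile size actually runs fastest is something the RL loop measures on hardware.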

🗞️ On the Origin of Algorithmic Progress in AI

New MIT paper asks what really caused the big AI efficiency gains and finds most of them come from scaling, not small tricks. The authors challenge an earlier claim of about 22,000x algorithmic gains over a decade and argue the true figure is far smaller.

In small experiments they toggle modern features, like new activations, normalization, and schedulers, and the whole bundle gives under 10x compute savings. Even after adding gains from mixture of experts, tokenization, and better data, the total improvement still stays under 100x.

To explain the missing gap they compare older LSTM sequence models to Transformer models while steadily increasing the training compute budget. An LSTM processes tokens one by one, while a Transformer looks at all tokens together, and at high compute this design becomes far more efficient.

They also study the move from older Kaplan scaling, which underuses data, to Chinchilla's balanced scaling, which adds another efficiency gain that grows with compute. Overall they estimate a 6,930x efficiency gain relative to old LSTMs, with 90% of it coming from these scale-dependent changes, which mostly reward builders working at frontier compute levels.
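For readers who want the "balanced" part in numbers: a common rule of thumb from the Chinchilla work is roughly 20 training tokens per parameter, with training compute C ≈ 6·N·D. The sketch below splits a compute budget under those two approximations. It illustrates compute-optimal allocation in general and is not the MIT paper's own model.

```python
import math


def chinchilla_allocation(compute_flops: float, tokens_per_param: float = 20.0) -> tuple:
    """Split a training compute budget into parameters N and tokens D.

    Uses the common approximations C ~= 6 * N * D and D ~= tokens_per_param * N,
    so C = 6 * tokens_per_param * N**2 and N = sqrt(C / (6 * tokens_per_param)).
    """
    n_params = math.sqrt(compute_flops / (6.0 * tokens_per_param))
    return n_params, tokens_per_param * n_params


n, d = chinchilla_allocation(1e23)
print(f"params ~ {n:.3g}, tokens ~ {d:.3g}")
```

For a budget of 1e23 FLOPs this lands near 29B parameters and about 577B tokens. A data-starved allocation would instead spend the same compute on a bigger model trained on fewer tokens.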

🗞️ DeepSeek-V3.2: Pushing the Frontier of Open LLMs

The paper behind DeepSeek-V3.2. Its high-compute Speciale version reaches gold medal level on top math and coding contests and competes with leading closed models.

Standard attention makes every token compare itself with every other token, so compute grows quadratically as inputs get longer. Their new attention mechanism, DeepSeek Sparse Attention (DSA), adds a small lightning indexer that scores past tokens, then runs attention only on a few important ones.

They continue training the previous DeepSeek model with this sparse pattern so it mimics dense attention but at much lower cost. On top of that base they run a reinforcement learning phase that rewards step-by-step reasoning, helpful replies, and successful tool use.

They also synthesize many tool-based environments for search, coding, math with code, and general tasks so the model learns to plan and verify. Across many benchmarks this setup lets DeepSeek-V3.2 match or approach GPT-5 style reasoning and agent performance while staying cheaper to run.

DeepSeek brought the core attention complexity down from quadratic to roughly linear using a warm start: the new sparse module is first initialized and optimized on its own, and the whole setup is then adapted slowly over about 1T tokens of training.

They did this by adding a sparse attention module that lets each token look at only a fixed number of important past tokens, and by training it in 2 warm-start stages: first it imitates dense attention, then it gradually replaces it without losing quality.

⚙️ What DSA (DeepSeek Sparse Attention) actually changes

DeepSeek-V3.2 adds DeepSeek Sparse Attention, where a tiny “lightning indexer” scores all past tokens for each query token and then keeps only the top k ones, so the expensive core attention only runs over those selected entries and its work now grows like length times k instead of length times length. The indexer itself still touches all token pairs, but it uses very few heads, simple linear layers, and FP8 precision, so in practice its cost is a small fraction of the original dense attention, and the main model’s cost becomes the dominant, almost linear term. They also implement DSA on top of their Multi-Head Latent Attention (MLA) in multi-query mode, where all heads share one compact key-value set per token, which keeps the sparse pattern very friendly to kernels and makes the near-linear behavior show up in real throughput.
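To make the selection step concrete, here is a toy, pure-Python sketch of top-k sparse attention driven by a separate cheap scorer. The indexer form (a weighted sum of ReLU'd dot products over a few small heads, with fixed head weights) is a simplifying assumption, and there is no FP8, MLA, or batching here. It only shows that each query runs full attention over at most k past tokens.

```python
import math


def indexer_scores(iq, ik, head_weights):
    """Toy lightning-indexer scores for every (query t, key s) pair.

    Assumed form: I[t][s] = sum_j head_weights[j] * ReLU(iq[t][j] . ik[s]),
    with a few small heads. Still all-pairs, but cheap per pair.
    """
    def dot(a, b):
        return sum(x * y for x, y in zip(a, b))

    return [
        [sum(w * max(0.0, dot(q_heads[j], key)) for j, w in enumerate(head_weights)) for key in ik]
        for q_heads in iq
    ]


def topk_sparse_attention(q, k, v, scores, top_k):
    """Causal attention where query t attends only to its top_k indexer-ranked past tokens."""
    d = len(q[0])
    out = []
    for t in range(len(q)):
        # Rank past positions 0..t by indexer score and keep the best top_k.
        chosen = sorted(range(t + 1), key=scores[t].__getitem__, reverse=True)[:top_k]
        logits = [sum(a * b for a, b in zip(q[t], k[s])) / math.sqrt(d) for s in chosen]
        m = max(logits)
        weights = [math.exp(x - m) for x in logits]
        z = sum(weights)
        out.append([sum(w * v[s][j] for w, s in zip(weights, chosen)) / z for j in range(len(v[0]))])
    return out


q = k = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, -0.5]]
v = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [7.0, 8.0]]
iq = [[row, [-x for x in row]] for row in q]  # 2 hypothetical indexer heads per token
scores = indexer_scores(iq, k, head_weights=[1.0, 0.5])
print(topk_sparse_attention(q, k, v, scores, top_k=2))
```

Setting `top_k` to at least the sequence length recovers ordinary causal attention (up to floating-point summation order), which is a handy sanity check.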

🔥 Stage 1: dense warm up so the sparse module copies dense attention

DeepSeek-V3.2 does not switch to sparsity from scratch; it first runs a dense warm-up stage on top of a frozen DeepSeek-V3.1-Terminus checkpoint whose context is already 128K. In this stage, the old dense attention stays in charge, all model weights are frozen except the lightning indexer, and for each query token they compute the dense attention distribution, turn it into a target probability over positions, and train the indexer so its scores, after softmax, match that dense distribution as closely as possible. They do this for about 2.1B tokens, which is just enough for the indexer to learn “if dense attention likes these positions, I should rank them high in my top k list”, so by the end of warm up, sparse selection is already a good approximation of what dense attention would look at.
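The warm-up objective can be sketched for a single query token. This follows our reading of the setup described above: sum the frozen dense model's attention over heads, renormalize it into a target distribution, and score the indexer with KL(target || softmax(indexer logits)). The head-summing step is an assumption of this sketch.

```python
import math


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    z = sum(exps)
    return [e / z for e in exps]


def warmup_indexer_loss(dense_attn_per_head, indexer_logits):
    """KL(target || softmax(indexer_logits)) for one query token.

    dense_attn_per_head: one attention distribution over past positions per head,
    taken from the frozen dense model. Summing over heads and renormalising gives
    the target (an assumption of this sketch). Only the indexer would be trained on it.
    """
    summed = [sum(col) for col in zip(*dense_attn_per_head)]
    total = sum(summed)
    target = [p / total for p in summed]
    pred = softmax(indexer_logits)
    return sum(p * math.log(p / q) for p, q in zip(target, pred) if p > 0)


heads = [[0.6, 0.3, 0.1], [0.4, 0.4, 0.2]]  # 2 dense heads over 3 past tokens
print(warmup_indexer_loss(heads, [2.0, 1.5, 0.0]))
```

The loss is zero exactly when the indexer's softmax reproduces the dense target, which is the "copy dense attention" behavior this stage is after.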

🔁 Stage 2: switch to sparse attention and slowly adapt

After warm up, they enter the sparse training stage, where the main attention actually uses only the tokens selected by DSA instead of the full sequence. Now all model weights are trainable again, the language model loss updates the usual transformer parameters, and the indexer is still trained, but its loss only compares dense attention and indexer probabilities inside the selected top k set, which keeps it aligned while letting it specialize.

They also detach the indexer’s input from the main graph, so gradients from the language modeling loss do not flow into the indexer, which prevents the selector from drifting in strange ways just to make prediction easier in the short term. In this stage, each query token is allowed 2048 key value slots and they train for about 943.7B tokens with a small learning rate, so the model has a long time to adjust to seeing only sparse context while retaining its capabilities. Because the indexer already learned to mimic dense attention during warm up, this second stage behaves like a gentle transition where the model learns to live with a fixed, small k rather than a sudden pruning that would wreck performance.
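Here is the stage 2 variant of the same idea, again for one query token and as a sketch: the KL term is computed only over the top-k positions the indexer picked, with both distributions renormalized on that set. Gradient detaching has no analogue in plain Python, so it appears only as a comment.

```python
import math


def sparse_stage_indexer_loss(dense_target, indexer_logits, top_k):
    """Indexer KL loss after the switch to sparse attention, for one query token.

    Keep only the top_k positions the indexer selected, renormalise the dense
    target and the indexer softmax over that set, and return KL(target || indexer).
    In the real model the indexer's input is detached from the main graph, so the
    language-model loss never reaches it; plain Python has no autograd to show that.
    """
    chosen = sorted(range(len(indexer_logits)), key=indexer_logits.__getitem__, reverse=True)[:top_k]
    tgt = [dense_target[s] for s in chosen]
    z_t = sum(tgt)
    if z_t == 0:
        return math.inf  # sentinel: dense attention puts no mass on the selected set
    tgt = [p / z_t for p in tgt]
    m = max(indexer_logits[s] for s in chosen)
    exps = [math.exp(indexer_logits[s] - m) for s in chosen]
    z_q = sum(exps)
    pred = [e / z_q for e in exps]
    return sum(p * math.log(p / q) for p, q in zip(tgt, pred) if p > 0)


dense = [0.70, 0.20, 0.05, 0.05]  # dense attention over 4 past tokens
print(sparse_stage_indexer_loss(dense, [0.0, 0.0, -5.0, -6.0], top_k=2))
```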

📉 Why this gives “almost linear” cost in practice

From the main model’s point of view, attention work now grows like sequence length times k, and k is fixed at 2048, so once sequences are long enough the curve is effectively linear in length. The indexer’s quadratic pass is cheap because of FP8, small head count, and simple operations, so its contribution is a relatively flat overhead on top of the near linear core attention cost.
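Counting the query-key pairs the core attention scores makes the "almost linear" claim concrete. This sketch assumes causal attention and k = 2048 as in the text, and ignores the indexer's own all-pairs pass:

```python
from typing import Optional


def attention_pairs(seq_len: int, top_k: Optional[int] = None) -> int:
    """Query-key pairs scored by the core attention over one causal sequence.

    Dense: the query at (1-indexed) position t sees t keys.
    Sparse, DSA-style: it sees min(t, top_k) keys.
    The indexer's own all-pairs scoring pass is deliberately left out.
    """
    if top_k is None:
        return seq_len * (seq_len + 1) // 2
    k = min(top_k, seq_len)
    # First k queries see every past token; each later query sees exactly k.
    return k * (k + 1) // 2 + (seq_len - k) * k


for n in (8_192, 32_768, 131_072):
    dense, sparse = attention_pairs(n), attention_pairs(n, top_k=2048)
    print(f"{n:>7} tokens: dense {dense:,} pairs, sparse {sparse:,} pairs, ratio {dense / sparse:.1f}x")
```

At 131,072 tokens (128K), dense causal attention scores 8,590,000,128 pairs versus 266,339,328 with k = 2048, about 32x fewer. Each extra token beyond k adds exactly k pairs, which is the linear part.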

Figure 3 in the paper plots dollar cost per million tokens on H800 GPUs as a function of token position and shows that for both prefilling and decoding, DeepSeek-V3.2’s cost curve grows much more slowly with position than the old dense model, especially toward 128K tokens, which is exactly what this “quadratic to almost linear” story predicts. So the reduction in complexity comes from the DSA design that lets the main attention see only 2048 tokens per position, and the warm start procedure is what makes it possible to replace dense attention with this sparse version without breaking quality while enjoying the near linear compute scaling.

🗞️ Guided Self-Evolving LLMs with Minimal Human Supervision

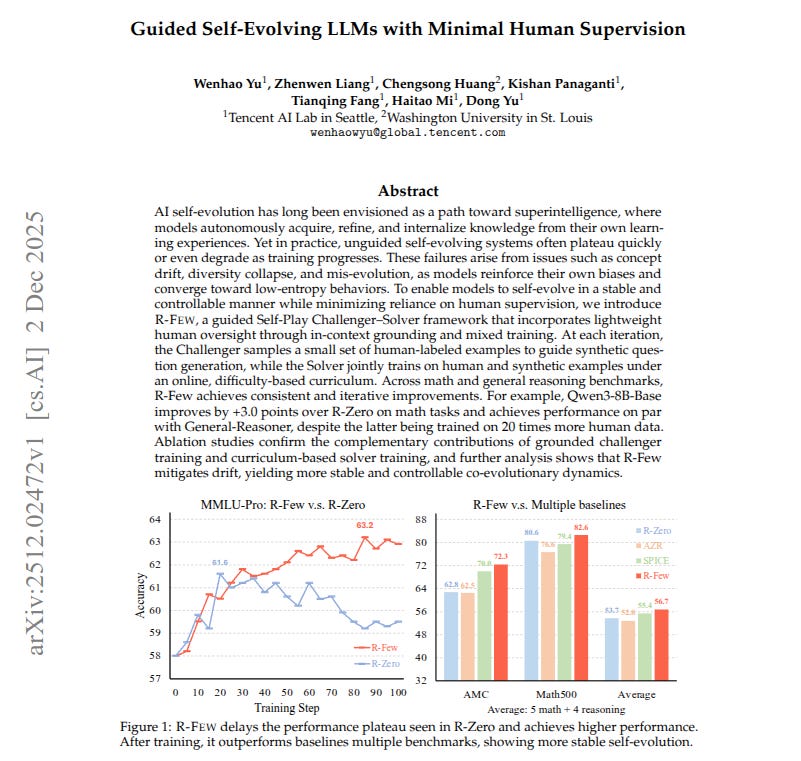

Beautiful Tencent paper. It shows a language model that keeps improving itself using only 1% to 5% human-labeled questions while reaching the level of systems trained on about 20 times more data.

Earlier self play systems let a model write and solve its own questions, but over time it drifts, repeats narrow patterns, and can even perform worse. Their method runs a challenger copy that generates questions and a solver copy that answers them, turning training into a question answer game between 2 agents.

When the challenger writes, it sometimes sees a few real human question-answer pairs, which pull its synthetic questions toward realistic tasks instead of strange, off-topic puzzles. For each question, the solver tries several answers, the system estimates its success rate, and training keeps mainly mid-difficulty questions where the solver is uncertain but not lost. Because both human and synthetic questions pass this filter, the solver trains on focused, non-trivial problems, avoids cheap tricks like inflating question length, and gains stronger math and general reasoning scores than earlier self-play methods.
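A minimal sketch of the difficulty filter, assuming the success rate is estimated from several sampled answers and that, for synthetic questions without a human label, agreement with the majority answer stands in for correctness. The 0.2 to 0.8 band is a made-up choice, not the paper's threshold.

```python
from collections import Counter
from typing import Optional


def estimated_success_rate(answers: list, reference: Optional[str] = None) -> float:
    """Share of the solver's sampled answers judged correct.

    With a human reference, compare against it. Without one (a synthetic
    question), agreement with the majority answer is used as a proxy; that
    proxy is an assumption of this sketch.
    """
    if not answers:
        raise ValueError("need at least one sampled answer")
    target = reference if reference is not None else Counter(answers).most_common(1)[0][0]
    return sum(a == target for a in answers) / len(answers)


def keep_for_training(rate: float, low: float = 0.2, high: float = 0.8) -> bool:
    """Keep mid-difficulty questions: the solver is uncertain but not lost."""
    return low <= rate <= high


samples = {
    "easy": ["12"] * 8,
    "medium": ["7", "7", "7", "9", "7", "5", "9", "7"],
    "human, unsolved": ["3"] * 8,
}
references = {"human, unsolved": "4"}
kept = [q for q, ans in samples.items() if keep_for_training(estimated_success_rate(ans, references.get(q)))]
print(kept)
```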

🗞️ From Code Foundation Models to Agents and Applications: A Comprehensive Survey and Practical Guide to Code Intelligence

Alibaba, ByteDance, Tencent and a few other Chinese labs published a fantastic 304-page overview paper on training LLMs for coding.

The paper explains how code focused language models are built, trained, and turned into software agents that help run parts of development.

These models read natural language instructions, like a bug report or feature request, and try to output working code that matches the intent.

The authors first walk through the training pipeline, from collecting and cleaning large code datasets to pretraining, meaning letting the model absorb coding patterns at scale.

They then describe supervised fine tuning and reinforcement learning, which are extra training stages that reward the model for following instructions, passing tests, and avoiding obvious mistakes.

On top of these models, the paper surveys software engineering agents, which wrap a model in a loop that reads issues, plans steps, edits files, runs tests, and retries when things fail.
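The agent loop described above fits in a few lines. This is a hypothetical skeleton, not any surveyed framework's API: `propose_patch` stands in for the model and `apply_and_test` for editing files and running the tests.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class AgentRun:
    attempts: int = 0
    history: list = field(default_factory=list)  # (patch, test_log) for each failed try


def run_agent(
    issue: str,
    propose_patch: Callable[[str, list], str],
    apply_and_test: Callable[[str], tuple],
    max_attempts: int = 3,
) -> tuple:
    """Read the issue, propose an edit, run the tests, and retry with the failure log.

    propose_patch plays the model (it sees the issue and earlier failures);
    apply_and_test plays "edit the files and run the test suite" and returns
    (passed, log). Both are hypothetical stand-ins.
    """
    run = AgentRun()
    for _ in range(max_attempts):
        run.attempts += 1
        patch = propose_patch(issue, run.history)
        passed, log = apply_and_test(patch)
        if passed:
            return True, run
        run.history.append((patch, log))  # the next proposal can learn from this failure
    return False, run


def toy_model(issue, history):
    # Gets it right only after seeing one failing test log.
    return "fix off-by-one" if history else "rename variable"


def toy_tests(patch):
    passed = patch == "fix off-by-one"
    return passed, "ok" if passed else "1 test failed"


print(run_agent("IndexError in parse()", toy_model, toy_tests))
```

Feeding the failure log back into the next proposal is what turns a one-shot code generator into an agent that can recover from its own mistakes.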

Across the survey, they point out gaps like handling huge repositories, keeping generated code secure, and evaluating agents reliably, and they share practical tricks that current teams can reuse.

🗞️ Thinking by Doing: Building Efficient World Model Reasoning in LLMs via Multi-turn Interaction

The paper shows how to train an AI agent that learns a compact world model by practicing inside interactive games.

Standard agents try to plan everything in 1 huge thought, which is heavy and often locks in wrong assumptions.

This work instead lets the model act for several steps, see the real game response, and update its understanding on the fly.

From many such episodes it builds a world model, meaning an internal sense of what each action usually does in that environment.

WMAct adds reward rescaling, where the score is multiplied by the fraction of moves that changed the state, so pointless shuffling loses credit.
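A sketch of that rescaling, assuming states are comparable values (for example, grid positions) recorded before and after every move:

```python
def rescaled_reward(raw_reward: float, states: list) -> float:
    """Scale an episode's reward by the fraction of moves that changed the state.

    states[0] is the start state and states[i] the state after move i, so a
    move that leaves the state unchanged (e.g. bumping into a wall) earns no credit.
    """
    n_moves = len(states) - 1
    if n_moves <= 0:
        return raw_reward  # no moves taken: nothing to rescale
    effective = sum(1 for before, after in zip(states, states[1:]) if before != after)
    return raw_reward * effective / n_moves


# Hypothetical maze episode: 4 moves, 2 of them bump into walls.
trajectory = [(0, 0), (0, 1), (0, 1), (1, 1), (1, 1)]
print(rescaled_reward(1.0, trajectory))
```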

It also uses interaction frequency annealing, starting with many turns but slowly tightening this limit so the model must think more.
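The paper's exact annealing schedule isn't reproduced here. As a hedged illustration, a linear schedule that shrinks the allowed number of turns over training could look like this (all numbers hypothetical):

```python
def max_turns_schedule(step: int, total_steps: int, start_turns: int = 10, end_turns: int = 1) -> int:
    """Hypothetical linear annealing of the per-episode interaction cap.

    Early training allows many environment turns; the cap shrinks toward
    end_turns, so later episodes must do more planning inside each turn.
    """
    if total_steps <= 0:
        raise ValueError("total_steps must be positive")
    frac = min(max(step / total_steps, 0.0), 1.0)
    return round(start_turns + frac * (end_turns - start_turns))


print([max_turns_schedule(s, 1000) for s in range(0, 1001, 250)])
```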

In maze, Sokoban, and taxi grid worlds, this training lets the agent solve many puzzles in a single shot that earlier needed trial-and-error dialogues.

The same model also gets noticeable gains on separate math, code, and general benchmarks, suggesting that this thinking-by-doing practice sharpens reasoning beyond these games.

🗞️ Rectifying LLM Thought from Lens of Optimization

The paper introduces RePro, a way to train reasoning LLMs so their chain of thought behaves like an optimization process instead of messy rambling.

Long chain-of-thought models often keep generating unnecessary steps, which burns compute and can even turn easy correct answers into wrong ones through overthinking.

RePro imagines each reasoning step as updating a belief about the answer and builds a proxy score that says how confident the model is in the true answer after each step.

From the curve of this score over the chain, the method extracts how much the score improves overall and how smooth that improvement is, then merges these into a single process level reward that prefers steady useful progress.
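As a rough sketch of how a score curve could become one process reward: net improvement plus a smoothness term (the share of steps that didn't lower the score). Both definitions and the 50/50 mix are assumptions for illustration; the paper's formulas may differ. The per-step scores could be something like the model's probability of the reference answer after each step.

```python
def process_reward(scores: list, alpha: float = 0.5) -> float:
    """Hypothetical RePro-style process reward from per-step confidence scores.

    scores[i] is a proxy for how confident the model is in the true answer after
    reasoning step i. magnitude = net gain from first to last step; smoothness =
    share of steps that did not lower the score. alpha mixes the two.
    """
    if len(scores) < 2:
        return 0.0
    magnitude = scores[-1] - scores[0]
    steps = list(zip(scores, scores[1:]))
    smoothness = sum(1 for before, after in steps if after >= before) / len(steps)
    return alpha * magnitude + (1 - alpha) * smoothness


steady = [0.10, 0.30, 0.50, 0.70]
zigzag = [0.10, 0.60, 0.20, 0.70]
print(process_reward(steady), process_reward(zigzag))
```

Both traces end at the same confidence, but the steady one scores higher, which is the "prefer steady useful progress" behavior described above.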

During reinforcement learning this process reward is computed only on a few high uncertainty chunks inside the long trace and added to the usual reward that only cares about whether the final answer is correct.

Across math, science, and coding benchmarks, models trained with RePro solve more problems, use fewer reasoning tokens, and show less looping and backtracking in their thoughts.

🗞️ SEAL: Self-Evolving Agentic Learning for Conversational Question Answering over Knowledge Graphs

The paper introduces SEAL, a system that helps conversational agents answer knowledge graph questions using cleaner, more reliable logical programs instead of messy model guesses.

A knowledge graph is a structured network of entities and relations, and standard language models often fail when turning complex multi step questions over this graph into executable queries.

SEAL first asks a language model to produce a minimal S expression core that only captures the key entities, relations, and conditions in the question.

An agent then calibrates this core by fixing syntax, linking each phrase to a single best graph entity or relation, then fills a small set of hand designed logical templates to produce a full executable query.
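A toy version of the calibrate-and-fill step, assuming string similarity for linking (Python's `difflib`) and two made-up S-expression templates. SEAL's real linker, templates, and KG identifiers are not shown here.

```python
import difflib
from typing import Optional

# Hypothetical S-expression templates keyed by question type (not SEAL's actual set).
TEMPLATES = {
    "lookup": "(JOIN {relation} {entity})",
    "count": "(COUNT (JOIN {relation} {entity}))",
}


def link_one(phrase: str, vocabulary: list) -> Optional[str]:
    """Map a surface phrase to the single closest KG name, or None if nothing is close."""
    matches = difflib.get_close_matches(phrase, vocabulary, n=1, cutoff=0.6)
    return matches[0] if matches else None


def build_query(qtype, entity_phrase, relation_phrase, entities, relations):
    """Calibrate a minimal core (type, entity, relation) and fill a template."""
    entity = link_one(entity_phrase, entities)
    relation = link_one(relation_phrase, relations)
    if entity is None or relation is None or qtype not in TEMPLATES:
        return None  # calibration failed; a real agent might retry or consult memory
    return TEMPLATES[qtype].format(entity=entity, relation=relation)


kg_entities = ["Barack Obama", "Michelle Obama"]
kg_relations = ["child", "spouse"]
print(build_query("count", "Barak Obama", "children", kg_entities, kg_relations))
```

Forcing each phrase onto exactly one graph name before filling a fixed template is what keeps the final query executable, even when the model's raw phrasing is a little off.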

SEAL also keeps short term dialog memory, long term global memory of successful queries, and a reflection step that reuses logical forms that worked, so the system improves over time without retraining.

On the SPICE benchmark, this design answers complex multi hop, comparison, and aggregation questions more accurately and with fewer database calls than earlier unsupervised methods while coming close to strong supervised baselines.

That’s a wrap for today, see you all tomorrow.