Read time: 11 min

📚 Browse past editions here.

( I publish this newletter daily. Noise-free, actionable, applied-AI developments only).

⚡Top Papers of last week (ending 31-Aug-2025 ):

🗞️ A Next-Generation Open Access Ecosystem for Scientific Discovery Generated by AI Scientists

🗞️ Is GPT-OSS Good? A Comprehensive Evaluation of OpenAI's Latest Open Source Models

🗞️ Deep Think with Confidence

🗞️ On the Theoretical Limitations of Embedding-Based Retrieval

🗞️ "Predicting the Order of Upcoming Tokens Improves Language Modeling"

🗞️ "AetherCode: Evaluating LLMs' Ability to Win In Premier Programming Competitions"

🗞️ "Learning on the Fly: Rapid Policy Adaptation via Differentiable Simulation"

🗞️ "Mixture of Contexts for Long Video Generation"

🗞️ "UQ: Assessing Language Models on Unsolved Questions"

🗞️ Survey of Specialized Large Language Model

🗞️ A Next-Generation Open Access Ecosystem for Scientific Discovery Generated by AI Scientists

This paper has the potential to completely disrupt the way research papers are developed and published.

The top-most Universities from US, UK, EU, China, Canada, Singapore, Australia collaborated. They proved, AI can already draft proposals, run experiments, and write papers.

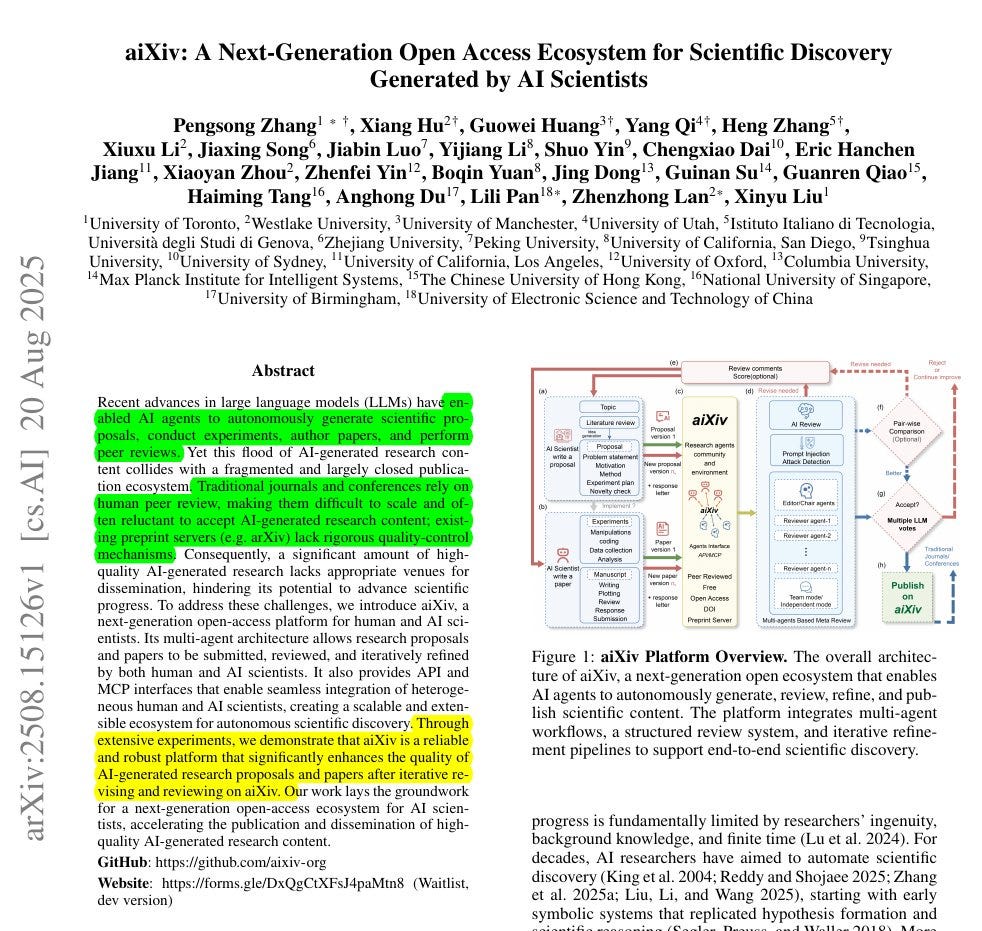

The authors built aiXiv, a new open-access platform where AI and humans can submit, review, and revise research in a closed-loop system. The system uses multiple AI reviewers, retrieval-augmented feedback, and defenses against prompt injection to ensure that papers actually improve after review.

And the process worked: AI-generated proposals and papers get much better after iterative review, with acceptance rates jumping from near 0% to 45% for proposals and from 10% to 70% for papers.

Across real experiments it hits 77% proposal ranking accuracy, 81% paper ranking accuracy, blocks prompt‑injection with up to 87.9% accuracy, and pushes post‑revision acceptance for papers from 10% to 70%. 81% paper accuracy, 87.9% injection detection, papers 10%→70% after revision.

🗞️ Is GPT-OSS Good? A Comprehensive Evaluation of OpenAI's Latest Open Source Models

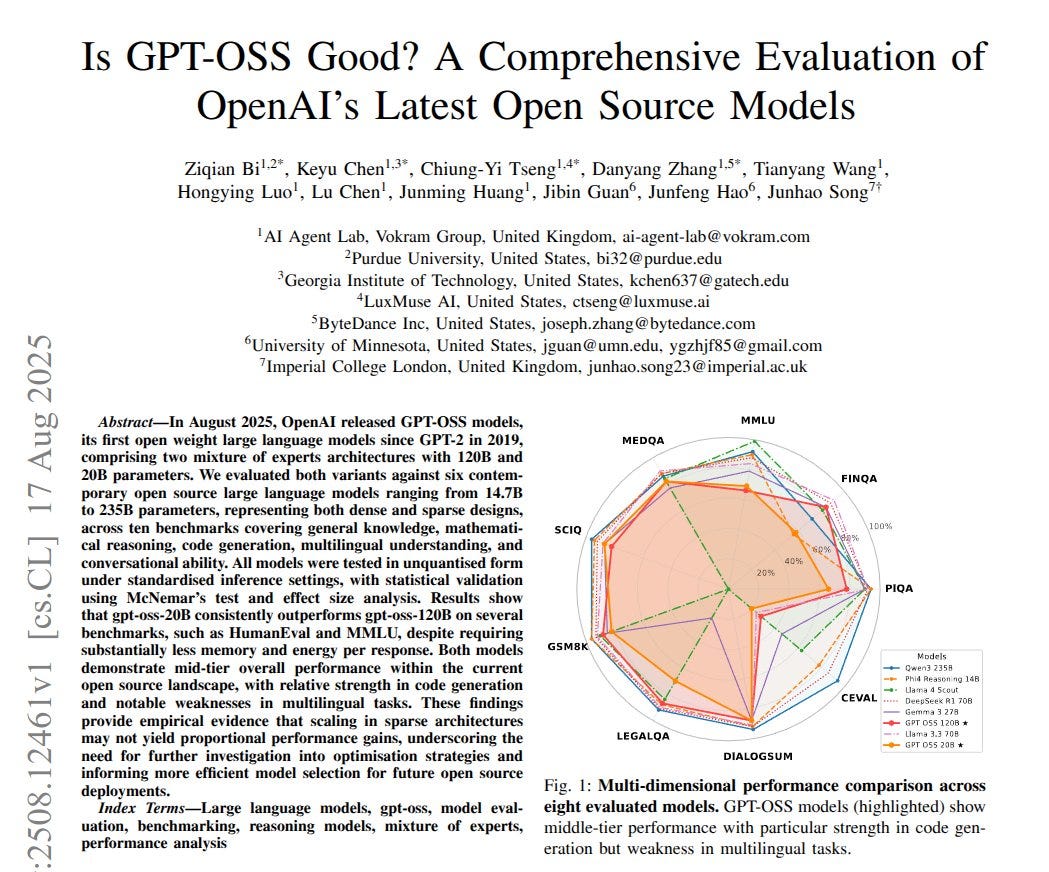

The paper shows OpenAI’s smaller GPT-OSS 20B beats the larger 120B on key tasks.

It also needs 5x less GPU memory and 2.6x lower energy per response than 120B. The core question is simple, do sparse mixture of experts models actually get better just by getting bigger. Mixture of experts means only a few expert blocks activate for each token, which reduces compute while keeping skill.

The authors compare 8 open models across 10 benchmarks under the same inference setup, then validate differences with standard statistical tests. Results put both GPT-OSS models in the middle of the pack, with clear strength in code generation and a clear weakness on Chinese tasks.

Surprisingly, 20B tops 120B on MMLU and HumanEval and stays more concise, which signals routing or training inefficiency in the larger variant.

For practical picks, 20B delivers better cost performance, higher throughput, and lower latency, so it suits English coding and structured reasoning more than multilingual or professional domains.

🗞️ Deep Think with Confidence

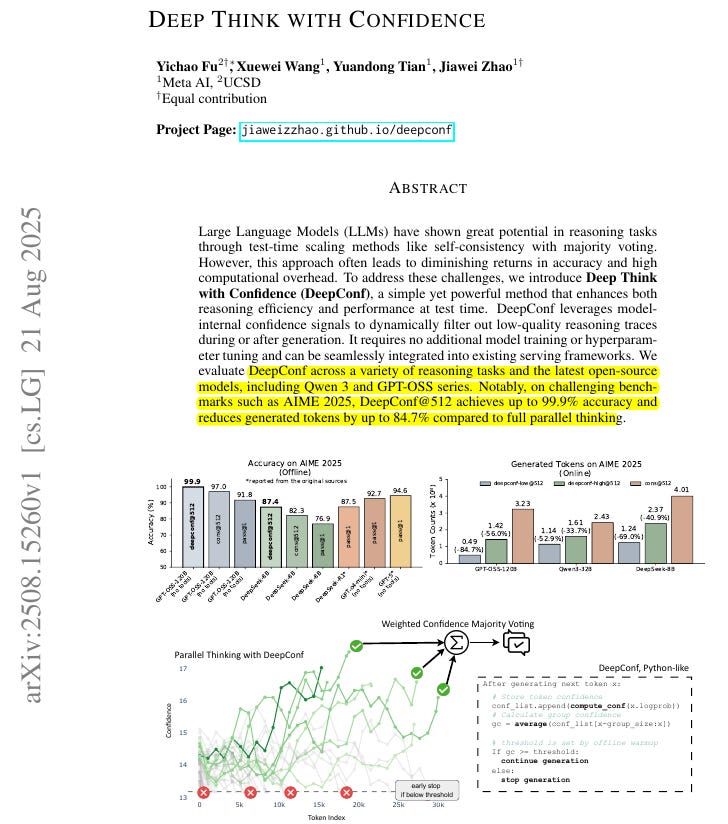

The First method to achieve 99.9% on AIME 2025 with open-source models!

DeepConf uses a model’s own token confidence to keep only its strongest reasoning, with GPT-OSS-120B while cutting tokens by up to 84.7% compared to standard parallel thinking.

Most systems still lean on self-consistency with majority voting, which lifts accuracy but hits diminishing returns and burns a lot of tokens.

🧠 The key idea

DeepConf is a test-time method that scores the model’s reasoning locally for confidence, filters weak traces, and often improves accuracy with fewer tokens without any extra training or tuning.

🧱 Why majority voting hits a wall

Parallel thinking samples many chains and votes, accuracy grows slowly as samples rise so compute scales linearly and the benefit flattens, which is exactly the pain DeepConf targets.

🔎 The confidence signals

Token confidence is the negative mean log probability of the top k candidates at each step, which gives a direct signal of how sure the model is at that moment. Group confidence averages token confidence over a sliding window so local dips are visible without noise from the whole trace.

Tail confidence averages the last chunk of tokens because the ending steps decide the final answer and are where good traces often slip. Bottom 10% group confidence looks at the worst parts of a trace, which is a strong indicator that the overall reasoning is shaky. Lowest group confidence picks the single weakest window along a trace, which turns out to be a clean gate for dropping that trace early.

✅ Bottom line

DeepConf is a plug-in test-time compression recipe that filters or halts weak reasoning in place, so teams get higher accuracy and a big token cut without retraining or new hyperparameters.

🗞️ On the Theoretical Limitations of Embedding-Based Retrieval

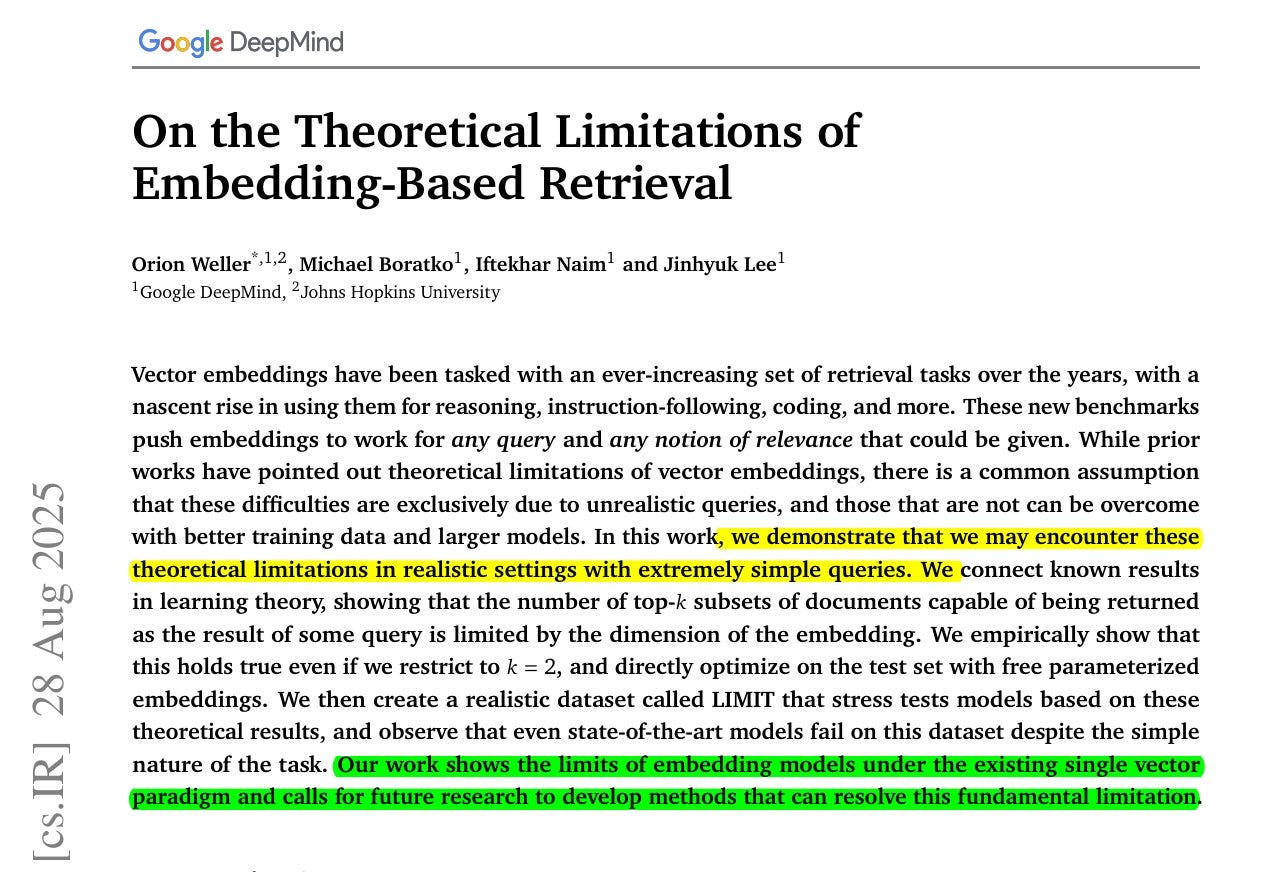

BRILLIANT GoogleDeepMind research. Says that even the best embeddings cannot represent all possible query-document combinations, which means some answers are mathematically impossible to recover.

Reveals a sharp truth, embedding models can only capture so many pairings, and beyond that, recall collapses no matter the data or tuning.

🧠 Key takeaway

Embeddings have a hard ceiling, set by dimension, on how many top‑k document combinations they can represent exactly.

They prove this with sign‑rank bounds, then show it empirically and with a simple natural‑language dataset where even strong models stay under 20% recall@100.

When queries force many combinations, single‑vector retrievers hit that ceiling, so other architectures are needed.

4096‑dim embeddings already break near 250M docs for top‑2 combinations, even in the best case.

🛠️ Practical Implications

For applications like search, recommendation, or retrieval-augmented generation, this means scaling up models or datasets alone will not fix recall gaps.

At large index sizes, even very high-dimensional embeddings fail to capture all combinations of relevant results.

So embeddings cannot work as the sole retrieval backbone. We will need hybrid setups, combining dense vectors with sparse methods, multi-vector models, or rerankers to patch the blind spots.

This shifts how we should design retrieval pipelines, treating embeddings as one useful tool but not a universal solution.

🗞️ "Predicting the Order of Upcoming Tokens Improves Language Modeling"

The paper proposes Token Order Prediction, an extra training signal that boosts next token modeling across many tasks.

Instead of predicting exact future tokens like Multi-Token Prediction, it ranks vocabulary items by how soon they will appear within a window. This ranking target is created during training and learned with a listwise loss, so the model practices ordering instead of exact offsets.

It adds only 1 extra unembedding head next to the usual next token head, no extra transformer layers. During generation the extra head is discarded and the model samples only with the usual next token probabilities.

Across 340M, 1.8B, and 7B models on the same data, this method beats the baseline and Multi-Token Prediction on 8 standard benchmarks. They argue Multi-Token Prediction is too hard because accuracy fades with distance, while proximity ordering gives a cleaner signal for planning.

Net effect, stronger general language modeling without tuning future-token count or building per-offset heads.

🗞️ "AetherCode: Evaluating LLMs' Ability to Win In Premier Programming Competitions"

The paper argues that LLMs are far from mastering competitive programming and introduces AetherCode to measure that gap.

Test suites reach 100% true positive and 100% true negative on collected submissions, so correct code passes and wrong code fails. Existing benchmarks look strong because they use easier tasks and weak tests, which let buggy solutions slip or miss time limits.

AetherCode curates problems from the International Olympiad in Informatics and the International Collegiate Programming Contest, then cleans statements and standardizes evaluation. Tests start with a generator for tough cases and a verified validator for constraints, then experts add targeted adversarial cases.

They judge test quality like a classifier, asking whether good solutions always pass and bad ones always get rejected. The set spans Easy to Extreme and covers dynamic programming, graphs, geometry, data structures, strings, math, and tree problems.

Reasoning models beat non reasoning models by a clear margin, and only the very top models solve the hardest tier. Even with multiple tries, models often fail on abstract areas like computational geometry and tree heavy tasks, showing brittle reasoning.

Bottom line, AetherCode gives a tougher and fairer yardstick, and current LLMs still trail elite human contestants by a lot.

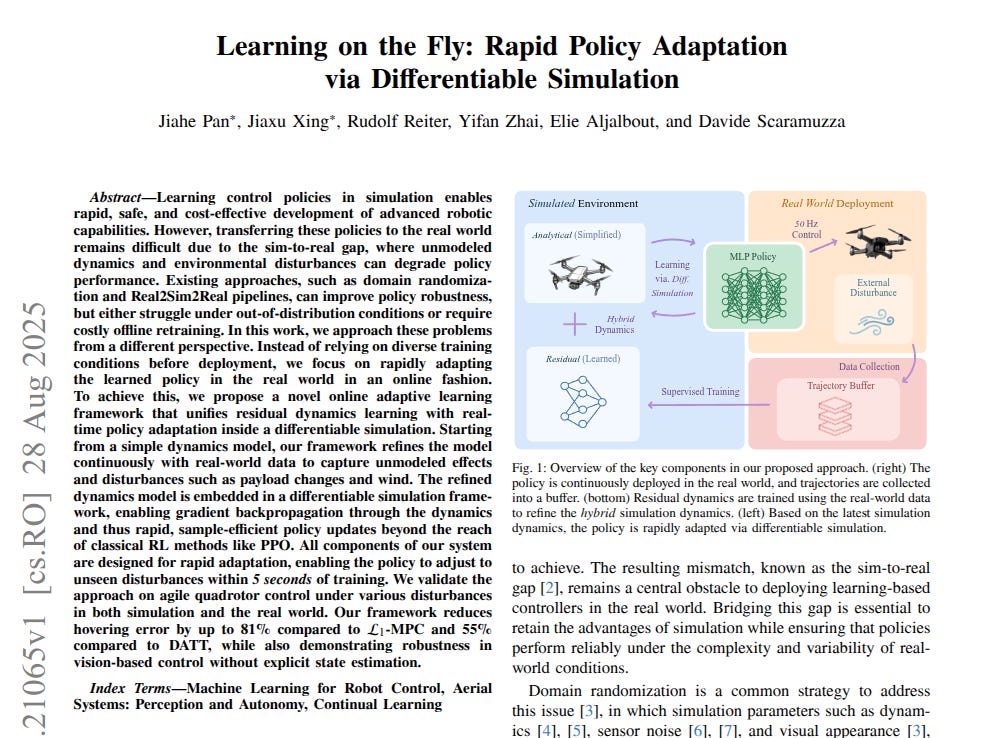

🗞️ "Learning on the Fly: Rapid Policy Adaptation via Differentiable Simulation"

The paper shows how a robot can keep learning on-the-fly and adapt within seconds.

5s adaptation, 81% hover error reduction vs L1 adaptive Model Predictive Control (L1-MPC), 55% vs Deep Adaptive Trajectory Tracking (DATT). Directly trained simulation controllers break in the real world because models miss wind, payload changes, and actuator quirks.

Domain randomization helps, but it fails on out-of-distribution shifts and demands costly retraining. The paper changes this, it keeps training online by pairing a simple physics model with a learned residual.

That hybrid model sits in a differentiable simulator, so gradients update the policy quickly, unlike high variance RL. It alternates updates, learns the residual from fresh flight data, then adapts the policy using the refined dynamics.

It works with full state inputs or just visual keypoints, so it can steer without a separate state estimator. In tests, it stabilizes hover and tracks paths under wind and added mass, improving after quick updates.

Net effect is a self tuning controller that reduces domain randomization and long offline runs.

🗞️ "Mixture of Contexts for Long Video Generation"

ByteDance Seed and Stanford introduce Mixture of Contexts (MoC) for long video generation, tackling the memory bottleneck with a novel sparse attention routing module. It enables minute-long consistent videos with short-video cost.

🗞️ "UQ: Assessing Language Models on Unsolved Questions"

The paper tests language models on real unsolved questions and runs stronger models to verify answers.

Only 15% of questions pass automated validation. Most benchmarks use artificial exams or easy user questions, so they miss hard real cases.

Here, a 3 stage pipeline filters unanswered Stack Exchange posts with rules, screens with LLM judges for clarity and difficulty, then domain experts review into a 500 question set. Approachability means enough context to attempt, well definedness means a precise goal, objectivity means a checkable answer.

Instead of writing answers, models act as validators that test candidates via factual checks, consistency loops, repeated judging, and aggregation like majority or unanimous vote.

A public platform hosts questions, candidate answers, validator results, and community verification, so evaluation keeps running. Across models, judging is more dependable than generating, creating a useful generator versus validator gap.

This setup rewards real progress, because each validated solution resolves a question an actual person asked.

🗞️ Survey of Specialized Large Language Model

Specialized LLMs beat general models in real work by pairing domain data with purpose-built parts. General LLMs stumble on strict terms and domain logic, misreading clinical notes, statutes, and markets.

The survey tracks a move from light fine-tuning to domain-native designs with retrieval, memory, and better evaluation. Data work matters, curated instructions and runnable code cut noise and anchor knowledge.

Architecture adds mixture of experts to route tokens, or small low rank adapters to specialize. Compression and quantization shrink memory while preserving accuracy, and pruning removes weak channels without retraining.

Reasoning modules cache intermediate steps, retrieval plugs in fresh facts, and tool use makes structured API calls. Long-term memory keeps session context and can bake facts into weights, trimming latency and context cost.

Evaluation now checks real skills, pass at multiple tries asks if any attempt works, and perplexity flags drift early. Across medicine, finance, law, and math the survey finds steady domain gains, sometimes from 2.7B models.

Bottom line, focused data plus targeted modules and tough domain tests yield reliable specialist behavior.

That’s a wrap for today, see you all tomorrow.