Read time: 11 min

📚 Browse past editions here.

( I publish this newletter daily. Noise-free, actionable, applied-AI developments only).

⚡Top Papers of last week (ending 25-August):

🗞️ "Beyond GPT-5: Making LLMs Cheaper and Better via Performance-Efficiency Optimized Routing"

🗞️ Voice AI in Firms: A Natural Field Experiment on Automated Job Interviews

🗞️ XQUANT: Breaking the Memory Wall for LLM Inference with KV Cache Rematerialization

🗞️ Retrieval-augmented reasoning with lean language models

🗞️ Intern-S1: A Scientific Multimodal Foundation Model

🗞️ "Virtuous Machines: Towards Artificial General Science"

🗞️ "DuPO: Enabling Reliable LLM Self-Verification via Dual Preference Optimization"

🗞️ "OS-R1: Agentic Operating System Kernel Tuning with Reinforcement Learning"

🗞️ "Is GPT-OSS Good? A Comprehensive Evaluation of OpenAI's Latest Open Source Models"

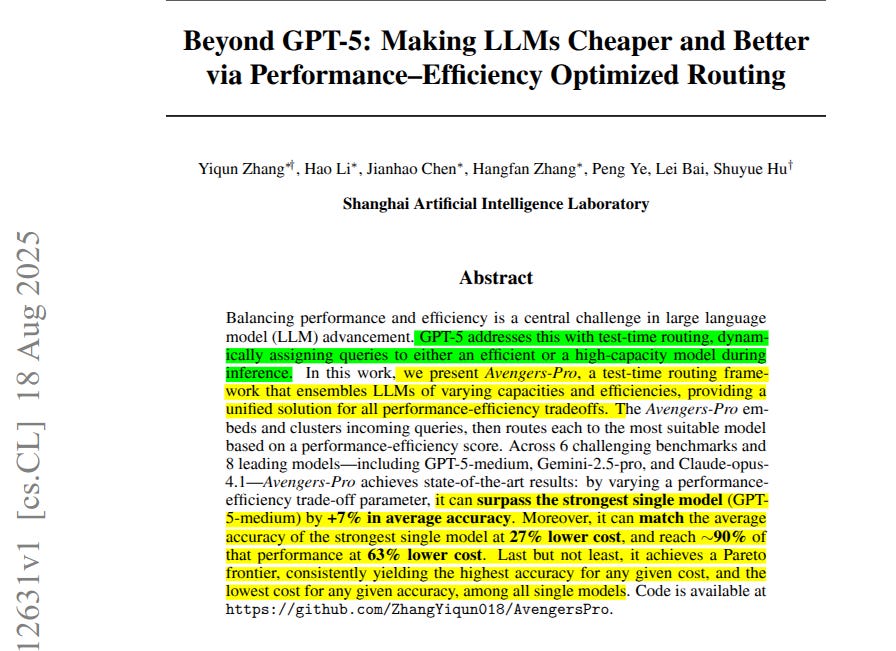

🗞️ "Beyond GPT-5: Making LLMs Cheaper and Better via Performance-Efficiency Optimized Routing"

Fantastic paper to understand Test-time Routing of LLMs.

Proposes Avengers-Pro, a test-time routing framework that ensembles LLMs of varying capacities and efficiencies. With the same spend as GPT-5-medium, Avengers-Pro delivers +7% higher accuracy, and with the same accuracy it costs 27% less.

🧭 Why this exists

LLMs force a choice between fast cheap answers and slower high‑capacity reasoning, so the paper leans on GPT‑5’s idea of test‑time routing and asks how to push cost and accuracy together rather than trading them off.

GPT‑5’s own description mentions a real‑time router that flips between an efficient model and a deeper reasoning model based on query difficulty, and this work generalizes that pattern beyond a single vendor.

🧩 How the router thinks

Avengers‑Pro turns each incoming query into an embedding, groups similar queries into clusters, then scores candidate models on those clusters using a blend of performance and cost so the router can pick the best model for that query type.

At inference, the new query goes to its nearest clusters, the router aggregates each model’s score over those clusters, and the single highest‑scoring model gets the call.

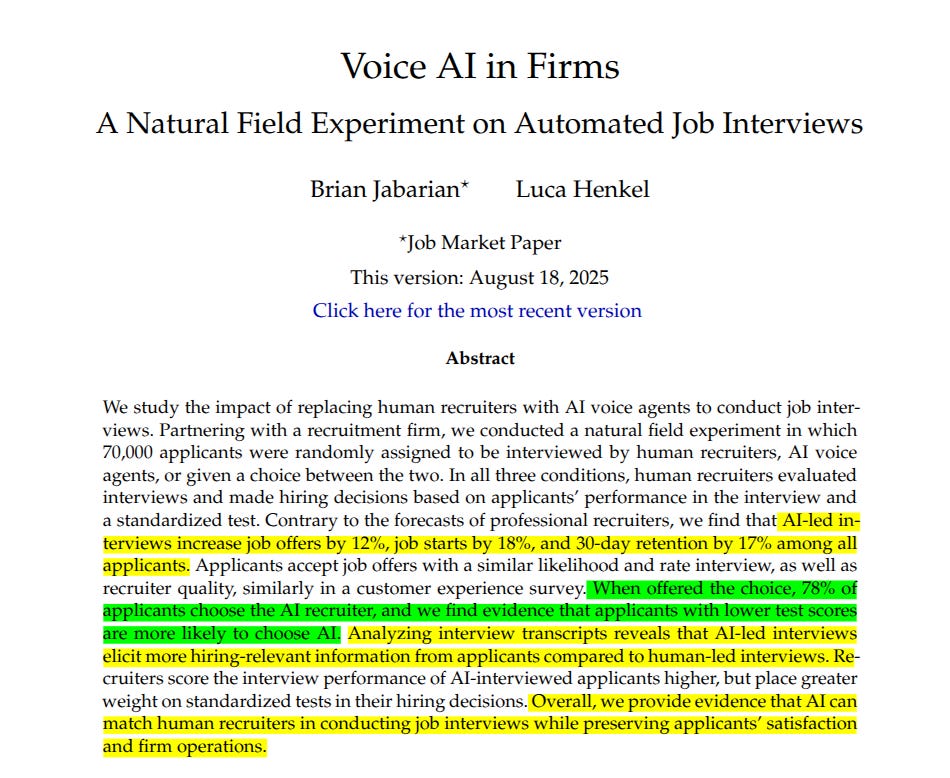

🗞️ Voice AI in Firms: A Natural Field Experiment on Automated Job Interviews

MASSIVE claim in this research. 🤯 Recruiters are in trouble. In a large experiment with 70,000 applications, AI agents outperformed human recruiters in hiring customer service reps.

AI voice agents interviewing job candidates matched human recruiters and even lifted offers, starts, and 30 day retention. The usual practice leans on human interviewers for high volume hiring, which adds delay, cost, and uneven quality. AI will be the BIGGEST SOLUTION to that.

🧠 The idea

In this setup the agent conducts the conversation, collects structured evidence, and a human reviewer decides on the offer. The team ran a large field experiment with 70,884 applications, covering 48 job postings and 43 client accounts, all for entry level customer service roles in the Philippines.

Applicants who passed initial screening were randomly assigned to Human interviewer, AI interviewer, or Choice of interviewer, and in every case a human recruiter reviewed the transcript plus test scores and made the hiring call.

Interviews followed the same guide for both AI and human, then applicants took standardized language and analytical tests that fed into a threshold style decision.

📈 Relative to human led interviews, AI led interviews raised the job offer rate from 8.70% to 9.73% (+12%), and increased job starts by +18% and 30 day retention by +17% among all randomized applicants.

These gains came even though recruiters had predicted AI would underperform on interview quality and retention. Applicant acceptance rates and customer experience scores stayed similar between AI and humans.

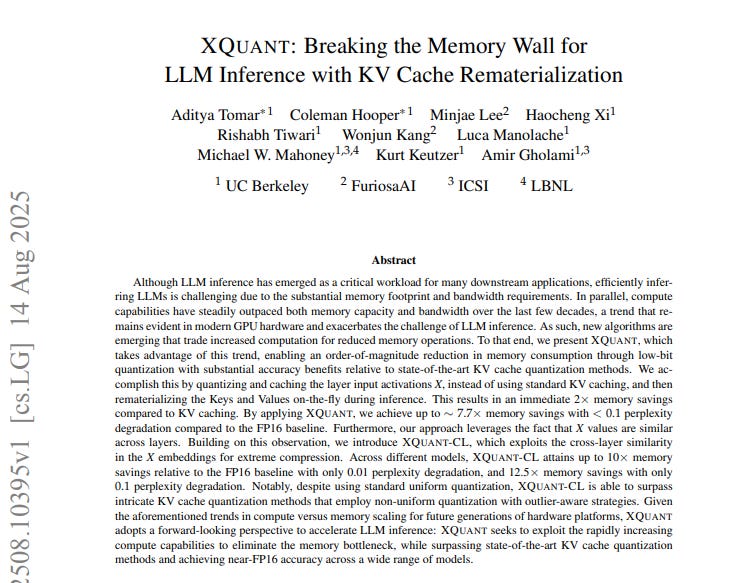

🗞️ XQUANT: Breaking the Memory Wall for LLM Inference with KV Cache Rematerialization

XQUANT, a way to break the memory wall for LLM inference via KV cache, by storing inputs and recomputing attention on demand.

10–12.5x memory savings vs. FP16

Near-zero accuracy loss

Beats state-of-the-art KV quantization🔥

Key Proposals:

KV cache overhead rises linearly with context length and batch, making it the choke point.

FLOPs on GPUs are much faster than their memory throughput.

So instead of keeping KV in memory, just recompute.

In long sequences, the KV cache, the stored Keys and Values from past tokens, dominates memory and must move every step, which throttles bandwidth. XQUANT stores each layer’s input activations X in low bits, then recomputes Keys and Values using the usual projections during decoding.

That single change cuts memory by 2x versus caching both K and V, with small accuracy loss. This works because X quantizes well, and GPUs outpace memory bandwidth, so extra multiplies are a fair trade.

XQUANT-CL goes further by storing only small per layer deltas in X, leveraging the residual stream that keeps nearby layers similar. With this, the paper reports up to 10x memory savings with about 0.01 perplexity drop, and 12.5x with about 0.1.

With grouped query attention, they apply singular value decomposition to the weight matrices, cache X in a lower dimension, then rebuild K and V. Despite using plain uniform quantization, results land near FP16 accuracy and beat more complex KV quantizers at the same footprint.

Net effect, fewer memory reads per token, modest extra compute, and smoother long context serving.

🗞️ Retrieval-augmented reasoning with lean language models

Great Paper. 💡 A small Qwen2.5 model is fine-tuned to think over retrieved documents, so a single lean setup can answer domain questions on resource-constrained local hardware.

Using summarised NHS pages, retrieval hits the right condition among top‑5 in 76% of queries, and the fine‑tuned model predicts the exact condition correctly 56% of the time, close to larger frontier models. The whole pipeline is built for private deployments, so teams can run it without sending data to external APIs.

🔒 The problem they tackle

Many teams cannot ship prompts or data outside their network, especially in health and government, so cloud LLM endpoints are off the table.

They aim for a single lean model that can read retrieved evidence and reason over it, all running locally, so answers stay grounded and private.

The target setting is messy queries over a closed corpus, where retrieval constrains facts and the reasoning step interprets symptoms and next actions.

🧩 The pipeline in this paper.

The system indexes a corpus, retrieves the most relevant pieces for each query, then generates an answer that reasons over those pieces.

They use a classic retriever plus generator design, with retrieval first then reasoning, which fits decision tasks better than free‑form answering.

The chat flow lets a conversational agent decide when to call retrieval, then passes the retrieved context to the reasoning model to produce the answer.

🗞️ Intern-S1: A Scientific Multimodal Foundation Model

Scientific Discovery is going fully AI-native, and chemistry, physics, and biology won’t look the same after this. Shanghai AI Lab has dropped Intern-S1,

It's a scientific multimodal MoE that reads images, text, molecules, and time‑series signals, built with 28B activated parameters, 241B total parameters, and continued pretraining on 5T tokens with 2.5T from science.

It couples a Vision Transformer (ViT), a dynamic tokenizer for structured strings, and a time‑series encoder with a Qwen3‑based MoE LLM, then aligns with offline + online RL using a Mixture‑of‑Rewards service across 1000+ tasks.

It sets strong open‑source results in scientific reasoning and is competitive with major APIs on several professional benchmarks. 10x less RL training time vs recent baselines.

⚙️ The Core Architecture

A Mixture‑of‑Experts LLM fed by InternViT‑6B for images, a dynamic tokenizer for SMILES or FASTA, and a time‑series encoder for raw signals.

Visual tokens are reduced by pixel unshuffle to 256 tokens per 448x448 image, then projected into the LLM embedding space and trained jointly.

All components stay trainable during multimodal stages, so the backbone and encoders co‑adapt for scientific inputs.

🗞️ "Virtuous Machines: Towards Artificial General Science"

Autonomous Discovery Has Begun. 'Virtuous Machines' demonstrates a nascent AI system capable of conducting autonomous scientific research. An agentic, domain-agnostic AI can run the full scientific loop on real people, end-to-end.

From hypothesis to preregistration to data collection to peer-style review to a formatted paper, with minimal human touch, fast and cheap, but with limits in conceptual nuance and some shaky measurement reliability.

An AI system can plan, run, analyze, and write real human experiments end to end, proving automated science is already practical, fast, and low cost.

Across 3 cognitive studies with 288 participants it found mostly null or weak effects, so the real result is feasibility, not a new cognitive claim.

🗞️ "DuPO: Enabling Reliable LLM Self-Verification via Dual Preference Optimization"

DuPO (Dual Learning-based Preference Optimization) shows how an LLM can check its own work reliably without human labels.

RLHF needs humans, and verifiable-reward training needs ground-truth answers, so scale and task coverage suffer. DuPO sidesteps that and lets an LLM improve using its own feedback, by reconstructing hidden input pieces from its answers.

Translation got a 2.13 COMET lift, math accuracy rose by 6.4 points. The model initially does the original task, then a paired task tries to recover only the unknown piece using the answer plus the known context.

If the recovered piece matches, reward is high, if not, reward is low, so the model learns to produce answers that carry enough information. Think of adding 2 numbers when 1 number is revealed, the dual task checks whether the reported total implies the hidden number correctly.

Because the dual focuses on a small slice, it is easier and less noisy than recreating the whole input. They also pick the unknown piece carefully so the backward check is answerable and has a unique correct value.

1 model plays both roles, which reduces direction imbalance and keeps the feedback consistent. They show it on multilingual translation and math, and also as a training free reranker that picks the candidate whose backward check works best.

Net effect, reliable self feedback that scales across tasks without human labeling.

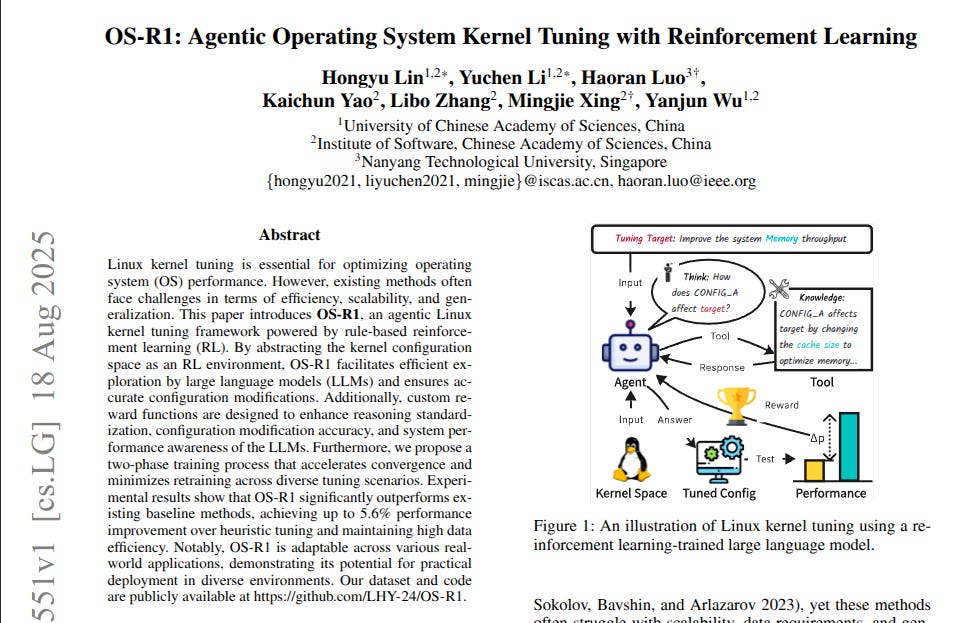

🗞️ "OS-R1: Agentic Operating System Kernel Tuning with Reinforcement Learning"

This paper turns Linux kernel tuning into a reinforcement learning task, training an agent to choose better configurations.

Up to 5.6% system speedup over expert heuristics. Kernel tuning means flipping thousands of options in the Linux build that tweak scheduling, memory, files, and networking.

They shrink the option space into grouped decision units and assemble a 3K dataset where every case compiles and boots. The agent learns in 2 phases, first to follow a strict answer format and use a knowledge tool, then to chase real speed.

Rewards cover 3 things, format discipline, valid configuration edits by type, and measured performance gain judged by an evaluator model. Training uses group relative policy optimization, which samples several candidate changes per step and moves policy weights toward higher scoring ones.

On UnixBench, the tuned configs lift overall system score and often beat strong baselines, including larger models without this procedure. Across Nginx, Redis, and PostgreSQL, it brings consistent throughput and latency wins using the same trained policy.

Bottom line, OS-R1 turns plain goals like reduce latency into concrete, safe kernel switches that generalize across workloads.

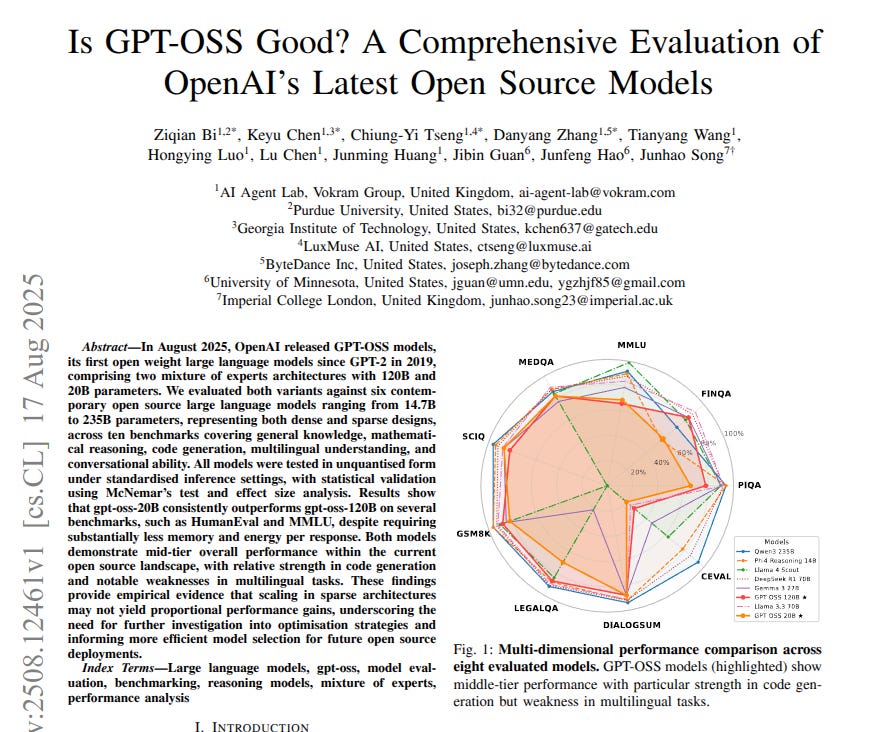

🗞️ "Is GPT-OSS Good? A Comprehensive Evaluation of OpenAI's Latest Open Source Models"

The paper shows OpenAI’s smaller GPT-OSS 20B beats the larger 120B on key tasks. It also needs 5x less GPU memory and 2.6x lower energy per response than 120B.

The core question is simple, do sparse mixture of experts models actually get better just by getting bigger. Mixture of experts means only a few expert blocks activate for each token, which reduces compute while keeping skill.

The authors compare 8 open models across 10 benchmarks under the same inference setup, then validate differences with standard statistical tests.

Results put both GPT-OSS models in the middle of the pack, with clear strength in code generation and a clear weakness on Chinese tasks. Surprisingly, 20B tops 120B on MMLU and HumanEval and stays more concise, which signals routing or training inefficiency in the larger variant.

For practical picks, 20B delivers better cost performance, higher throughput, and lower latency, so it suits English coding and structured reasoning more than multilingual or professional domains.

That’s a wrap for today, see you all tomorrow.

Thanks this is what i needed