Read time: 12 min


(I publish this newsletter daily: noise-free, actionable, applied-AI developments only.)

⚡Top Papers of last week (ending 18-Oct-2025):

🗞️ Agent Learning via Early Experience

🗞️ The Art of Scaling Reinforcement Learning Compute for LLMs

🗞️ StreamingVLM: Real-Time Understanding for Infinite Video Streams

🗞️ Every Language Model Has a Forgery-Resistant Signature

🗞️ RAG-Anything: All-in-One RAG Framework

🗞️ Tensor Logic: The Language of AI

🗞️ Robot Learning: A Tutorial

🗞️ Generative AI and Firm Productivity: Field Experiments in Online Retail

🗞️ A Survey of Vibe Coding with LLMs

🗞️ Training LLM Agents to Empower Humans

🗞️ Agent Learning via Early Experience

🔥 Massive new Meta paper shows a simple way for language agents to learn from their own early actions without rewards. The big deal is using the agent’s own rollouts (i.e. own actions) as free supervision when rewards are missing, instead of waiting for human feedback or numeric rewards.

It cuts human labeling needs and makes agents more reliable in messy, real environments. On a shopping benchmark, success jumps by 18.4 points over basic imitation training.

Many real tasks give no clear reward and expert demos cover too few situations. So they add an early experience stage between imitation learning and reinforcement learning.

The agent proposes alternative actions, tries them, and treats the resulting next screens as supervision. State means what the agent sees right now, and action means what it does next.

Implicit world modeling teaches the model to predict the next state from the current state and the chosen action, which builds a sense of how the environment changes. Self reflection teaches the model to compare expert and alternative results, explain mistakes, and learn rules like budgets and correct tool use.
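
Here is a minimal sketch of how the two kinds of training examples could be built from the agent's own rollouts. Everything in it is an assumption for illustration: the `Example` record, the prompt wording, the toy shopping environment in `toy_step`, and `toy_explain`, which stands in for the reflection text that the model itself would generate. It is not the paper's actual data pipeline.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Example:
    prompt: str
    target: str


def world_model_examples(state: str, actions: List[str],
                         step: Callable[[str, str], str]) -> List[Example]:
    """Implicit world modeling: one next-state prediction example per tried action."""
    return [
        Example(prompt=f"State: {state}\nAction: {a}\nNext state:",
                target=step(state, a))
        for a in actions
    ]


def reflection_example(state: str, expert_action: str, alt_actions: List[str],
                       step: Callable[[str, str], str],
                       explain: Callable[[str, str, List[Tuple[str, str]]], str]) -> Example:
    """Self-reflection: compare expert and alternative outcomes, then train on
    the explanation followed by the expert action."""
    expert_next = step(state, expert_action)
    alt_outcomes = [(a, step(state, a)) for a in alt_actions]
    rationale = explain(expert_action, expert_next, alt_outcomes)
    return Example(prompt=f"State: {state}\nThink, then act:",
                   target=f"{rationale}\nAction: {expert_action}")


# Hypothetical toy environment: a shop with a fixed $20 budget.
def toy_step(state: str, action: str) -> str:
    prices = {"buy_cheap": 15, "buy_pricey": 40}
    if action in prices:
        return "order placed" if prices[action] <= 20 else "error: over budget"
    return "no change"


# Stand-in for the model-written reflection.
def toy_explain(expert_action: str, expert_next: str,
                alt_outcomes: List[Tuple[str, str]]) -> str:
    parts = [f"{expert_action} led to '{expert_next}'"]
    parts += [f"{a} led to '{s}'" for a, s in alt_outcomes if s != expert_next]
    return "; ".join(parts)


state = "cart page, budget $20"
wm_data = world_model_examples(state, ["buy_cheap", "buy_pricey"], toy_step)
reflect = reflection_example(state, "buy_cheap", ["buy_pricey"], toy_step, toy_explain)
```

Note that neither function needs a reward: the next state itself is the supervision signal.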

Across 8 test environments, both methods beat plain imitation and handle new settings better. When real rewards are available later, starting reinforcement learning from these checkpoints reaches higher final scores. This makes training more scalable, less dependent on human labels, and better at recovering from errors.

This image shows how training methods for AI agents have evolved.

On the left, the “Era of Human Data” means imitation learning, where the agent just copies human examples. It doesn’t need rewards, but it can’t scale because humans have to create all the data.

In the middle, the “Early Experience” approach from this paper lets the agent try its own actions and learn from what happens next. It is both scalable and reward-free because the agent makes its own training data instead of waiting for rewards.

On the right, the “Era of Experience” means reinforcement learning, where the agent learns from rewards after many steps. It can scale but depends on getting clear reward signals, which many environments don’t have.

So this figure shows that “Early Experience” combines the best of both worlds: it scales like reinforcement learning but doesn’t need rewards like imitation learning.

The two methods that make up the “Early Experience” training idea.

On the left side, “Implicit World Modeling” means the agent learns how its actions change the world. It looks at what happens when it tries an action and predicts the next state. This helps it build an internal sense of cause and effect before actual deployment.

On the right side, “Self-Reflection” means the agent learns by questioning its own choices. It compares the expert’s action and its own alternative, then explains to itself why one action was better. That explanation becomes new training data.

Both methods start with expert examples but add extra self-generated data. This makes the agent smarter about how the environment works and better at improving its own decisions without extra human feedback.

🗞️ The Art of Scaling Reinforcement Learning Compute for LLMs

A really important paper just dropped by Meta. This is probably the first real research on scaling reinforcement learning with LLMs.

Makes RL training for LLMs predictable by modeling compute versus pass rate with a saturating sigmoid. 1 run of 100,000 GPU-hours matched the early fit, which shows the method can forecast progress.

The framework has 2 parts, a ceiling the model approaches, and an efficiency term that controls how fast it gets there. Fitting those 2 numbers early lets small pilot runs predict big training results without spending many tokens.

The strongest setup, called ScaleRL, is a recipe of training and control choices. ScaleRL combines PipelineRL streaming updates, CISPO, FP32 at the final layer, prompt-level loss averaging, batch-level advantage normalization, zero-variance filtering, and no-positive-resampling. The results say to raise the ceiling first with larger models or longer reasoning budgets, then tune batch size to improve efficiency.

How the ScaleRL method predicts and tracks reinforcement learning performance as compute increases.

The left graph shows that as GPU hours go up, the pass rate improves following a smooth curve that starts fast and then slows down. Both the 8B dense and 17Bx16 mixture-of-experts models fit the same kind of curve, which means the model’s progress can be predicted early on without needing full-scale runs.

The right graph shows that this predictable scaling also holds on a new test dataset called AIME-24. That means ScaleRL’s performance trend is not just tied to training data but generalizes to unseen problems too. Together, the figures show that ScaleRL can be trusted for long runs up to 100,000 GPU-hours because its early scaling pattern remains stable even as compute grows.

This figure compares ScaleRL with other popular reinforcement learning recipes used for training language models.

Each line shows how performance improves as more GPU hours are used. ScaleRL’s curve keeps rising higher and flattens later than the others, which means it continues to learn efficiently even with large compute.

How the paper mathematically models reinforcement learning performance as compute increases.

The curve starts low, rises sharply, then levels off at a maximum value called the asymptotic reward A. The point called Cmid marks where the system reaches half of its total gain. Smaller Cmid means the model learns faster and reaches good performance sooner.
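
To make the curve concrete, here is a small sketch of one saturating sigmoid that matches the description above: a ceiling A, a midpoint Cmid where half of the total gain is reached, and an exponent that controls steepness. The exact parameterization, the function name, and the numbers are assumptions for illustration, not values taken from the paper.

```python
def predicted_pass_rate(compute: float, ceiling: float, c_mid: float,
                        exponent: float, start: float = 0.0) -> float:
    """Saturating sigmoid in compute (assumed form):
    start + (ceiling - start) / (1 + (c_mid / compute) ** exponent)
    """
    return start + (ceiling - start) / (1.0 + (c_mid / compute) ** exponent)


# Illustrative parameters only (hypothetical, not from the paper).
A, C_MID, B, R0 = 0.60, 2_000.0, 1.5, 0.20

for gpu_hours in (500, 2_000, 8_000, 100_000):
    print(gpu_hours, round(predicted_pass_rate(gpu_hours, A, C_MID, B, R0), 3))
```

At `compute == c_mid` the denominator is exactly 2, so the prediction sits halfway between the starting value and the ceiling. A smaller `c_mid` shifts the whole curve left, which is what "learns faster" means here.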

🗞️ StreamingVLM: Real-Time Understanding for Infinite Video Streams

New NVIDIA paper: StreamingVLM lets a vision language model follow endless video in real time while keeping memory and latency steady. It wins 66.18% of head-to-head judgments against GPT-4o mini and runs at 8 FPS on 1 NVIDIA H100.

The big deal is that it makes real-time, infinite video understanding practical, with steady latency, built-in memory, and clear gains over strong baselines. Old methods either look at everything and slow down a lot, or use short windows and forget what happened.

This model keeps a small cache with fixed “anchor” text, a long window of recent text, and a short window of recent vision. When it drops old tokens, it shifts position indexes to keep them in a safe range so generation stays stable.
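
A rough sketch of that cache policy, under simplifying assumptions: tokens are plain strings instead of key/value tensors, the class and method names are hypothetical, and "shifting position indexes" is modeled as renumbering the surviving tokens contiguously from 0.

```python
from typing import List, Tuple


class StreamingCache:
    """Toy cache: fixed anchor text + recent-text window + shorter recent-vision window."""

    def __init__(self, anchor_tokens: List[str], text_window: int, vision_window: int):
        self.anchor = [("anchor", t) for t in anchor_tokens]
        self.recent: List[Tuple[str, str]] = []  # (kind, token) in arrival order
        self.limits = {"text": text_window, "vision": vision_window}

    def append(self, kind: str, token: str) -> None:
        if kind not in self.limits:
            raise ValueError(f"unknown token kind: {kind}")
        self.recent.append((kind, token))
        self._evict()

    def _evict(self) -> None:
        # Walk newest-to-oldest and keep only the most recent N tokens of each kind.
        seen = {"text": 0, "vision": 0}
        kept = []
        for kind, token in reversed(self.recent):
            if seen[kind] < self.limits[kind]:
                kept.append((kind, token))
                seen[kind] += 1
        kept.reverse()
        self.recent = kept

    def positions(self) -> List[Tuple[int, str, str]]:
        # Re-index so positions stay in a bounded range, however long the stream runs.
        entries = self.anchor + self.recent
        return [(pos, kind, token) for pos, (kind, token) in enumerate(entries)]


cache = StreamingCache(["sys"], text_window=3, vision_window=2)
for i in range(10):
    cache.append("vision", f"v{i}")
    cache.append("text", f"t{i}")
print(cache.positions())
```

The point of the re-indexing is that the largest position the model ever sees is bounded by the cache size, not by the stream length.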

Training matches how it runs by using short overlapping chunks with full attention and interleaving video and words every 1 second. It learns timing by only supervising the seconds that actually have speech and using a placeholder for silent seconds.

The team built a cleaned sports-commentary dataset and a new multi-hour benchmark that checks frame-to-text alignment each second. It ends up handling long videos better without extra visual question answering tuning and keeps flat latency by reusing past states.

🗞️ Every Language Model Has a Forgery-Resistant Signature

The paper shows LLM outputs carry a unique ellipse signature that verifies the model and resists forgery. This pattern is built into how the model generates text and is very hard to fake, so it can be used to prove an output’s origin.

That pattern shows up in the log probabilities that models already return. The practical benefit is a natural, cheap way to verify that an output really came from a specific model.

A simple distance check tells if one model or another produced a given step. The pattern is hard to fake because recovering the exact ellipse needs about d squared samples and a solver that scales like d to the power 6.
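
As a toy illustration of the distance check, here is a 2D version with axis-aligned ellipses. This is a deliberate simplification: the real signature lives in a much higher-dimensional space and is derived from log probabilities, and the `Ellipse` class, the model names, and the parameters below are all hypothetical. The last function just restates the d squared and d to the power 6 scaling from the text.

```python
import math
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass
class Ellipse:
    center: Tuple[float, float]
    radii: Tuple[float, float]

    def residual(self, point: Tuple[float, float]) -> float:
        """How far the point is from lying exactly on this ellipse (0 means on it)."""
        scaled = sum(((p - c) / r) ** 2
                     for p, c, r in zip(point, self.center, self.radii))
        return abs(math.sqrt(scaled) - 1.0)

    def point_at(self, angle: float) -> Tuple[float, float]:
        return (self.center[0] + self.radii[0] * math.cos(angle),
                self.center[1] + self.radii[1] * math.sin(angle))


def attribute(point: Tuple[float, float], ellipses: Dict[str, Ellipse]) -> str:
    """Pick the model whose ellipse the observed point sits closest to."""
    return min(ellipses, key=lambda name: ellipses[name].residual(point))


def forgery_scaling(d: int) -> Tuple[int, int]:
    """Order-of-magnitude scaling from the text: ~d^2 samples, ~d^6 solver cost."""
    return d ** 2, d ** 6


models = {
    "model_a": Ellipse(center=(0.0, 0.0), radii=(3.0, 1.0)),
    "model_b": Ellipse(center=(0.5, -0.2), radii=(2.0, 2.0)),
}
observed = models["model_a"].point_at(2.0)
print(attribute(observed, models))
```

Verification is one cheap residual computation per candidate model, while the forger's side grows with the sixth power of the hidden size, which is where the asymmetry comes from.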

The authors estimate that copying a 70B model’s ellipse would cost over $16,000,000 and roughly 16,000 years of compute. Verification stays cheap since one generation step is usually enough.

The signature is built in because modern models normalize then apply a linear layer, which maps hidden states onto an ellipse in a higher space. It is also self-contained since a verifier only needs the ellipse parameters and the log probabilities, not the prompt or full weights.

It improves on earlier linear signatures, which attackers can copy much more easily. A real limitation is that some APIs do not expose log probabilities, which this method needs.

🗞️ RAG-Anything: All-in-One RAG Framework

This paper builds a single RAG system that understands and retrieves from text, images, tables, and formulas together. Most tools turn everything into plain text and lose useful structure like headers, figure links, and symbol references.

This system keeps each small piece in its original form and context. The big deal is reliable retrieval across mixed content without losing structure.

Most real documents mix text, figures, and tables, and this system finally treats them that way. The key finding is that combining structural graphs with embeddings gives stronger recall and cleaner answers on complex documents.

It represents a document as 2 connected graphs, one for cross-modal structure and one for text meaning. At query time it searches along the graph to follow entities and relations.

It also does dense embedding search to catch semantically similar pieces that are not directly linked. It merges results with a score that mixes graph importance, embedding similarity, and query hints about modality.
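
The merge step can be pictured as a weighted blend of the two retrieval routes plus a small modality bonus. The sketch below assumes a simple linear mix; the weights, the function name, and the chunk IDs are made up for illustration and are not the framework's actual scoring function.

```python
from typing import Dict, List, Optional, Tuple


def merge_and_rank(graph_hits: Dict[str, float],
                   dense_hits: Dict[str, float],
                   modality_of: Dict[str, str],
                   preferred_modality: Optional[str] = None,
                   weights: Tuple[float, float, float] = (0.4, 0.5, 0.1),
                   ) -> List[Tuple[str, float]]:
    """Union the graph and dense candidates, then score each one.

    graph_hits / dense_hits map chunk_id -> score in [0, 1];
    a chunk missing from one route contributes 0 for that route.
    """
    w_graph, w_embed, w_modality = weights
    ranked = []
    for chunk_id in set(graph_hits) | set(dense_hits):
        bonus = 1.0 if modality_of[chunk_id] == preferred_modality else 0.0
        score = (w_graph * graph_hits.get(chunk_id, 0.0)
                 + w_embed * dense_hits.get(chunk_id, 0.0)
                 + w_modality * bonus)
        ranked.append((chunk_id, score))
    # Highest score first; break ties by chunk_id so the order is deterministic.
    ranked.sort(key=lambda item: (-item[1], item[0]))
    return ranked


ranked = merge_and_rank(
    graph_hits={"table_3": 0.9, "para_12": 0.4},
    dense_hits={"para_12": 0.8, "fig_2": 0.7},
    modality_of={"table_3": "table", "para_12": "text", "fig_2": "image"},
    preferred_modality="table",  # e.g. the query mentions "the table"
)
print(ranked)
```

A chunk found by both routes (like `para_12` here) naturally rises, while a chunk found only through the graph still gets a chance if the query hints at its modality.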

For answering, it rebuilds a clear text context, attaches the right visuals, and uses a vision language model to write the answer. This works better on long mixed documents because it preserves panel to caption and row to column to unit links. The main idea is graphs for structure and embeddings for meaning, used together.

🗞️ Tensor Logic: The Language of AI

This paper proposes a single-construct language, the tensor equation, that unifies neural nets and logic, enabling reliable reasoning. It claims a full transformer fits in 12 equations.

Could make AI simpler to build, easier to verify, and less prone to hallucinations. Today AI uses many tools, which hurts trust and maintenance.

Tensor logic stores facts in tensors, then programs join and project them like database operations. A database rule such as Datalog becomes 1 tensor operation that counts matches, then maps counts to 0 or 1.
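
A quick way to see this: take the classic rule "X is a grandparent of Z if X is a parent of some Y and Y is a parent of Z". The sketch below writes it as a join (multiply), a projection (sum over Y), and a step that maps counts to 0 or 1. It uses plain Python lists and a hypothetical three-person family, and is not the paper's own syntax.

```python
from typing import List

Matrix = List[List[int]]


def datalog_join_project(left: Matrix, right: Matrix) -> Matrix:
    """out[x][z] = step(sum_y left[x][y] * right[y][z])

    The sum counts how many y values satisfy both body atoms;
    the step turns any positive count into 1 (true) and zero into 0 (false).
    """
    n, m, k = len(left), len(right), len(right[0])
    counts = [[sum(left[x][y] * right[y][z] for y in range(m))
               for z in range(k)]
              for x in range(n)]
    return [[1 if c > 0 else 0 for c in row] for row in counts]


people = ["ann", "bob", "cat"]
# parent[x][y] == 1 means people[x] is a parent of people[y].
parent = [
    [0, 1, 0],  # ann is a parent of bob
    [0, 0, 1],  # bob is a parent of cat
    [0, 0, 0],
]

grandparent = datalog_join_project(parent, parent)
for x, row in enumerate(grandparent):
    for z, value in enumerate(row):
        if value:
            print(f"{people[x]} is a grandparent of {people[z]}")
```

Without the final step this is just a matrix product, which is why the same equation style can also describe neural network layers.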

Gradients fit this equation form too. Blocks like convolution, attention, and multilayer perceptrons reduce to the same compact style.

Reasoning runs in embedding space using learned vectors with a temperature setting. At temperature 0 it acts like strict logic and reduces hallucinations by avoiding loose matches.

Higher temperature lets similar items share evidence, enabling analogies while staying transparent. The goal is 1 clear language that covers code, data, learning, and reasoning.

🗞️ Robot Learning: A Tutorial

The paper explains how robots can learn useful skills from data, with practical recipes. It frames robot learning as decision making under uncertainty, where an agent maps observations to actions that achieve goals.

It combines imitation learning, reinforcement learning, and model based planning with learned dynamics. Imitation is fast but can drift in new scenes, so experts correct actions and grow the dataset.

Reinforcement learning learns by reward, but on robots it is costly and risky, so methods reuse logs and safer objectives. Model based methods learn a predictive simulator and plan inside it to cut data needs.

Perception learns compact features from pixels so the controller uses stable signals, not raw images. It shows sim to real transfer by randomizing textures, lighting, and physics, then fine tuning on a small real set, and it links these parts into simple recipes that generalize.
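
The randomization part of sim to real transfer is easy to sketch: draw a fresh set of visual and physics parameters for every simulated episode so the policy never overfits to one rendering or one friction value. The parameter names and ranges below are hypothetical placeholders, not values from the tutorial.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class SimConfig:
    texture_id: int
    light_intensity: float
    friction: float
    mass_scale: float


def sample_sim_config(rng: random.Random, n_textures: int = 50) -> SimConfig:
    """Draw one randomized simulator configuration (assumed ranges)."""
    return SimConfig(
        texture_id=rng.randrange(n_textures),    # which surface texture to render
        light_intensity=rng.uniform(0.3, 1.5),   # dim to bright lighting
        friction=rng.uniform(0.5, 1.2),          # contact friction coefficient
        mass_scale=rng.uniform(0.8, 1.2),        # +/- 20% object mass
    )


rng = random.Random(0)  # seeded so runs are repeatable
episode_configs = [sample_sim_config(rng) for _ in range(1000)]
```

Each training episode would then build its simulated scene from one `SimConfig` before collecting data, with the small real-world set reserved for the final fine tuning step.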

🗞️ Generative AI and Firm Productivity: Field Experiments in Online Retail

Extremely important study. Shows generative AI can raise online retail productivity by boosting conversions without extra inputs.

Up to 16.3% sales lift and about $5 per consumer annually are reported. This is rare causal evidence that generative AI can raise firm productivity today.

The team ran randomized tests across 7 parts of the shopping flow on a huge global platform. They kept labor, capital, and prices the same, so extra revenue equals real productivity gains.

The biggest win was a pre-sale chatbot that answered product questions in many languages. Better search queries and clearer product descriptions helped shoppers find the right items faster.

Chargeback defense won more disputes and live chat translation made customers happier. Conversion rates went up while average cart size stayed the same, so more people bought, not bigger baskets.
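
The "more people bought, not bigger baskets" point is just a decomposition of revenue per visitor into conversion rate times average cart size. The numbers below are invented purely to show the arithmetic and are not from the study.

```python
def revenue_per_visitor(conversion_rate: float, avg_cart_value: float) -> float:
    """Revenue per visitor = share of visitors who buy x average basket value."""
    return conversion_rate * avg_cart_value


def relative_lift(control: float, treated: float) -> float:
    return (treated - control) / control


# Made-up illustration: conversion rises, basket size stays flat.
control = revenue_per_visitor(conversion_rate=0.020, avg_cart_value=50.0)
treated = revenue_per_visitor(conversion_rate=0.022, avg_cart_value=50.0)

revenue_lift = relative_lift(control, treated)
conversion_lift = relative_lift(0.020, 0.022)
print(round(revenue_lift, 4), round(conversion_lift, 4))
```

When the cart value is unchanged it cancels out, so the revenue lift equals the conversion lift. That is why flat basket sizes let the authors attribute the sales gain to more shoppers completing a purchase.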

Across wins, the added value was about $5 per consumer per year. Gains were strongest for small or new sellers who lack resources. Less experienced shoppers benefited most because clearer info cut language and search friction.

🗞️ A Survey of Vibe Coding with LLMs

A long 92-page survey on Vibe Coding, where developers guide and check results while AI coding agents do most of the coding work. It builds a theory showing how humans, projects, and coding agents all interact and depend on each other.

The authors say success depends not only on how powerful the AI is but on how well the whole setup is designed. Good results need clear context, reliable tools, and tight collaboration between humans and agents.

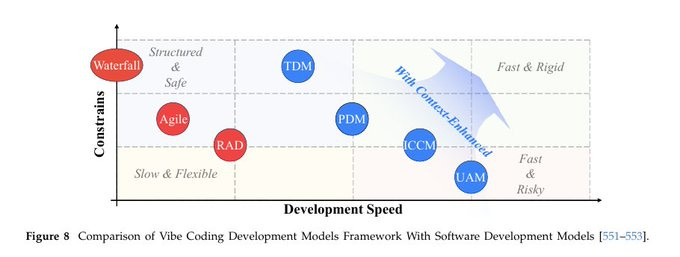

The paper also organizes existing research into 5 working styles: full automation, step-by-step collaboration, plan-based, test-based, and context-enhanced models.

Each style balances control and autonomy differently, depending on what teams need. The study points out that messy prompts and unclear goals cause big productivity losses.

Structured instructions, test-driven development, and feedback loops fix this. Security also matters a lot, so agents should run inside sandboxes with built-in checks and safety rules.

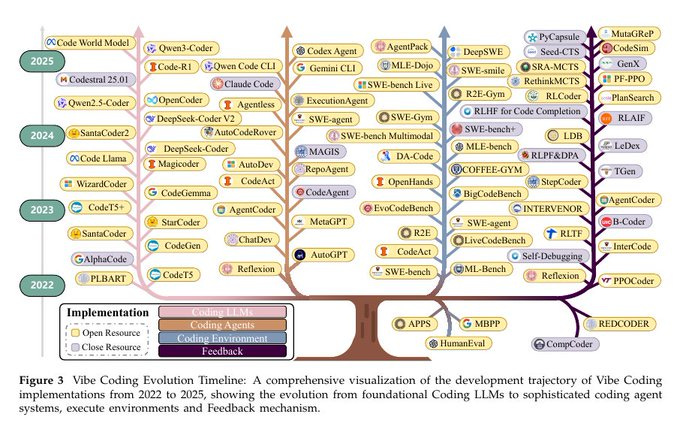

The taxonomy of Vibe Coding is categorized into large language model foundations, coding agent architectures, development environments, and feedback mechanisms.

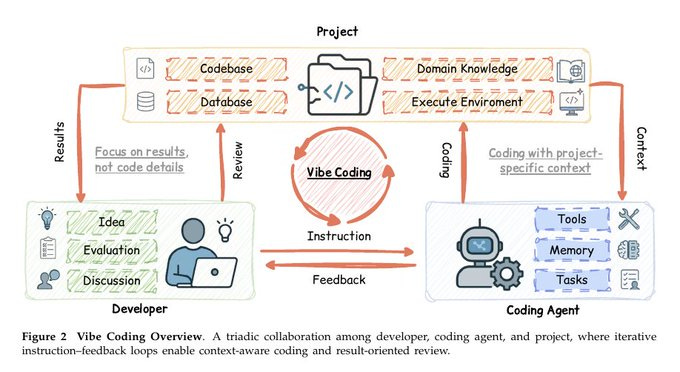

Vibe Coding Overview.

A triadic collaboration among developer, coding agent, and project, where iterative instruction–feedback loops enable context-aware coding and result-oriented review.

Vibe Coding Evolution Timeline

Comparison of Vibe Coding Development Models Framework With Software Development Models

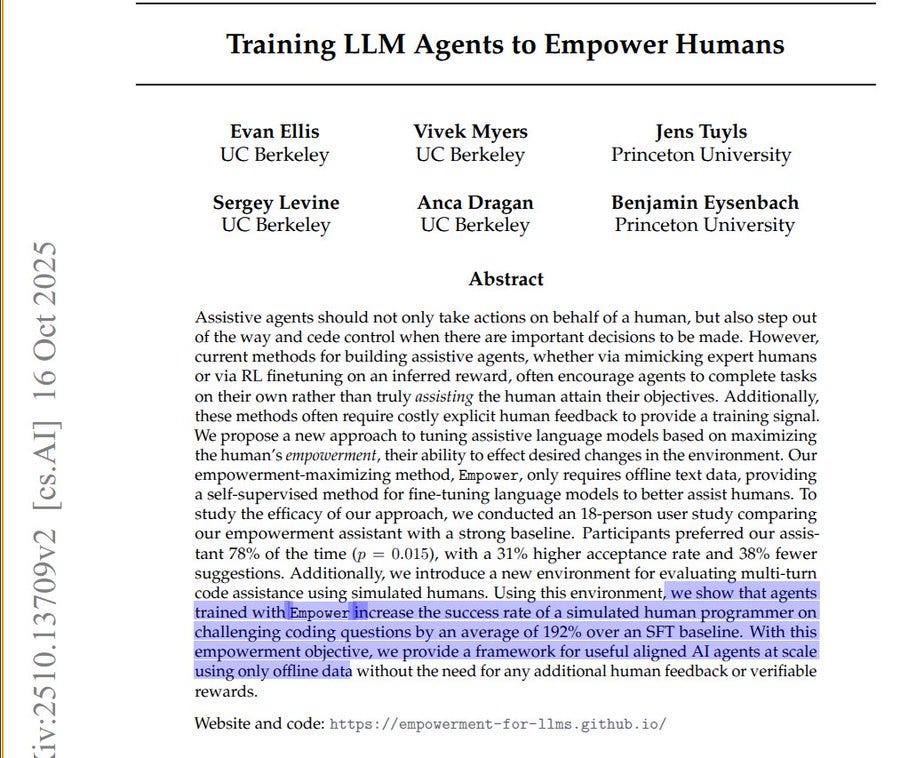

🗞️ Training LLM Agents to Empower Humans

New UC Berkeley paper could be very useful for the vibe coding pipeline. It trains LLM coding assistants to stop generating code when uncertainty rises and let the human decide the next step. This helps the AI handle repetitive or obvious code while keeping the user in control of creative or ambiguous decisions.

In simulation, success for a simulated human jumped 192% using only offline data. Today, assistants often dump long code that bakes in wrong assumptions.

People then waste time fixing code they did not want. The method maximizes human empowerment, meaning the next human action has more impact.

Low empowerment means predictable text, so the assistant writes that and pauses before a branch. It checks confidence over the continuation and picks the longest safe chunk above a threshold.
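
A simplified sketch of the "longest safe chunk" idea: walk the proposed continuation token by token, track the joint probability of the chunk so far, and stop just before it drops under a threshold. Using joint token probability as the confidence measure, plus the function name and the numbers, are assumptions for illustration. The paper's actual empowerment estimate may be computed differently.

```python
import math
from typing import List


def longest_safe_prefix(token_probs: List[float], threshold: float) -> int:
    """Return how many leading tokens to emit so that the joint probability
    of the emitted chunk stays at or above `threshold`."""
    log_threshold = math.log(threshold)
    log_confidence = 0.0
    keep = 0
    for p in token_probs:
        if p <= 0.0:
            break  # impossible token: never emit it
        log_confidence += math.log(p)
        if log_confidence < log_threshold:
            break  # confidence fell too far: hand control back to the human
        keep += 1
    return keep


# Hypothetical continuation: boilerplate tokens, then an ambiguous choice.
tokens = ["for", " i", " in", " range", "(", "n", ")"]
probs = [0.99, 0.98, 0.99, 0.97, 0.99, 0.40, 0.95]

n_keep = longest_safe_prefix(probs, threshold=0.9)
print("".join(tokens[:n_keep]))
```

The assistant writes the predictable part and pauses right before the token where it is effectively guessing, which is the branch point the human should decide.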

Training uses only offline human code, with no preference labels or reward models. In a user study, 78% preferred it, and acceptance was 31% higher.

It also made fewer suggestions, which reduced churn while editing. The idea is to handle boilerplate, stop at uncertainty, and let people steer.

That’s a wrap for today, see you all tomorrow.