Read time: 12 min


(I publish this newsletter daily. Noise-free, actionable, applied-AI developments only.)

⚡Top Papers of last week (ending 15-Nov):

🗞️ LLM Output Drift: Cross-Provider Validation & Mitigation for Financial Workflows

🗞️ LeJEPA: Provable and Scalable Self-Supervised Learning Without the Heuristics

🗞️ Tiny Model, Big Logic: Diversity-Driven Optimization Elicits Large-Model Reasoning Ability in VibeThinker-1.5B

🗞️ Solving a Million-Step LLM Task with Zero Errors

🗞️ Too Good to be Bad: On the Failure of LLMs to Role-Play Villains

🗞️ Grounding Computer Use Agents on Human Demonstrations

🗞️ HaluMem: Evaluating Hallucinations in Memory Systems of Agents

🗞️ IterResearch: Rethinking Long-Horizon Agents via Markovian State Reconstruction

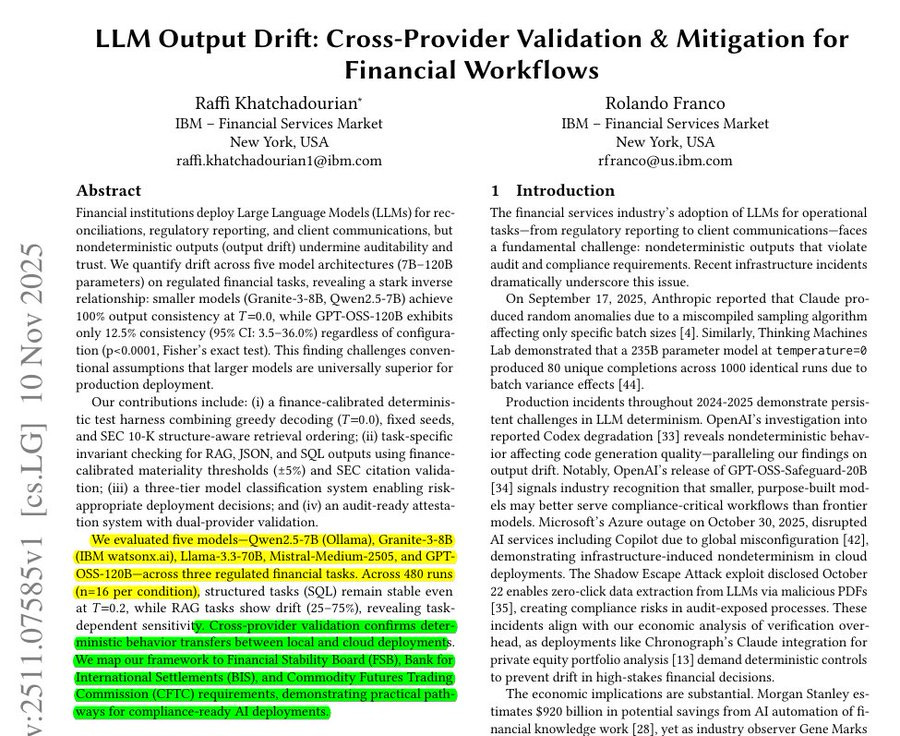

🗞️ LLM Output Drift: Cross-Provider Validation & Mitigation for Financial Workflows

Beautiful. New research from IBM shows how to stop an LLM from changing its answers: smaller models run under fully deterministic settings can give the same response every time.

Because LLMs normally generate text probabilistically, IBM explored whether that randomness could be eliminated. The study ran 480 trials across 5 models and 3 tasks at temperature 0: the 7B to 8B models produced identical outputs on every run, while the 120B model produced identical outputs only 12.5% of the time, even with sampling randomness turned off.

The main sources of instability were retrieval order and sampling, so the team enforced greedy decoding, fixed seeds, and a strict SEC 10-K paragraph order to keep every run on the same path. They added schema checks for JSON and SQL outputs and treated numeric answers as matching only if they fell within a 5% tolerance, which preserves factual consistency while preventing tiny formatting changes from counting as drift.
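A minimal sketch of how such drift checks might look, assuming each run's answer arrives as a plain string. The helper names, the 5% relative tolerance via `math.isclose`, and the simple required-keys test (standing in for a full JSON Schema validator) are our assumptions, not IBM's code.

```python
import json
import math

def numbers_match(a: float, b: float, rel_tol: float = 0.05) -> bool:
    # Two numeric answers count as the same if they differ by at most 5% (relative).
    return math.isclose(a, b, rel_tol=rel_tol)

def equivalent(a: str, b: str) -> bool:
    # Compare numerically when both answers parse as numbers, else as exact text.
    try:
        return numbers_match(float(a), float(b))
    except ValueError:
        return a.strip() == b.strip()

def valid_json(text: str, required_keys: set[str]) -> bool:
    # Hypothetical schema check: output must be a JSON object with the required keys.
    try:
        obj = json.loads(text)
    except json.JSONDecodeError:
        return False
    return isinstance(obj, dict) and required_keys.issubset(obj)

def consistency_rate(outputs: list[str]) -> float:
    # Share of runs whose answer is equivalent to the first run's answer.
    baseline = outputs[0]
    return sum(equivalent(baseline, o) for o in outputs) / len(outputs)
```

With this rule, "100" and "101" count as the same answer, while "100" and "120" count as drift.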

At temperature 0.2, consistency dropped for retrieval-augmented Q&A while SQL and short summaries stayed 100% consistent, which shows that structured outputs are naturally stable and free text is sensitive to randomness. The proposed deployment tiers reflect this: 7B to 8B models are cleared for all regulated work, 40B to 70B models are acceptable for structured outputs only, and the 120B model is flagged as unsuitable for audit-exposed flows.

Cross-provider tests produced matching results on local and cloud deployments, so determinism carries over between environments when these controls are applied. For finance stacks, the playbook is temperature 0, frozen retrieval order, versioned prompts and manifests, and dual validation before go-live.
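One way to make "versioned prompts and manifests" concrete is to hash every setting that can change an answer, so runs are only compared when their fingerprints match. This is a hypothetical sketch: the `RunManifest` fields and the SHA-256 fingerprint are illustrative choices, not the paper's manifest format.

```python
import hashlib
import json
from dataclasses import asdict, dataclass

@dataclass(frozen=True)
class RunManifest:
    # Hypothetical fields covering the settings that can change an answer.
    model: str
    prompt_version: str
    prompt_text: str
    temperature: float
    seed: int
    retrieval_doc_ids: tuple[str, ...]  # order matters: retrieval order is frozen

    def fingerprint(self) -> str:
        # Canonical JSON (sorted keys) so equal manifests always hash the same.
        payload = json.dumps(asdict(self), sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()
```

Storing the fingerprint next to each output makes it easy to tell whether an apparent drift is really a changed prompt, model, seed, or retrieval order.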

🗞️ LeJEPA: Provable and Scalable Self-Supervised Learning Without the Heuristics

This is a new paper from Yann LeCun. It introduces LeJEPA, a simple, theory-backed way to pretrain stable, useful self-supervised representations.

Earlier JEPAs needed tricks like stop-gradient, predictor heads, and teacher-student networks to avoid collapsed features. LeJEPA removes these tricks and adds a single regularizer called Sketched Isotropic Gaussian Regularization (SIGReg).

The big deal is that LeJEPA replaces ad hoc anti-collapse tricks with a mathematically optimal feature shape. That makes self-supervised pretraining more stable, easier to run at scale, and easier to evaluate without labels.

The headline numbers: a single hyperparameter, no teacher-student tricks, stable training at 1.8B parameters, and 79% linear-probe accuracy on ImageNet-1K. SIGReg pushes feature vectors to spread out evenly in all directions, forming an isotropic Gaussian cloud.

The authors prove that this spherical feature shape minimizes average error over many future tasks. SIGReg checks and enforces that shape using random 1D projections of features plus a simple statistical test with linear cost.

With this setup, training stays stable across different backbone architectures and scales to very large models. The combined LeJEPA loss also tracks linear-probe accuracy closely, so model quality can be judged without labels.

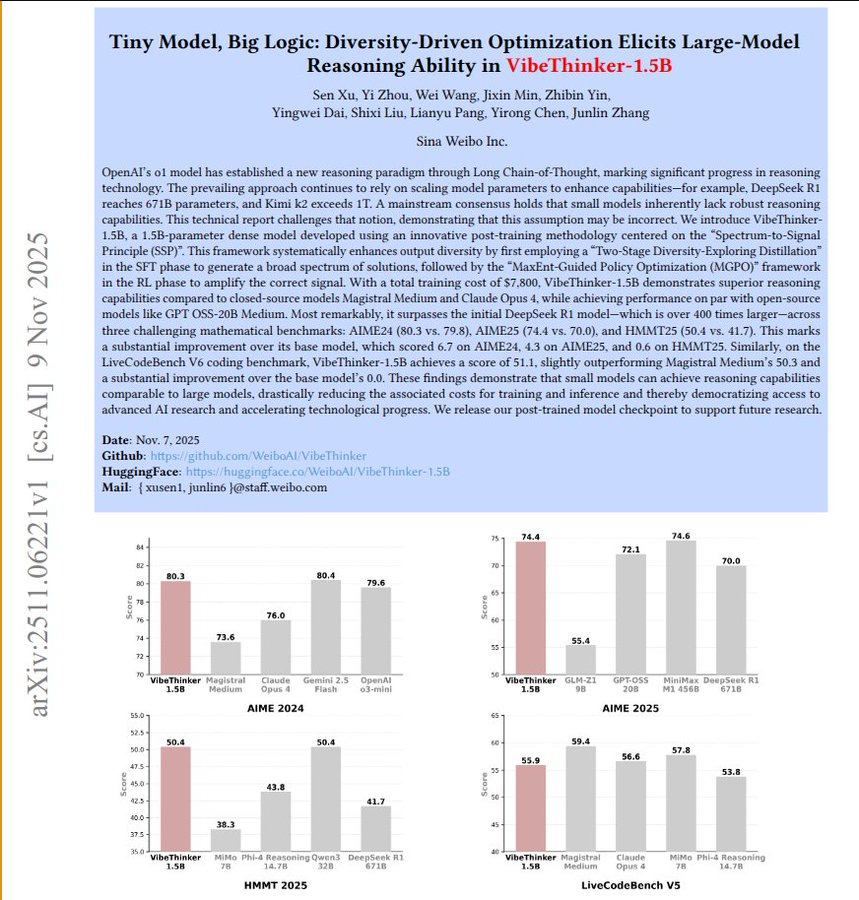

🗞️ Tiny Model, Big Logic: Diversity-Driven Optimization Elicits Large-Model Reasoning Ability in VibeThinker-1.5B

The paper introduces VibeThinker-1.5B, a 1.5B-parameter dense model. The authors report that, with a total training cost of only $7,800, VibeThinker-1.5B shows stronger reasoning than the closed-source models Magistral Medium and Claude Opus 4, while performing on par with open-source models like GPT OSS-20B Medium.

The model is built with an innovative post-training methodology centered on the “Spectrum-to-Signal Principle (SSP)”. Spectrum-to-signal means they first generate a broad spectrum of diverse answers, then amplify the true signal by training more heavily on the successful ones.

In the spectrum phase, the model learns to produce many diverse attempts for each math or code problem instead of a single guess. The authors increase diversity by training specialists for algebra, geometry, calculus, and statistics and averaging them into a single model.
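Averaging specialists can be as simple as a per-parameter mean across checkpoints. The sketch below assumes every specialist shares the same architecture and stores parameters as plain lists keyed by name; uniform weights are our default, and the paper's exact merging recipe may differ.

```python
from typing import Optional

def merge_specialists(
    specialists: list[dict[str, list[float]]],
    weights: Optional[list[float]] = None,
) -> dict[str, list[float]]:
    # Default to a uniform average over all specialist checkpoints.
    if weights is None:
        weights = [1.0 / len(specialists)] * len(specialists)
    if len(weights) != len(specialists) or abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("need one weight per specialist, summing to 1")
    merged = {}
    for name in specialists[0]:
        tensors = [model[name] for model in specialists]
        # Weighted mean of the same parameter across all specialists.
        merged[name] = [
            sum(w * t[i] for w, t in zip(weights, tensors))
            for i in range(len(tensors[0]))
        ]
    return merged
```

Real checkpoints would hold tensors rather than lists, but the operation is the same elementwise weighted mean.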

In the signal phase, reinforcement learning gives higher reward to reasoning traces that solve the problem and down-weights failures. A MaxEnt rule focuses training on questions the model answers correctly about 50% of the time, where it is most uncertain.
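A minimal sketch of a MaxEnt-style weighting, assuming a question's difficulty is summarized by the fraction of sampled answers that are correct. We use the KL divergence from a fair coin, so questions near 50% accuracy get full weight, and we apply it to simple group-relative advantages; this exact form and the `lam` value are our assumptions, not necessarily the paper's formula.

```python
import math

def maxent_weight(accuracy: float, lam: float = 2.0) -> float:
    # KL(Bernoulli(accuracy) || Bernoulli(0.5)) is 0 at 50% accuracy and grows
    # toward log(2) as the model always or never solves the question.
    eps = 1e-12
    p = min(max(accuracy, eps), 1.0 - eps)
    kl = p * math.log(p / 0.5) + (1.0 - p) * math.log((1.0 - p) / 0.5)
    return math.exp(-lam * kl)

def weighted_advantages(rewards: list[float], lam: float = 2.0) -> list[float]:
    # Group-relative advantages for one question (reward minus group mean),
    # scaled by how uncertain the model is on that question.
    accuracy = sum(rewards) / len(rewards)
    weight = maxent_weight(accuracy, lam)
    return [weight * (r - accuracy) for r in rewards]
```

A question solved in half of the sampled attempts keeps its full advantage signal, while one solved 90% of the time is scaled down to under half.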

These steps turn noisy trial and error into more stable multi-step reasoning that generalizes to new problems. The final 1.5B model beats DeepSeek R1 on AIME24, AIME25, and HMMT25 and scores 51.1 on LiveCodeBench v6, all for about $7,800 in training cost.

🗞️ Solving a Million-Step LLM Task with Zero Errors

The paper shows how to run very long LLM workflows reliably by breaking them into tiny steps: 1,048,575 moves completed with zero mistakes.

Long runs usually fail because tiny per-step errors compound quickly. The authors split the task so that each agent makes exactly one simple move using only the state it needs.
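A quick worked calculation shows why, assuming each step fails independently with the same probability: if one step succeeds with probability p, a run of s steps succeeds with probability p^s.

```latex
P(\text{run succeeds}) = p^{s}, \qquad s = 2^{20} - 1 = 1{,}048{,}575

p = 0.999:\quad p^{s} = e^{s \ln p} \approx e^{-1049} \approx 0

p^{s} \ge 0.5 \;\Longrightarrow\; 1 - p \lesssim \frac{\ln 2}{s} \approx 6.6 \times 10^{-7}
```

Even 99.9% per-step accuracy is hopeless at this scale, which is why correcting errors at every step matters more than the raw accuracy of any single call.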

A short context keeps the model focused, so even small, cheap models work here. At every step, they sample several answers and accept the first one that leads all others by a set vote margin.

With a margin of 3, per-step accuracy rises, while total cost grows roughly as the number of steps times a small extra factor. They also discard outputs that are too long or misformatted, which reduces repeated mistakes across votes.
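The voting rule can be sketched as follows, in the spirit of the paper's first-to-ahead-by-k scheme. Here `sample` stands in for one LLM call, and the length limit, format check, and sampling budget are hypothetical parameters chosen for illustration.

```python
from collections import Counter
from typing import Callable, Optional

def first_to_ahead_by_k(
    sample: Callable[[], str],
    k: int = 3,
    max_len: int = 200,
    is_well_formed: Callable[[str], bool] = lambda s: bool(s.strip()),
    max_samples: int = 100,
) -> Optional[str]:
    # Draw answers until one leads every other answer by k votes.
    votes: Counter = Counter()
    for _ in range(max_samples):
        answer = sample()
        if len(answer) > max_len or not is_well_formed(answer):
            continue  # red flag: drop the sample without counting a vote
        votes[answer] += 1
        ranked = votes.most_common(2)
        lead_votes = ranked[0][1]
        runner_up_votes = ranked[1][1] if len(ranked) > 1 else 0
        if lead_votes - runner_up_votes >= k:
            return ranked[0][0]
    return None  # no answer pulled ahead within the sampling budget
```

Discarding red-flagged samples instead of counting them keeps one malformed, rambling output from seeding a cluster of similar wrong votes.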

On Towers of Hanoi with 20 disks, the system completes the full run without a single wrong move. The main idea is reliability through process: split the work and correct errors locally instead of chasing bigger models.
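For reference, the task itself has a classic recursive solution, and 2^20 - 1 = 1,048,575 moves is exactly the optimal length for 20 disks. The sketch below is that textbook solver plus a checker that replays the moves and rejects any illegal one; it is not the paper's LLM pipeline, just a mechanical picture of what "without a wrong move" means.

```python
from typing import Iterator

def hanoi_moves(n: int, src: str = "A", aux: str = "B", dst: str = "C") -> Iterator[tuple[int, str, str]]:
    # Optimal solution: move n-1 disks out of the way, move the largest, then restack.
    if n == 0:
        return
    yield from hanoi_moves(n - 1, src, dst, aux)
    yield (n, src, dst)
    yield from hanoi_moves(n - 1, aux, src, dst)

def is_legal_solution(n: int, moves: list[tuple[int, str, str]]) -> bool:
    # Replay moves on three pegs; each peg list is ordered bottom to top.
    pegs = {"A": list(range(n, 0, -1)), "B": [], "C": []}
    for disk, src, dst in moves:
        if not pegs[src] or pegs[src][-1] != disk:
            return False  # the named disk is not on top of the source peg
        if pegs[dst] and pegs[dst][-1] < disk:
            return False  # a larger disk cannot go on a smaller one
        pegs[dst].append(pegs[src].pop())
    return pegs["C"] == list(range(n, 0, -1))
```

In the paper's setup, each single move is delegated to its own agent, so a checker like this is the ground truth that a million-step run has to match move for move.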

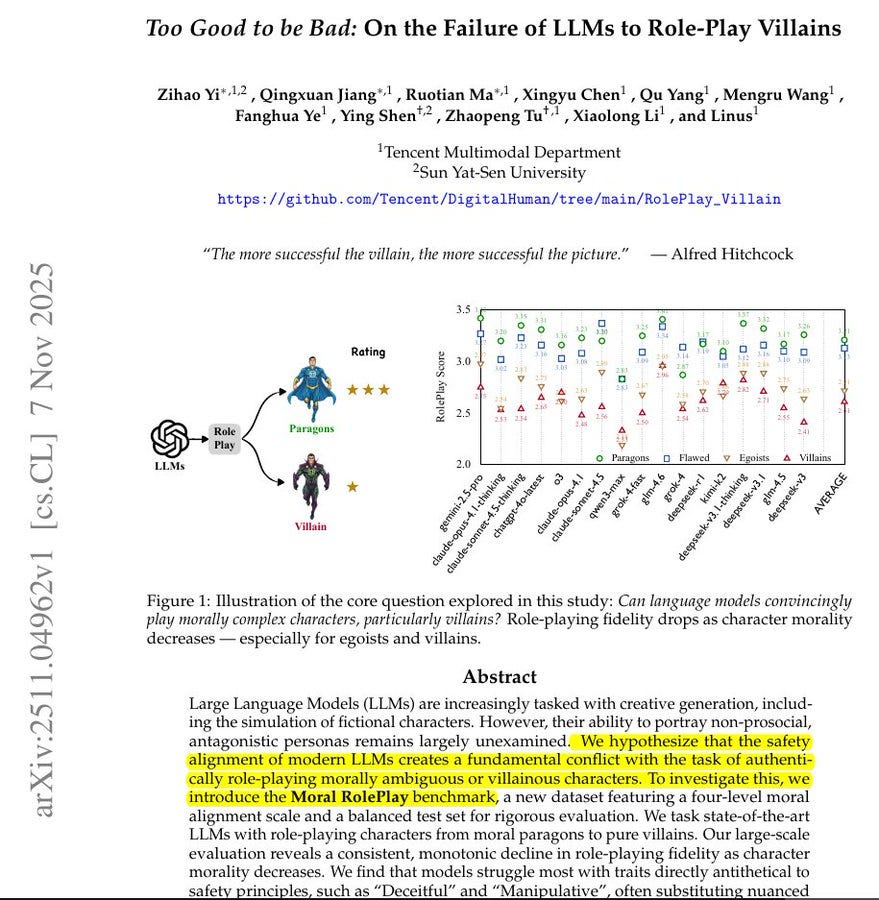

🗞️ Too Good to be Bad: On the Failure of LLMs to Role-Play Villains

The paper shows that safety-aligned LLMs fail to convincingly role-play villains and self-serving characters. Safety training teaches models to be helpful and honest, which suppresses traits like lying or manipulation.

It demonstrates a real tension between alignment and faithful character simulation, and warns that tools for writing or games may feel off when characters require dark motives.

The authors build Moral RolePlay, a benchmark with 4 moral levels and 800 characters, to measure acting quality. They prompt models to act out scene setups and judge how closely the responses match each role.

Performance drops as roles get less moral, with the sharpest fall when moving from flawed good to egoist. Models often swap planned scheming for simple anger, which breaks character believability.

High general chatbot scores do not predict good villain acting, and stronger alignment often makes it worse. The study concludes that current safety methods trade away creative fidelity in fiction that needs non-prosocial behavior.

🗞️ Grounding Computer Use Agents on Human Demonstrations

The paper shows how to train computer-use agents that click exactly the right thing on screen, by grounding plain-language instructions to the correct on-screen element.

These agents read a short instruction and must pick the one correct button, icon, or field.

This mapping from words to the right spot, called grounding, is hard when screens are crowded.

The authors record experts using many desktop apps and annotate millions of elements with bounding boxes and short text labels.

From those labels they build training instructions that name the element, describe its job, or use nearby references.

They fine-tune GROUNDNEXT models so the output is an exact click point, then apply reinforcement learning that rewards clicks closer to the target.
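A distance-shaped click reward might look like the sketch below, assuming the target is an axis-aligned bounding box in pixel coordinates. The exponential decay and the `scale` parameter are our illustrative choices; the paper's actual reward design may differ.

```python
import math

def click_reward(x: float, y: float, box: tuple[float, float, float, float], scale: float = 0.1) -> float:
    # box = (x0, y0, x1, y1); a click anywhere inside earns the full reward.
    x0, y0, x1, y1 = box
    dx = max(x0 - x, 0.0, x - x1)  # horizontal distance to the box (0 if inside)
    dy = max(y0 - y, 0.0, y - y1)  # vertical distance to the box (0 if inside)
    if dx == 0.0 and dy == 0.0:
        return 1.0
    # Normalize by the box diagonal so small and large targets are treated alike.
    diag = max(math.hypot(x1 - x0, y1 - y0), 1e-9)
    return math.exp(-math.hypot(dx, dy) / diag / scale)
```

A graded reward like this gives the policy a learning signal even when a click misses, unlike a pure hit-or-miss reward.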

Across several benchmarks, these models beat larger systems while using much less training data.

🗞️ HaluMem: Evaluating Hallucinations in Memory Systems of Agents

The paper introduces HaluMem, a benchmark that pinpoints where AI memory systems hallucinate, and shows that errors accumulate during extraction and updates. Its test contexts extend beyond 1M tokens.

HaluMem tests memory step by step instead of only final answers.

It measures 3 stages: extraction, updating, and memory question answering.

Extraction checks whether real facts are saved without inventions, balancing coverage against accuracy.

Updating checks whether old facts are correctly changed and flags missed or wrong edits.

Question answering evaluates the pipeline using retrieved memories and marks answers as correct, fabricated, or incomplete.
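Stage-level scoring of this kind can be sketched with simple set and dictionary comparisons. This is a hypothetical illustration that uses exact string matching on memory facts; a real benchmark needs semantic matching, and these function names and label sets are ours.

```python
from dataclasses import dataclass

@dataclass
class ExtractionScore:
    recall: float     # coverage: share of gold memories that were extracted
    precision: float  # accuracy: share of extracted memories that are real, not invented

def score_extraction(gold: set[str], extracted: set[str]) -> ExtractionScore:
    hits = gold & extracted
    recall = len(hits) / len(gold) if gold else 1.0
    precision = len(hits) / len(extracted) if extracted else 1.0
    return ExtractionScore(recall, precision)

def score_updates(edits: dict[str, tuple[str, str]], memory: dict[str, str]) -> dict[str, int]:
    # edits maps a memory key to the (old_value, new_value) implied by the dialogue.
    counts = {"correct": 0, "missed": 0, "wrong": 0}
    for key, (old, new) in edits.items():
        current = memory.get(key)
        if current == new:
            counts["correct"] += 1
        elif current in (old, None):
            counts["missed"] += 1  # the update never landed
        else:
            counts["wrong"] += 1  # memory now holds some other value
    return counts

def qa_breakdown(labels: list[str]) -> dict[str, float]:
    # Each answer carries one label: correct, fabricated, or incomplete.
    return {name: labels.count(name) / len(labels) for name in ("correct", "fabricated", "incomplete")}
```

Scoring each stage separately is what lets an evaluation say whether a wrong final answer started as a bad extraction or a botched update.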

The dataset contains long, user-centered dialogues with labeled memories and focused questions for stage-level checks.

Across the memory systems tested, weak extraction cascades into bad updates and wrong answers in long contexts, with accuracy often below 55%.

The fix is tighter memory operations, transparent updates, and stricter storage and retrieval.

🗞️ IterResearch: Rethinking Long-Horizon Agents via Markovian State Reconstruction

This paper shows a way for agents to stay sharp on very long tasks by rebuilding a small workspace every step.

It scales to 2,048 interaction steps, and as the step budget grows, accuracy rises from 3.5% to 42.5%.

Most agents shove everything into a single growing context, which clogs the model and locks in early mistakes.

This method keeps only the question, a short evolving report, and the last tool result, so thinking space stays clear.

The report serves as the memory: it compresses facts, filters noise, and drops dead ends.

Because the agent reconstructs state each round, later steps do not inherit junk from earlier steps.
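The core loop can be sketched as below, where the agent only ever sees a three-part workspace. `policy` and `tool` are hypothetical stand-ins for the LLM and its search or browse tool, and the field names are our own; this illustrates the idea, not the paper's implementation.

```python
from dataclasses import dataclass
from typing import Callable, Optional

@dataclass(frozen=True)
class Workspace:
    question: str     # the original task, kept verbatim every round
    report: str       # evolving summary that acts as the agent's memory
    last_result: str  # only the most recent tool output

@dataclass(frozen=True)
class Decision:
    new_report: str           # the policy's rewritten report
    tool_call: Optional[str]  # None means "stop and answer"
    answer: Optional[str] = None

def run_agent(
    question: str,
    policy: Callable[[Workspace], Decision],
    tool: Callable[[str], str],
    max_rounds: int = 2048,
) -> Optional[str]:
    ws = Workspace(question, report="", last_result="")
    for _ in range(max_rounds):
        decision = policy(ws)  # the policy sees only the small workspace
        if decision.tool_call is None:
            return decision.answer
        result = tool(decision.tool_call)
        # Rebuild the workspace from scratch: older tool outputs and reasoning
        # are dropped, so only the compressed report carries history forward.
        ws = Workspace(question, decision.new_report, result)
    return None  # step budget exhausted without an answer
```

Because each round's input is bounded by the report size rather than by the number of past rounds, the context stays small however long the run gets.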

Training uses Efficiency-Aware Policy Optimization, which rewards solving tasks in fewer steps and stabilizes learning when trajectories have different lengths.
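One simple way to reward solving in fewer steps is to discount the final reward geometrically back to each round, so a shorter successful trajectory earns more at any given round. This is a sketch under that assumption, with a hypothetical `gamma`; it does not reproduce the paper's full objective or its handling of trajectories with different lengths.

```python
def efficiency_rewards(correct: bool, num_rounds: int, gamma: float = 0.99) -> list[float]:
    # Terminal reward: 1 for a correct final answer, 0 otherwise.
    final = 1.0 if correct else 0.0
    # Round t (1-based) receives gamma ** (num_rounds - t) * final, so the last
    # round gets the full reward and earlier rounds get discounted credit.
    return [final * gamma ** (num_rounds - t) for t in range(1, num_rounds + 1)]
```

Under this scheme, the first round of a correct 3-round trajectory earns more than the first round of a correct 10-round one, which nudges the policy toward shorter paths.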

It also works as a prompting recipe, giving even strong models steadier gains on long browsing tasks.

Across tough benchmarks it beats open agents by a clear margin and closes a lot of the gap to commercial systems.

The big idea is selective remembering plus regular synthesis, not raw accumulation.

That is why the agent can go deeper without losing reasoning quality.

That’s a wrap for today, see you all tomorrow.
